Wolfram Language Paclet Repository

Community-contributed installable additions to the Wolfram Language

FubiniStudyMetricTensor[QuantumState[...], opts] | calculates the Fubini–Study metric tensor, as used in the VQE approach, from a QuantumState that depends on symbolic parameters |
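
For orientation, the textbook form of the Fubini–Study metric for a normalized parametrized state $|\psi(\theta)\rangle$ is sketched below. The symbols $\psi$, $\theta_i$, and $g_{ij}$ are notation introduced here, not taken from the paclet, and the paclet's own normalization may differ by a constant factor.

```latex
g_{ij}(\theta) = \operatorname{Re}\!\left[
  \langle \partial_i \psi(\theta) \,|\, \partial_j \psi(\theta) \rangle
  - \langle \partial_i \psi(\theta) \,|\, \psi(\theta) \rangle
    \langle \psi(\theta) \,|\, \partial_j \psi(\theta) \rangle
\right],
\qquad \partial_i \equiv \frac{\partial}{\partial \theta_i}
```

The result is a real symmetric matrix with one row and one column per parameter, which is the shape the "Matrix" and "SparseArray" properties return.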


1 | 0 |

0 | 2 Sin[2θ1] |

"Matrix" | obtain the corresponding Fubini–Study metric tensor as a list of lists. |

"MatrixForm" | obtain the corresponding Fubini–Study metric tensor in MatrixForm. |

"Parameters" | obtain the parameters with respect to which the state is differentiated. |

"SparseArray" | obtain the corresponding Fubini–Study metric tensor as a SparseArray. |


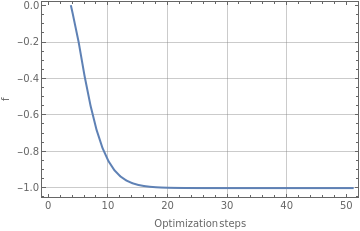

QuantumNaturalGradientDescent[f, metric, opts] | performs gradient descent on f, using the given metric tensor on the parameter space to precondition each step. |
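
As a rough sketch, the usual quantum natural gradient update rescales the ordinary gradient by the inverse (or pseudoinverse) of the metric. The step size $\eta$ and the iteration index $t$ are notation assumed here; how the paclet exposes them through opts is not stated in the source.

```latex
\theta^{(t+1)} = \theta^{(t)} - \eta \, g^{+}\!\big(\theta^{(t)}\big) \, \nabla f\big(\theta^{(t)}\big)
```

Here $g^{+}$ denotes the Moore–Penrose pseudoinverse of the metric tensor. When $g$ is the identity matrix, the rule reduces to ordinary gradient descent.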
