Details and Options

The function ResourceFunction["MutualInformation"][dist] expects a bivariate or multivariate distribution.

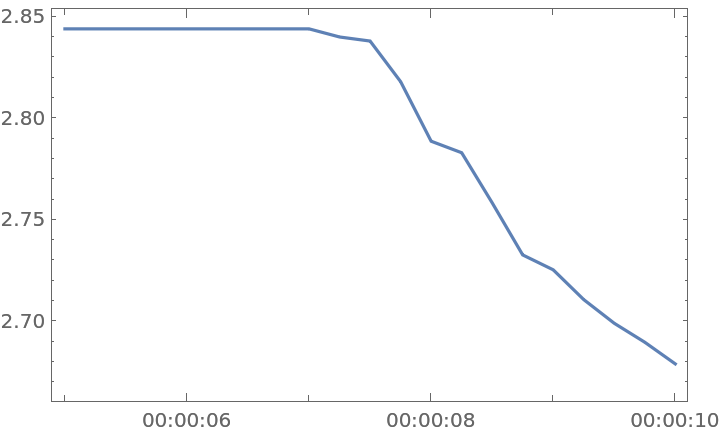

The estimator ResourceFunction["MutualInformation"][list,t] computes the mutual information between list[i] and list[i+s], with s=1,2,…,t.
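For reference, the quantity estimated at each lag is the standard mutual information between the series and a copy of itself shifted by s. The notation below is introduced here for illustration only ($X_i$ stands for list[i], and $p$ for the underlying joint and marginal densities); the source does not state which logarithm base the function uses.

$$
I_s = I(X_i; X_{i+s}) = \iint p(x_i, x_{i+s}) \, \log \frac{p(x_i, x_{i+s})}{p(x_i)\, p(x_{i+s})} \, dx_i \, dx_{i+s}, \qquad s = 1, 2, \dots, t.
$$

For discrete data the integrals become sums over the observed values.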

The function ResourceFunction["MutualInformation"][list,___] uses parallel kernels by default. To force sequential execution, use the option setting "Parallelize"→False.

ResourceFunction["MutualInformation"][list,{t,0}] is equivalent to ResourceFunction["MutualInformation"][list,t].
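The calling forms for lists described above can be sketched as follows. The sample data (a Gaussian random walk) and the variable names are hypothetical, and the sketch assumes that a plain list of real numbers is an acceptable input.

```wolfram
(* Hypothetical sample data: a 500-step Gaussian random walk *)
data = Accumulate[RandomVariate[NormalDistribution[], 500]];

(* Lags 1 through 10, using parallel kernels (the documented default) *)
miParallel = ResourceFunction["MutualInformation"][data, 10];

(* Same call, forced to run sequentially *)
miSequential = ResourceFunction["MutualInformation"][data, 10, "Parallelize" -> False];

(* Documented as equivalent to the two-argument form with t = 10 *)
miRange = ResourceFunction["MutualInformation"][data, {10, 0}];
```

Sequential execution may be preferable for short lists, where launching parallel kernels can cost more time than the computation itself.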

In addition to the options for KullbackLeiblerDivergence, the function ResourceFunction["MutualInformation"], when used on distributions, accepts the following options:

| Option | Default | Description |
| --- | --- | --- |
| "CopulaKernel" | "Product" | copula kernel used to compute the joint distribution from the provided marginals |
| "Marginals" | Automatic | how to extract the marginals from the joint distribution |

The option setting "Marginals"→Automatic splits the joint space in half.

Any kernel supported by CopulaDistribution can be provided, though some copulas are hard to compute exactly. Use the option Method→NExpectation to compute a numerical approximation instead, which is usually much faster.
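A minimal sketch of the distribution options follows. The input distribution and the variable names are hypothetical, and the form of the "CopulaKernel" value is an assumption: it mirrors the kernel specifications accepted by CopulaDistribution, which is how the source describes the option.

```wolfram
(* Hypothetical input: a bivariate normal distribution with correlation 0.5 *)
dist = BinormalDistribution[0.5];

(* Exact evaluation with the default "Product" kernel *)
miExact = ResourceFunction["MutualInformation"][dist];

(* Numerical approximation through NExpectation *)
miNumeric = ResourceFunction["MutualInformation"][dist, Method -> NExpectation];

(* Assumed kernel syntax, borrowed from CopulaDistribution: a Frank copula with parameter 2 *)
miFrank = ResourceFunction["MutualInformation"][dist,
   "CopulaKernel" -> {"Frank", 2}, Method -> NExpectation];
```

Comparing miExact with miNumeric on a simple distribution like this one is a quick way to check the approximation before switching to kernels that are too slow to evaluate exactly.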

When used on lists of samples, the function ResourceFunction["MutualInformation"] accepts the following options:

| Option | Default | Description |
| --- | --- | --- |
| DistanceFunction | ChessboardDistance | distance used to compute the estimator |
| "Errors" | False | whether to compute the error on the estimator |
| "KNeighbour" | 4 | kth-nearest neighbor parameter used by the estimator on lists of samples |
| "Parallelize" | True | whether to use parallel computation |

ResourceFunction["MutualInformation"][list,___] uses an estimator that relies on a kth-nearest neighbors algorithm (see Author Notes). The default value of k is 4; to set a different value, use the option "KNeighbour".
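As an illustration of the sample options, the sketch below changes k and requests error estimates. The data and variable names are hypothetical, and the structure of the result returned with "Errors"→True is not described in the source, so it is not shown here.

```wolfram
(* Hypothetical sample data: 1000 draws from a standard normal distribution *)
data = RandomVariate[NormalDistribution[], 1000];

(* Estimator with the default k = 4, for lags 1 through 5 *)
miDefault = ResourceFunction["MutualInformation"][data, 5];

(* Same lags with k = 6 nearest neighbors *)
miK6 = ResourceFunction["MutualInformation"][data, 5, "KNeighbour" -> 6];

(* Also request the error on the estimator *)
miWithErrors = ResourceFunction["MutualInformation"][data, 5,
   "KNeighbour" -> 6, "Errors" -> True];
```

For nearest-neighbor estimators in general, a larger k tends to reduce statistical fluctuations at the cost of some bias, so comparing a few values of k on the same data is a reasonable sanity check.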

The estimator performs poorly on very skewed distributions. In such cases, rescale the data with Standardize to get better results.
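The rescaling step can be sketched as follows. The log-normal sample is a hypothetical stand-in for skewed data, and the variable names are illustrative.

```wolfram
(* Hypothetical skewed sample: 1000 draws from a log-normal distribution *)
skewed = RandomVariate[LogNormalDistribution[0, 1.5], 1000];

(* Shift to zero mean and rescale to unit sample variance *)
rescaled = Standardize[skewed];

(* Estimate the mutual information on the rescaled data for lags 1 through 5 *)
mi = ResourceFunction["MutualInformation"][rescaled, 5];
```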

![v1 = VideoExtractFrames[Video["ExampleData/Caminandes.mp4"], Quantity[Range[50, 100], "Frames"]]; v2 = VideoExtractFrames[Video["ExampleData/Caminandes.mp4"], Quantity[Range[100, 150], "Frames"]];](https://www.wolframcloud.com/obj/resourcesystem/images/abb/abbf9979-2288-4b7f-9099-7992f54b85b8/30a28df007583255.png)

![timeseries = TimeSeries[ResourceFunction["MutualInformation"][videoframes, 20, DistanceFunction -> ImageDistance], {5, 10}];](https://www.wolframcloud.com/obj/resourcesystem/images/abb/abbf9979-2288-4b7f-9099-7992f54b85b8/27f045dfb81612a8.png)