Wolfram Language Paclet Repository

Community-contributed installable additions to the Wolfram Language

This paclet brings the XGBoost algorithm to the Wolfram Language

Contributed by: Mike Yeh

In this paclet, we provide Wolfram Language functions that implement XGBoost Python functions such as xgb.DMatrix(), xgb.train(), and predict(). So far, XgbTrain[] implements xgb.train() and XgbModelPredict[] performs model prediction; more functions will be added later.

To install this paclet in your Wolfram Language environment, evaluate this code:

PacletInstall["MikeYeh/XGBPaclet"]

To load the code after installation, evaluate this code:

Needs["MikeYeh`XGBPaclet`"]

An XGBoost Python session is recommended before using XgbTrain[]. Install at least the minimum required Python packages before creating that session.

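Before starting the session, you may want to confirm from Python that the required packages can be imported. This is a minimal sketch. The package list (numpy, xgboost) is an assumption, because the exact minimum set is not reproduced here, and missing_packages is a hypothetical helper.

```python
import importlib.util

# Assumed minimum package set; adjust to the paclet's actual requirements.
REQUIRED = ["numpy", "xgboost"]


def missing_packages(names):
    """Return the top-level package names the import system cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(REQUIRED)
if missing:
    print("Install before creating the session:", ", ".join(missing))
else:
    print("All required packages are installed.")
```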

To create an XGBoost Python session:


Within the session, our functions automatically import xgboost and the other required packages.

Create a binary classification training set and validation set for the following examples:

binary`traindata = RandomReal[{-10, 10}, {100, 3}];
binary`testdata = RandomReal[{-10, 10}, {100, 3}];
binary`trainlabel = RandomChoice[{"a", "b"}, {100}];
binary`testlabel = RandomChoice[{"a", "b"}, {100}];
binary`trainset = {binary`traindata -> binary`trainlabel};
binary`testset = {binary`testdata -> binary`testlabel};
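The labels here are the strings "a" and "b". XGBoost's binary objectives expect numeric 0/1 labels, so string classes must be mapped to integers somewhere. This presumably happens inside the paclet; this page does not say how. Below is a minimal Python sketch of such a mapping and its inverse. The helpers encode_labels and decode_probs are hypothetical, and the "a" -> 0, "b" -> 1 order and the 0.5 threshold are assumptions.

```python
# Class order fixes the encoding: "a" -> 0, "b" -> 1 (an assumed convention).
CLASSES = ["a", "b"]
INDEX = {label: i for i, label in enumerate(CLASSES)}


def encode_labels(labels):
    """Map string class labels to the 0/1 integers XGBoost expects."""
    return [INDEX[label] for label in labels]


def decode_probs(probs, threshold=0.5):
    """Map predicted probabilities of class 1 back to string labels."""
    return [CLASSES[1] if p >= threshold else CLASSES[0] for p in probs]


print(encode_labels(["a", "b", "b"]))  # [0, 1, 1]
print(decode_probs([0.2, 0.8]))        # ['a', 'b']
```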

Use XgbTrain[] to train a model on binary`trainset in the XGBoost Python session and store the trained model in the output session:


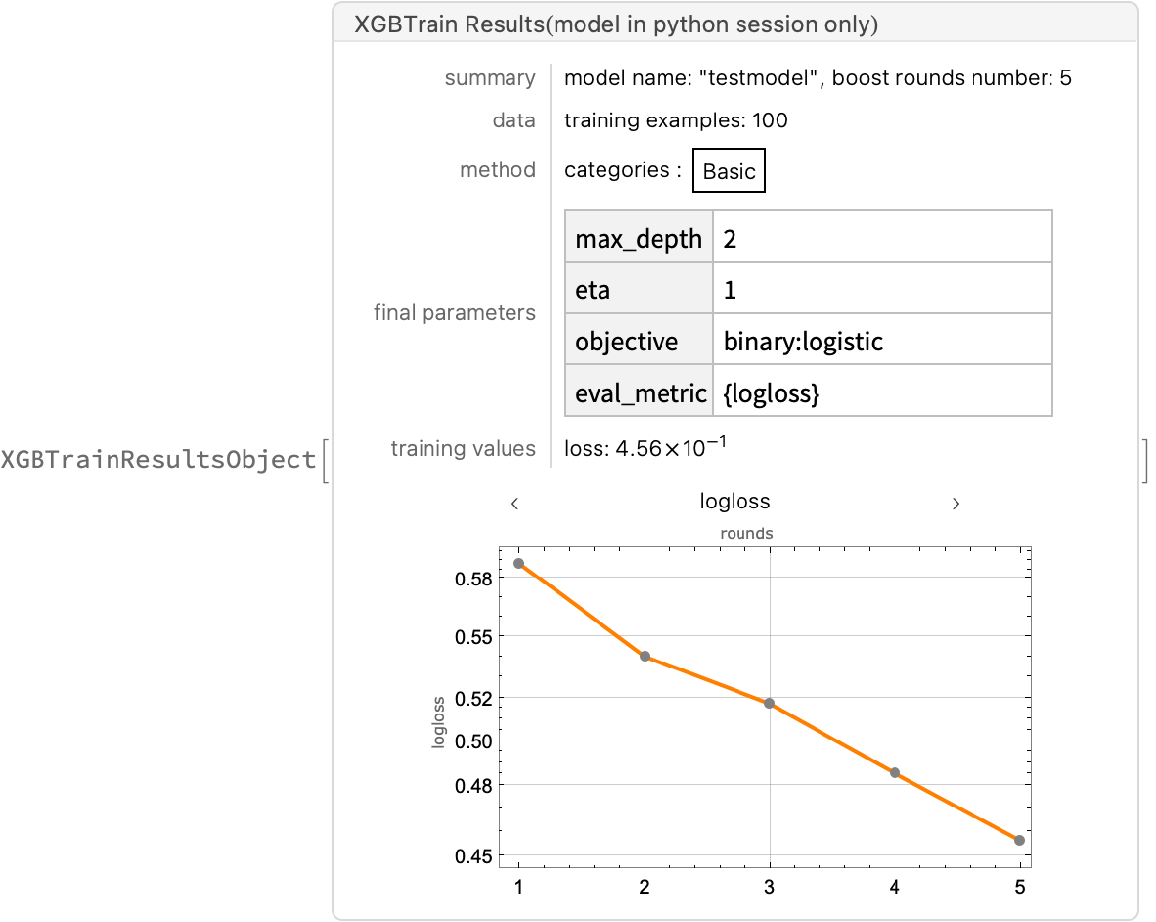

Store all information about the trained model in an XGBTrainResultsObject[]:


XgbMeasurement[] evaluates the model stored in the session and makes predictions on the given data:

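This page does not show what XgbMeasurement[] reports. As an illustration of one common way to evaluate a binary classifier, the sketch below thresholds predicted probabilities into labels and computes accuracy against the true labels. The metric choice, the 0.5 threshold, and the helper name accuracy are assumptions.

```python
def accuracy(true_labels, probs, classes=("a", "b"), threshold=0.5):
    """Fraction of rows whose thresholded prediction matches the true label.

    probs[i] is taken to be the predicted probability of classes[1].
    """
    if not true_labels or len(true_labels) != len(probs):
        raise ValueError("need equal-length, non-empty labels and predictions")
    predicted = [classes[1] if p >= threshold else classes[0] for p in probs]
    hits = sum(1 for t, p in zip(true_labels, predicted) if t == p)
    return hits / len(true_labels)


print(accuracy(["a", "b", "b", "a"], [0.1, 0.9, 0.4, 0.3]))  # 0.75
```

The example data assigns labels at random, independently of the features. Accuracy on the validation set should therefore be expected to land near 0.5.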

Set the number of XGBoost boosting rounds, "numBoostRound", to 2:


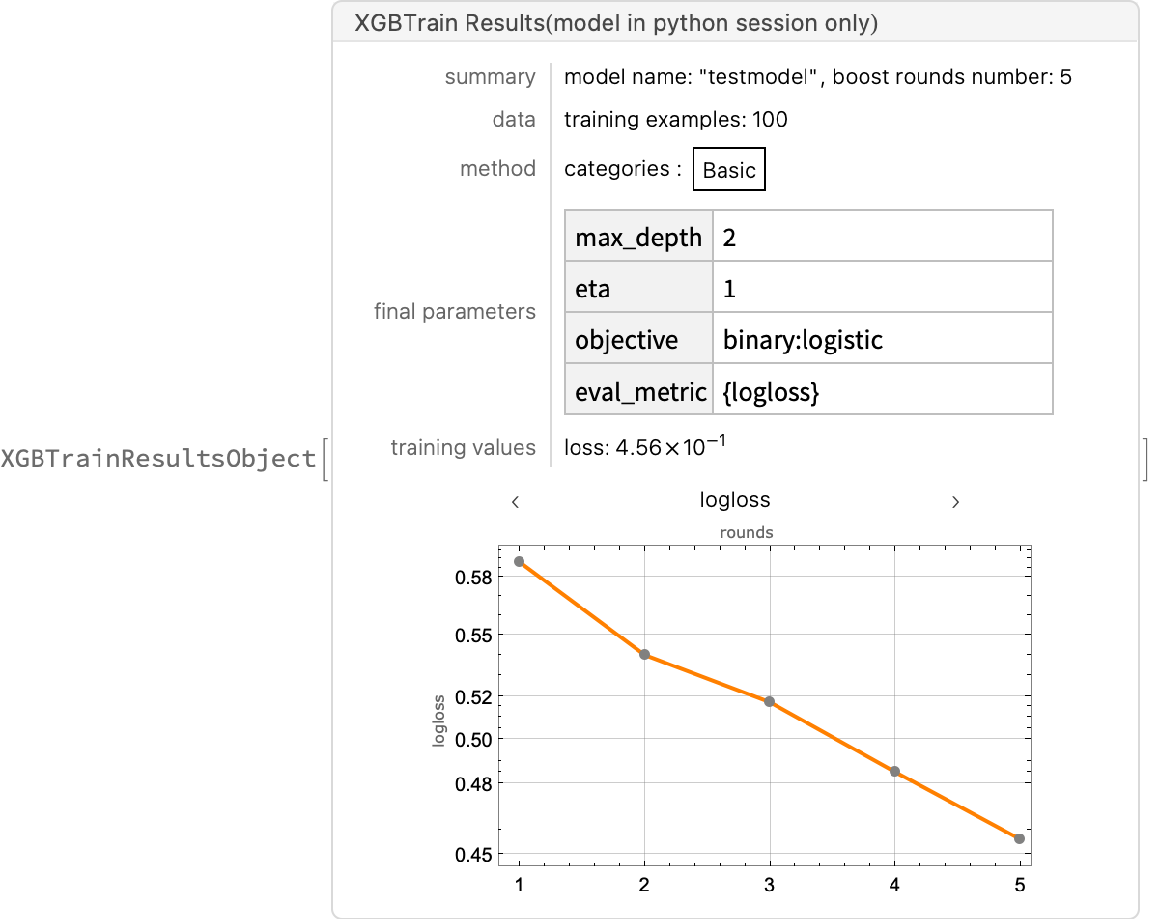

Calling XgbTrain[] with All as an input argument returns an XGBTrainResultsObject[], which stores all training information:


Wolfram Language Version 14.1