Basic Examples (5)

The first article ever on ArXiv:

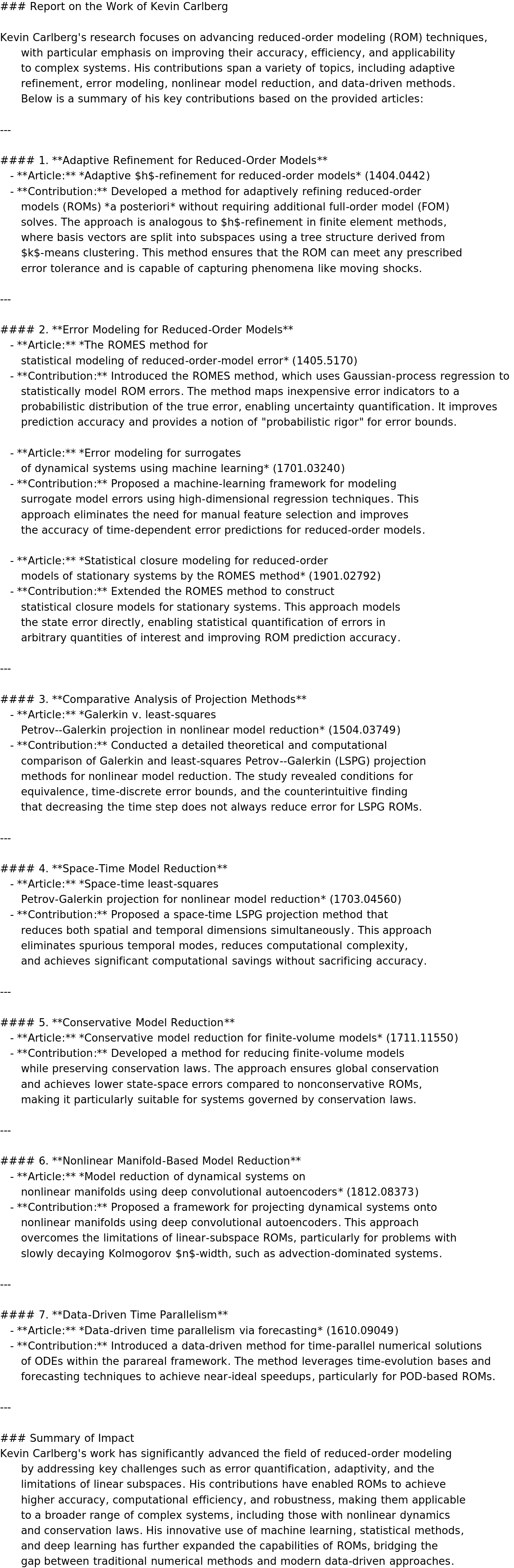

A DateListPlot showing the trends in the most popular title words in theoretical physics category ("hep-th", primary or cross-list):

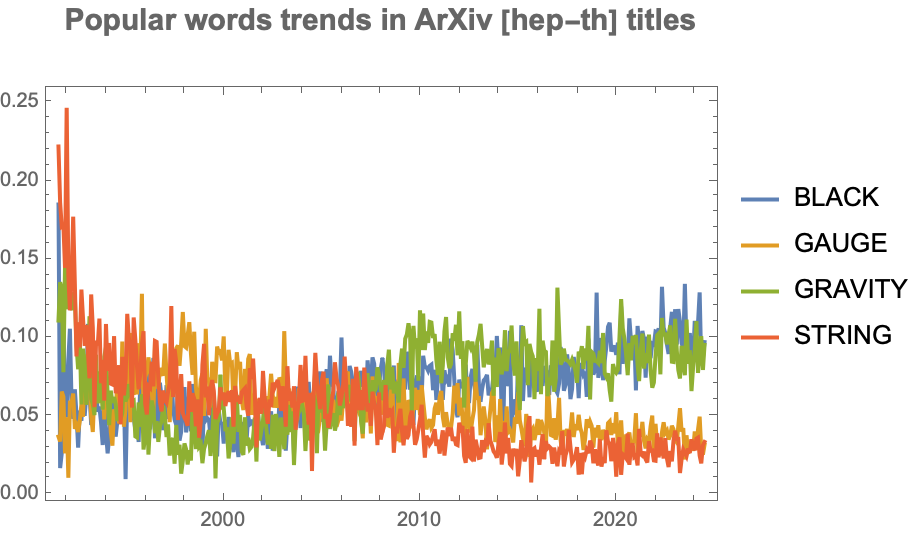

All the 50 most common 2-neighbour title words on the whole ArXiv, ever:

Authors with more than one possible name (and categories) are conveniently registered as "ArXivAuthor" entities. For example:

We can then also easily create an author citations graph, with the tooltip indicating the articles ids:

Scope (13)

The dimensions of the whole ArXiv main dataset (at the end of November 2024):

Let us create a super-database with all computer science "cs" type (primary or cross-list) categories:

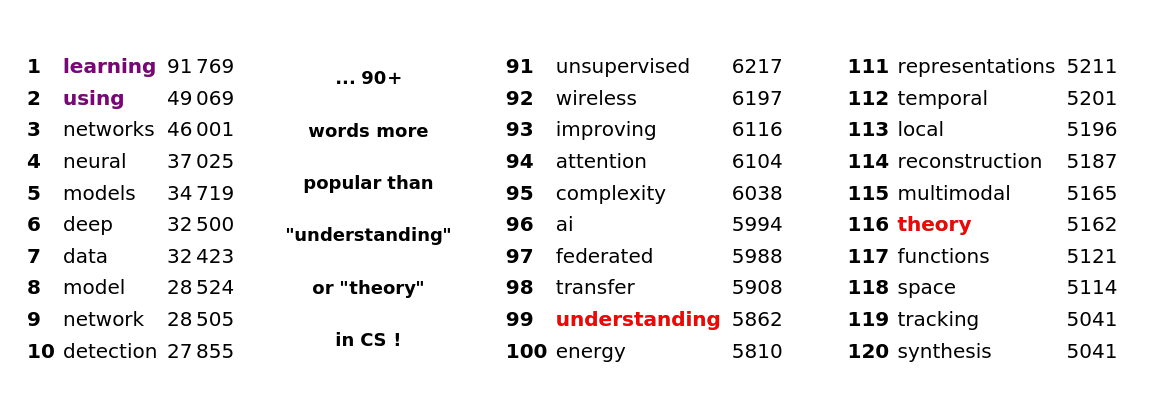

and then let us visualize the most and less frequent title words:

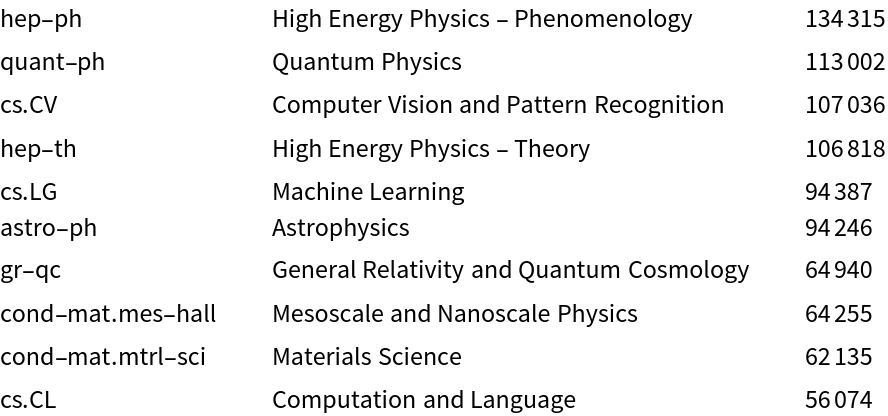

Let us calculate the 10 most frequent categories, with their meaning and number of articles each:

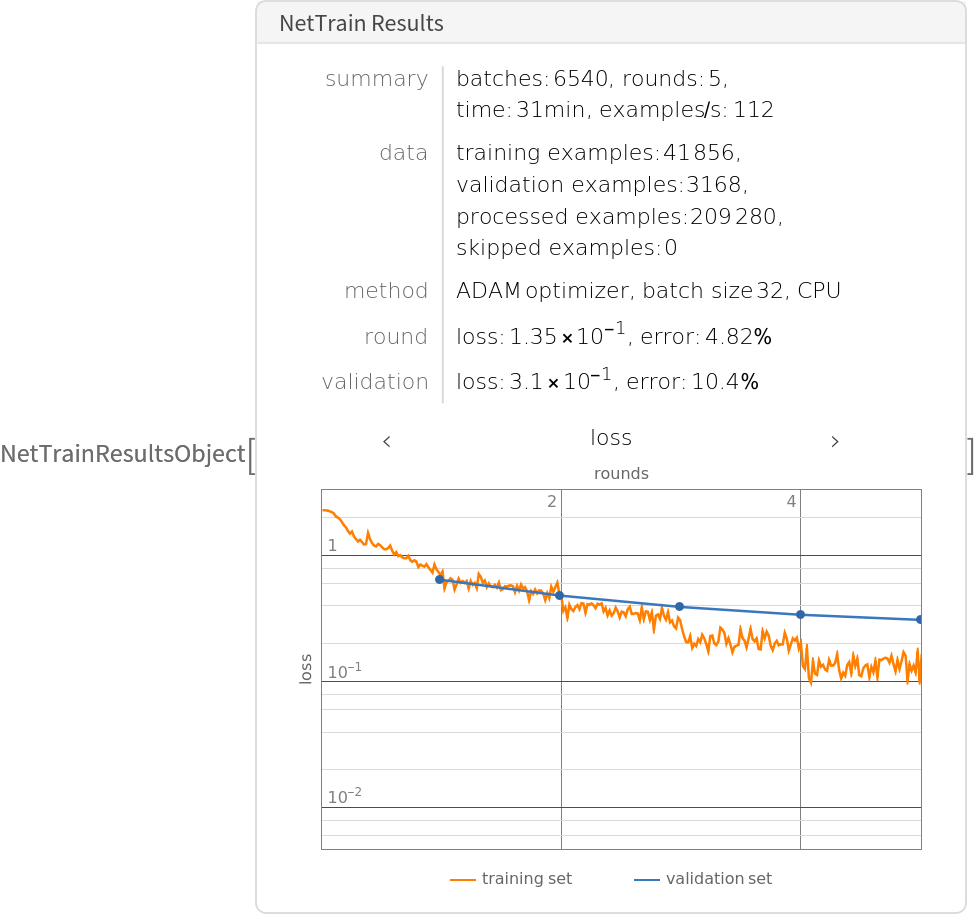

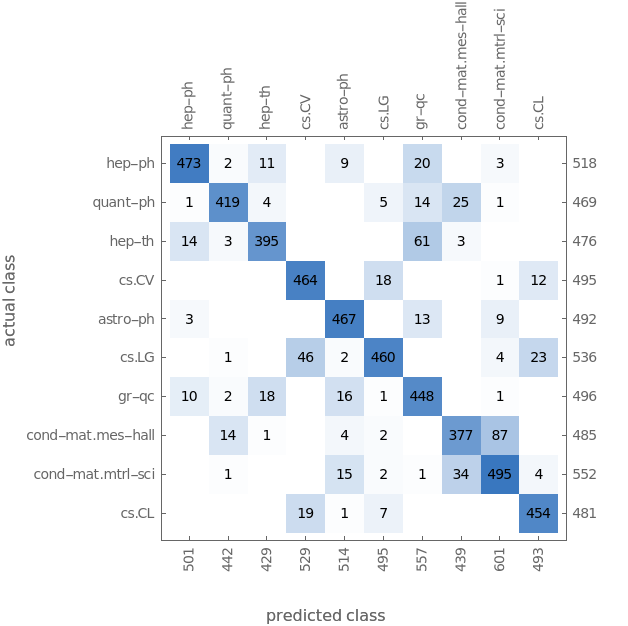

We can create train and test sets using only 5000={4500,500} titles and abstracts for each category:

we can train a NN to classify these categories, with layers' dimension 80 and dropout level 0.5:

Even with a basic 30 minutes training on laptop CPU, we obtain 89% accuracy:

and a rather clean confusion matrix:

We could even classify authors within the same category, with ArXivClassifyAuthorNet.

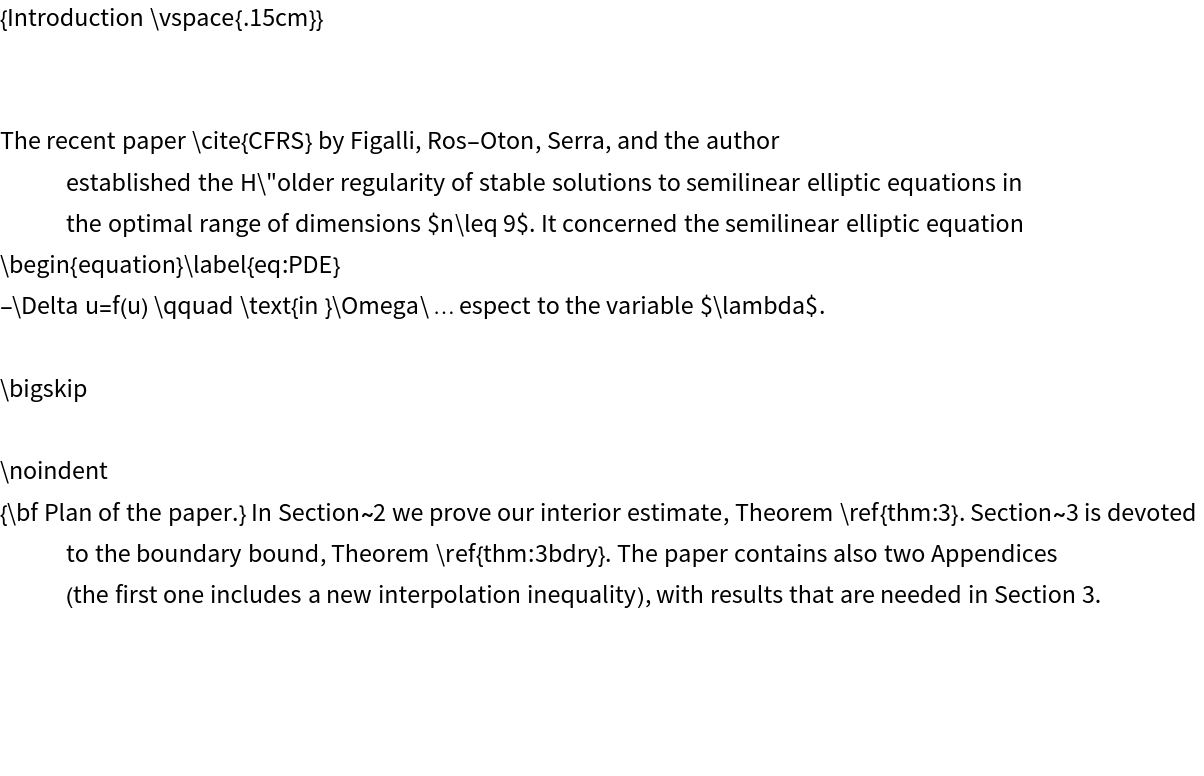

Extracting TEX introduction:

also TEX formulae:

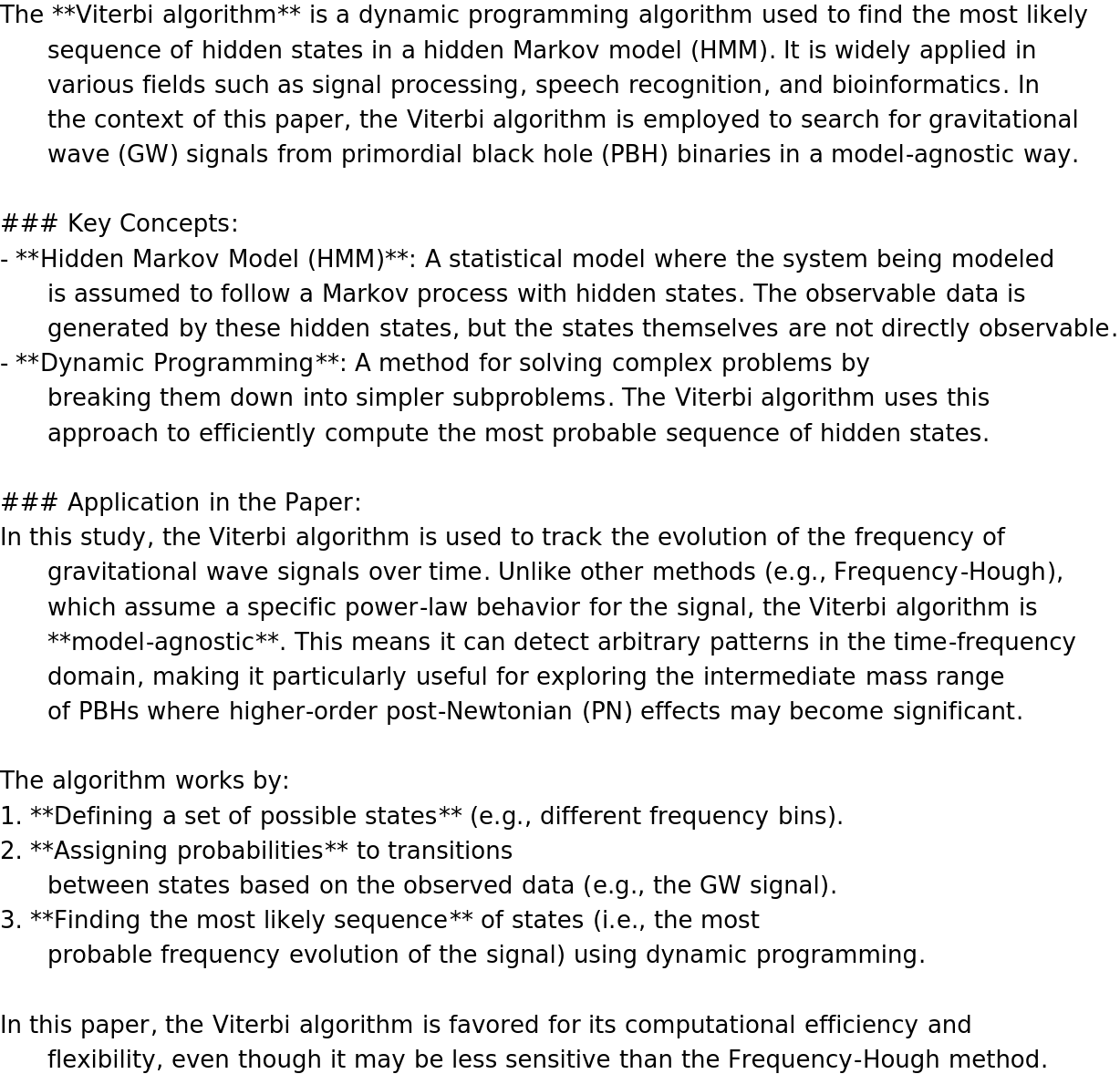

Explain a technical concept using an article introduction and LLMSynthesize:

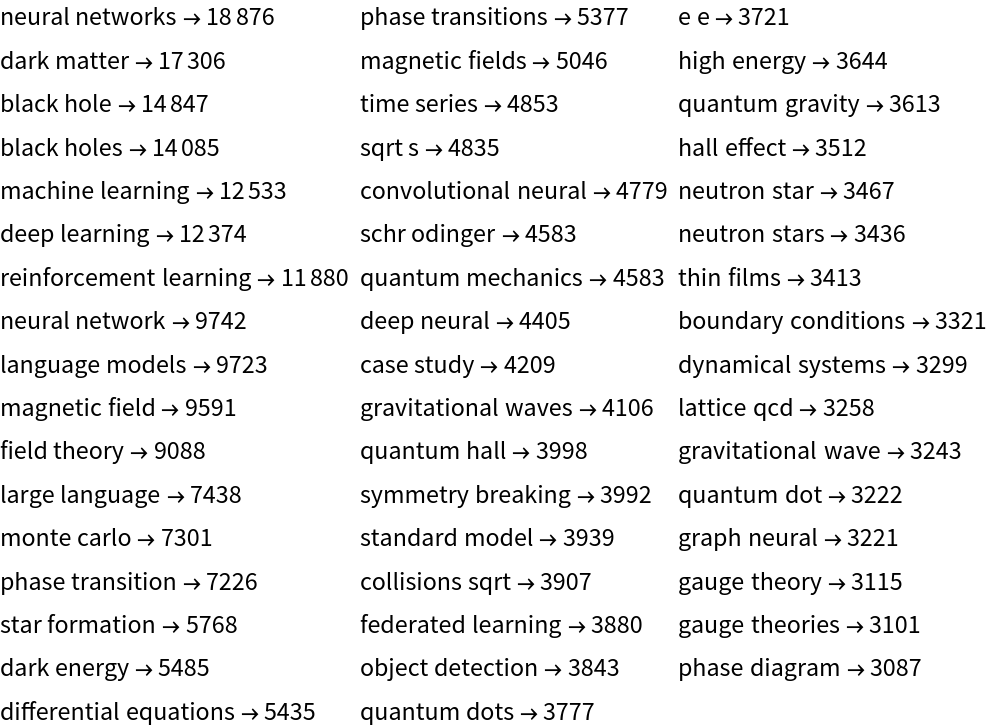

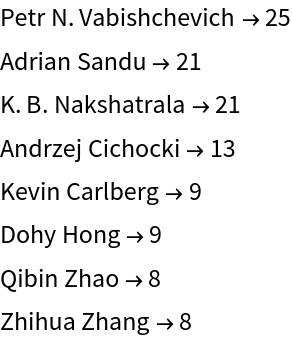

Let us visualize all authors with more than 7 papers, in primary category "cs.NA":

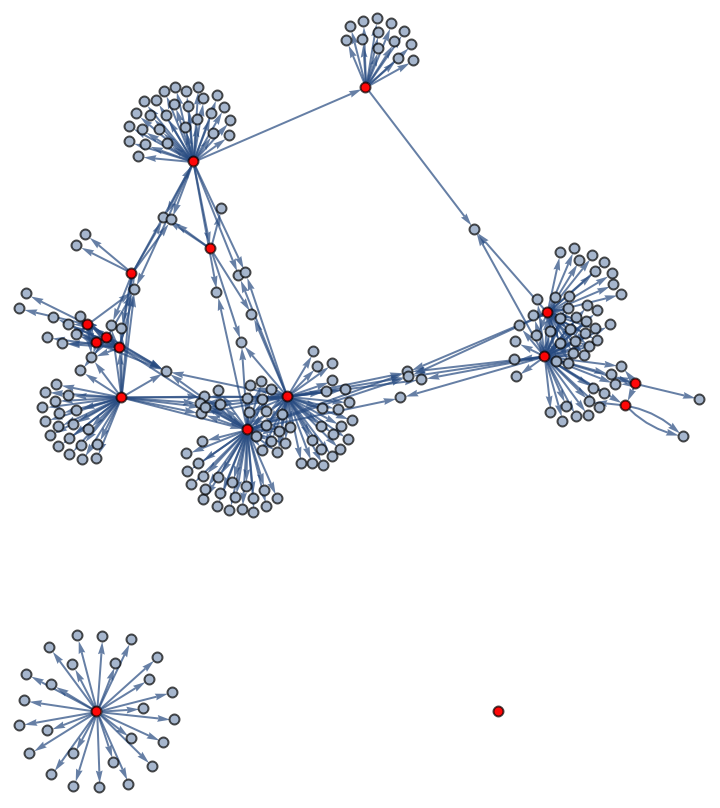

Let us pick a random author among them and use LLM functionality to explain his overall work:

![Block[{words = {"black", "gauge", "gravity", "string"}},

InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ArXivPlot", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"DanieleGregori/ArXivExplore\"", ",", "\"DanieleGregori`ArXivExplore`ArXivPlot\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivPlot"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivPlot"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]][words, {"hep-th", All}, PlotRange -> Full, PlotLegends -> words]]](https://www.wolframcloud.com/obj/resourcesystem/images/d64/d64a1e95-2d49-46a8-ab0e-5fc84166f916/73509104ef66f101.png)

![Block[{cat = {"cs", All}, tabs, colrules, tabskey, compl, cut = 160, res = 10},

colrules = {"learning" -> Style["learning", Purple, Bold], "using" -> Style["using", Purple, Bold], "theory" -> Style["theory", Red, Bold], "understanding" -> Style["understanding", Red, Bold]};

tabs = MapAt[Apply[Sequence, #] &,

MapIndexed[

Partition[

Riffle[Map[Style[#, Bold] &, Range[res*(First[#2] - 1) + 1, res*First[#2]]], #], 2] &, Partition[

Normal@InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ArXivTopTitles", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"DanieleGregori/ArXivExplore\"", ",", "\"DanieleGregori`ArXivExplore`ArXivTopTitles\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivTopTitles"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivTopTitles"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]][cat, cut], UpTo@res]], {All, All, 2}] /. colrules;

tabskey = Cases[tabs, _List?(MemberQ[#[[All, 2]], Alternatives["theory", "understanding"] /. colrules] &)];

compl = Text[Style[

"... " <> ToString[Round[First@tabskey[[1, 1, 1]] - 1, 10]] <> "+\n

words more\n

popular than\n

\"understanding\"\n

or \"theory\"\n

in CS !", Bold, 9, TextAlignment -> Center]];

GraphicsRow[Join[{TextGrid@tabs[[1]], compl}, TextGrid /@ tabskey], ImageSize -> Large]]](https://www.wolframcloud.com/obj/resourcesystem/images/d64/d64a1e95-2d49-46a8-ab0e-5fc84166f916/0383fd368c56a513.png)

![KeyValueMap[{#1, InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ArXivCategoriesLegend", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"DanieleGregori/ArXivExplore\"", ",", "\"DanieleGregori`ArXivExplore`ArXivCategoriesLegend\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivCategoriesLegend"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivCategoriesLegend"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]][#1], #2} &, InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ArXivTopCategories", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"DanieleGregori/ArXivExplore\"", ",", "\"DanieleGregori`ArXivExplore`ArXivTopCategories\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivTopCategories"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivTopCategories"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]][10]] // Normal // TableForm](https://www.wolframcloud.com/obj/resourcesystem/images/d64/d64a1e95-2d49-46a8-ab0e-5fc84166f916/5c126a830e2f56aa.png)

![InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ArXivExplainConcept", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"DanieleGregori/ArXivExplore\"", ",", "\"DanieleGregori`ArXivExplore`ArXivExplainConcept\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivExplainConcept"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivExplainConcept"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]]["Viterbi algorithm", "2401.02314", LLMEvaluator ->

<|"Prompts" -> "Keep the output contained and emphasize the relation to this paper"|>] // Text](https://www.wolframcloud.com/obj/resourcesystem/images/d64/d64a1e95-2d49-46a8-ab0e-5fc84166f916/16c4525faef79968.png)

![InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ArXivExplainAuthor", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"DanieleGregori/ArXivExplore\"", ",", "\"DanieleGregori`ArXivExplore`ArXivExplainAuthor\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivExplainAuthor"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["DanieleGregori/ArXivExplore", "DanieleGregori`ArXivExplore`ArXivExplainAuthor"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]]["Kevin Carlberg", "cs.NA", LLMEvaluator -> <|

"Prompts" -> "Keep the output contained"|>] // Text](https://www.wolframcloud.com/obj/resourcesystem/images/d64/d64a1e95-2d49-46a8-ab0e-5fc84166f916/5a3e4ab59ff93404.png)