Wolfram Function Repository

Instant-use add-on functions for the Wolfram Language

Function Repository Resource: RuleNetGraph

Create a NetGraph with a simplified rule-based interface

ResourceFunction["RuleNetGraph"][layer1→layer2→…→layern] creates a NetGraph object from the specified layers.

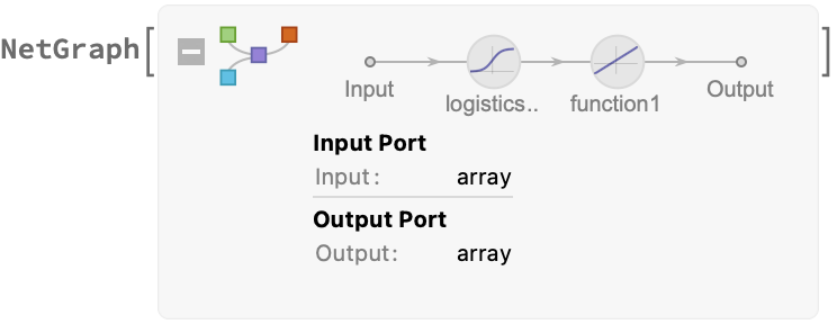

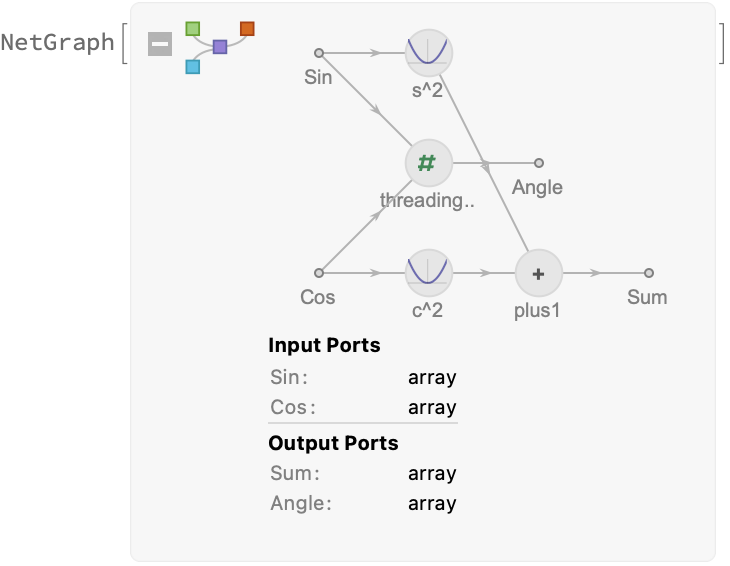

Build a simple NetGraph using a list of rules:

| In[1]:= |

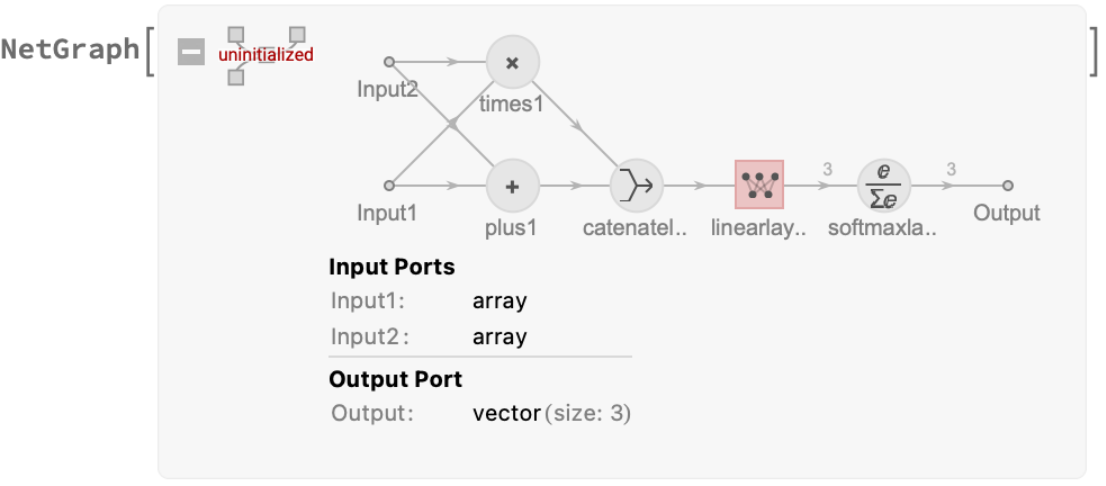
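As a rough illustration of the basic usage, a straight chain of layers can be written as one sequence of rules. This is a minimal sketch modeled on the other examples on this page, not the original input cell; the layer sizes are arbitrary illustrative choices.

```wolfram
(* Sketch: a straight-line network written as a chain of rules. *)
(* The sizes 16 and 3 are illustrative assumptions, not from the original page. *)
net = ResourceFunction["RuleNetGraph"][
  NetPort["Input"] -> LinearLayer[16] -> Ramp -> LinearLayer[3] -> SoftmaxLayer[]
]
```

Each `->` connects the output of the expression on its left to the input of the expression on its right, so the chain reads in the order the data flows.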

Use lists of NetPort objects and layers for more complicated networks:

| In[2]:= | ![ResourceFunction["RuleNetGraph"][{NetPort["Input1"], NetPort["Input2"]}
-> {Plus, Times} -> CatenateLayer[] -> LinearLayer[3] -> SoftmaxLayer[]]](https://www.wolframcloud.com/obj/resourcesystem/images/de6/de65c6dc-c7e5-478e-9c81-48e04a0072aa/3441e3e413ebea60.png) |

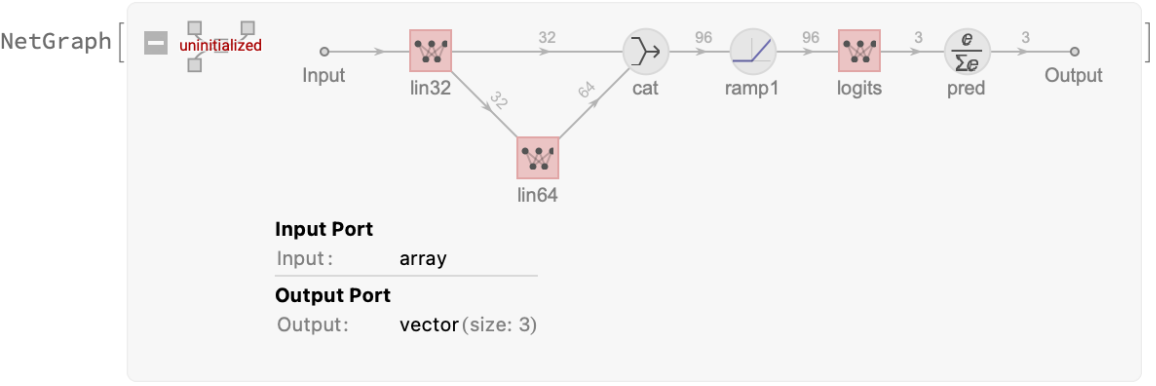

Use string subscripts to manually specify layer names:

| In[3]:= | ![ResourceFunction["RuleNetGraph"][{
Subscript[LinearLayer[32], "lin32"] ->
Subscript[LinearLayer[64], "lin64"],
"lin32"
} ->
Subscript[CatenateLayer[], "cat"] -> Ramp ->
Subscript[LinearLayer[3], "logits"] ->
Subscript[SoftmaxLayer[], "pred"] ]](https://www.wolframcloud.com/obj/resourcesystem/images/de6/de65c6dc-c7e5-478e-9c81-48e04a0072aa/459b0d35495969a7.png) |

| Out[3]= |  |

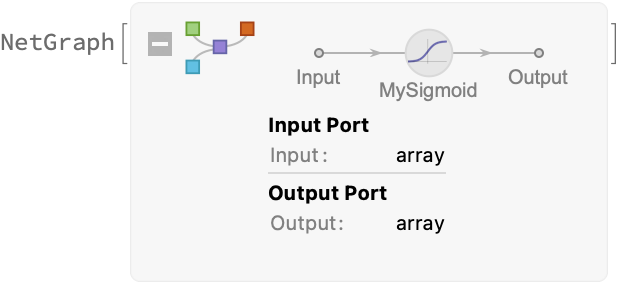
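A manually assigned name becomes the name of the layer in the resulting NetGraph, so it can be used to look the layer up afterwards. The following is a minimal sketch under that assumption; the layer sizes and the names "logits" and "pred" are illustrative, and `NetExtract` is the standard built-in for retrieving a named layer.

```wolfram
(* Sketch: name two layers with string subscripts, then retrieve one by name. *)
net = ResourceFunction["RuleNetGraph"][
  NetPort["Input"] ->
    Subscript[LinearLayer[3], "logits"] ->
    Subscript[SoftmaxLayer[], "pred"]
];

(* Look up the layer that was given the name "logits". *)
NetExtract[net, "logits"]
```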

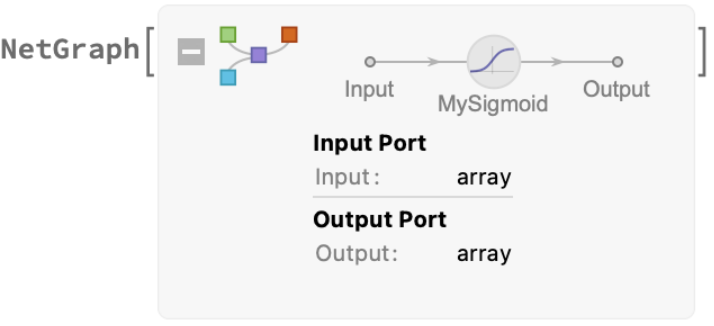

| In[4]:= |

| Out[4]= |  |

The above is equivalent to:

| In[5]:= | ![ResourceFunction["RuleNetGraph"][NetPort["Input"] ->
Subscript[ElementwiseLayer["Sigmoid"], "MySigmoid"]]](https://www.wolframcloud.com/obj/resourcesystem/images/de6/de65c6dc-c7e5-478e-9c81-48e04a0072aa/71bab812779c3d54.png) |

And is also equivalent to:

| In[6]:= | ![NetGraph[<|"MySigmoid" -> ElementwiseLayer["Sigmoid"]|>,
{NetPort["Input"] -> "MySigmoid"}]](https://www.wolframcloud.com/obj/resourcesystem/images/de6/de65c6dc-c7e5-478e-9c81-48e04a0072aa/6d2c92ab116339d4.png) |

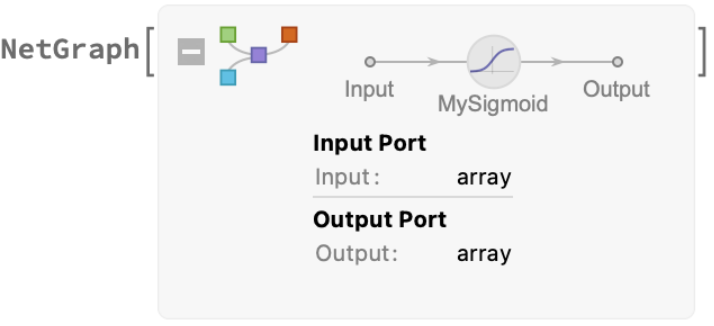
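One way to convince yourself of the equivalence is to build both forms and compare their outputs on the same input. This is only a sketch: the input size of 3 and the test vector are illustrative assumptions, added so that both networks can be evaluated.

```wolfram
(* Sketch: build the same one-layer network two ways and compare the results. *)
(* The "Input" -> 3 size and the sample vector are illustrative assumptions. *)
ruleNet = ResourceFunction["RuleNetGraph"][
  NetPort["Input"] -> Subscript[ElementwiseLayer["Sigmoid"], "MySigmoid"],
  "Input" -> 3
];

directNet = NetGraph[
  <|"MySigmoid" -> ElementwiseLayer["Sigmoid"]|>,
  {NetPort["Input"] -> "MySigmoid"},
  "Input" -> 3
];

(* Compare the two networks on the same sample input. *)
ruleNet[{-1., 0., 1.}] == directNet[{-1., 0., 1.}]
```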

A list of rules can be used to specify network connections in any order:

| In[7]:= | ![ResourceFunction["RuleNetGraph"][{
{"act1", "act2"} -> Times,
NetPort["Input1"] ->
Subscript[ElementwiseLayer["Sigmoid"], "act1"],
NetPort["Input1"] ->
Subscript[SoftmaxLayer[], "act2"]
}]](https://www.wolframcloud.com/obj/resourcesystem/images/de6/de65c6dc-c7e5-478e-9c81-48e04a0072aa/1e450ee31cf52fbd.png) |

| Out[7]= |  |

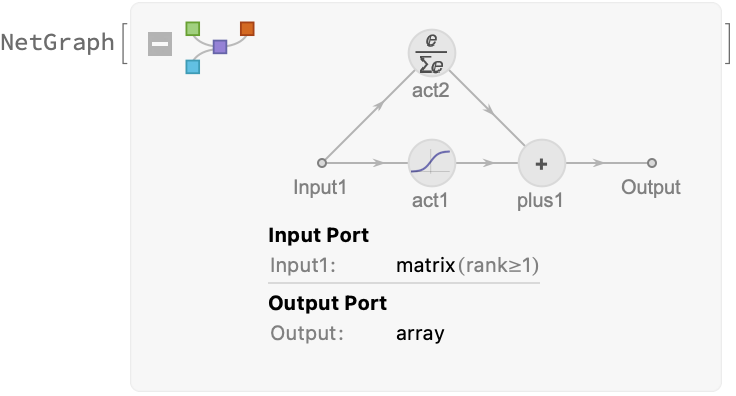

Networks can be specified with multiple inputs and outputs:

| In[8]:= | ![ResourceFunction["RuleNetGraph"][{
{NetPort["Sin"] -> Subscript[(#^2 &), "s^2"], NetPort["Cos"] -> Subscript[(#^2 &), "c^2"]} -> Plus -> NetPort["Sum"],
{NetPort["Cos"], NetPort["Sin"]} -> ThreadingLayer[(ArcTan[#cos, #sin] &)] -> NetPort["Angle"]
}]](https://www.wolframcloud.com/obj/resourcesystem/images/de6/de65c6dc-c7e5-478e-9c81-48e04a0072aa/1e372086c582e9e8.png) |

| Out[8]= |  |

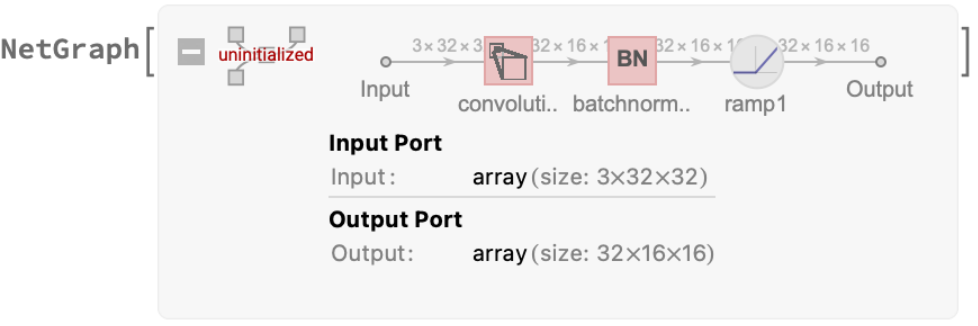

Additional arguments are passed through RuleNetGraph to NetGraph (for example, input port size):

| In[9]:= | ![ResourceFunction["RuleNetGraph"][NetPort["Input"] -> ConvolutionLayer[32, 3, "Stride" -> 2, "PaddingSize" -> 1]
-> BatchNormalizationLayer[]
-> Ramp, "Input" -> {3, 32, 32}]](https://www.wolframcloud.com/obj/resourcesystem/images/de6/de65c6dc-c7e5-478e-9c81-48e04a0072aa/034f8e51bb746faa.png) |

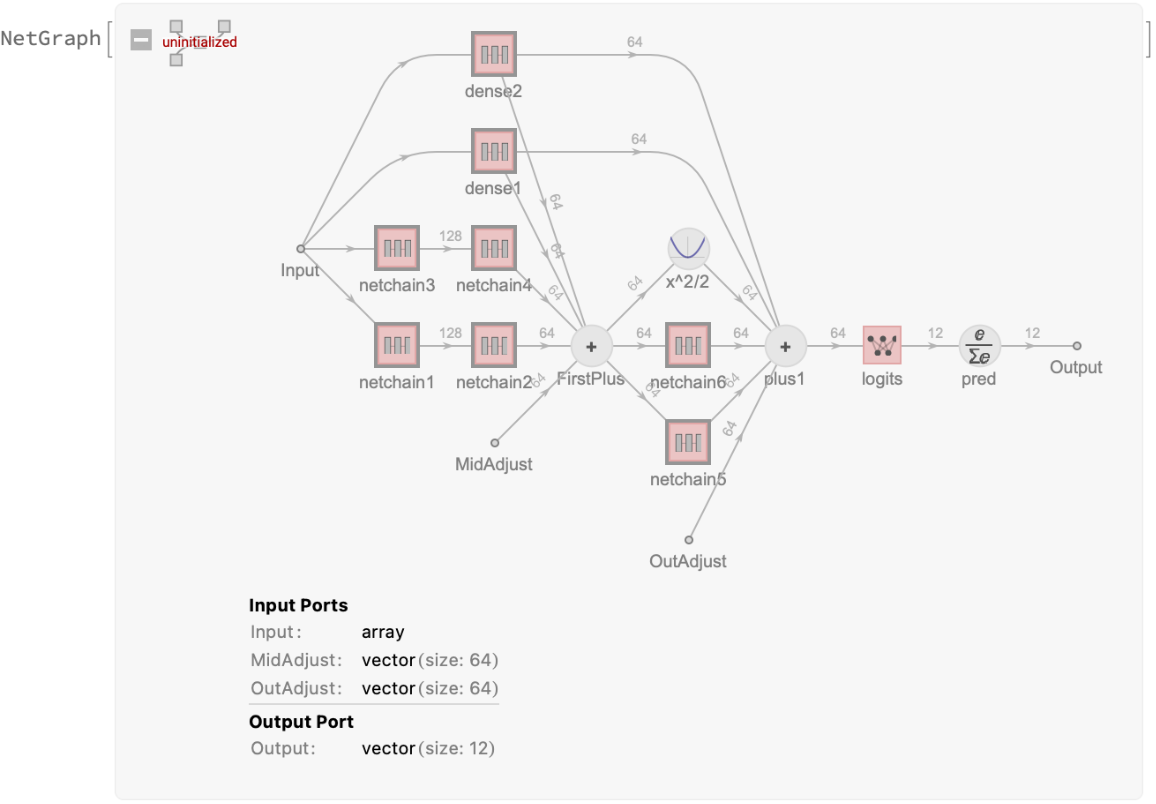
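To check that a passed-through port specification took effect, one option is to initialize the network and evaluate it on a random array of the declared size. This is a sketch, not part of the original example: the use of `NetInitialize` and `RandomReal` here is an addition for illustration.

```wolfram
(* Sketch: same network as above, with the input size passed through to NetGraph. *)
net = ResourceFunction["RuleNetGraph"][
  NetPort["Input"] ->
    ConvolutionLayer[32, 3, "Stride" -> 2, "PaddingSize" -> 1] ->
    BatchNormalizationLayer[] ->
    Ramp,
  "Input" -> {3, 32, 32}
];

(* Give the layers random weights, then feed in a random 3x32x32 array *)
(* and inspect the dimensions of the result. *)
trained = NetInitialize[net];
Dimensions[trained[RandomReal[1, {3, 32, 32}]]]
```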

RuleNetGraph can easily describe networks with complicated data flow:

| In[10]:= | ![denseBnRelu[n_Integer] := NetChain[{
LinearLayer@n, BatchNormalizationLayer[], Ramp}]
ResourceFunction["RuleNetGraph"][{{
{
Subscript[denseBnRelu[64], "dense1"],
Subscript[denseBnRelu[64], "dense2"],
denseBnRelu[128] -> denseBnRelu[64],
denseBnRelu[128] -> denseBnRelu[64],
NetPort["MidAdjust"]
} ->
Subscript[Plus, "FirstPlus"] -> {denseBnRelu[64], denseBnRelu[64]},
"dense1",
"dense2",
NetPort["OutAdjust"],
"FirstPlus" ->
Subscript[((#^2)/2 &), "x^2/2"]} -> Plus ->
Subscript[LinearLayer[12], "logits"] ->
Subscript[SoftmaxLayer[], "pred"]}]](https://www.wolframcloud.com/obj/resourcesystem/images/de6/de65c6dc-c7e5-478e-9c81-48e04a0072aa/514b0bd9e0875bc0.png) |

| Out[11]= |  |

Multiple layers cannot be declared with the same manually assigned name:

| In[12]:= |

| Out[12]= |

You cannot reuse layers in a way that causes the output of a layer to depend on itself:

| In[13]:= |

| Out[13]= |

Manual layer names must be specified with a string subscript; non-strings prompt a message:

| In[14]:= |

| Out[14]= |

| In[15]:= |

| Out[15]= |

All layers must be properly declared:

| In[16]:= |

| Out[16]= |

Calling the layer with zero arguments works:

| In[17]:= |

| Out[17]= |

The first argument of RuleNetGraph must contain at least one Rule (→):

| In[18]:= |

| Out[18]= |

| In[19]:= |

| Out[19]= |

You cannot use string subscripts on NetPort or any other parameter that is not a manually named layer:

| In[20]:= |

| Out[20]= |

You will get errors if you try to use a function that cannot be automatically converted to a neural network layer:

| In[21]:= |

| Out[21]= |

| In[22]:= |

| Out[22]= |

Be careful to properly group symbols. This gives an error:

| In[23]:= |

| Out[23]= |

Giving the pure function proper precedence with parentheses fixes it:

| In[24]:= |

| Out[24]= |
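The grouping issue comes from operator precedence: the `&` that ends a pure function binds more loosely than `->`, so an unparenthesized pure function in a rule chain absorbs the rules to its left. The sketch below only inspects how the two forms parse and builds no network; `a` and `b` are hypothetical placeholder symbols.

```wolfram
(* Sketch: compare how the two forms are parsed. *)
(* Hold prevents evaluation, so only the parse structure is displayed. *)

(* Without parentheses, & applies to everything to its left, including the rule. *)
FullForm[Hold[a -> #^2 & -> b]]

(* With parentheses, the pure function is a single element of the rule chain. *)
FullForm[Hold[a -> (#^2 &) -> b]]
```

Wrapping every pure function in parentheses, as the examples on this page do, avoids the problem.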

This work is licensed under a Creative Commons Attribution 4.0 International License