Wolfram Function Repository

Instant-use add-on functions for the Wolfram Language

Function Repository Resource: NetFuseBatchNorms

Fuse a BatchNormalizationLayer preceded by a ConvolutionLayer into a single ConvolutionLayer

ResourceFunction["NetFuseBatchNorms"][convLayer, bnLayer] fuses the weights of an initialized ConvolutionLayer convLayer and the initialized BatchNormalizationLayer bnLayer that follows it into a single ConvolutionLayer whenever possible.

ResourceFunction["NetFuseBatchNorms"][net] repeatedly fuses the weights of each initialized BatchNormalizationLayer in net that is preceded by an initialized ConvolutionLayer into a single ConvolutionLayer.
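Fusion is possible because a batch normalization layer in inference mode is a per-channel affine map. The derivation below assumes the standard inference-mode definition, with scaling γ, biases β, moving mean μ, moving variance σ² and the layer's small constant ε ("Epsilon"). Under that assumption, a convolution with weights W and biases b followed by batch normalization reduces to one convolution with rescaled weights W′ and shifted biases b′. Here c indexes output channels and ∗ denotes convolution. This is the textbook folding argument. It is not taken from the resource function's source.

```latex
\begin{aligned}
y_c &= \gamma_c \, \frac{(W_c \ast x + b_c) - \mu_c}{\sqrt{\sigma_c^2 + \varepsilon}} + \beta_c \\
s_c &= \frac{\gamma_c}{\sqrt{\sigma_c^2 + \varepsilon}} \\
y_c &= (s_c W_c) \ast x + s_c (b_c - \mu_c) + \beta_c \\
W'_c &= s_c W_c, \qquad b'_c = s_c (b_c - \mu_c) + \beta_c
\end{aligned}
```

The third line relies on convolution being linear in its weights. Scaling the filter for output channel c therefore scales that channel's output by the same factor s_c.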

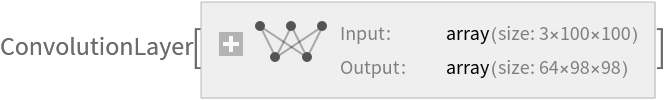

Define an initialized ConvolutionLayer with non-zero biases, followed by a BatchNormalizationLayer:

| In[1]:= |

| Out[1]= |  |

| In[2]:= | `bnLayer = NetInitialize@BatchNormalizationLayer["Scaling" -> RandomReal[1, 64], "Biases" -> RandomReal[1, 64], "MovingMean" -> RandomReal[1, 64], "MovingVariance" -> RandomReal[1, 64], "Input" -> {64, Automatic, Automatic}]` |

| Out[2]= |  |
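The sketch below checks the per-channel affine behavior that makes fusion possible. It assumes that an initialized BatchNormalizationLayer, evaluated in its default (inference) mode, computes Scaling (x - MovingMean)/Sqrt[MovingVariance + Epsilon] + Biases. The channel count, spatial size, explicit "Epsilon" value and variable names (`bn`, `manual`, `maxDiff`) are illustrative choices only.

```wolfram
(* Sketch: compare an initialized BatchNormalizationLayer with the assumed
   inference-mode formula. n, the 5x5 spatial size and eps are arbitrary. *)
n = 4; eps = 0.001;
bn = NetInitialize@BatchNormalizationLayer[
    "Scaling" -> RandomReal[1, n], "Biases" -> RandomReal[1, n],
    "MovingMean" -> RandomReal[1, n], "MovingVariance" -> RandomReal[1, n],
    "Epsilon" -> eps, "Input" -> {n, 5, 5}];
(* extract the four arrays as ordinary lists *)
{gamma, beta, mu, var} =
  Normal@NetExtract[bn, #] & /@ {"Scaling", "Biases", "MovingMean", "MovingVariance"};
x = RandomReal[1, {n, 5, 5}];
(* length-n lists thread over the first (channel) dimension of x *)
manual = (gamma/Sqrt[var + eps]) (x - mu) + beta;
(* the layer runs in single precision, so expect a tiny nonzero difference *)
maxDiff = Max@Abs[bn[x] - manual]
```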

Fuse the convLayer and bnLayer into a single ConvolutionLayer:

| In[3]:= |

| Out[3]= |  |
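The next sketch performs the same kind of fusion by hand. It extracts the arrays, rescales each output-channel filter and folds the normalization shift into the biases. The result should agree with the unfused pair up to floating-point rounding. This sketch assumes the standard folding formula and is not the resource function's actual implementation. The sizes (8 output channels, a 3×10×10 input) and the names `conv`, `bn`, `folded` and `maxDiff` are hypothetical.

```wolfram
(* Hand-written conv + batch-norm folding under the assumed inference-mode
   formula; sizes and names are illustrative, not from the resource function. *)
eps = 0.001;
conv = NetInitialize@ConvolutionLayer[8, {3, 3},
    "Biases" -> RandomReal[1, 8], "Input" -> {3, 10, 10}];
bn = NetInitialize@BatchNormalizationLayer[
    "Scaling" -> RandomReal[1, 8], "Biases" -> RandomReal[1, 8],
    "MovingMean" -> RandomReal[1, 8], "MovingVariance" -> RandomReal[1, 8],
    "Epsilon" -> eps, "Input" -> {8, Automatic, Automatic}];
w = Normal@NetExtract[conv, "Weights"]; (* dimensions {8, 3, 3, 3} *)
b = Normal@NetExtract[conv, "Biases"];
{gamma, beta, mu, var} =
  Normal@NetExtract[bn, #] & /@ {"Scaling", "Biases", "MovingMean", "MovingVariance"};
s = gamma/Sqrt[var + eps]; (* one scale factor per output channel *)
(* s w scales the whole filter of channel c by s[[c]]; biases absorb the shift *)
folded = NetReplacePart[conv, {"Weights" -> s w, "Biases" -> s (b - mu) + beta}];
x = RandomReal[1, {3, 10, 10}];
maxDiff = Max@Abs[folded[x] - NetChain[{conv, bn}][x]]
```

Because the folding happens once, ahead of time, the fused layer skips the per-element normalization arithmetic at every evaluation.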

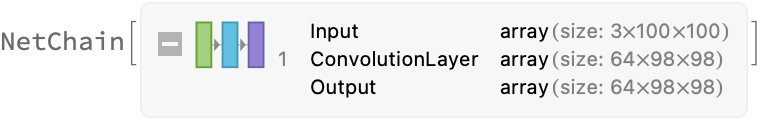

Create a NetChain:

| In[4]:= |

| Out[4]= |  |

Perform the same fusion within the NetChain:

| In[5]:= |

| Out[5]= |  |

Compare the outputs on a random input:

| In[6]:= |

| Out[7]= |
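The input cells for the NetChain steps were not recovered. The sketch below covers the same ground: build the chain, fuse it with the documented ResourceFunction["NetFuseBatchNorms"][net] form and compare outputs on a random input. The layer definitions mirror the ones written out in In[10] further down this page. The names `chain`, `fusedChain` and `maxDiff` are illustrative. Running it needs access to the Function Repository.

```wolfram
(* Sketch: fuse a conv + batch-norm NetChain and compare outputs *)
conv = NetInitialize@ConvolutionLayer[64, {3, 3},
    "Biases" -> RandomReal[1, 64], "Input" -> {3, 100, 100}];
bn = NetInitialize@BatchNormalizationLayer[
    "Scaling" -> RandomReal[1, 64], "Biases" -> RandomReal[1, 64],
    "MovingMean" -> RandomReal[1, 64], "MovingVariance" -> RandomReal[1, 64],
    "Input" -> {64, Automatic, Automatic}];
chain = NetChain[{conv, bn}];
fusedChain = ResourceFunction["NetFuseBatchNorms"][chain];
x = RandomReal[1, {3, 100, 100}];
(* expect agreement up to single-precision rounding, not bit-for-bit equality *)
maxDiff = Max@Abs[chain[x] - fusedChain[x]]
```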

Note that the fused layer is faster:

| In[8]:= |

| Out[8]= |

| In[9]:= |

| Out[9]= |
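A sketch of the timing comparison using RepeatedTiming follows. The chain definition repeats the layer definitions from In[10] so the block runs on its own. Actual timings depend on hardware and target device, and the speedup is not guaranteed to be large for a single small layer. The name `timings` is illustrative.

```wolfram
(* Sketch: time the unfused chain against its fused version on one input *)
conv = NetInitialize@ConvolutionLayer[64, {3, 3},
    "Biases" -> RandomReal[1, 64], "Input" -> {3, 100, 100}];
bn = NetInitialize@BatchNormalizationLayer[
    "Scaling" -> RandomReal[1, 64], "Biases" -> RandomReal[1, 64],
    "MovingMean" -> RandomReal[1, 64], "MovingVariance" -> RandomReal[1, 64],
    "Input" -> {64, Automatic, Automatic}];
chain = NetChain[{conv, bn}];
fusedChain = ResourceFunction["NetFuseBatchNorms"][chain];
x = RandomReal[1, {3, 100, 100}];
(* RepeatedTiming returns {seconds, result}; keep only the time *)
timings = <|"original" -> First@RepeatedTiming[chain[x]],
    "fused" -> First@RepeatedTiming[fusedChain[x]]|>
```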

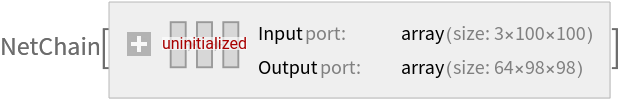

Note that NetFuseBatchNorms leaves an uninitialized net unchanged:

| In[10]:= | ``uninit = NeuralNetworks`NetDeinitialize@NetChain[{NetInitialize@ConvolutionLayer[64, {3, 3}, "Biases" -> RandomReal[1, 64], "Input" -> {3, 100, 100}], NetInitialize@BatchNormalizationLayer["Scaling" -> RandomReal[1, 64], "Biases" -> RandomReal[1, 64], "MovingMean" -> RandomReal[1, 64], "MovingVariance" -> RandomReal[1, 64], "Input" -> {64, Automatic, Automatic}]}]`` |

| Out[10]= |  |

| In[11]:= |

| Out[11]= |  |

| In[12]:= |

| Out[12]= |
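This sketch checks that behavior without the internal NeuralNetworks`NetDeinitialize function. It builds a chain whose arrays are never set and counts the BatchNormalizationLayer objects left after the call. Based on the note above, the count is expected to stay at 1. That expectation is an assumption drawn from this page and has not been independently verified. The names `uninitChain`, `result` and `bnCount` are illustrative.

```wolfram
(* Sketch: a chain with no arrays set should keep its batch-norm layer *)
uninitChain = NetChain[{
    ConvolutionLayer[64, {3, 3}, "Input" -> {3, 100, 100}],
    BatchNormalizationLayer[]}];
result = ResourceFunction["NetFuseBatchNorms"][uninitChain];
(* Normal of a NetChain gives its list of layers *)
bnCount = Count[Normal[result], _BatchNormalizationLayer]
```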

Get a pretrained ShuffleNet-V2, with its output decoder removed and its nested layers flattened:

| In[13]:= | `shuffleNet = NetFlatten@NetReplacePart[NetModel["ShuffleNet-V2 Trained on ImageNet Competition Data"], "Output" -> None];` |

Accelerate ShuffleNet-V2 by fusing its batch normalization layers:

| In[14]:= |

Compare the outputs on a random image:

| In[15]:= |

| Out[16]= |
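The inputs for the acceleration and comparison steps were not recovered. The sketch below shows one way to reproduce them with the In[13] definition. It assumes network access for both NetModel and the resource function. The 224×224 random image and the names `fusedNet`, `img` and `maxDiff` are illustrative choices.

```wolfram
(* Sketch: fuse ShuffleNet-V2 and compare raw outputs on a random image *)
shuffleNet = NetFlatten@NetReplacePart[
    NetModel["ShuffleNet-V2 Trained on ImageNet Competition Data"], "Output" -> None];
fusedNet = ResourceFunction["NetFuseBatchNorms"][shuffleNet];
img = RandomImage[1, {224, 224}, ColorSpace -> "RGB"];
(* the input encoder is kept, so both nets accept an image directly *)
maxDiff = Max@Abs[shuffleNet[img] - fusedNet[img]]
```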

The fused net is lighter, as it contains fewer BatchNormalizationLayer layers:

| In[17]:= |

| Out[17]= |

| In[18]:= |

| Out[18]= |
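The layer-count comparison can be sketched with NetInformation and its "LayerTypeCounts" property. Lookup returns 0 when no BatchNormalizationLayer remains. The names `fusedNet` and `bnCounts` are illustrative, and the block downloads the model again so that it runs on its own.

```wolfram
(* Sketch: count BatchNormalizationLayer objects before and after fusion *)
shuffleNet = NetFlatten@NetReplacePart[
    NetModel["ShuffleNet-V2 Trained on ImageNet Competition Data"], "Output" -> None];
fusedNet = ResourceFunction["NetFuseBatchNorms"][shuffleNet];
bnCounts = Lookup[NetInformation[#, "LayerTypeCounts"], BatchNormalizationLayer, 0] & /@
  {shuffleNet, fusedNet}
```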

This work is licensed under a Creative Commons Attribution 4.0 International License