Wolfram Function Repository

Instant-use add-on functions for the Wolfram Language

Function Repository Resource: LogSumExpLayer

Neural network layer that implements the LogSumExp operation on any level

ResourceFunction["LogSumExpLayer"][] creates a NetGraph that computes the LogSumExp of an array on level 1.

ResourceFunction["LogSumExpLayer"][{n1,n2,…}] computes the LogSumExp of an array on levels n1,n2,….

Aggregate on different levels:

| In[2]:= |

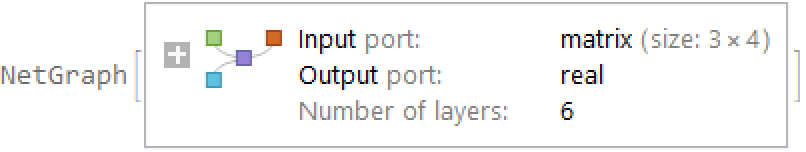

| Out[2]= |

| In[3]:= |

| Out[3]= |

| In[4]:= |

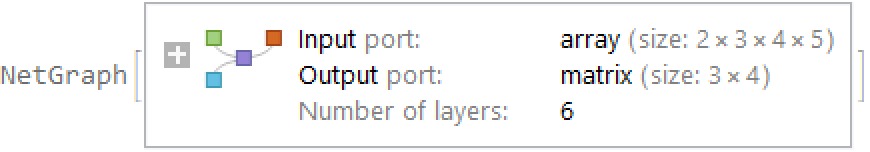

| Out[4]= |

| In[5]:= |

| Out[5]= |
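To make the idea of aggregating on a level concrete, here is a minimal plain-Python sketch for a rectangular nested list. It is an analogue written for illustration, not the NetGraph that LogSumExpLayer builds. It assumes that level 1 refers to the outermost dimension and level 2 to the next one; the names `logsumexp` and `logsumexp_level` are hypothetical.

```python
import math

def logsumexp(values):
    """LogSumExp of a flat list, shifted by the maximum for stability."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def logsumexp_level(matrix, level):
    """LogSumExp of a rectangular nested list over level 1 or level 2.

    Assumption: level 1 is the outer dimension, level 2 the inner one.
    """
    if level == 1:
        # Collapse the outer dimension: one result per column.
        return [logsumexp(list(column)) for column in zip(*matrix)]
    if level == 2:
        # Collapse the inner dimension: one result per row.
        return [logsumexp(row) for row in matrix]
    raise ValueError("this sketch only handles levels 1 and 2")

matrix = [[0.0, 1.0, 2.0],
          [3.0, 4.0, 5.0]]
over_level_1 = logsumexp_level(matrix, 1)  # three values, one per column
over_level_2 = logsumexp_level(matrix, 2)  # two values, one per row
```

Aggregating a 2×3 array on level 1 leaves three values, while aggregating on level 2 leaves two.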

Compute the mean instead of the sum:

| In[6]:= |

| Out[6]= |

Compare with the ordinary method of evaluation:

| In[7]:= |

| Out[7]= |
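The relation between the sum and mean variants can be sketched in plain Python. This assumes that the mean variant computes the logarithm of the mean of the exponentials, which differs from LogSumExp only by the constant log(n). The function names are hypothetical and the code is an analogue, not the resource function itself.

```python
import math

def logsumexp(values):
    """LogSumExp of a flat list, shifted by the maximum for stability."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def logmeanexp(values):
    """log(mean(exp(x))) = log(sum(exp(x))) - log(n)."""
    return logsumexp(values) - math.log(len(values))

data = [0.5, 1.5, 2.5]

# Ordinary evaluation: exponentiate, average, take the logarithm.
direct = math.log(sum(math.exp(v) for v in data) / len(data))

# Evaluation through the shifted LogSumExp.
shifted = logmeanexp(data)
```

For moderate inputs such as these, `direct` and `shifted` agree up to rounding error.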

This option should not be needed under normal circumstances; it exists mainly for cases where the default sorting does not work correctly. By default, LogSumExpLayer sorts the level specifications so that the sequence of ReplicateLayer operations in the NetGraph reshapes the computed maxima correctly:

| In[8]:= |

| Out[8]= |

Without the correct sorting order, the network cannot be constructed:

| In[9]:= |

| Out[9]= |

| In[10]:= |

| Out[10]= |
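The reference to computed maxima suggests the standard max-shift identity for LogSumExp. The source does not state the formula, so the following is an inference rather than a description of the actual NetGraph:

$$
\operatorname{LSE}(x) = \log \sum_i e^{x_i} = m + \log \sum_i e^{x_i - m}, \qquad m = \max_i x_i .
$$

When the maximum is taken over some levels of an array, m has fewer dimensions than x. It has to be replicated back to the shape of x before the subtraction x − m can be carried out, which is presumably why the order of the level specifications matters.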

Calculate the LogSumExp of a list:

| In[11]:= |

| Out[11]= |

This is equivalent to chaining the functions Exp, Total and Log together:

| In[12]:= |

| Out[12]= |
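For readers more familiar with Python, the same three-step chain looks as follows. This is an analogue using only the standard `math` module; the name `naive_logsumexp` is hypothetical.

```python
import math

def naive_logsumexp(values):
    """Chain the three steps directly, with no protection against overflow."""
    exponentials = [math.exp(v) for v in values]  # corresponds to Exp
    total = sum(exponentials)                     # corresponds to Total
    return math.log(total)                        # corresponds to Log

result = naive_logsumexp([1.0, 2.0, 3.0])  # approximately 3.4076
```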

However, for inputs that are very large in magnitude, whether positive or negative, machine-precision numbers overflow or underflow during the computation:

| In[13]:= |

| Out[13]= |
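The failure mode can be reproduced with IEEE double-precision floats in plain Python. This is an analogue only: Python signals the problem with exceptions, and the way the Wolfram Language reports it may differ. The helper names are hypothetical.

```python
import math

def naive_logsumexp(values):
    """Direct chain of exp, sum and log."""
    return math.log(sum(math.exp(v) for v in values))

def try_naive(values):
    """Return the result, or the name of the exception the naive chain raises."""
    try:
        return naive_logsumexp(values)
    except (OverflowError, ValueError) as err:
        return type(err).__name__

# exp(1000.0) exceeds the largest representable double.
large = try_naive([1000.0, 1001.0])    # "OverflowError"

# exp(-1000.0) underflows to 0.0, so the sum is 0.0 and log(0.0) fails.
small = try_naive([-1000.0, -1001.0])  # "ValueError"
```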

Evaluating the chain for such inputs requires arbitrary-precision numbers:

| In[14]:= |

| Out[14]= |

LogSumExpLayer still works in machine precision for such numbers:

| In[15]:= |

| Out[15]= |

| In[16]:= |

| Out[16]= |
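A common way to stay within machine precision is to subtract the maximum before exponentiating. The sketch below shows that technique in plain Python. It is an illustration of the general trick and is not taken from the LogSumExpLayer source; the name `stable_logsumexp` is hypothetical.

```python
import math

def stable_logsumexp(values):
    """LogSumExp with the maximum subtracted before exponentiating."""
    m = max(values)
    # Every exponent v - m is <= 0, so exp cannot overflow. The largest
    # term is exp(0) = 1, so the sum is at least 1 and log is well defined.
    return m + math.log(sum(math.exp(v - m) for v in values))

large = stable_logsumexp([1000.0, 1001.0])    # approximately 1001.3133
small = stable_logsumexp([-1000.0, -1001.0])  # approximately -999.6867
```

Both inputs would break a direct exp, sum, log chain in double precision, yet the shifted form returns finite results.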

Positive and negative level specifications can be used together only as long as they remain ordered:

| In[17]:= |

| Out[17]= |

| In[18]:= |

| Out[18]= |

This work is licensed under a Creative Commons Attribution 4.0 International License