Basic Examples (2)

Find the answer to a question based on a given text:

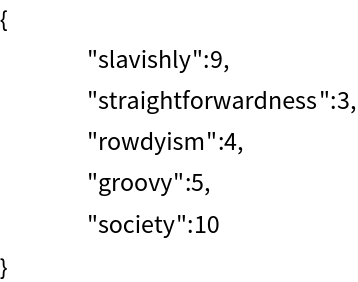

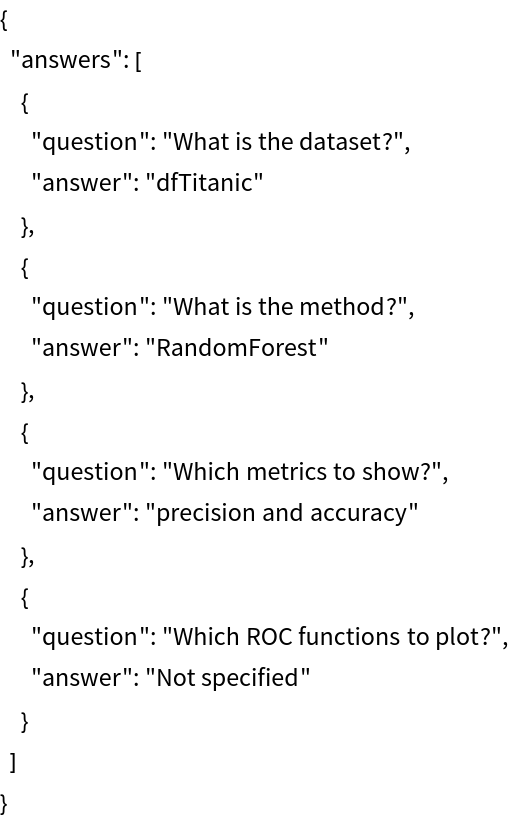

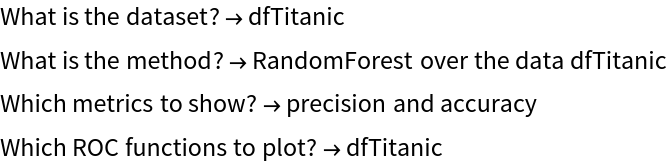

Find the parameters of a computation workflow specification:

Scope (2)

If one question is asked and the third argument is String, then a string is returned as an answer (note that date results are given in the ISO-8601 standard, per the default prompt of LLMTextualAnswer):
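A minimal sketch of the single-question form, reusing the Tesla text and the question "Where born?" from this page's code cells. It assumes LLM access is already configured in the session; the exact wording of the returned string depends on the model.

```wolfram
text = "Born and raised in the Austrian Empire, Tesla studied engineering and physics in the 1870s without receiving a degree, gaining practical experience in the early 1880s working in telephony and at Continental Edison in the new electric power industry.";

(* One question plus String as the third argument: a plain string is expected back *)
ResourceFunction["LLMTextualAnswer"][text, "Where born?", String]
```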

Here is a recommender system pipeline specification:

Here is a list of questions and the corresponding list of answers for getting the parameters of the pipeline:

Here we get a question-answer association:

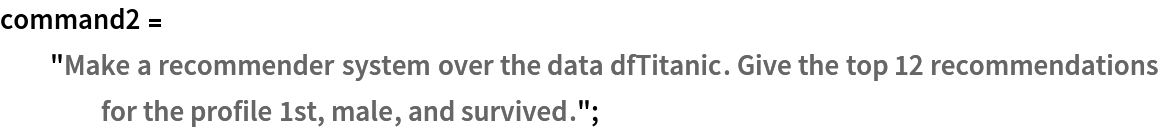

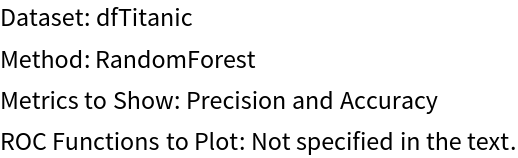

Here is the LLM function constructed by LLMTextualAnswer:

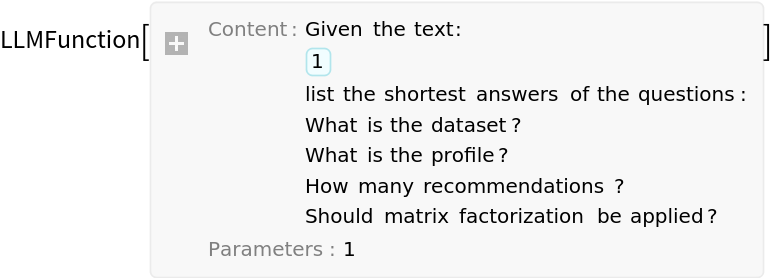

Alternatively, just the string template can be obtained:

Options (12)

Prelude (5)

Here is the default prelude:

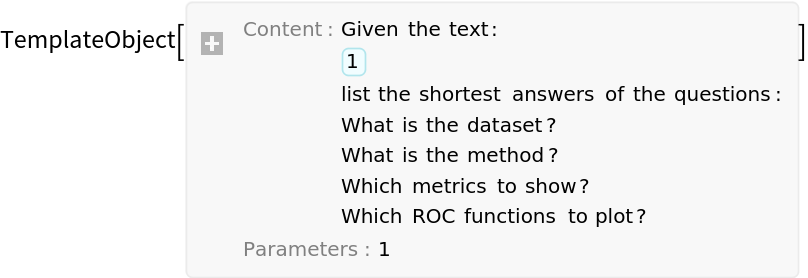

It can be beneficial to change the prelude if the first argument has a more specific form (such as XML, JSON, or WL). For example, here is a JSON string:

Here we change the prelude:
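A sketch of such a prelude change. The questions and the "Prelude" value are the ones used in this page's code cells; the JSON string itself is a made-up stand-in, since the original value is not shown in the text.

```wolfram
(* Hypothetical JSON string, for illustration only *)
json = "{\"sale_id\": 12, \"store\": \"Springfield\", \"total_amount_usd\": 1249.5}";

(* Tell the LLM what kind of text it is looking at via the "Prelude" option *)
ResourceFunction["LLMTextualAnswer"][
  json,
  {"What is the longest key?", "Which is the largest value"},
  "Prelude" -> "Given the JSON dictionary:"
]
```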

Without the prelude change, some LLMs have a hard time finding the answers:

But some models do succeed:

Prompt (4)

The default prompt is crafted so that the LLM returns a list of question-answer pairs in JSON format. Here is the default prompt:

Here the results are easy to process further in a programmatic way:
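To illustrate why JSON-shaped answers are convenient, here is a stand-alone sketch that does not call an LLM. The string rawAnswer is a hypothetical response of the kind the default prompt asks for; LLMTextualAnswer may already do this parsing internally when an association is requested.

```wolfram
(* Hypothetical raw LLM output in JSON form *)
rawAnswer = "{\"What is the dataset?\": \"dfTitanic\", \"What is the method?\": \"RandomForest\", \"Which metrics to show?\": \"precision, accuracy\"}";

(* Parse the JSON text into an Association *)
answers = ImportString[rawAnswer, "RawJSON"];

(* Individual answers can be looked up and transformed directly *)
method = answers["What is the method?"];
metrics = StringTrim /@ StringSplit[answers["Which metrics to show?"], ","];

{method, metrics}
```

With the sample string above, this evaluates to {"RandomForest", {"precision", "accuracy"}}.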

Using a different prompt does not guarantee actionable results:

Here no prompt is used -- the answers are correct, but further processing is needed in order to use them in computations:

Request (3)

For the default value of the "Request" option, Automatic, LLMTextualAnswer uses the request "list the shortest answers of the questions:" when several questions are asked:

On the other hand, for a single question, "Request" → Automatic uses the request "give the shortest answer of the question:":

Here the request is changed to "give a complete sentence as an answer to the question":

Applications (6)

Random mandala creation by verbal commands (6)

ResourceFunction["RandomMandala"] takes its arguments (such as rotational symmetry order or symmetry) through option specifications. Here we make a list of the options we want to specify:

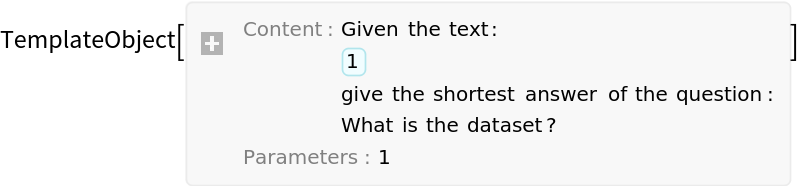

Here we create corresponding "extraction" questions and display them:

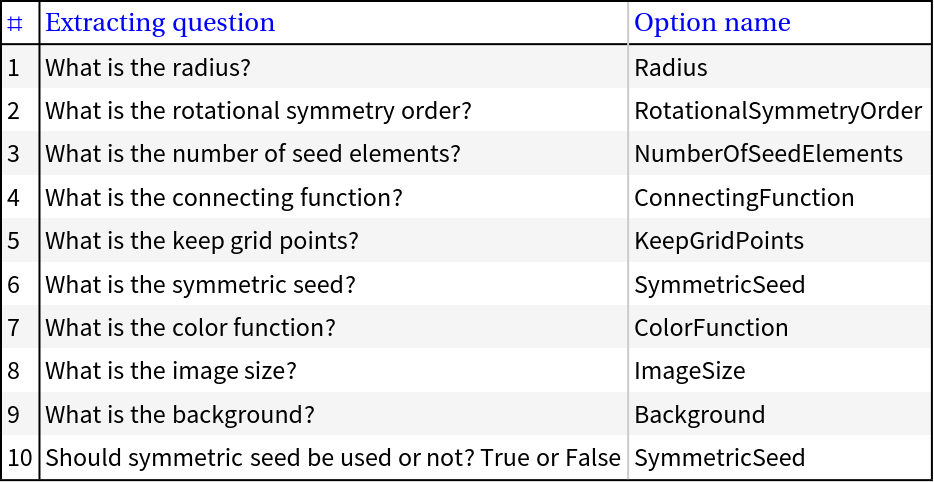

Here we define rules to make the LLM responses more acceptable to WL:

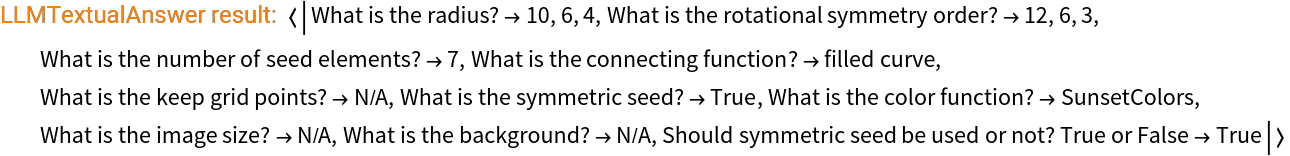
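The effect of such rules can be checked without calling an LLM. The sketch below copies curveRules and boolRules from this page's code and applies them to a hypothetical set of answers. The option names other than "SymmetricSeed" are assumptions about RandomMandala, and the bracing pattern is a simplified version of the one used on this page.

```wolfram
(* Literal rules copied from this page's code *)
curveRules = {"bezier curve" -> "BezierCurve", "bezier function" -> "BezierCurve",
   "filled curve" -> "FilledCurve@*BezierCurve", "filled bezier curve" -> "FilledCurve@*BezierCurve"};
boolRules = {"true" -> "True", "yes" -> "True", "false" -> "False", "no" -> "False"};

(* Hypothetical LLM answers keyed by option name *)
answers = <|"ConnectingFunction" -> "Filled curve", "SymmetricSeed" -> "false",
   "RotationalSymmetryOrder" -> "12, 6, 3"|>;

(* Step 1: replace known phrases with WL names, ignoring case *)
step1 = Map[StringReplace[#, Join[curveRules, boolRules], IgnoreCase -> True] &, answers];

(* Step 2: wrap answers made only of digits, commas, and spaces in list braces *)
step2 = Map[
   StringReplace[#,
     StartOfString ~~ x : ((DigitCharacter | "," | WhitespaceCharacter) ..) ~~ EndOfString :>
      "{" <> x <> "}"] &, step1];

(* Step 3: convert the cleaned strings into WL expressions *)
args = Map[ToExpression, step2]
```

For these sample answers, args is an association with FilledCurve@*BezierCurve, False, and {12, 6, 3} as values. Since ToExpression evaluates whatever the string contains, applying it to unvetted LLM output deserves some caution.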

Here we define a function that converts natural language commands into images of random mandalas:

Here is an example application:

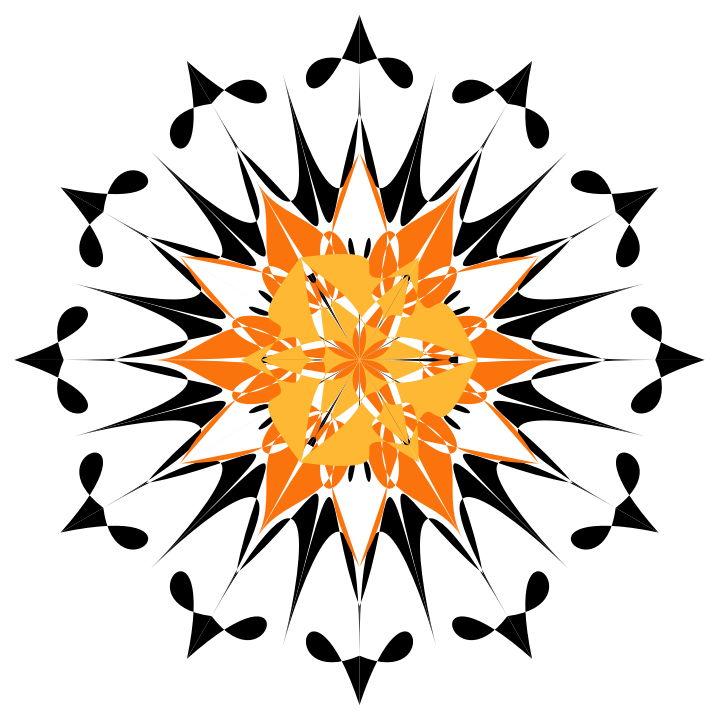

Here is an application with multi-symmetry and multi-radius specifications:

Properties and Relations (2)

The built-in function FindTextualAnswer has the same purpose. LLMTextualAnswer seems to be more precise and powerful, while FindTextualAnswer is often faster:
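One way to compare the two functions is to time both on the same text and question. This is only a sketch: it reuses the Tesla text from this page, assumes LLM access is configured, and the timings and answers will vary with the model, the network, and whether FindTextualAnswer has to fetch its resources on first use.

```wolfram
text = "Born and raised in the Austrian Empire, Tesla studied engineering and physics in the 1870s without receiving a degree, gaining practical experience in the early 1880s working in telephony and at Continental Edison in the new electric power industry.";
question = "Where born?";

(* Built-in function *)
AbsoluteTiming[FindTextualAnswer[text, question]]

(* LLM-based resource function; requires a configured LLM service *)
AbsoluteTiming[ResourceFunction["LLMTextualAnswer"][text, question]]
```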

A main motivation for making this function was to have a more powerful and precise alternative to FindTextualAnswer in the paclet NLPTemplateEngine. Here is the Wolfram Language pipeline built by the NLPTemplateEngine function Concretize for a classifier specification (similar to the one used above):

Possible Issues (4)

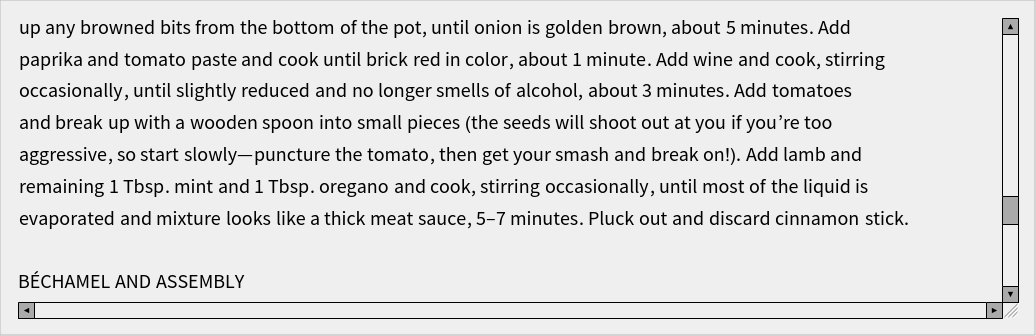

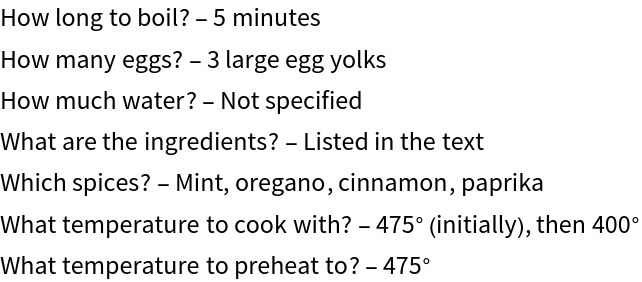

Some combinations of texts, questions, preludes, and requests might be incompatible with the default prompt (which is aimed at getting JSON dictionaries). For example, consider this recipe:

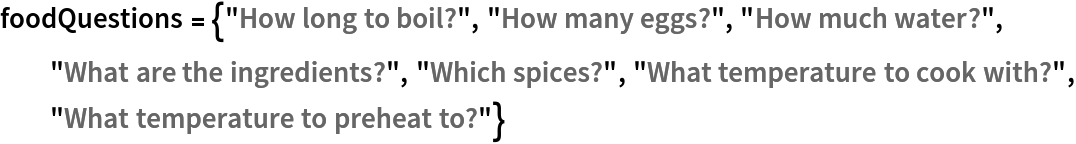

Here are related food questions:

Here LLMTextualAnswer is invoked and fails (with default settings):

Here a result is obtained after replacing the default prompt with an empty string:

Neat Examples (6)

Make a "universal" function for invoking functions from Wolfram Function Repository:

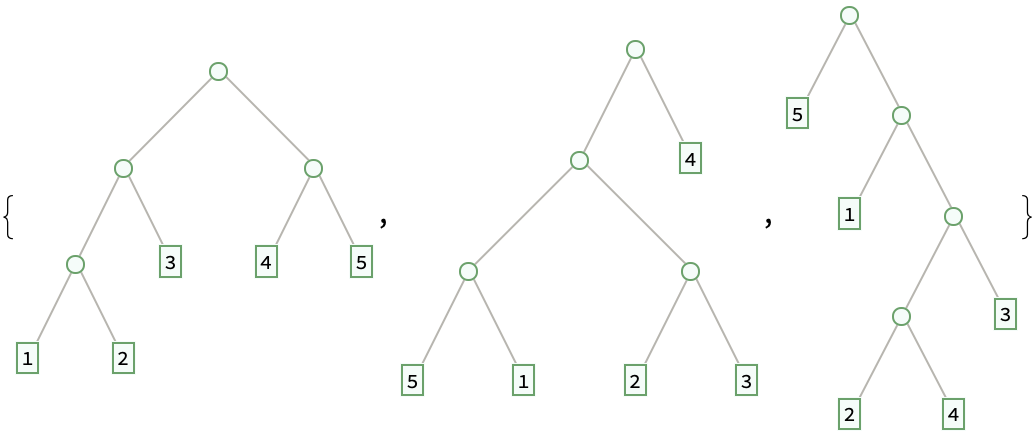

Request a binary tree:

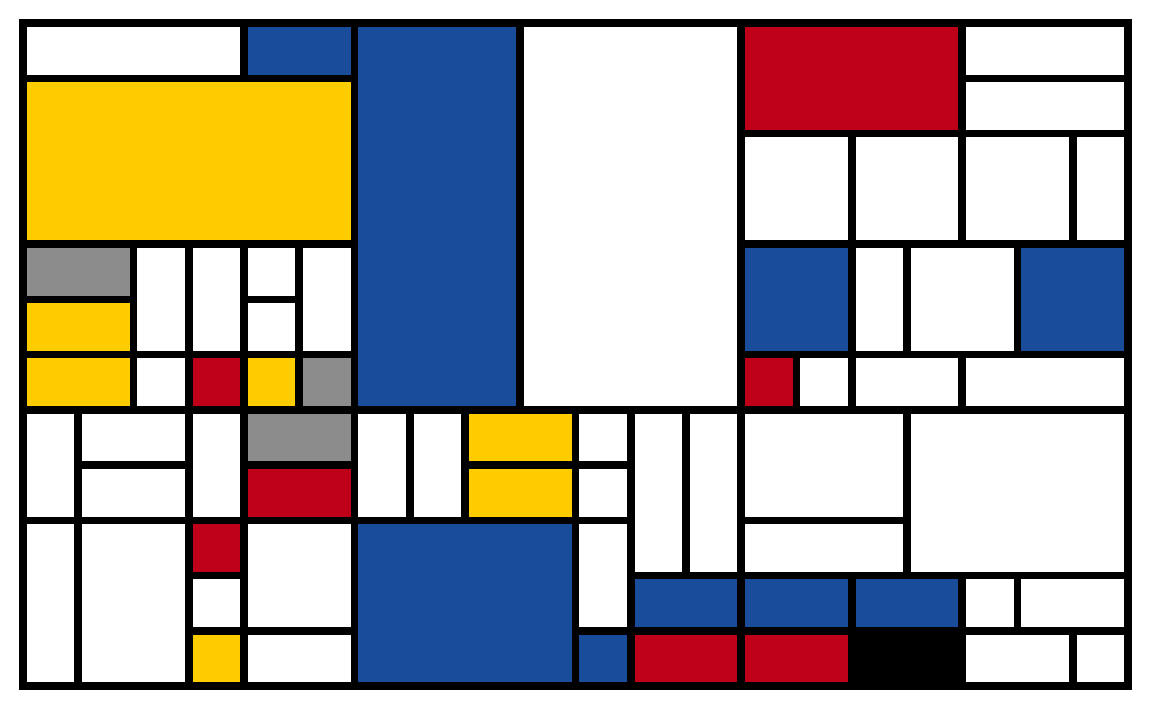

Request a Mondrian:

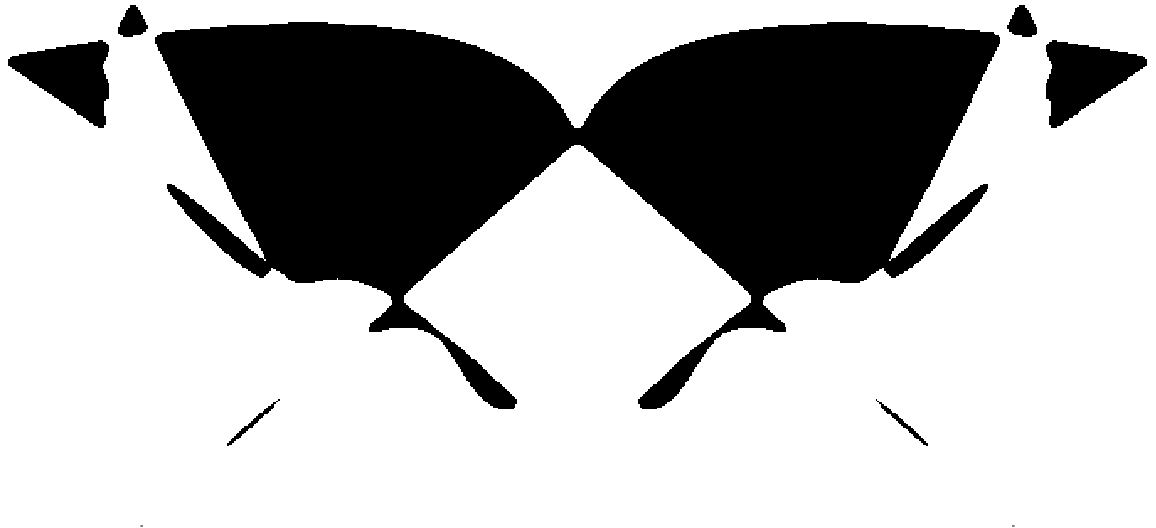

Request a Rorschach (inkblot) pattern:

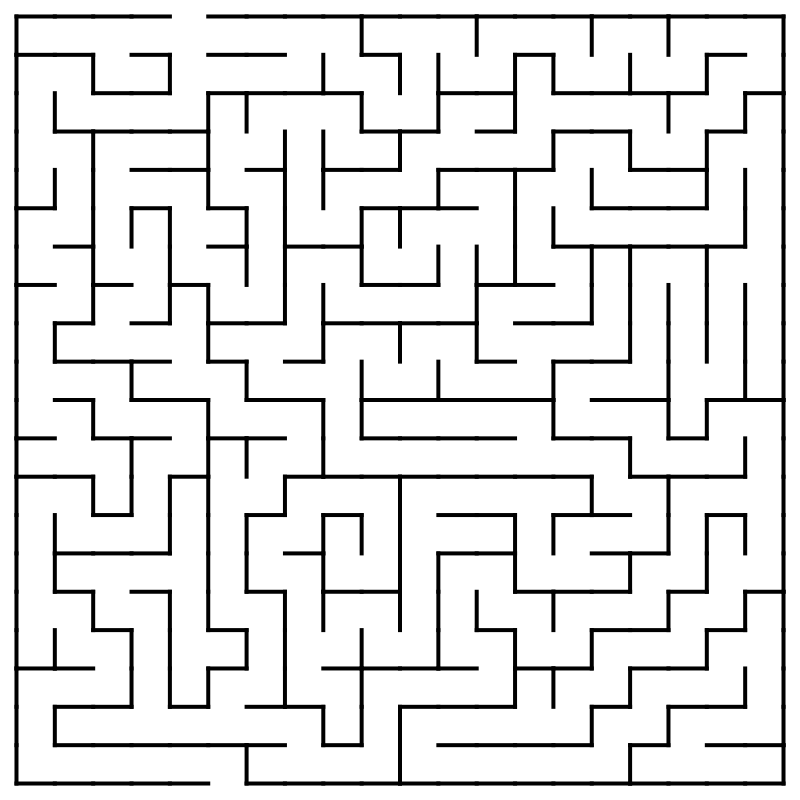

Request a maze:

Request a random English haiku and specify an LLMEvaluator to use:

![ResourceFunction["LLMTextualAnswer", ResourceVersion->"1.0.0"]["Born and raised in the Austrian Empire, Tesla studied engineering and physics in the 1870s without receiving a degree,

gaining practical experience in the early 1880s working in telephony and at Continental Edison in the new electric power industry.",

"Where born?"

]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/6e17c477e55ed8c0.png)

![command = "Make a classifier with the method RandomForest over the data dfTitanic; show precision and accuracy.";

questions = {

"What is the dataset?",

"What is the method?",

"Which metrics to show?",

"Which ROC functions to plot?"};

ResourceFunction["LLMTextualAnswer"][command, questions]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/77b092639c54c151.png)

![questions2 = {"What is the dataset?", "What is the profile?", "How many recommendations?", "Should matrix factorization be applied?"};

ResourceFunction["LLMTextualAnswer"][command2, questions2, List]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/2322a5ed6612731f.png)

![ResourceFunction["LLMTextualAnswer", ResourceVersion->"1.0.0"][json, {"What is the longest key?", "Which is the largest value"}, "Prelude" -> "Given the JSON dictionary:"]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/039df5e336104b82.png)

![ResourceFunction["LLMTextualAnswer", ResourceVersion->"1.0.0"][json, {"What is the longest key?", "Which is the largest value"}, LLMEvaluator -> LLMConfiguration["Model" -> "gpt-4o"]]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/46f34da7fc463d62.png)

![ResourceFunction["LLMTextualAnswer", ResourceVersion->"1.0.0"][json, {"What is the longest key?", "Which is the largest value"}, LLMEvaluator -> LLMConfiguration["Model" -> "gpt-3.5-turbo"]]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/07fe482c4dfb7ad8.png)

![command = "Make a classifier with the method RandomForest over the data dfTitanic; show precision and accuracy.";

questions = {

"What is the dataset?",

"What is the method?",

"Which metrics to show?",

"Which ROC functions to plot?"};

ResourceFunction["LLMTextualAnswer"][command, questions]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/701e4b89fbab6e64.png)

![command = "Make a classifier with the method RandomForest over the data dfTitanic; show precision and accuracy.";

questions = {

"What is the dataset?",

"What is the method?",

"Which metrics to show?",

"Which ROC functions to plot?"};

ResourceFunction["LLMTextualAnswer", ResourceVersion->"1.0.0"][command, questions, StringTemplate]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/35cfa5d1276c32bf.png)

![ResourceFunction["LLMTextualAnswer", ResourceVersion->"1.0.0"]["Born and raised in the Austrian Empire, Tesla studied engineering and physics in the 1870s without receiving a degree,

gaining practical experience in the early 1880s working in telephony and at Continental Edison in the new electric power industry.",

"Where born?",

String,

"Prompt" -> "",

"Request" -> "give a complete sentence as an answer to the question"

]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/63e245b04e611ad8.png)

![mandalaQuestions = Association@

Map["What is the " <> ToLowerCase@

StringReplace[ToString[#], RegularExpression["(?<=\\S)(\\p{Lu})"] :> " $1"] <> "?" -> # &, mandalaOptKeys];

mandalaQuestions = Append[mandalaQuestions, "Should symmetric seed be used or not? True or False" -> "SymmetricSeed"];

ResourceFunction["GridTableForm"][List @@@ Normal[mandalaQuestions], TableHeadings -> {"Extracting question", "Option name"}]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/51be11e25092138c.png)

![numberRules = Map[IntegerName[#, {"English", "Words"}] -> ToString[#] &, Range[100]];

curveRules = {"bezier curve" -> "BezierCurve", "bezier function" -> "BezierCurve", "filled curve" -> "FilledCurve@*BezierCurve", "filled bezier curve" -> "FilledCurve@*BezierCurve"};

boolRules = {"true" -> "True", "yes" -> "True", "false" -> "False", "no" -> "False"};](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/31a80f6bbcad4e64.png)

![Clear[LLMRandomMandala];

Options[LLMRandomMandala] = Join[{"Echo" -> False}, Options[

ResourceFunction["LLMTextualAnswer"]]];

LLMRandomMandala[cmd_String, questions_Association : mandalaQuestions,

opts : OptionsPattern[]] :=

Block[{echoQ = TrueQ[OptionValue[LLMRandomMandala, "Echo"]], params,

args, res},

params = ResourceFunction["LLMTextualAnswer"][cmd, Keys@questions, Association];

If[echoQ, Echo[params, "LLMTextualAnswer result:"]];

params = Select[params, # != "N/A" &];

args = KeyMap[# /. questions &, params];

args = Map[StringReplace[#, Join[numberRules, curveRules, boolRules], IgnoreCase -> True] &, args];

args = Map[StringReplace[#, StartOfString ~~ x : ((DigitCharacter | "," | "{" | "}" | "(" | ")" | WhitespaceCharacter) ..) ~~ EndOfString :> "{" <> x <> "}"] &, args];

args = Map[(

t = ToExpression[#];

Which[

VectorQ[t, NumericQ] && Length[t] == 1, t[[1]],

MemberQ[ColorData["Gradients"], #], #,

True, t

]

) &, args];

If[echoQ, Echo[args, "Processed arguments:"]];

ResourceFunction["RandomMandala"][Sequence @@ Normal[args]]

];](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/1f2244f969a7ea18.png)

![SeedRandom[33]; LLMRandomMandala["Make a random mandala with rotational symmetry seven, connecting function is a filled curve, and the number of seed elements is six. Use asymmetric seed.",

"Echo" -> True

]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/0096167ade6ad716.png)

![SeedRandom[775]; LLMRandomMandala["Make a random mandala with rotational symmetry 12, 6, and 3, radiuses 10, 6, 4, (keep that order), the connecting function is a filled curve, and the number of seed elements is seven. Use symmetric seed and color function SunsetColors.",

"Echo" -> True,

LLMEvaluator -> LLMConfiguration["Model" -> "gpt-3.5-turbo"]

]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/41454f5d2c4a710a.png)

![spec = "Make a classifier with the method 'RandomForest' over the dataset dfTitanic; use the split ratio 0.82; show precision and accuracy.";

PacletSymbol[

"AntonAntonov/NLPTemplateEngine", "AntonAntonov`NLPTemplateEngine`Concretize"][spec]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/1a84f8af05a46421.png)

![recipe = "\nA comfort food classic, this Greek casserole is really delicious the day after, and believe it or not, it's great straight out of the fridge for breakfast. Don't ask us how we know this, but if you like cold pizza, you'll like cold moussaka.\n\nIngredients\n\n8 SERVINGS\n\nEGGPLANT AND LAMB\n8 garlic cloves, finely grated, divided\n½ cup plus 2 tablespoons extra-virgin olive oil\n2 tablespoons chopped mint, divided\n2 tablespoons chopped oregano, divided\n3 medium eggplants (about 3½ pounds total), sliced crosswise into ½-inch-thick rounds\n2½ teaspoons kosher salt, plus more\n½ teaspoon freshly ground black pepper, plus more\n2 pounds ground lamb\n2 medium onions, chopped\n1 3-inch cinnamon stick\n2 Fresno chiles, finely chopped\n1 tablespoon paprika\n1 tablespoon tomato paste\n¾ cup dry white wine\n1 28-ounce can whole peeled tomatoes\n\nBÉCHAMEL AND ASSEMBLY\n6 tablespoons unsalted butter\n½ cup all-purpose flour\n2½ cups whole milk, warmed\n¾ teaspoon kosher salt\n4 ounces farmer cheese, crumbled (about 1 cup)\n2 ounces Pecorino or Parmesan, finely grated (about 1¾ cups), divided\n3 large egg yolks, beaten to blend\n\nPreparation\n\nEGGPLANT AND LAMB\n\nStep 1\nPlace a rack in middle of oven; preheat to 475°. Whisk half of the garlic, ½ cup oil, 1 Tbsp. mint, and 1 Tbsp. oregano in a small bowl. Brush both sides of eggplant rounds with herb oil, making sure to get all the herbs and garlic onto eggplant; season with salt and pepper. Transfer eggplant to a rimmed baking sheet (it's okay to pile the rounds on top of each other) and roast until tender and browned, 35\[Dash]45 minutes. Reduce oven temperature to 400°.\n\nStep 2\nMeanwhile, heat remaining 2 Tbsp. oil in a large wide pot over high. Cook lamb, breaking up with a spoon, until browned on all sides and cooked through and liquid from meat is evaporated (there will be a lot of rendered fat), 12\[Dash]16 minutes. 
Strain fat through a fine-mesh sieve into a clean small bowl and transfer lamb to a medium bowl. Reserve 3 Tbsp. lamb fat; discard remaining fat.\n\nStep 3\nHeat 2 Tbsp. lamb fat in same pot over medium-high (reserve remaining 1 Tbsp. lamb fat for assembling the moussaka). Add onion, cinnamon, 2½ tsp. salt, and ½ tsp. pepper and cook, stirring occasionally, until onion is tender and translucent, 8\[Dash]10 minutes. Add chiles and remaining garlic and cook, scraping up any browned bits from the bottom of the pot, until onion is golden brown, about 5 minutes. Add paprika and tomato paste and cook until brick red in color, about 1 minute. Add wine and cook, stirring occasionally, until slightly reduced and no longer smells of alcohol, about 3 minutes. Add tomatoes and break up with a wooden spoon into small pieces (the seeds will shoot out at you if you're too aggressive, so start slowly\[LongDash]puncture the tomato, then get your smash and break on!). Add lamb and remaining 1 Tbsp. mint and 1 Tbsp. oregano and cook, stirring occasionally, until most of the liquid is evaporated and mixture looks like a thick meat sauce, 5\[Dash]7 minutes. Pluck out and discard cinnamon stick.\n\nBÉCHAMEL AND ASSEMBLY\n\nStep 4\nHeat butter in a medium saucepan over medium until foaming. Add flour and cook, whisking constantly, until combined, about 1 minute. Whisk in warm milk and bring sauce to a boil. Cook béchamel, whisking often, until very thick (it should have the consistency of pudding), about 5 minutes; stir in salt. Remove from heat and whisk in farmer cheese and half of the Pecorino. Let sit 10 minutes for cheese to melt, then add egg yolks and vigorously whisk until combined and béchamel is golden yellow.\n\nStep 5\nBrush a 13x9'' baking pan with remaining 1 Tbsp. lamb fat. Layer half of eggplant in pan, covering the bottom entirely. Spread half of lamb mixture over eggplant in an even layer. Repeat with remaining eggplant and lamb to make another layer of each. 
Top with béchamel and smooth surface; sprinkle with remaining Pecorino.\n\nStep 6\nBake moussaka until bubbling vigorously and béchamel is browned in spots, 30\[Dash]45 minutes. Let cool 30 minutes before serving.\nStep 7\n\nDo Ahead: Moussaka can be baked 1 day ahead. Let cool, then cover and chill, or freeze up to 3 months. Thaw before reheating in a 250° oven until warmed through, about 1 hour.\n";

Panel[Pane[recipe, Sequence[

ImageSize -> {500, 150}, Scrollbars -> {True, True}]]]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/581a6882d28f1dad.png)

![Clear[LLMInvoker];

LLMInvoker[command_String, opts : OptionsPattern[]] :=

Block[{questions, ans},

questions = {"Which function?", "Which arguments?"};

ans = ResourceFunction["LLMTextualAnswer"][

command,

questions,

"Request" -> "use camel case for to answer the questions, do not add prefixes like 'make', start with a capital letter, give the arguments as a list:",

opts];

ResourceFunction[ans["Which function?"]][

Sequence @@ If[MemberQ[{None, "None", "N/A", "n/a"}, ans["Which arguments?"]], {}, ToExpression@ans["Which arguments?"]]]

];](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/1bc3f0768e85ca00.png)

![SeedRandom[8728];

LLMInvoker["Make a random binary tree with arguments 5 and 3.", LLMEvaluator -> LLMConfiguration["Model" -> "gpt-4-turbo"]]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/57a3a22e984275ad.png)

![LLMInvoker["Make a random english haiku.", LLMEvaluator -> LLMConfiguration["Model" -> "gpt-4-turbo"]]](https://www.wolframcloud.com/obj/resourcesystem/images/e53/e539bb1c-600e-43e4-9b73-a6c08b823934/1-0-0/2cf492ea167721cc.png)