Wolfram Function Repository

Instant-use add-on functions for the Wolfram Language

Function Repository Resource:

Import a safetensors binary file

ResourceFunction["ImportSafetensors"][file] returns an Association of all tensors in a safetensors binary file, keyed by tensor name.

ResourceFunction["ImportSafetensors"][file,name] returns the tensor with the given name.

ResourceFunction["ImportSafetensors"][file,{name1,name2,…}] returns the tensors with the given names.

ResourceFunction["ImportSafetensors"][file,"Header"] returns the file header, which gives each tensor's data type, dimensions and byte offsets.
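The header and tensor forms follow the safetensors layout. A file starts with an 8-byte little-endian unsigned integer n. Next come n bytes of UTF-8 JSON, which map each tensor name to its "dtype", "shape" and "data_offsets". The raw tensor bytes follow, and the offsets count from the start of that data buffer.

The following is a minimal sketch of reading this layout directly with BinaryRead. It makes these assumptions:

- The helpers writeTinySafetensors, safetensorsHeader, readHeader and readTensorF32 are hypothetical.
- Only little-endian "F32" tensors are decoded.
- It does not reflect how ImportSafetensors itself is implemented.

```wolfram
(* Sketch of the safetensors layout; helper names are hypothetical, not ImportSafetensors internals:
   [8-byte little-endian unsigned n][n bytes of UTF-8 JSON header][raw tensor bytes] *)

(* Write a tiny file with one 2x3 "F32" tensor named "w" holding 1., ..., 6. *)
writeTinySafetensors[path_String] := Module[{bytes, stream},
  bytes = ToCharacterCode[ExportString[
     <|"w" -> <|"dtype" -> "F32", "shape" -> {2, 3}, "data_offsets" -> {0, 24}|>|>,
     "RawJSON"], "UTF-8"];
  stream = OpenWrite[path, BinaryFormat -> True];
  BinaryWrite[stream, Length[bytes], "UnsignedInteger64", ByteOrdering -> -1];
  BinaryWrite[stream, bytes, "Byte"];
  BinaryWrite[stream, Range[6.], "Real32", ByteOrdering -> -1];
  Close[stream];
  path
]

(* Read the header length n and the parsed JSON header from an open binary stream *)
readHeader[stream_] := Module[{n = BinaryRead[stream, "UnsignedInteger64", ByteOrdering -> -1]},
  {n, ImportString[FromCharacterCode[BinaryReadList[stream, "Byte", n], "UTF-8"], "RawJSON"]}
]

(* Header only: each tensor's "dtype", "shape" and "data_offsets" *)
safetensorsHeader[path_String] := Module[{stream = OpenRead[path, BinaryFormat -> True], result},
  result = readHeader[stream];
  Close[stream];
  Last[result]
]

(* One tensor: offsets are relative to the data buffer, which starts at byte 8 + n *)
readTensorF32[path_String, name_String] := Module[{stream, n, header, begin, end, data},
  stream = OpenRead[path, BinaryFormat -> True];
  {n, header} = readHeader[stream];
  If[header[name]["dtype"] =!= "F32", Close[stream]; Return[$Failed]];
  {begin, end} = header[name]["data_offsets"];
  SetStreamPosition[stream, 8 + n + begin];
  data = BinaryReadList[stream, "Real32", Quotient[end - begin, 4], ByteOrdering -> -1];
  Close[stream];
  ArrayReshape[data, header[name]["shape"]]
]

(* Usage: write the tiny file to a temporary location, then read it back *)
file = writeTinySafetensors[FileNameJoin[{$TemporaryDirectory, "tiny.safetensors"}]];
safetensorsHeader[file]
readTensorF32[file, "w"]
```

Because the offsets are stored in the header, a single tensor can be read by seeking past the header without touching the rest of the data buffer.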

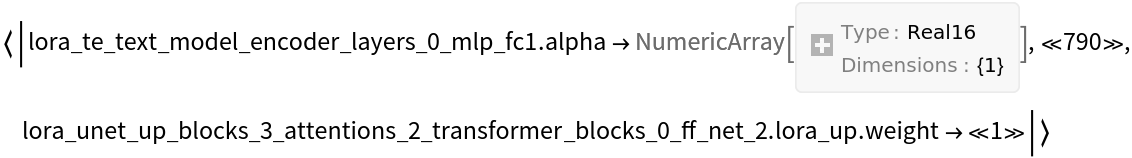

Import tensors from the wild:

| In[1]:= |

| Out[1]= |  |

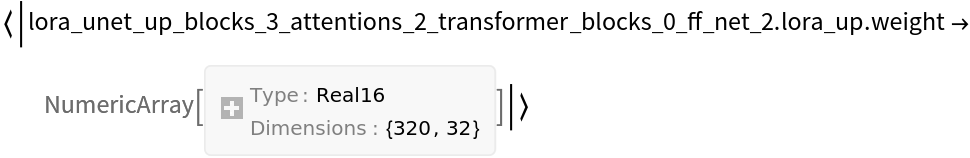

Import a single tensor:

| In[2]:= |

| Out[2]= |  |

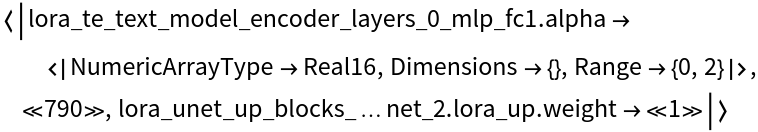

Import just a header:

| In[3]:= |

| Out[3]= |  |

Missing tensors are reported and ignored:

| In[4]:= |

| Out[4]= |
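The same "report and ignore" behavior can be mimicked on an ordinary Association.

In this sketch, lookupTensors is a hypothetical helper and not the resource function's code. It issues a message listing the absent names and keeps only the tensors that exist. Returning an Association for a list of names is an assumption of the sketch.

```wolfram
(* Hypothetical helper, not ImportSafetensors code: report absent names, keep the rest *)
lookupTensors::missing = "Tensors `1` were not found and are ignored.";
lookupTensors[tensors_Association, names_List] := With[{missing = Complement[names, Keys[tensors]]},
  If[missing =!= {}, Message[lookupTensors::missing, missing]];
  (* KeyTake silently drops keys that are not present *)
  KeyTake[tensors, names]
]

(* Usage with a toy association standing in for imported tensors *)
lookupTensors[<|"a" -> {1., 2.}, "b" -> {3., 4.}|>, {"a", "c"}]
```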

Import DreamShaper weights:

| In[5]:= |

Load the Stable Diffusion V1 parts that the imported tensors will modify:

| In[6]:= |

```wolfram
textEncoder = NetModel[{"CLIP Multi-domain Feature Extractor", "InputDomain" -> "Text", "Architecture" -> "ViT-L/14"}];
unet = NetModel["Stable Diffusion V1"];
decoder = NetModel[{"Stable Diffusion V1", "Part" -> "Decoder"}];
```

Define a function that replaces network arrays with imported tensors:

| In[7]:= |

```wolfram
(* mappings: array position in net -> tensor name (or list of names); Missing entries are skipped.
   Each looked-up tensor is reshaped to the dimensions of the array it replaces. *)
modifyNet[net_, mappings_, tensors_] := NetReplacePart[net, KeyValueMap[#1 -> ArrayReshape[If[ListQ[#2], Normal, Identity]@Lookup[tensors, #2],
    Dimensions[NetExtract[net, #1]]] &, DeleteMissing@mappings]]
```
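modifyNet takes an Association from array positions in net to tensor names. It handles two special cases:

- A value may be a list of names. The corresponding tensors are joined and then reshaped to the target array.
- Positions mapped to Missing[] are dropped by DeleteMissing and left unchanged.

A toy run on a single LinearLayer shows both cases. The tensor names and values are hypothetical, and plain lists stand in for imported tensors.

```wolfram
(* modifyNet as defined in In[7] *)
modifyNet[net_, mappings_, tensors_] := NetReplacePart[net,
  KeyValueMap[#1 -> ArrayReshape[If[ListQ[#2], Normal, Identity]@Lookup[tensors, #2],
      Dimensions[NetExtract[net, #1]]] &, DeleteMissing@mappings]]

(* Toy layer and stand-in tensors; names and values are hypothetical *)
net = NetInitialize@LinearLayer[2, "Input" -> 3];
tensors = <|"w.a" -> {1., 2., 3.}, "w.b" -> {4., 5., 6.}|>;
mappings = <|
   "Weights" -> {"w.a", "w.b"}, (* two length-3 tensors joined and reshaped to the 2x3 weight matrix *)
   "Biases" -> Missing[]        (* no counterpart: removed by DeleteMissing, array left unchanged *)
   |>;
modified = modifyNet[net, mappings, tensors];
Normal@NetExtract[modified, "Weights"]
```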

Create a modified text encoder, UNet and decoder:

| In[8]:= |

```wolfram
(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/e890392f-65b3-4f0f-9a82-975b986bcb09"]
```

Compare images generated with the vanilla and the modified weights. These evaluations may take several minutes unless a GPU is available:

| In[9]:= |

| Out[9]= |  |

| In[10]:= |

```wolfram
ResourceFunction["StableDiffusionSynthesize"]["spaceship", 3,
 "TextEncoder" -> modifiedTextEncoder, "UNet" -> modifiedUNet,
 "Decoder" -> modifiedDecoder, TargetDevice -> "CPU"]
```

| Out[10]= |  |

Add more detail using LoRA (Low-Rank Adaptation), which applies small low-rank adjustments to existing model weights. Define utility functions that match network arrays to LoRA tensors and merge them:

| In[11]:= |

```wolfram
(* Flatten 4D convolution kernels (out, in, 1, 1) to matrices (out, in) *)
toMatrix[tensor_] := If[ArrayDepth[tensor] > 2, ReshapeLayer[Dimensions[tensor][[;; 2]]][tensor], tensor]

(* Merge one LoRA update into a weight: weight + alpha/r up.down, where r = Dimensions[up][[2]] is the rank *)
LoRA[weight_, {alpha_, down_, up_}] := With[{scale = Dimensions[up][[2]]}, FunctionLayer[#weight + #alpha / scale #up . #down &][<|
    "weight" -> weight, "alpha" -> alpha, "down" -> toMatrix[down], "up" -> toMatrix[up]|>]]

(* Text encoder: the fused query/key/value projection is split into three blocks, each updated separately *)
computeLoRA[net_, name : {"transformer", id_, "self-attention", "input_project", "Net", "Weights"}, tensors_] := With[{array = ReshapeLayer[{3, Automatic, 768}] @ NetExtract[net, name]},
  CatenateLayer[] @ MapIndexed[
    LoRA[PartLayer[#2[[1]]] @ array, #1] &,
    Table[
      tensors[
        StringTemplate[
          "lora_te_text_model_encoder_layers_``_self_attn_``_proj.``"][
          id - 1, layer, lora]],
      {layer, {"q", "k", "v"}}, {lora, {"alpha", "lora_down.weight", "lora_up.weight"}}
    ]
  ]
]

(* Text encoder: attention output projection *)
computeLoRA[net_, name : {"transformer", id_, "self-attention", "output_project", "Net", "Weights"}, tensors_] :=
  computeLoRA[net, name, StringTemplate @ StringTemplate[
      "lora_te_text_model_encoder_layers_``_self_attn_out_proj.``"][
      id - 1, "``"], tensors]

(* Text encoder: MLP layers linear1/linear2 correspond to fc1/fc2 *)
computeLoRA[net_, name : {"transformer", id_, "mlp", linear : "linear1" | "linear2", "Net", "Weights"}, tensors_] :=
  computeLoRA[net, name, StringTemplate @ StringTemplate["lora_te_text_model_encoder_layers_``_mlp_``.``"][
      id - 1, StringReplace[linear, "linear" -> "fc"], "``"], tensors]

(* UNet: attention blocks in the up, down and middle sections *)
computeLoRA[net_, name : {block : "up" | "down" | "cross_mid", bid_Integer : -1, transformerId_String, rest : PatternSequence[___, "Weights"]}, tensors_] := With[{
    suffix = Replace[{rest}, {
      {"proj_in", "Weights"} -> "proj_in",
      {"proj_out", "Weights"} -> "proj_out",
      {"transformerBlock1", "ff", "Net", linear : "proj" | "linear", "Weights"} :> "transformer_blocks_0_ff_" <> Replace[linear, {"proj" -> "net_0_proj", "linear" -> "net_2"}],
      {"transformerBlock1", attn : "self-attention" | "cross-attention", layer : "query" | "value" | "key" | "output", "Net", "Weights"} :>
        StringTemplate["transformer_blocks_0_``_to_``"][ Replace[attn, {"self-attention" -> "attn1", "cross-attention" -> "attn2"}], Replace[layer, {"query" -> "q", "value" -> "v", "key" -> "k", "output" -> "out_0"}]
        ],
      _ :> Missing[name]
    }]
  },
  (* Arrays without a LoRA counterpart are returned as Missing *)
  If[MissingQ[suffix], Return[suffix]];
  computeLoRA[
    net, name,
    StringTemplate @ StringTemplate["lora_unet_````_attentions_``_" <> suffix <> ".``"][
      Replace[
        block, {"cross_mid" -> "mid_block", upOrDown_ :> upOrDown <> "_blocks_"}],
      If[bid > 0, bid - 1, ""],
      Interpreter["Integer"][StringTake[transformerId, -1]] - 1,
      "``"
    ],
    tensors
  ]
]

(* Generic case: fill the one-slot template with the three LoRA tensor suffixes and merge *)
computeLoRA[net_, name_, template_TemplateObject, tensors_] :=
  With[{array = NetExtract[net, name]}, LoRA[array, tensors[template[#]] & /@ {"alpha", "lora_down.weight", "lora_up.weight"}]]

(* Any other array position: no LoRA update *)
computeLoRA[_, name_, _] := Missing[name]
```
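The LoRA helper merges a low-rank update into an existing weight matrix W as W + (alpha/r) up . down. Here down is r×in, up is out×r, and r = Dimensions[up][[2]] is the LoRA rank. toMatrix first flattens 4D convolution kernels to matrices.

The same arithmetic on plain matrices, with hypothetical sizes and values, shows that the added term has rank at most r. loraMerge is a hypothetical name, not part of the example above.

```wolfram
(* Plain-matrix version of the merge done by LoRA in In[11]; sizes and values are hypothetical *)
loraMerge[w_?MatrixQ, alpha_?NumericQ, down_?MatrixQ, up_?MatrixQ] :=
  w + alpha/Dimensions[up][[2]] up . down (* W + (alpha/r) up.down, r = rank *)

SeedRandom[1];
w = RandomReal[1, {4, 5}];    (* existing weight: out = 4, in = 5 *)
down = RandomReal[1, {2, 5}]; (* r x in, with rank r = 2 *)
up = RandomReal[1, {4, 2}];   (* out x r *)
merged = loraMerge[w, 8., down, up];

(* The update merged - w equals (8./2) up.down and has rank at most 2 *)
MatrixRank[merged - w]
```

Storing only down and up, rather than a full out×in update, is what keeps LoRA files small.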

Import the LoRA weights and merge them into the text encoder and UNet using the utility functions:

| In[12]:= |

```wolfram
LoRATensors = ResourceFunction["ImportSafetensors"][
   "https://civitai.com/api/download/models/87153"];

textEncoderLoRA = DeleteMissing[
   AssociationMap[computeLoRA[modifiedTextEncoder, #, LoRATensors] &, Keys@Information[modifiedTextEncoder, "Arrays"]]];

unetLoRA = DeleteMissing[
   AssociationMap[computeLoRA[modifiedUNet, #, LoRATensors] &, Keys@Information[modifiedUNet, "Arrays"]]];

modifiedTextEncoder2 = NetReplacePart[modifiedTextEncoder, textEncoderLoRA];

modifiedUNet2 = NetReplacePart[modifiedUNet, unetLoRA];
```
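computeLoRA builds the LoRA tensor names it looks up in two stages:

1. A StringTemplate is filled with the layer index and the literal string "``".
2. The result is wrapped in StringTemplate again. This gives a one-slot template, which the generic computeLoRA definition fills with "alpha", "lora_down.weight" and "lora_up.weight".

The 1-based transformer index id becomes the 0-based index id - 1 in the key.

The sketch below reproduces this step for the text encoder's attention output projection and MLP layers. outProjTemplate and mlpTemplate are hypothetical wrappers around expressions taken from In[11].

```wolfram
(* Hypothetical wrappers around the template expressions in computeLoRA (In[11]) *)

(* Attention output projection of text-encoder transformer block id (1-based) *)
outProjTemplate[id_Integer] := StringTemplate @ StringTemplate[
    "lora_te_text_model_encoder_layers_``_self_attn_out_proj.``"][id - 1, "``"]

(* MLP layer "linear1" or "linear2", renamed to "fc1" or "fc2" in the LoRA key *)
mlpTemplate[id_Integer, linear : "linear1" | "linear2"] := StringTemplate @ StringTemplate[
    "lora_te_text_model_encoder_layers_``_mlp_``.``"][id - 1, StringReplace[linear, "linear" -> "fc"], "``"]

(* Fill the remaining slot with the three LoRA tensor suffixes *)
outProjTemplate[3] /@ {"alpha", "lora_down.weight", "lora_up.weight"}

(* "lora_te_text_model_encoder_layers_2_mlp_fc1.alpha" *)
mlpTemplate[3, "linear1"]["alpha"]
```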

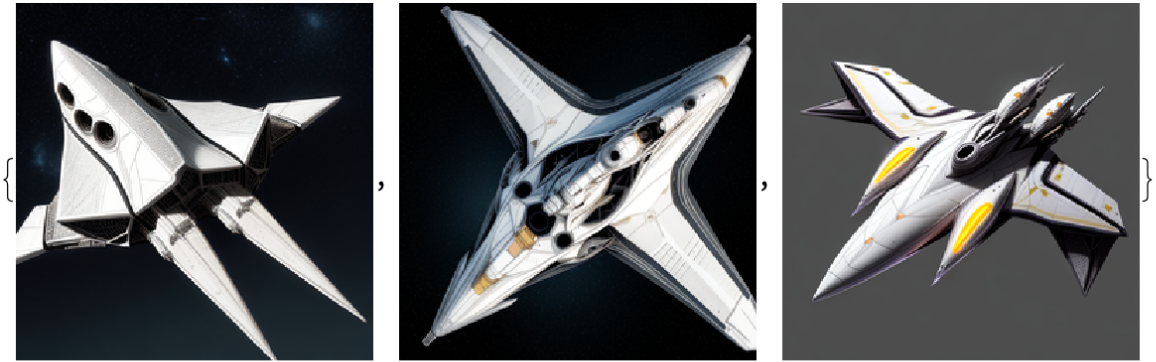

Generate new images with the LoRA-modified weights:

| In[13]:= |

```wolfram
ResourceFunction["StableDiffusionSynthesize"]["spaceship", 3,
 "TextEncoder" -> modifiedTextEncoder2, "UNet" -> modifiedUNet2,
 "Decoder" -> modifiedDecoder, TargetDevice -> "CPU"]
```

| Out[13]= |  |

Wolfram Language 13.0 (December 2021) or above

This work is licensed under a Creative Commons Attribution 4.0 International License