Wolfram Function Repository

Instant-use add-on functions for the Wolfram Language

Function Repository Resource:

Render an image using the DeepDream-β algorithm

ResourceFunction["DeepDreamBeta"][net, image] renders image using the DeepDream-β algorithm.

ResourceFunction["DeepDreamBeta"][net, image, step] renders image with an iteration depth of step.

| Option | Default | Description |
| --- | --- | --- |
| "Eyes" | 2 | network activation depth |
| "Activation" | Identity | pre-activation function applied to the image |
| "StepSize" | 1 | step size of each pattern overlay iteration |
| Resampling | "Cubic" | resampling method, as in ImageResize |
| TargetDevice | "CPU" | device used for evaluation, as in NetChain |
| WorkingPrecision | "Real32" | numeric precision of evaluation, as in NetChain |
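
As a rough sketch of how the three-argument form and an option might be combined: the network, the test image, the step count of 5 and the "Linear" resampling choice are all illustrative assumptions, not values taken from this page.

```wolfram
(* Assumption: an ImageNet classifier from the Neural Net Repository serves as the dreamer *)
net = NetModel["VGG-16 Trained on ImageNet Competition Data"];

(* Assumption: any Image can be used; this is a standard test image *)
img = ExampleData[{"TestImage", "House"}];

(* Three-argument form with a hypothetical iteration depth of 5,
   plus a non-default resampling method *)
ResourceFunction["DeepDreamBeta"][net, img, 5, Resampling -> "Linear"]
```

Options follow the positional arguments as rules, in the usual Wolfram Language style.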

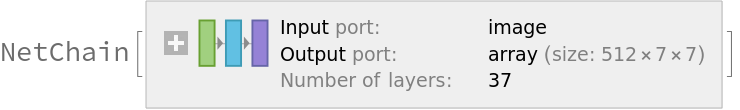

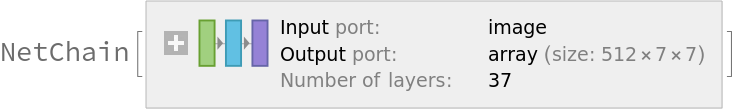

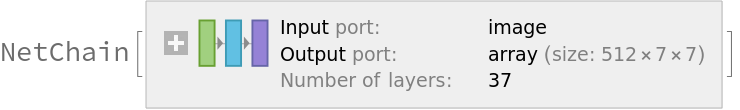

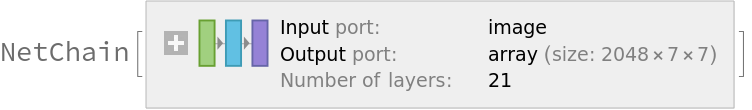

Take a neural network as the dreamer:

| In[1]:= |

| Out[1]= |  |

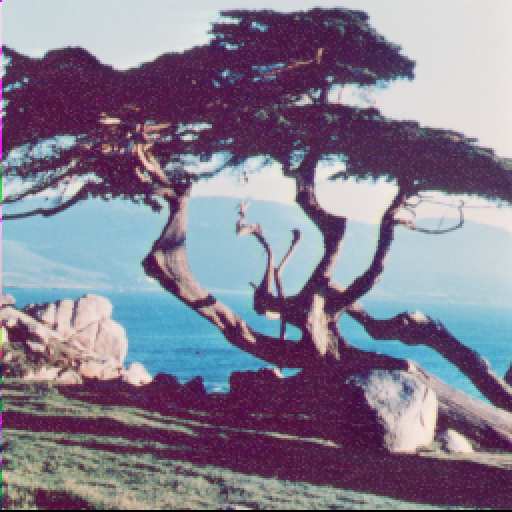

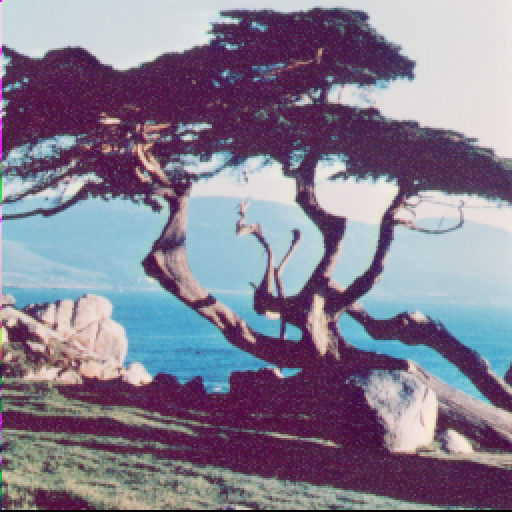

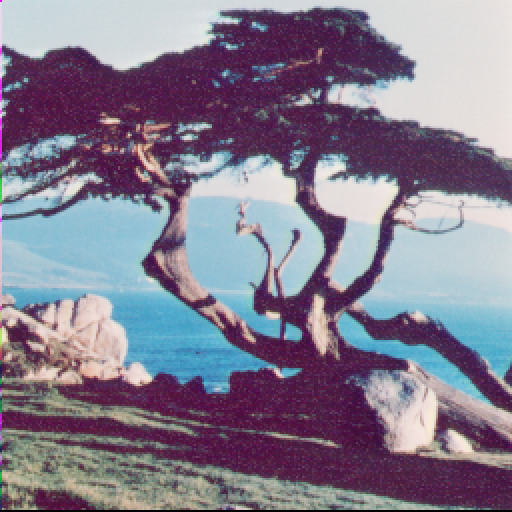

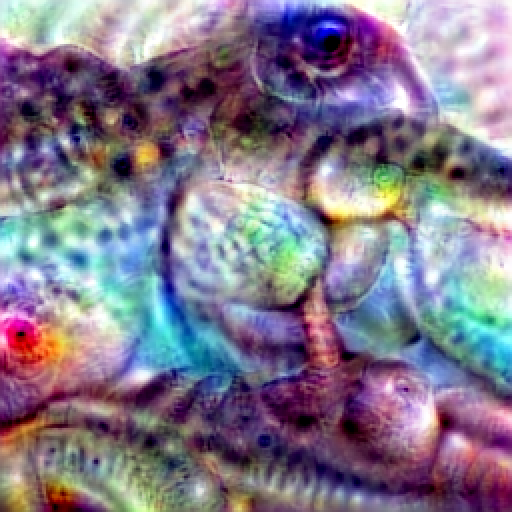

Start with an image:

| In[2]:= |

| Out[2]= |  |

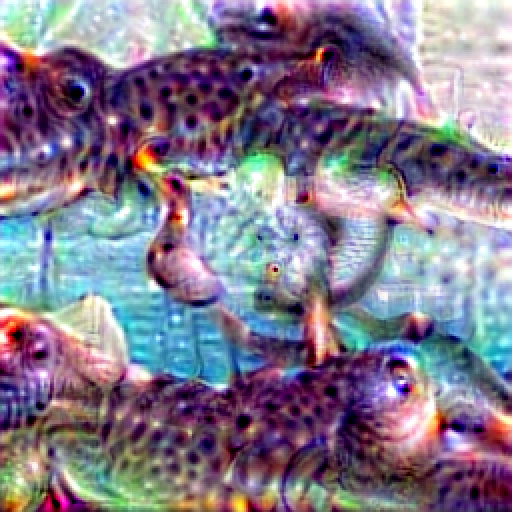

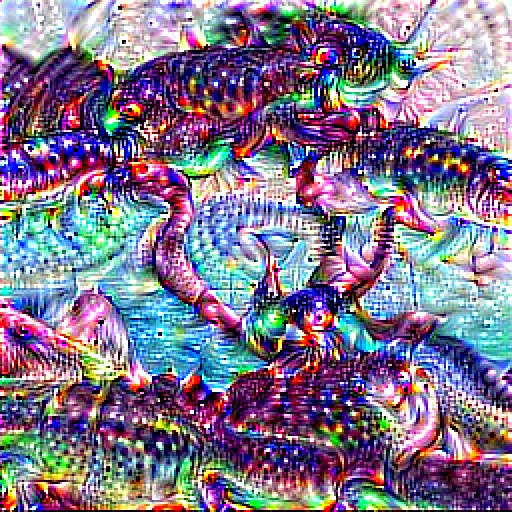

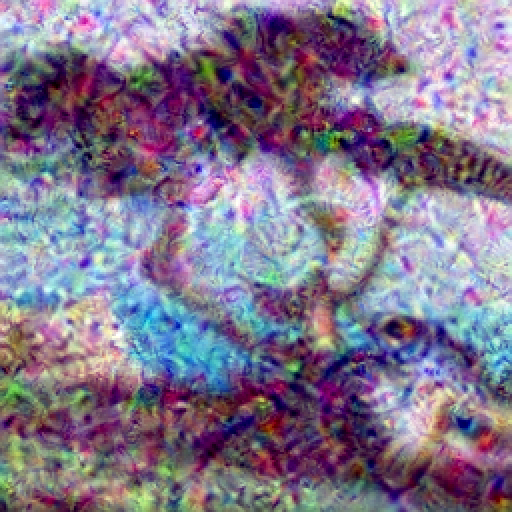

Now process it with DeepDreamBeta:

| In[3]:= |

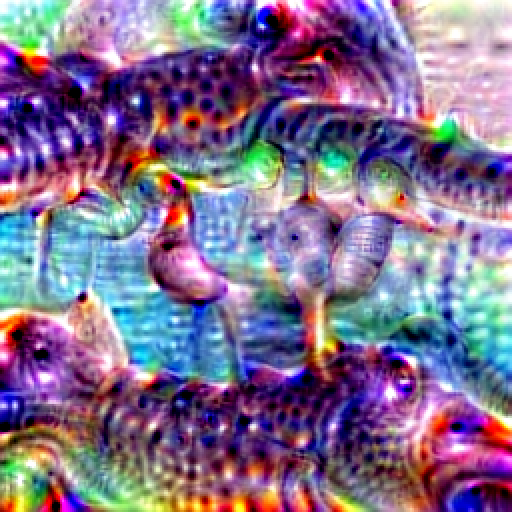

| Out[3]= |  |

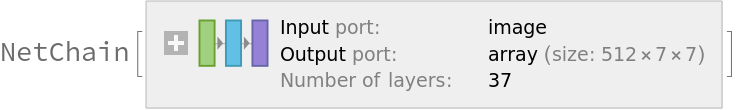
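
The original input cells are not reproduced here. A minimal sketch of the three steps above might look like the following; the specific network and image are assumptions chosen only for illustration.

```wolfram
(* Step 1: take a neural network as the dreamer
   (assumption: a pretrained, non-residual image classifier) *)
net = NetModel["VGG-16 Trained on ImageNet Competition Data"];

(* Step 2: start with an image (assumption: a built-in test image) *)
img = ExampleData[{"TestImage", "House"}];

(* Step 3: process it with DeepDreamBeta using default options *)
ResourceFunction["DeepDreamBeta"][net, img]
```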

Take a neural network as the dreamer:

| In[4]:= |

| Out[4]= |  |

Start with an image:

| In[5]:= |

| Out[5]= |  |

Use GPU acceleration:

| In[6]:= |

| Out[6]= |  |
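
A sketch of what the GPU call might look like, assuming the same illustrative network and image as a plain CPU run. TargetDevice -> "GPU" generally requires a supported GPU and drivers, so this is expected to fail on machines without one.

```wolfram
(* Assumption: illustrative dreamer network and test image *)
net = NetModel["VGG-16 Trained on ImageNet Competition Data"];
img = ExampleData[{"TestImage", "House"}];

(* Evaluate the network on the GPU instead of the default "CPU" *)
ResourceFunction["DeepDreamBeta"][net, img, TargetDevice -> "GPU"]
```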

Take a neural network as the dreamer:

| In[7]:= |

| Out[7]= |  |

Start with an image:

| In[8]:= |

| Out[8]= |  |

Change the pre-activation function:

| In[9]:= |

| Out[9]= |  |
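
One possible way to set the pre-activation function is sketched below. It assumes that "Activation" accepts a function that takes an Image and returns an Image; this page does not state whether the function receives an Image or a numeric array, so treat the choice of Blur as purely hypothetical.

```wolfram
(* Assumption: illustrative dreamer network and test image *)
net = NetModel["VGG-16 Trained on ImageNet Competition Data"];
img = ExampleData[{"TestImage", "House"}];

(* Hypothetical pre-activation: slightly blur the image before dreaming.
   The default is Identity, which leaves the image unchanged. *)
ResourceFunction["DeepDreamBeta"][net, img, "Activation" -> (Blur[#, 2] &)]
```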

Take a neural network as the dreamer:

| In[10]:= |

| Out[10]= |  |

Start with an image:

| In[11]:= |

| Out[11]= |  |

"Eyes" controls the size of the receptive field:

| In[12]:= |

| Out[12]= |  |

A larger "Eyes" value gives a greater departure from the original picture:

| In[13]:= |

| Out[13]= |  |
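
The comparison could be sketched as follows. The values 1 and 4 are assumptions picked to bracket the default of 2; the valid range for "Eyes" is not given on this page.

```wolfram
(* Assumption: illustrative dreamer network and test image *)
net = NetModel["VGG-16 Trained on ImageNet Competition Data"];
img = ExampleData[{"TestImage", "House"}];

(* Render the same image with a small and a large "Eyes" value
   and collect the two results in a list for side-by-side viewing *)
Table[
  ResourceFunction["DeepDreamBeta"][net, img, "Eyes" -> eyes],
  {eyes, {1, 4}}
]
```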

Take a neural network as the dreamer:

| In[14]:= |

| Out[14]= |  |

Start with an image:

| In[15]:= |

| Out[15]= |  |

The smaller the "StepSize", the smoother the final result will be:

| In[16]:= |

| Out[16]= |  |

| In[17]:= |

| Out[17]= |  |
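
A sketch of such a comparison, with the step sizes 0.25 and 2 chosen as hypothetical values on either side of the default of 1:

```wolfram
(* Assumption: illustrative dreamer network and test image *)
net = NetModel["VGG-16 Trained on ImageNet Competition Data"];
img = ExampleData[{"TestImage", "House"}];

(* Smaller step size: expected to give a smoother result *)
smooth = ResourceFunction["DeepDreamBeta"][net, img, "StepSize" -> 0.25];

(* Larger step size: expected to give a rougher result *)
rough = ResourceFunction["DeepDreamBeta"][net, img, "StepSize" -> 2];

{smooth, rough}
```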

Use non-residual networks for better results.

Abstract features are difficult to activate in a residual network:

| In[18]:= |

| Out[18]= |  |
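
To reproduce this kind of comparison, one might run the same image through a residual network. The ResNet-50 model below is an assumption chosen as a typical residual architecture, not necessarily the network used in the original example.

```wolfram
(* Assumption: ResNet-50 stands in for a generic residual network *)
resNet = NetModel["ResNet-50 Trained on ImageNet Competition Data"];

(* Assumption: a built-in test image *)
img = ExampleData[{"TestImage", "House"}];

(* Same call as for a non-residual dreamer; only the network differs *)
ResourceFunction["DeepDreamBeta"][resNet, img]
```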

Generate messy textures:

| In[19]:= |

| Out[19]= |  |

This work is licensed under a Creative Commons Attribution 4.0 International License