Details and Options

A text completion question, or cloze question, hides randomly chosen words in a text passage and asks the user to fill in the blanks. The format can be used in a variety of assessment tasks; one common use is to assess the proficiency of a student learning a second language. A cloze test can offer multiple choices for each blank, which leads the user to rely more on the forms of the words and their parts of speech than on their meanings. Without choices, a cloze test requires the user to rely more on the semantic meaning of the passage. Free-form cloze answers typically need to be hand-scored to allow for synonyms and spelling variations.
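The core idea of a cloze question, blanking out randomly chosen words and keeping them as the answer key, can be sketched in a few lines. This is a minimal illustration only, not how ResourceFunction["RandomTextCompletionQuestion"] is implemented; the helper name clozeSketch and the "_____" blank marker are hypothetical choices made for this example.

```wolfram
(* Hypothetical helper, not part of the resource function:
   blank out n randomly chosen words of a passage *)
clozeSketch[text_String, n_Integer?Positive] :=
 Module[{words, positions, blanked},
  words = StringSplit[text];                                (* split the passage on whitespace *)
  positions = Sort[RandomSample[Range[Length[words]], n]]; (* n distinct word indices, in reading order *)
  blanked = ReplacePart[words, Thread[positions -> "_____"]];
  {StringRiffle[blanked, " "], words[[positions]]}          (* {passage with blanks, answers} *)
 ]

SeedRandom[42];
clozeSketch["The quick brown fox jumps over the lazy dog", 2]
```

Because the sketch splits on whitespace only, punctuation stays attached to any word it blanks, and n must not exceed the number of words in the passage.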

By default, ResourceFunction["RandomTextCompletionQuestion"] returns a list of two items: a QuestionObject and a list of the answers.

ResourceFunction["RandomTextCompletionQuestion"] can take the following options:

| Option | Default | Description |
| --- | --- | --- |
| "DistractorType" | "Inflections" | how to choose the distractors |
| "QuestionType" | "SelectCompletion" | the form of the output |

Possible values for the "DistractorType" option include the following:

| Value | Description |
| --- | --- |
| "Inflections" | (default) distractors try to match the parts of speech and the inflectional endings of the answers |
| "PartsOfSpeech" | distractors match the parts of speech of the answers |
| "Random" | distractors are random words |
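The three distractor strategies differ only in how the candidate pool is filtered. The sketch below mimics them against a tiny, hypothetical lexicon that maps words to parts of speech; matching the last two letters is a crude stand-in for real inflection matching, and the names lexicon and pickDistractors are illustrative, not part of the resource function.

```wolfram
(* Hypothetical lexicon standing in for a real dictionary: word -> part of speech *)
lexicon = <|"running" -> "Verb", "jumped" -> "Verb", "eats" -> "Verb",
   "singing" -> "Verb", "cats" -> "Noun", "table" -> "Noun",
   "rivers" -> "Noun", "quickly" -> "Adverb"|>;

(* Hypothetical helper: pick up to k distractors for an answer using one of three strategies *)
pickDistractors[answer_String, k_Integer, type_String] :=
 Module[{pool = DeleteCases[Keys[lexicon], answer]},
  pool = Switch[type,
    "Random", pool,                                          (* any other word *)
    "PartsOfSpeech", Select[pool, lexicon[#] === lexicon[answer] &],
    "Inflections", Select[pool, lexicon[#] === lexicon[answer] &&
        StringTake[#, -2] === StringTake[answer, -2] &],     (* same part of speech and same ending *)
    _, {}];
  RandomSample[pool, Min[k, Length[pool]]]]

pickDistractors["running", 3, "Inflections"]
```

With this lexicon, the "Inflections" filter for "running" keeps only "singing", while "PartsOfSpeech" would also allow "jumped" and "eats".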

Possible values for the "QuestionType" option include the following:

| Value | Description |
| --- | --- |
| "SelectCompletion" | (default) blanks in the passage are drop-down menus, each with nine choices |
| {"SelectCompletion", n} | blanks are drop-down menus, each with n choices (1 ≤ n ≤ 9) |
| "TextCompletion" | blanks in the passage are text boxes |
| "Parts" | output is a list {q, a, {d1, d2, …}}, where the string q is the passage with blanks, a is the list of answers, and each di is a list of distractors |
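The "Parts" output makes it possible to build your own presentation. As a minimal sketch, the helper below turns a {q, a, {d1, d2, …}} list into one shuffled choice list per blank, combining each answer with up to n - 1 of its distractors. The mock data, the "_____" blank marker, and the assumption that di belongs to the i-th blank are illustrative; menuChoices is a hypothetical name.

```wolfram
(* Mock data in the {q, a, {d1, d2, ...}} shape; a real value would come from
   ResourceFunction["RandomTextCompletionQuestion"][2, "QuestionType" -> "Parts"] *)
parts = {"The _____ sat on the _____.", {"cat", "mat"},
   {{"dog", "bird", "fox"}, {"rug", "bed", "chair"}}};

(* Hypothetical helper: for each blank, put the answer together with up to n - 1
   of its distractors and shuffle, mimicking a menu with n choices *)
menuChoices[{_String, answers_List, distractors_List}, n_Integer] :=
 MapThread[RandomSample[Prepend[Take[#2, UpTo[n - 1]], #1]] &, {answers, distractors}]

menuChoices[parts, 3]
```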

When ResourceFunction["RandomTextCompletionQuestion"] produces a QuestionObject, the assessment results report which words the user chose, whether each answer is correct, and the overall score for the question as a fraction.
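When each blank is scored separately, the fractional score is the mean of the per-blank scores; the example result at the end of this section shows per-blank scores {0, 1} and an overall score of 1/2. The sketch below reproduces that arithmetic with hypothetical answers, assuming an exact, case-sensitive string match per blank.

```wolfram
(* Hypothetical answer key and user responses *)
key = {"river", "bank"};
given = {"lake", "bank"};

(* 1 for an exact string match, 0 otherwise *)
blankScores = Boole[MapThread[SameQ, {given, key}]];  (* {0, 1} *)

(* Mean of integers is an exact rational, so the score stays a fraction *)
score = Mean[blankScores]  (* 1/2 *)
```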

ResourceFunction["RandomTextCompletionQuestion"] takes its passages from English-language Wikipedia articles, so calling it consumes service credits.

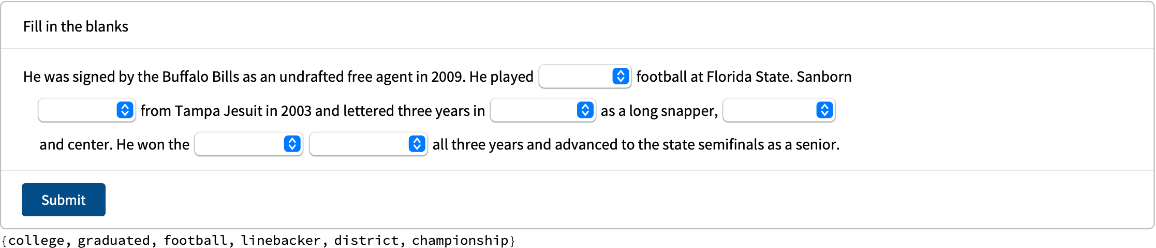

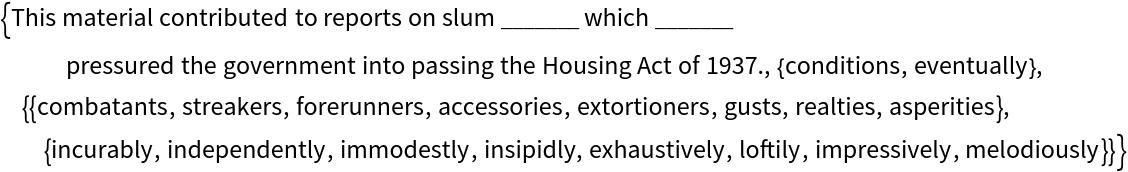

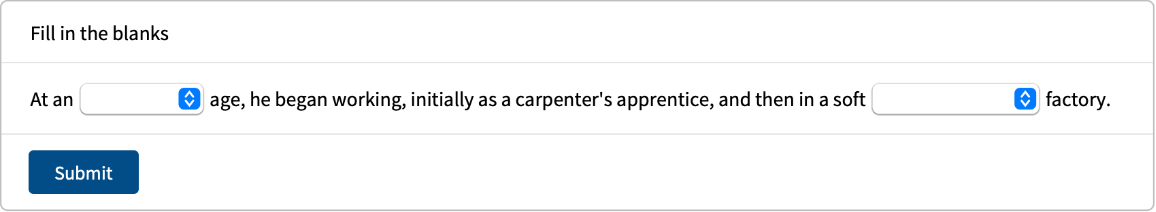

```wolfram
(* Get the question as parts: {passage, answers, distractors} *)
cloze = ResourceFunction["RandomTextCompletionQuestion"][2, "QuestionType" -> "Parts"];
prompt = "What two words might fill in the blanks?";
answers = cloze[[2]];
passage = cloze[[1]];
(* Show the prompt above the passage, set in a larger serif font *)
StringForm["`1`\n\n`2`", prompt, Style[passage, 18, FontFamily -> "Times New Roman"]] // Framed
```

![Rendered example](https://www.wolframcloud.com/obj/resourcesystem/images/83b/83b9f700-21f5-412f-af29-66f50bc39e92/32d3ce316687ce04.png)

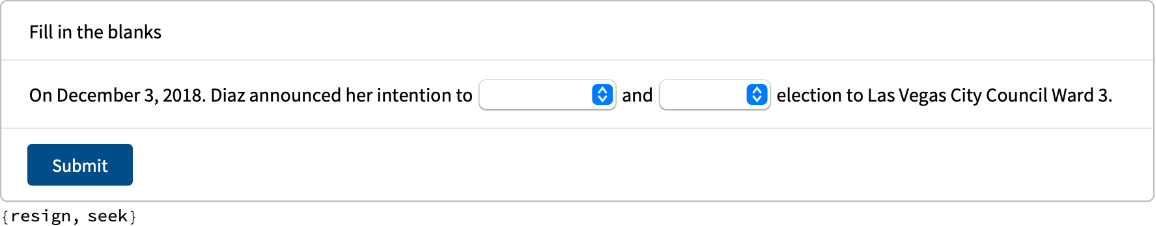

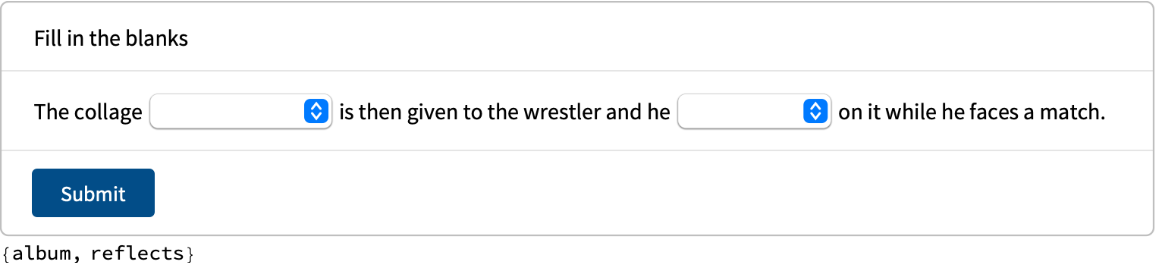

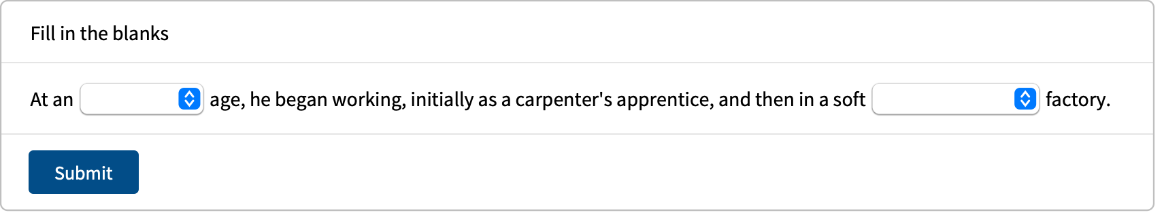

```wolfram
(* Default output: {QuestionObject, answers} with drop-down blanks *)
cloze = ResourceFunction["RandomTextCompletionQuestion"][2];
answers = cloze[[2]];
question = cloze[[1]]
```

![Rendered example](https://www.wolframcloud.com/obj/resourcesystem/images/83b/83b9f700-21f5-412f-af29-66f50bc39e92/1bc2850d337fce64.png)

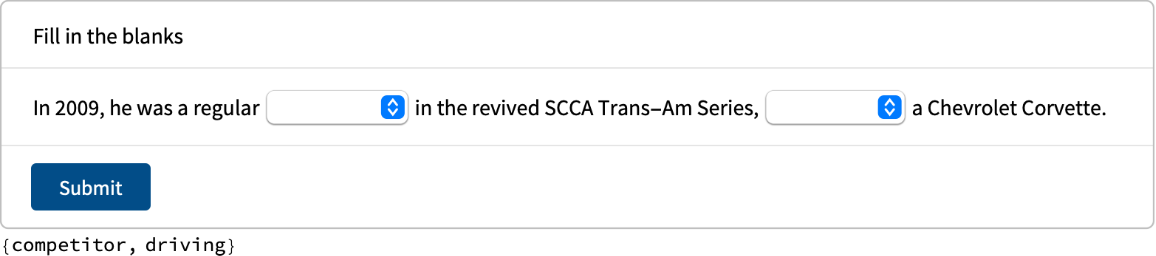

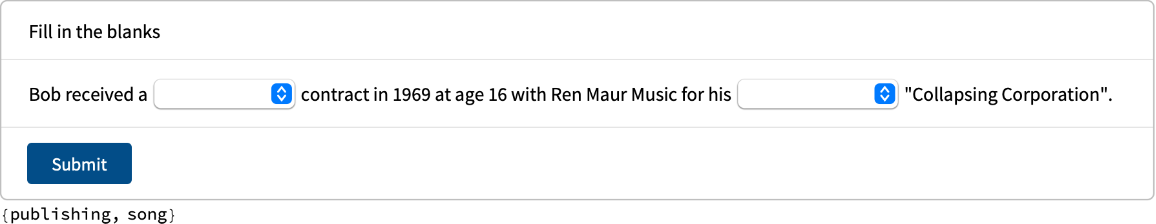

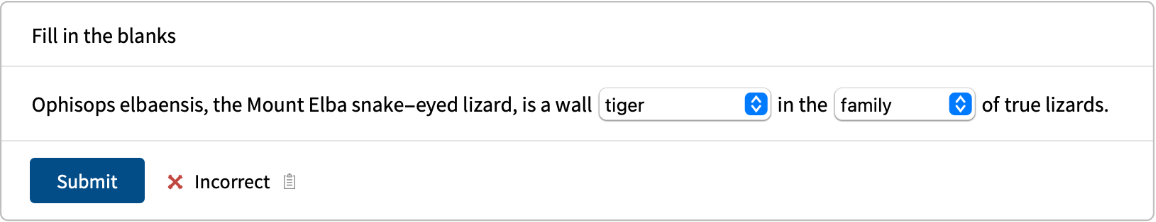

```wolfram
(* Blanks rendered as text boxes instead of drop-down menus *)
cloze = ResourceFunction["RandomTextCompletionQuestion"][2, "QuestionType" -> "TextCompletion"];
answers = cloze[[2]];
question = cloze[[1]]
```

![Rendered example](https://www.wolframcloud.com/obj/resourcesystem/images/83b/83b9f700-21f5-412f-af29-66f50bc39e92/181783ac78eb6f01.png)

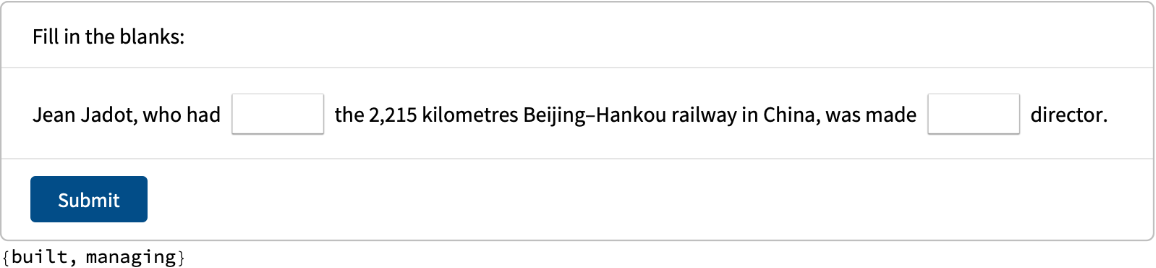

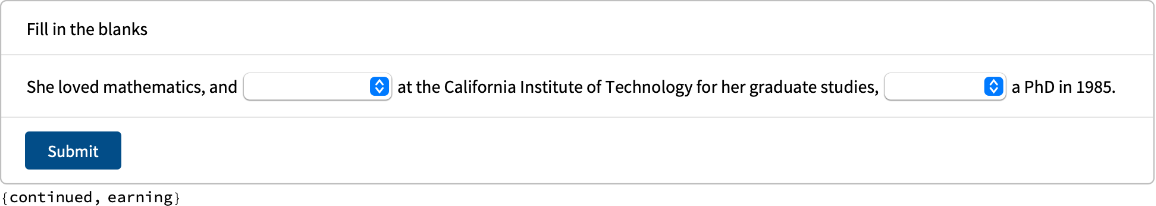

```wolfram
(* Create a question to answer interactively *)
cloze = ResourceFunction["RandomTextCompletionQuestion"][2];
answers = cloze[[2]];
question = cloze[[1]]
```

![Rendered example](https://www.wolframcloud.com/obj/resourcesystem/images/83b/83b9f700-21f5-412f-af29-66f50bc39e92/70e9fe0b5d5b1b08.png)

```wolfram
(* Query the score and the given answers from the assessment result *)
AssessmentResultObject[<|
   "Score" -> Rational[1, 2],
   "AnswerCorrect" -> False,
   "GivenAnswer" -> {"tiger", "family"},
   "Explanation" -> {},
   "ElementInformation" -> <|"Scores" -> {0, 1}, "AnswerCorrect" -> {False, True}|>,
   "Timestamp" -> DateObject[{2025, 3, 17, 13, 55, 35.636829}, "Instant", "Gregorian", -7.],
   "AssessmentSettings" -> {"ListAssessment" -> "SeparatelyScoreElements"},
   "AnswerComparisonMethod" -> "String",
   "SubmissionCount" -> 1
   |>][{"Score", "GivenAnswer"}]
```

![Rendered example](https://www.wolframcloud.com/obj/resourcesystem/images/83b/83b9f700-21f5-412f-af29-66f50bc39e92/6044bccfa5d23904.png)