Wolfram Function Repository

Instant-use add-on functions for the Wolfram Language

Function Repository Resource:

Generate a sequence of values using the Metropolis–Hastings Markov chain Monte Carlo method

ResourceFunction["MetropolisHastingsSequence"][f,x,n] generates n samples using probability function f with independent variable x. | |

ResourceFunction["MetropolisHastingsSequence"][f,{x,x0},n] generates samples using the initial value x0. | |

ResourceFunction["MetropolisHastingsSequence"][f,x,{n,burn}] discards the first burn samples. | |

ResourceFunction["MetropolisHastingsSequence"][f, x, {n, burn, thin}] selects n elements in steps of thin. | |

ResourceFunction["MetropolisHastingsSequence"][f, {{x, x0},{y, y0},…}, …] generates samples for the multivariate function f. |

| Method | Automatic | jump function is normally distributed |

| "Scale" | 1 | variance of the normal distribution |

| "JumpDistribution" | "Normal" | accepts a symmetric parameter‐dependent distribution, if Method→"CustomSymmetricJump" |

| WorkingPrecision | MachinePrecision | precision used in internal computations |

| "ShowRejectionRate" | False | controls the appearance of the rejection rate in the output |

| "LogProbability" | False | f represents a probability function |

Approximate a CauchyDistribution:

| In[1]:= |

|

| Out[1]= |

|

Approximate a CauchyDistribution using the specified starting point x0, burn and thin values:

| In[2]:= |

|

| Out[2]= |

|

Approximate a MultivariateTDistribution in 2D:

| In[3]:= |

|

| Out[3]= |

|

The default Method is Automatic, in which case the jump function is a NormalDistribution (or MultinormalDistribution):

| In[4]:= |

![(SeedRandom[1234]; ResourceFunction["MetropolisHastingsSequence"][E^(-x^2/

2), {x, 0}, {100, 100, 10}, Method -> Automatic]) == (SeedRandom[

1234]; ResourceFunction["MetropolisHastingsSequence"][E^(-x^2/

2), {x, 0}, {100, 100, 10}, Method -> "CustomSymmetricJump", "JumpDistribution" -> (NormalDistribution[#, 1] &)])](https://www.wolframcloud.com/obj/resourcesystem/images/189/189a6b3c-6587-45e1-b843-d946422b03d1/6934f8f82085ee1b.png)

|

| Out[4]= |

|

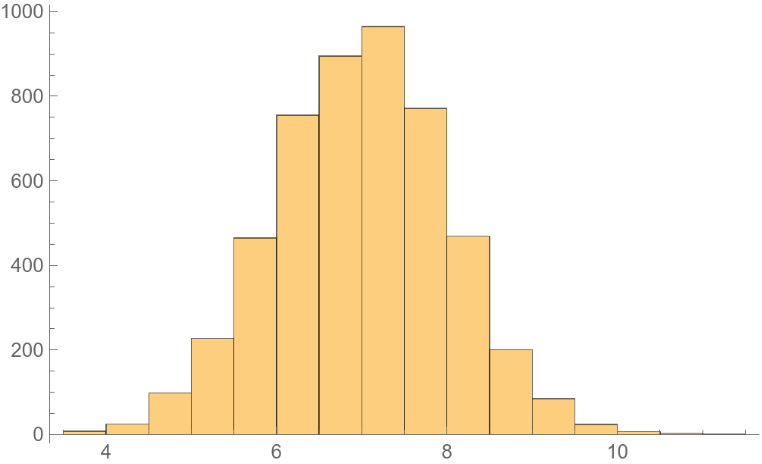

If f has multiple peaks, the algorithm can get stuck in the one nearest to the starting point:

| In[5]:= |

|

| Out[5]= |

|

Use a larger "Scale" to explore the whole landscape:

| In[6]:= |

![ResourceFunction["MetropolisHastingsSequence"][

E^(-(x - 7)^2/2) + E^(-(x + 7)^2/2), {x, 1}, {5000, 100, 10}, "Scale" -> 5] // Histogram](https://www.wolframcloud.com/obj/resourcesystem/images/189/189a6b3c-6587-45e1-b843-d946422b03d1/2da141ce9b066074.png)

|

| Out[6]= |

|

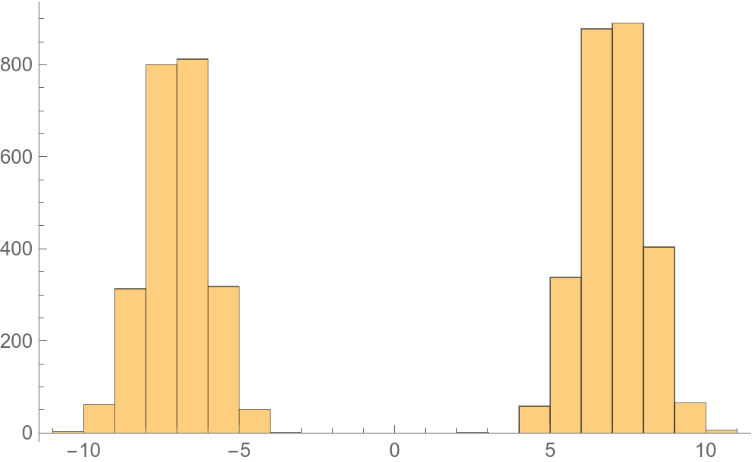

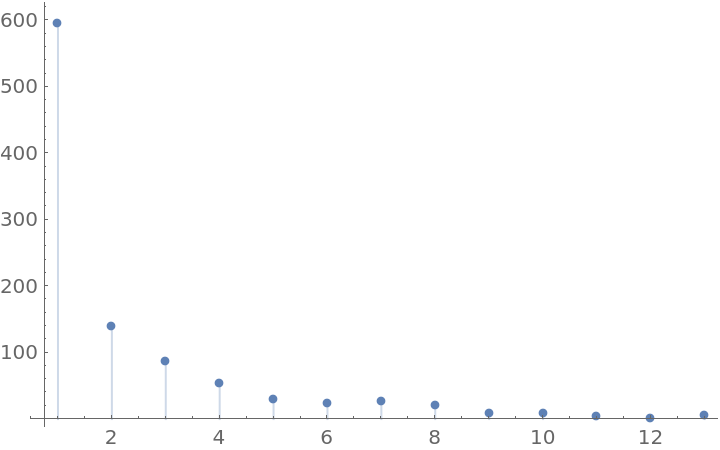

Use a discrete jump distribution to approximate discrete distributions:

| In[7]:= |

|

| In[8]:= |

|

| In[9]:= |

|

| In[10]:= |

|

| Out[10]= |

|

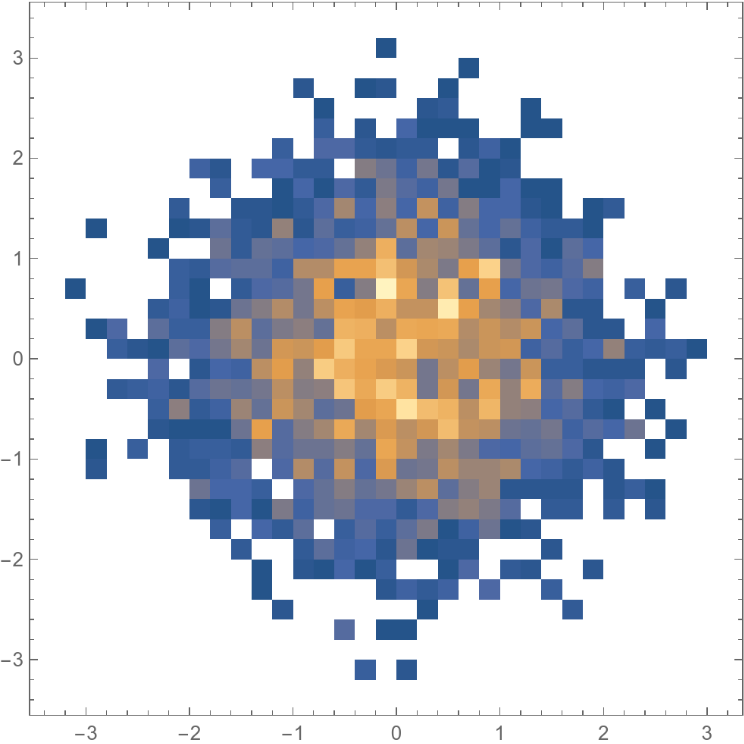

Multivariate example:

| In[11]:= |

![ResourceFunction["MetropolisHastingsSequence"][

E^(-((x^2 + y^2)/2)), {{x, 0}, {y, 0}}, {5000, 100, 1}, Method -> "CustomSymmetricJump", "JumpDistribution" -> (MultivariateTDistribution[#, IdentityMatrix[2], 3] &)] // DensityHistogram](https://www.wolframcloud.com/obj/resourcesystem/images/189/189a6b3c-6587-45e1-b843-d946422b03d1/71d5a3baa1cff051.png)

|

| Out[11]= |

|

Use WorkingPrecision to get higher precision in parameter estimates:

| In[12]:= |

|

| Out[12]= |

|

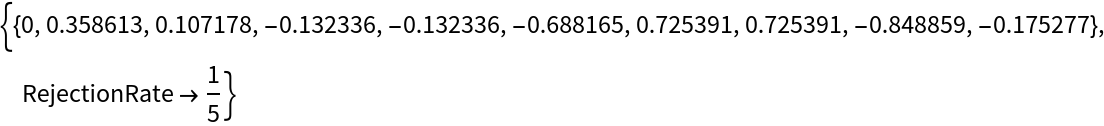

Use "ShowRejectionRate" to see the rejection rate of the process:

| In[13]:= |

|

| Out[13]= |

|

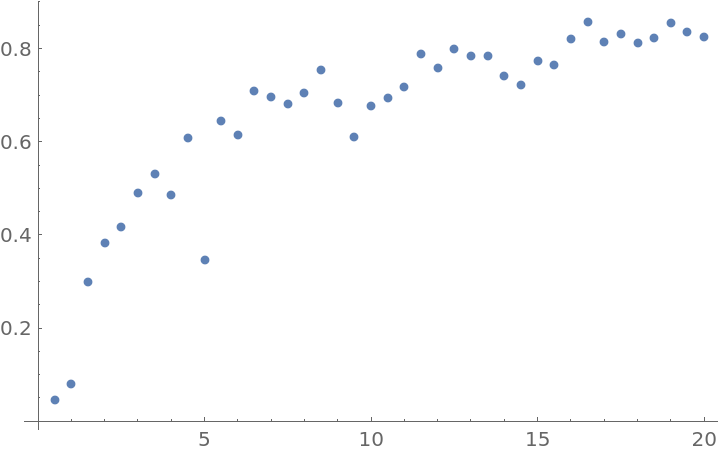

"ShowRejectionRate" can be used to select an optimal "Scale" for the method ("RejectionRate" is usually advised to be between 50% and 80% [see References]):

| In[14]:= |

![Table[{\[Sigma], "RejectionRate" /. Last[ResourceFunction["MetropolisHastingsSequence"][

1/(1 + x^2), {x, 0}, {100, 0, 10}, "ShowRejectionRate" -> True, "Scale" -> \[Sigma]]]}, {\[Sigma], 0.5, 20, 0.5}] // ListPlot](https://www.wolframcloud.com/obj/resourcesystem/images/189/189a6b3c-6587-45e1-b843-d946422b03d1/79d20828cafab3a8.png)

|

| Out[14]= |

|

Generate sample data using BernoulliDistribution:

| In[16]:= |

|

Define a prior distribution for the parameter (in this case, the Jeffreys prior):

| In[17]:= |

|

Use Bayes's rule and multiply the Likelihood with the prior to get an f function, which is proportional to the posterior distribution of the parameter:

| In[18]:= |

|

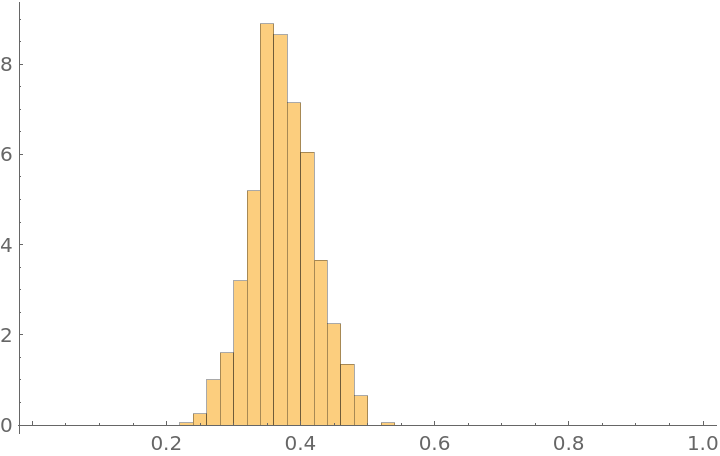

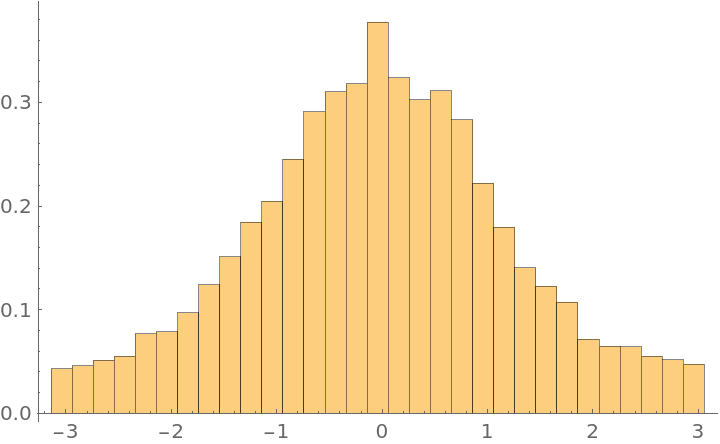

Use MetropolisHastingsSequence to generate one thousand samples of the θ parameter and create a Histogram:

| In[19]:= |

![Histogram[

ResourceFunction["MetropolisHastingsSequence"][

f, {\[Theta], 1/2}, {1000, 100, 10}], {0, 1, Automatic}, "ProbabilityDensity"]](https://www.wolframcloud.com/obj/resourcesystem/images/189/189a6b3c-6587-45e1-b843-d946422b03d1/3ca3c660dc6bd439.png)

|

| Out[19]= |

|

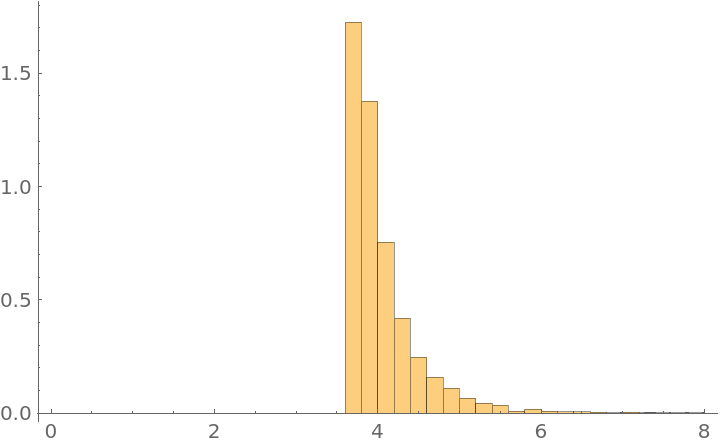

Infer the parameter of a UniformDistribution (also know as the German tank problem). First, generate a small sample of data using UniformDistribution:

| In[20]:= |

|

Define a prior distribution for the parameter (in this case, the non-normalizable Jeffreys prior):

| In[21]:= |

|

Use Bayes's rule and multiply the Likelihood with the prior to get a function that is proportional to the posterior distribution of the parameter:

| In[22]:= |

|

Use MetropolisHastingsSequence to generate one thousand samples of the θ parameter and generate a Histogram:

| In[23]:= |

![Histogram[

ResourceFunction["MetropolisHastingsSequence"][

f, {\[Theta], 8}, {5000, 100, 10}], {0, 8, Automatic}, "ProbabilityDensity"]](https://www.wolframcloud.com/obj/resourcesystem/images/189/189a6b3c-6587-45e1-b843-d946422b03d1/13a6cb6471760638.png)

|

| Out[23]= |

|

Generate sample data using NormalDistribution:

| In[24]:= |

|

Define a prior distribution for the parameter (in this case, the scale-free non-normalizable prior):

| In[25]:= |

|

Use Bayes's rule and multiply the Likelihood with the prior to get a function that is proportional to the posterior distribution of the parameter:

| In[26]:= |

|

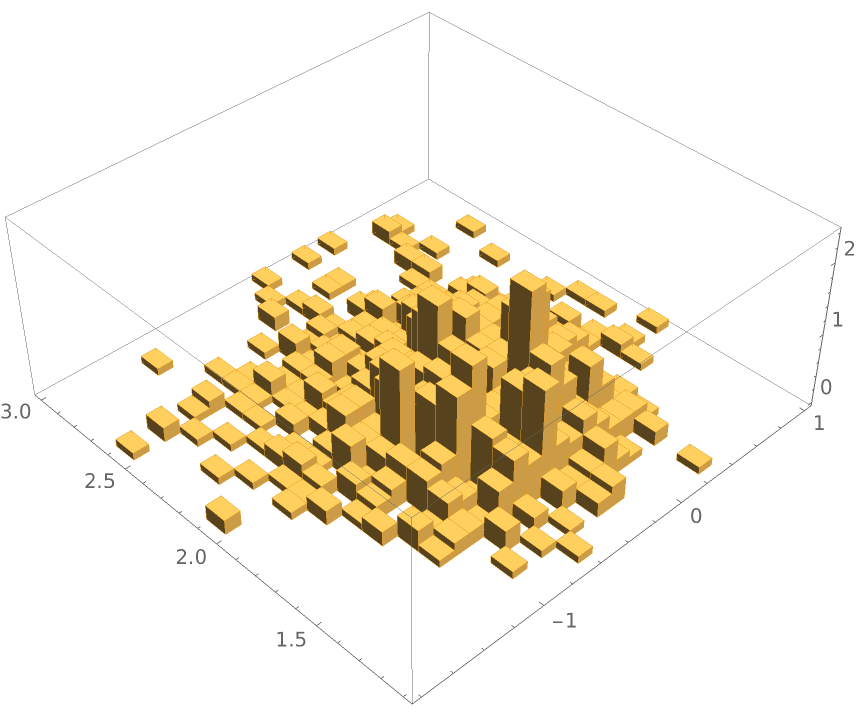

Use MetropolisHastingsSequence to generate one thousand samples of the {μ,σ} pair of parameters and generate a Histogram3D:

| In[27]:= |

![Quiet[Histogram3D[

ResourceFunction["MetropolisHastingsSequence"][

f, {{\[Mu], 0}, {\[Sigma], 1}}, {1000, 100, 10}], {Automatic, {0, 3, Automatic}}, "ProbabilityDensity"]]](https://www.wolframcloud.com/obj/resourcesystem/images/189/189a6b3c-6587-45e1-b843-d946422b03d1/75b3ce8bf590ce5d.png)

|

| Out[27]= |

|

Generating a sample from distribution with PDF ![]() defined on a circle:

defined on a circle:

| In[28]:= |

![Histogram[

Mod[ResourceFunction["MetropolisHastingsSequence"][

E^-(1 - Cos[\[CurlyPhi]]), {\[CurlyPhi], 0}, {10000, 100, 10}], 2 \[Pi], -\[Pi]], {-\[Pi], \[Pi], Automatic}, "ProbabilityDensity"]](https://www.wolframcloud.com/obj/resourcesystem/images/189/189a6b3c-6587-45e1-b843-d946422b03d1/19e0cd10f285b015.png)

|

| Out[28]= |

|

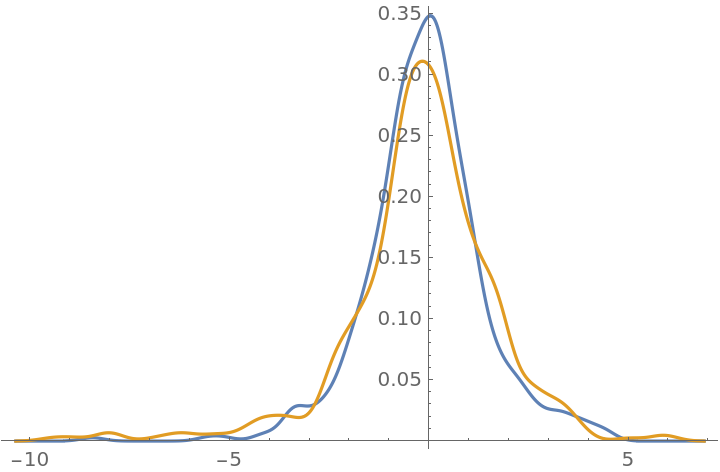

If f is normalized, it approximates RandomVariate[ProbabilityDistribution[f,{x,–∞,∞}],n]:

| In[29]:= |

![\[ScriptCapitalD] = ProbabilityDistribution[

1/\[Pi] 1/Cosh[x], {x, -\[Infinity], \[Infinity]}];

data1 = RandomVariate[\[ScriptCapitalD], 500];

data2 = ResourceFunction["MetropolisHastingsSequence"][

1/Cosh[x], {x, 0}, {500, 100, 10}];

KolmogorovSmirnovTest[data1, data2]](https://www.wolframcloud.com/obj/resourcesystem/images/189/189a6b3c-6587-45e1-b843-d946422b03d1/063c76579667681d.png)

|

| Out[32]= |

|

| In[33]:= |

|

| Out[33]= |

|

The parameter thin should be an integer greater than 0:

| In[34]:= |

|

| Out[34]= |

|

The dimension of the parameter-dependent "JumpDistribution" must match the number of variables:

| In[35]:= |

|

| Out[35]= |

|

This work is licensed under a Creative Commons Attribution 4.0 International License