Wolfram Function Repository

Instant-use add-on functions for the Wolfram Language

Function Repository Resource: GPTTokenizer

Tokenize an input string into a list of integers from a vocabulary that was originally used to train GPT nets

ResourceFunction["GPTTokenizer"][] returns a GPT NetEncoder.

ResourceFunction["GPTTokenizer"]["string"] tokenizes an input "string" into a list of integers from the GPT neural net vocabulary.

ResourceFunction["GPTTokenizer"][{"input1", "input2", "input3", …}] tokenizes each string in a list into integer tokens from the GPT neural net vocabulary.
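GPT vocabularies are built with byte-level byte-pair encoding (BPE). The following Python sketch illustrates that idea at toy scale. It is not how GPTTokenizer is implemented. The four-entry merge table `MERGES` and the resulting IDs are hypothetical and do not come from any real GPT vocabulary. Real GPT tokenizers also pre-split text with a regular expression before merging.

The sketch works in three steps:

1. A string is converted to UTF-8 bytes.
2. The adjacent pair with the lowest merge rank is merged, and this repeats until no listed pair remains.
3. Each final piece is looked up in the vocabulary.

A list input is tokenized element by element.

```python
# Toy byte-level BPE tokenizer: a minimal sketch, NOT the real GPT vocabulary.
# MERGES is a hypothetical merge table: pair -> rank (lower rank merges first).
MERGES: dict[tuple[bytes, bytes], int] = {
    (b"l", b"l"): 0,
    (b"h", b"e"): 1,
    (b"he", b"ll"): 2,
    (b"hell", b"o"): 3,
}


def build_vocab(merges: dict[tuple[bytes, bytes], int]) -> dict[bytes, int]:
    """Assign IDs 0..255 to single bytes, then one new ID per merge, in rank order."""
    vocab = {bytes([i]): i for i in range(256)}
    for (left, right), _rank in sorted(merges.items(), key=lambda kv: kv[1]):
        vocab[left + right] = len(vocab)
    return vocab


VOCAB = build_vocab(MERGES)


def bpe_encode(text: str) -> list[int]:
    """Encode one string: start from raw bytes and apply merges by rank."""
    parts = [bytes([b]) for b in text.encode("utf-8")]
    while len(parts) > 1:
        # Collect (rank, position) for every adjacent pair listed in MERGES.
        candidates = [
            (MERGES[(parts[i], parts[i + 1])], i)
            for i in range(len(parts) - 1)
            if (parts[i], parts[i + 1]) in MERGES
        ]
        if not candidates:
            break
        # Merge the lowest-ranked pair (leftmost occurrence on ties).
        _, i = min(candidates)
        parts[i : i + 2] = [parts[i] + parts[i + 1]]
    return [VOCAB[p] for p in parts]


def tokenize(inputs):
    """Tokenize a single string, or each string in a list."""
    if isinstance(inputs, str):
        return bpe_encode(inputs)
    return [bpe_encode(s) for s in inputs]
```

With this table, `tokenize("hello")` merges l+l, then h+e, then he+ll, then hell+o, and ends with a single ID. `tokenize("hi")` keeps the two raw byte IDs because no merge applies.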

The following methods are supported:

| Method | Vocabulary and models |
|---|---|
| "GPT-3.5" | Uses the 'CL100K' vocabulary set. This vocabulary is also used by GPT-4, GPT-3.5-turbo, text-embedding-ada-002, text-embedding-3-small, and text-embedding-3-large. |
| "GPT-4o" | Uses the 'O200K_base' vocabulary set, which is the vocabulary of GPT-4o. |
| "GPT-2" | Uses the 'R50K' vocabulary set. GPT-3 models, such as Davinci, use this same vocabulary. |
| "P50K" | Uses the 'P50K' vocabulary set. Codex models, as well as text-davinci-002 and text-davinci-003, use this same vocabulary. |
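The method list above is essentially a lookup from a model to the vocabulary it shares. The following Python sketch encodes that lookup so you can pick a method for a given model. The dictionaries and function names are hypothetical. They simply restate the model names listed above and are not part of GPTTokenizer's interface.

```python
# Method name -> vocabulary set, restating the method list (hypothetical helper).
METHOD_VOCABULARY = {
    "GPT-3.5": "CL100K",
    "GPT-4o": "O200K_base",
    "GPT-2": "R50K",
    "P50K": "P50K",
}

# Model name -> method whose vocabulary that model uses, per the same list.
MODEL_METHOD = {
    "GPT-4": "GPT-3.5",
    "GPT-3.5-turbo": "GPT-3.5",
    "text-embedding-ada-002": "GPT-3.5",
    "text-embedding-3-small": "GPT-3.5",
    "text-embedding-3-large": "GPT-3.5",
    "GPT-4o": "GPT-4o",
    "GPT-2": "GPT-2",
    "Davinci": "GPT-2",
    "text-davinci-002": "P50K",
    "text-davinci-003": "P50K",
}


def method_for_model(model: str) -> str:
    """Return the method whose vocabulary the given model uses."""
    if model not in MODEL_METHOD:
        raise ValueError(f"no vocabulary listed for model {model!r}")
    return MODEL_METHOD[model]


def vocabulary_for_model(model: str) -> str:
    """Return the vocabulary set name for the given model."""
    return METHOD_VOCABULARY[method_for_model(model)]
```

Models missing from the list raise an error rather than silently falling back to a default vocabulary. Token IDs from different vocabularies are not interchangeable.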

Encode a string of characters:

| In[1]:= |

| Out[1]= |

Encode a string of characters using the "GPT-3.5" method:

| In[2]:= |

| Out[2]= |

| In[3]:= |

| Out[3]= |

Encode a string of characters using the "GPT-2" method:

| In[4]:= |

| Out[4]= |

Encode a string of characters using the "P50K" method:

| In[5]:= |

| Out[5]= |

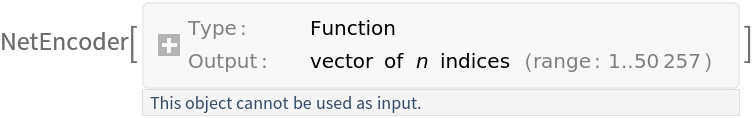

Get the GPT NetEncoder:

| In[6]:= |

| Out[6]= |

Check that the NetEncoder produces the same tokenization as ResourceFunction["GPTTokenizer"]:

| In[7]:= |

| Out[7]= |
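Checking that two tokenization paths agree comes down to running both on the same inputs and comparing the integer lists. The following Python sketch shows such a check. The helper `first_mismatch` and the two stand-in tokenizers are hypothetical, and neither tokenizer is a GPT tokenizer. They show why the sample inputs should include non-ASCII text: the two stand-ins agree on "hello" but differ on "é".

```python
from typing import Callable, Iterable, Optional

Tokenizer = Callable[[str], list[int]]


def first_mismatch(
    a: Tokenizer, b: Tokenizer, samples: Iterable[str]
) -> Optional[tuple[str, list[int], list[int]]]:
    """Return (sample, a(sample), b(sample)) for the first disagreement, or None."""
    for s in samples:
        ta, tb = a(s), b(s)
        if ta != tb:
            return s, ta, tb
    return None


# Hypothetical stand-ins: UTF-8 byte values vs. Unicode code points.
def utf8_bytes(s: str) -> list[int]:
    return list(s.encode("utf-8"))


def code_points(s: str) -> list[int]:
    return [ord(c) for c in s]
```

`first_mismatch(utf8_bytes, code_points, ["hello", "abc"])` finds no difference. Adding "é" exposes one, because its UTF-8 encoding is two bytes, [195, 169], while its code point is the single value 233.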

Requires Wolfram Language 13.0 (December 2021) or above.

This work is licensed under a Creative Commons Attribution 4.0 International License