Wolfram Language Paclet Repository

Community-contributed installable additions to the Wolfram Language

FubiniStudyMetricTensor[QuantumState[...], opts] | computes the Fubini–Study metric tensor, as defined in the variational quantum eigensolver (VQE) approach, for a parametrized QuantumState |


$$\begin{pmatrix} 1 & 0 \\ 0 & 2\sin(2\theta_1) \end{pmatrix}$$

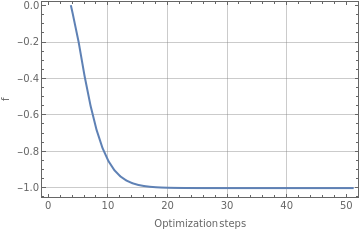
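For readers who want to see what such a metric tensor contains, here is a minimal NumPy sketch of the standard Fubini–Study metric of a parametrized state vector, g_ij = Re(⟨∂iψ|∂jψ⟩ − ⟨∂iψ|ψ⟩⟨ψ|∂jψ⟩), with the derivatives taken by central finite differences. It is not the paclet's implementation: the names `state` and `fubini_study_metric`, the one-qubit example state cos(θ1/2)|0⟩ + e^(iθ2) sin(θ1/2)|1⟩ and the step size `h` are hypothetical, the sketch assumes the state is normalized, and the paclet may use a different convention (for example a block-diagonal approximation or another overall factor).

```python
import numpy as np

def state(theta):
    """Hypothetical normalized one-qubit state cos(t1/2)|0> + e^{i t2} sin(t1/2)|1>."""
    t1, t2 = theta
    return np.array([np.cos(t1 / 2), np.exp(1j * t2) * np.sin(t1 / 2)])

def fubini_study_metric(psi, theta, h=1e-6):
    """g_ij = Re(<d_i psi|d_j psi> - <d_i psi|psi><psi|d_j psi>) by central differences.

    Assumes psi(theta) returns a normalized state vector (hypothetical helper).
    """
    theta = np.asarray(theta, dtype=float)
    n = theta.size
    p = psi(theta)
    # Central finite-difference approximation of d|psi>/d theta_k for each parameter
    derivs = []
    for k in range(n):
        shift = np.zeros(n)
        shift[k] = h
        derivs.append((psi(theta + shift) - psi(theta - shift)) / (2 * h))
    g = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            # np.vdot conjugates its first argument, so np.vdot(a, b) = <a|b>
            overlap = np.vdot(derivs[i], derivs[j])
            projection = np.vdot(derivs[i], p) * np.vdot(p, derivs[j])
            g[i, j] = (overlap - projection).real
    return g

g = fubini_study_metric(state, [0.7, 0.3])
print(np.round(g, 6))  # analytically diag(1/4, sin(0.7)**2 / 4)
```

For this example state the metric is diag(1/4, sin²θ1/4) in closed form, so the numerical result can be checked directly; note that the second diagonal entry vanishes at θ1 = 0 or π, where the metric becomes singular.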

QuantumNaturalGradientDescent[f, metric, opts] | minimizes f by gradient descent, using metric as the metric tensor of the parameter space |

| Option | Default | Description |
|---|---|---|
| "Gradient" | None | gradient function ∇f corresponding to f |
| "InitialPoint" | Automatic | initial starting point for the optimization |
| "LearningRate" | 0.8 | step size taken at each iteration |
| "MaxIterations" | 50 | maximum number of iterations |
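In its usual formulation, quantum natural gradient descent replaces the plain update θ ← θ − η ∇f(θ) with θ ← θ − η g(θ)⁺ ∇f(θ), where g is the metric tensor and g⁺ its pseudo-inverse. The NumPy sketch below illustrates that rule under stated assumptions; it is not the paclet's code. The function names and keyword arguments are hypothetical and only loosely mirror the "Gradient", "InitialPoint", "LearningRate" and "MaxIterations" options: the sketch requires an explicit initial point instead of Automatic, falls back to a finite-difference gradient when none is given, and uses the Moore–Penrose pseudo-inverse (an assumption) so that a singular metric does not break the step.

```python
import numpy as np

def numerical_gradient(f, theta, h=1e-6):
    """Central finite-difference gradient, used when no gradient is supplied."""
    theta = np.asarray(theta, dtype=float)
    grad = np.zeros_like(theta)
    for k in range(theta.size):
        shift = np.zeros_like(theta)
        shift[k] = h
        grad[k] = (f(theta + shift) - f(theta - shift)) / (2 * h)
    return grad

def quantum_natural_gradient_descent(f, metric, initial_point, gradient=None,
                                     learning_rate=0.8, max_iterations=50):
    """Hypothetical sketch of theta <- theta - eta * pinv(g(theta)) @ grad f(theta).

    Keyword names loosely mirror the paclet's options; this is NOT the paclet's code.
    """
    grad_f = gradient if gradient is not None else (lambda t: numerical_gradient(f, t))
    theta = np.asarray(initial_point, dtype=float)
    history = [theta.copy()]
    for _ in range(max_iterations):
        # The pseudo-inverse tolerates a singular metric (here, where sin(theta1) = 0)
        step = np.linalg.pinv(metric(theta)) @ grad_f(theta)
        theta = theta - learning_rate * step
        history.append(theta.copy())
    return theta, history

# Toy example: f = <psi|Z|psi> = cos(theta1) for cos(t1/2)|0> + e^{i t2} sin(t1/2)|1>,
# whose Fubini-Study metric is diag(1/4, sin(theta1)^2 / 4).
f = lambda t: np.cos(t[0])
metric_fn = lambda t: np.diag([0.25, np.sin(t[0]) ** 2 / 4])
theta_opt, history = quantum_natural_gradient_descent(
    f, metric_fn, [0.5, 0.3], learning_rate=0.2)
print(theta_opt)  # theta_opt[0] converges to pi, where f reaches its minimum of -1
```

The learning rate of 0.2 is chosen for this toy metric: near θ1 = π each step multiplies the distance to π by about 1 − 4η, which is 0.2 for η = 0.2 but −2.2 for η = 0.8, so with the larger rate the iteration would overshoot instead of settling. How large a step is safe therefore depends on the normalization of the metric being used.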