Wolfram Language Paclet Repository

Community-contributed installable additions to the Wolfram Language

This paclet makes the XGBoost algorithm available in the Wolfram Language

Contributed by: Mike Yeh

This paclet provides Wolfram Language functions that wrap XGBoost Python functions such as xgb.DMatrix(), xgb.train(), and predict(). So far, XgbTrain[] wraps xgb.train() and XgbModelPredict[] performs model prediction. More functions will be added later.

To install this paclet in your Wolfram Language environment, evaluate this code:

PacletInstall["MikeYeh/XGBPaclet"]

To load the code after installation, evaluate this code:

Needs["MikeYeh`XGBPaclet`"]

Creating an XGBoost Python session is recommended before using XgbTrain[]. The following code shows the minimum set of packages that must be installed before creating a Python session:

| In[1]:= |

To create an XGBoost Python session:

| In[2]:= |

Within the session, the paclet functions automatically import xgboost and the other packages they need.
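Before training, it can help to confirm that a Python session can actually import xgboost. The following is a minimal sketch that uses only the built-in StartExternalSession and ExternalEvaluate functions; it is not the paclet's own session-creation code, and the name checkSession is hypothetical. It assumes a Python evaluator is already registered with the Wolfram Language.

```wolfram
(* Assumption: a Python evaluator is registered; checkSession is a hypothetical name *)
checkSession = StartExternalSession["Python"];

(* Gives the xgboost version string if the package is importable in this session;
   a Failure object is returned instead if the import raises an error *)
ExternalEvaluate[checkSession, "import xgboost\nxgboost.__version__"]

(* Close the throwaway session when done *)
DeleteObject[checkSession]
```

If the import fails, the package is missing from the Python environment the evaluator points to, and it should be installed there before the paclet functions are called.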

Create a training set and a validation set for the following examples

The following code generates the training set and the validation set as purely numerical arrays or lists:

| In[3]:= |

(* Load "Titanic" data from ExampleData[] *)

traindataset = ExampleData[{"MachineLearning", "Titanic"}, "TrainingData"];

testdataset = ExampleData[{"MachineLearning", "Titanic"}, "TestData"];

traindataset = DeleteMissing[traindataset, 1, 2]; (* remove the Missing[] *)

testdataset = DeleteMissing[testdataset, 1, 2]; (* remove the Missing[] *)

| In[4]:= |

(* The maps converting class labels to integers *)

classMapping = <|"1st" -> 1, "2nd" -> 2, "3rd" -> 3, "crew" -> 4|>;

genderMapping = <|"male" -> 0, "female" -> 1|>;

statusMapping = <|"survived" -> 1, "died" -> 0|>;

(* Function to convert a data entry to integers using the mappings *)

convertDataEntry[entry_] := {classMapping[entry[[1]]], entry[[2]], genderMapping[entry[[3]]]}

(* Apply the conversion to data and label *)

trainData = convertDataEntry /@ Keys@traindataset;

trainLabel = statusMapping /@ Values@traindataset;

testData = convertDataEntry /@ Keys@testdataset;

testLabel = statusMapping /@ Values@testdataset;
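To see what the conversion produces, here is a small self-contained sketch on two made-up entries with the same {class, age, sex} -> status shape as the Titanic data. The names sample, sampleData, and sampleLabel are hypothetical and the two entries are invented for illustration; the mappings and convertDataEntry repeat the definitions above.

```wolfram
(* Two invented entries in the {class, age, sex} -> status shape *)
sample = {
  {"1st", 29., "female"} -> "survived",
  {"3rd", 40., "male"} -> "died"
};

classMapping = <|"1st" -> 1, "2nd" -> 2, "3rd" -> 3, "crew" -> 4|>;
genderMapping = <|"male" -> 0, "female" -> 1|>;
statusMapping = <|"survived" -> 1, "died" -> 0|>;

convertDataEntry[entry_] := {classMapping[entry[[1]]], entry[[2]], genderMapping[entry[[3]]]}

sampleData = convertDataEntry /@ Keys[sample];    (* {{1, 29., 1}, {3, 40., 0}} *)
sampleLabel = statusMapping /@ Values[sample];    (* {1, 0} *)

(* Both checks give True when every entry is numeric and the lengths agree *)
MatrixQ[sampleData, NumericQ]
VectorQ[sampleLabel, IntegerQ] && Length[sampleLabel] == Length[sampleData]
```

The same two checks can be run on trainData and trainLabel. A key that is absent from one of the mappings yields a Missing[...] value, which makes the MatrixQ check return False.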

| In[5]:= |

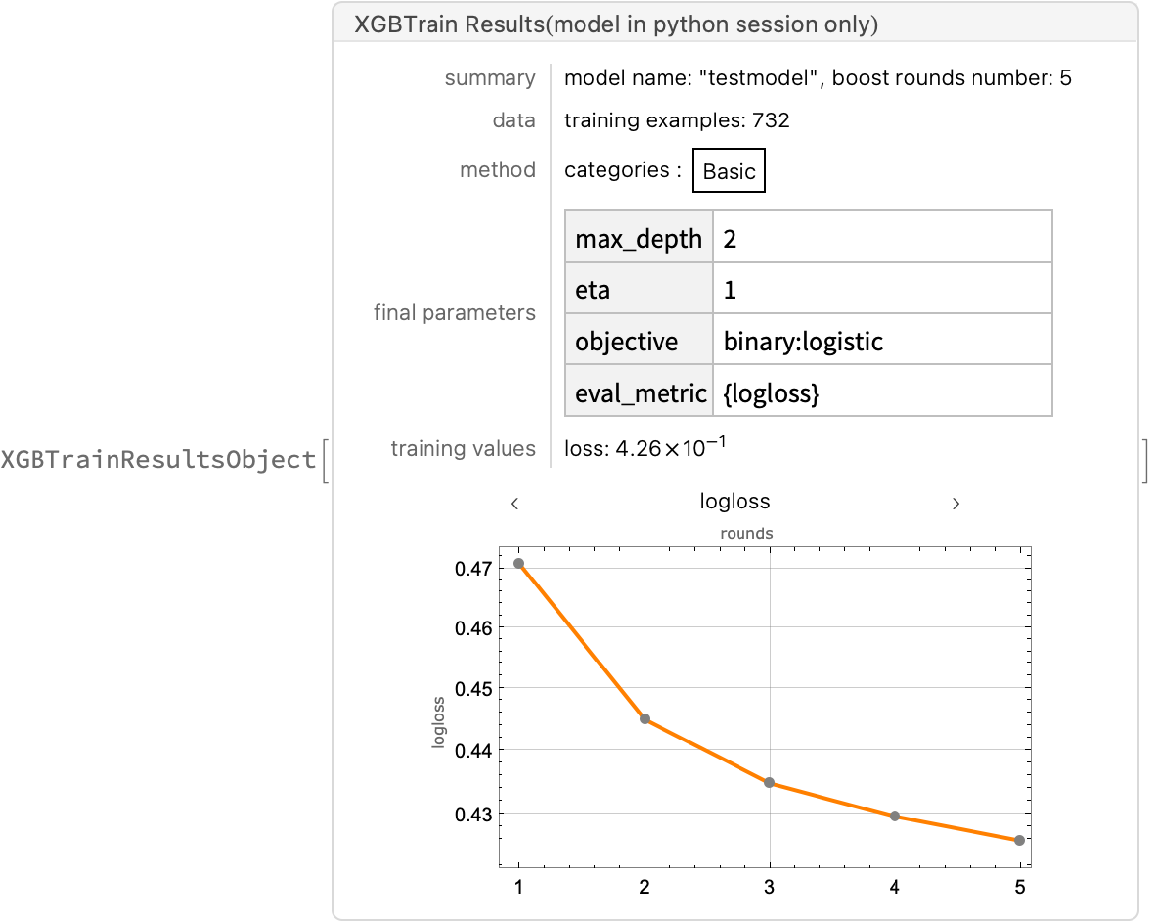

Use XgbTrain[] to train a model from the training set and the XGBoost Python session; the trained model is stored in the output session:

| In[6]:= |

| Out[6]= |  |

Store all information about the trained model in an XGBTrainResultsObject[]:

| In[7]:= |

| Out[7]= |  |

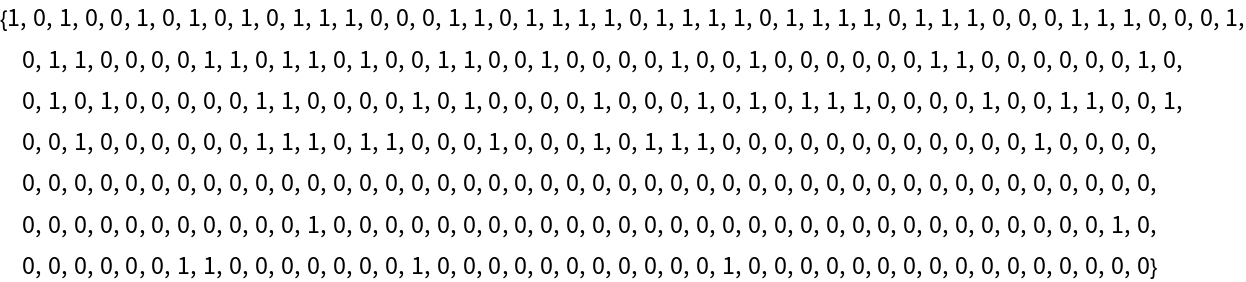

XgbModelPredict[] uses the given session and the default model name "model" to make predictions for testData. Note that the xgboost Python session must contain the trained "model":

| In[8]:= |

| Out[8]= |  |

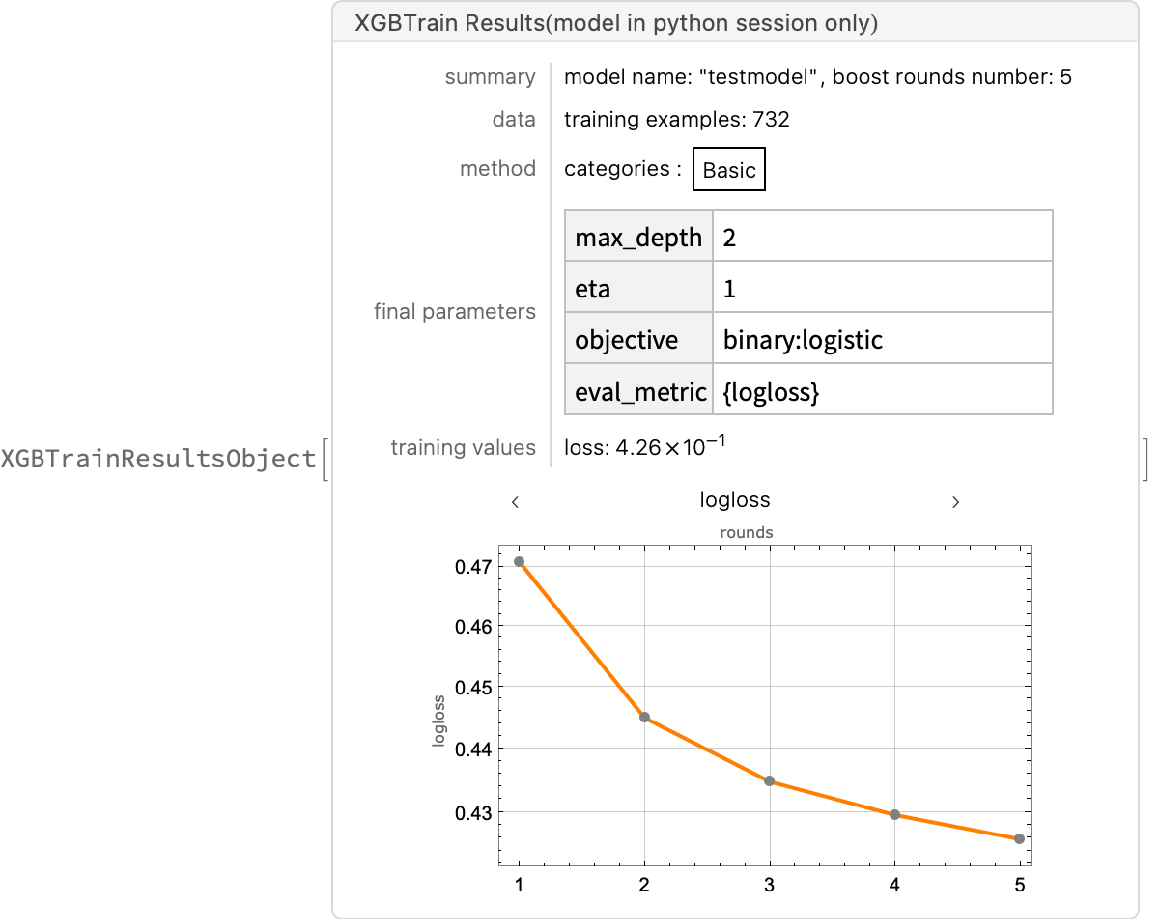

Set the number of XGBoost boosting rounds, "numBoostRound", to 2:

| In[9]:= |

| In[10]:= |

| Out[10]= |  |
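For comparison, the Python calls that the paclet wraps (xgb.DMatrix(), xgb.train(), and predict()) can be run directly through the built-in ExternalEvaluate. This is only a sketch on four invented rows, not the paclet's implementation. It assumes that xgboost and numpy are installed in the Python environment, and that "numBoostRound" corresponds to the num_boost_round argument of xgb.train(), which the paclet documentation does not confirm. The names rawSession and predictions are hypothetical.

```wolfram
(* Assumption: the registered Python evaluator has numpy and xgboost installed *)
rawSession = StartExternalSession["Python"];

(* The value of the last Python expression is returned to the Wolfram Language *)
predictions = ExternalEvaluate[rawSession, "
import numpy as np
import xgboost as xgb

# Four invented rows of (class, age, sex) with binary labels
X = np.array([[1, 22.0, 0], [3, 30.0, 1], [2, 41.0, 0], [1, 35.0, 1]])
y = np.array([0, 1, 0, 1])

dtrain = xgb.DMatrix(X, label=y)
model = xgb.train({'objective': 'binary:logistic'}, dtrain, num_boost_round=2)
model.predict(xgb.DMatrix(X)).tolist()
"];

predictions

DeleteObject[rawSession]
```

With the 'binary:logistic' objective, predict() returns one probability per row, so predictions is a list of four real numbers. The toy data is far too small to learn anything meaningful; the point is only the sequence of calls.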

Wolfram Language Version 14.1