Basic Examples (11)

This functionality requires an OpenAI API account and an API key:

Convert all equations from the 1st through the 3rd sections of the provided arXiv preprint from TeX to Wolfram Language output them as a dataset:

Instead of returning a dataset, save the result to a notebook and open it automatically:

Also save the output notebook:

By default, it saves in the directory FileNameJoin[{OptionValue["ProjectBaseDirectory"], "paper"}] where OptionValue["ProjectBaseDirectory"] by default is FileNameJoin[{URL[$LocalBase], "ConvertArXivTeXSource"}]:

Instead of saving it to the default file path, save it to a specified one:

Convert all equations in the sections 2 through 4, then publish the results to a Wolfram Cloud notebook:

Convert all equations from the given arXiv preprint using multiple parallel kernels to speed up the process:

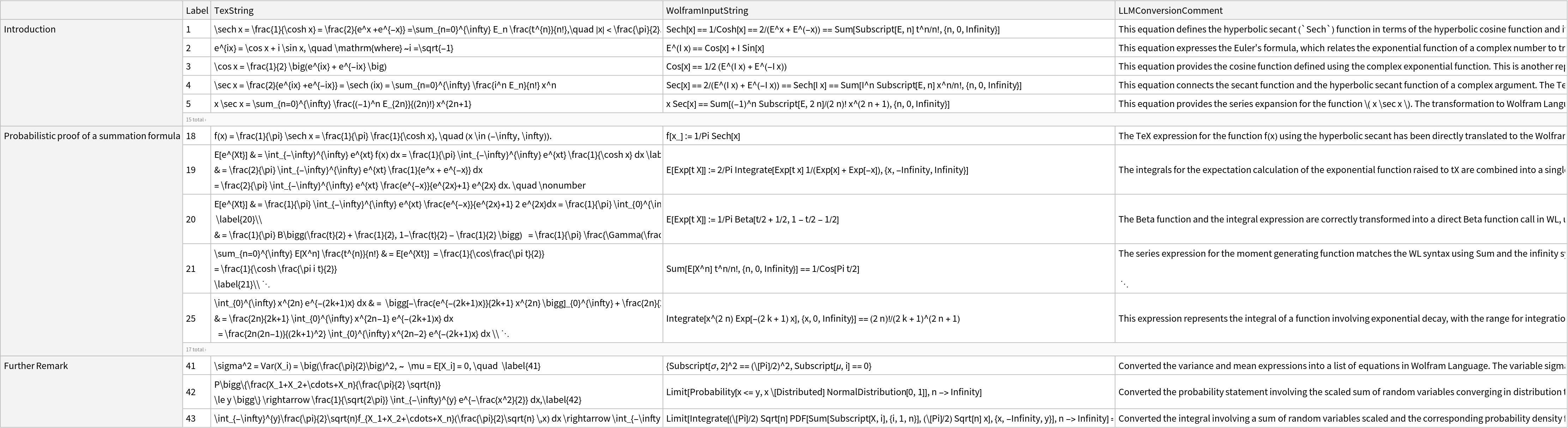

A pre-generated result for reference:

The LLM model used for most of the development and testing is OpenAI's "gpt-4-turbo" which has a long (128k) context length limit needed for typical arXiv preprints. But the option "LLMModel" can be used to specify a different mode:

Use a different LLM model:

Scope (2)

The function ConvertArXivTeXSource requires access to OpenAI API to work. Users need to set the system credential SystemCredential["OPENAI_API_KEY"] = "XXX" (Replace "XXX" with your OpenAI API key.)

The TEX source file for the targeted paper ID are downloaded from arXiv.org and saved to a subdirectory "paper/" inside OptionValue["ProjectBaseDirectory"].

OptionValue["ProjectBaseDirectory"] specifies the project base directory which includes the sub-directory "paper/" for saving downloaded TEX files and output notebooks. Its default value is FileNameJoin[{URL[$LocalBase], "ConvertArXivTeXSource"}], where $LocalBase has a default value $DefaultLocalBase.

The option "LLMModel" can be used to specify a the LLM model used for the converting: "LLMModel" -> <|"Service" -> "OpenAI", "Name" -> "gpt-4-turbo"|>. The current default is "gpt-4o". The model "gpt-4-turbo" was used a lot during the development and testing. Both models have a long (128k) context length limit needed for a typical arXiv preprint. The behavior with other models aren't tested extensively.

The function ResourceFunction["ConvertArXivTeXSource"] requires access to OpenAI API to work. Users need to set the system credential with SystemCredential["OPENAI_API_KEY"] = "XXX" (Replace "XXX" with your OpenAI API key.)

When the output format is "Notebook", use the "SaveFileQ" → True option to save the output notebook. It saves the notebook file in the same directory "paper/" alongside the TEX file. Currently the named LLM prompt recipe argument promptRecipe can only be {"ConvertArXivTeXSource","EquationList"} which collects and converts a list of equation-like objects in the specified portion of the paper.

The option "AdditionalLLMPrompts" -> {p1, p2,…}, can be used to add additional prompts at the end of the built-in one. Each pi should be a string.

Set the option "ParallelQ" → True to run the potentially slow LLM API calls in parallel.

To use a local TEX source file instead of having the function automatically downloading the TEX source file from arXiv.org, before running ResourceFunction["ConvertArXivTeXSource"], specify the root directory with "ProjectBaseDirectory" → dir and save the TEX file as, e.g. FileNameJoin[{dir,"paper", "2404.11685.tex"}].

The ConvertArXivTeXSource function takes the following options:

| "ProjectBaseDirectory" | FileNameJoin[{URL[$LocalBase], "ConvertArXivTeXSource"}] | project base directory for saving downloaded TEX file and output notebooks |

| "LLMModel" | <|"Service" -> "OpenAI", "Name" -> "gpt-4o"|> | LLM model |

| "SaveFileQ" | False | set to True to save the output notebook as FileNameJoin[{OptionValue["ProjectBaseDirectory"],"paper", "YYMM.xxxxx.nb"}] |

| "PublishToWolframCloudQ" | False | set to True to publish the output notebook to the cloud specified by OptionValue["CloudBase"] |

| "CloudBase" | $CloudBase | the cloud base for publishing notebooks |

| "ParallelQ" | False | set to True to process LLM API calls parallelly |

| "AdditionalLLMPrompts" | {} | optionally provide a list of prompt strings to be appended after the built-in prompts of the specified prompt recipe |

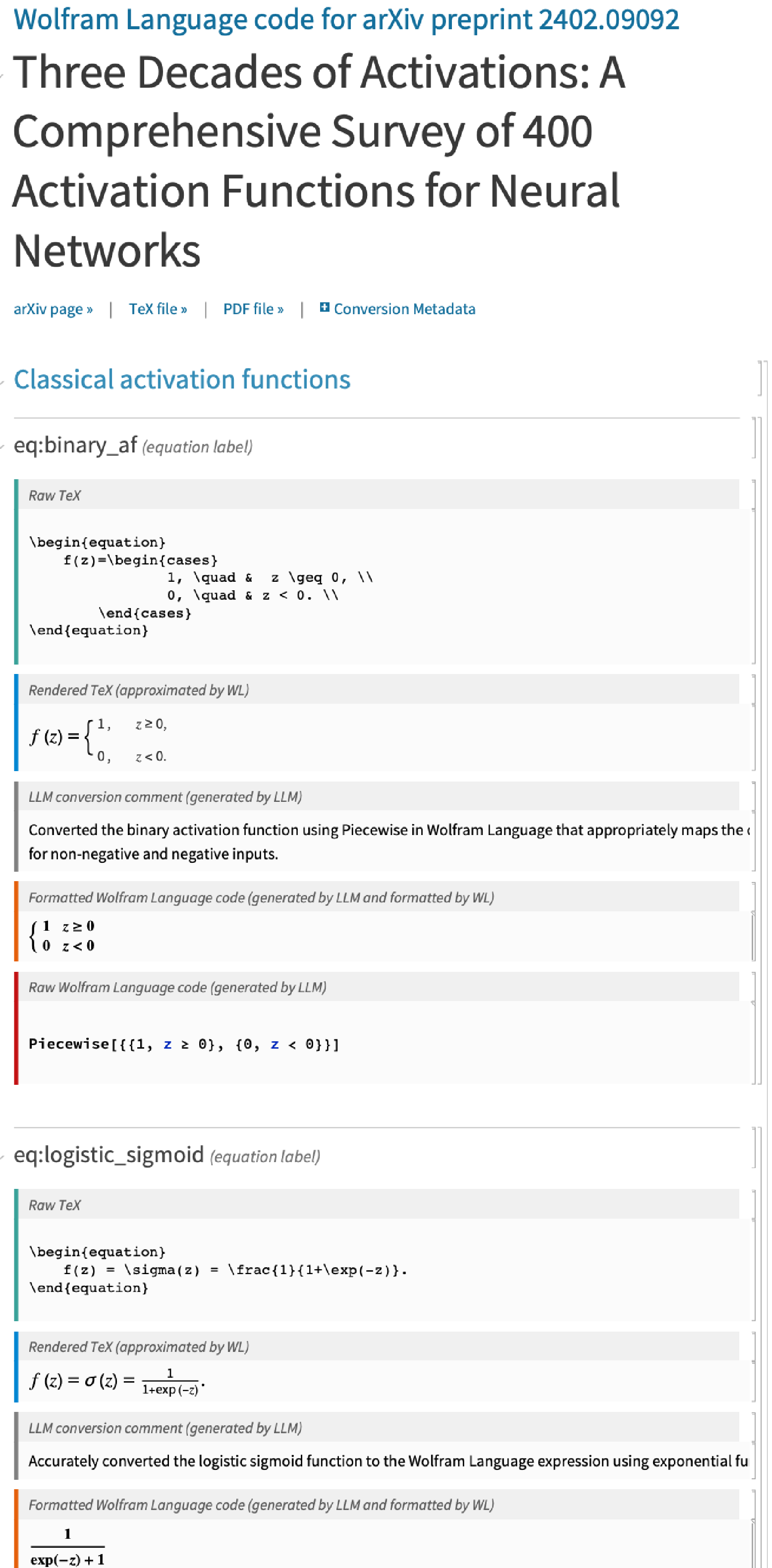

Screenshot of an example output notebook:

![InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ConvertArXivTeXSource", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"Wolfram/ConvertArXivTeXSource\"", ",", "\"Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]]["2410.05895", {"Section", {1,

3}}, {"ConvertArXivTeXSource", "EquationList" }, "Dataset"]](https://www.wolframcloud.com/obj/resourcesystem/images/3ec/3ece8da9-9e25-4826-a64a-f72e7187cd39/1-0-0/1346c74605cabeb2.png)

![InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ConvertArXivTeXSource", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"Wolfram/ConvertArXivTeXSource\"", ",", "\"Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]]["2410.05895", {"Section", 2}, {"ConvertArXivTeXSource", "EquationList" }, "Notebook"]](https://www.wolframcloud.com/obj/resourcesystem/images/3ec/3ece8da9-9e25-4826-a64a-f72e7187cd39/1-0-0/14c064cbe95fc649.png)

![nb = InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ConvertArXivTeXSource", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"Wolfram/ConvertArXivTeXSource\"", ",", "\"Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]][

"2410.05895", {"Section", 2}, {"ConvertArXivTeXSource", "EquationList" }, "Notebook", "SaveFileQ" -> True]](https://www.wolframcloud.com/obj/resourcesystem/images/3ec/3ece8da9-9e25-4826-a64a-f72e7187cd39/1-0-0/3a81694b592706b1.png)

![nb = With[

{dir = FileNameJoin[{$HomeDirectory, "temp", "myBaseDir"}]},

If[! DirectoryQ[dir], CreateDirectory[dir, CreateIntermediateDirectories -> True]]; InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ConvertArXivTeXSource", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"Wolfram/ConvertArXivTeXSource\"", ",", "\"Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]][

"2410.05895", {"Section", 2}, {"ConvertArXivTeXSource", "EquationList" }, "Notebook", "SaveFileQ" -> True, "ProjectBaseDirectory" -> dir]

]](https://www.wolframcloud.com/obj/resourcesystem/images/3ec/3ece8da9-9e25-4826-a64a-f72e7187cd39/1-0-0/57fe02edd9500e7d.png)

![InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ConvertArXivTeXSource", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"Wolfram/ConvertArXivTeXSource\"", ",", "\"Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]]["2410.05895", {"Section", {2,

4}}, {"ConvertArXivTeXSource", "EquationList" }, "CloudNotebook", "PublishToWolframCloudQ" -> True, "CloudBase" -> "http://www.wolframcloud.com"]](https://www.wolframcloud.com/obj/resourcesystem/images/3ec/3ece8da9-9e25-4826-a64a-f72e7187cd39/1-0-0/664876aa184be241.png)

![Block[{$myDebugLogQ = True, $myDebugLevel = "INFO"},

InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ConvertArXivTeXSource", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"Wolfram/ConvertArXivTeXSource\"", ",", "\"Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]]["2402.09092", All, {"ConvertArXivTeXSource", "EquationList" }, "CloudNotebook", "PublishToWolframCloudQ" -> True, "CloudBase" -> "http://www.wolframcloud.com", "ParallelQ" -> True]]](https://www.wolframcloud.com/obj/resourcesystem/images/3ec/3ece8da9-9e25-4826-a64a-f72e7187cd39/1-0-0/37743f4768b91fdc.png)

![CloudObject["https://www.wolframcloud.com/obj/meng/ConvertArXivTeXSource/2402.09092.nb", Permissions -> "Public"]](https://www.wolframcloud.com/obj/resourcesystem/images/3ec/3ece8da9-9e25-4826-a64a-f72e7187cd39/1-0-0/354bbe6b3e10fc56.png)

![InterpretationBox[FrameBox[TagBox[TooltipBox[PaneBox[GridBox[List[List[GraphicsBox[List[Thickness[0.0025`], List[FaceForm[List[RGBColor[0.9607843137254902`, 0.5058823529411764`, 0.19607843137254902`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]], List[List[0, 2, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3], List[0, 1, 0], List[1, 3, 3]]], List[List[List[205.`, 22.863691329956055`], List[205.`, 212.31669425964355`], List[246.01799774169922`, 235.99870109558105`], List[369.0710144042969`, 307.0436840057373`], List[369.0710144042969`, 117.59068870544434`], List[205.`, 22.863691329956055`]], List[List[30.928985595703125`, 307.0436840057373`], List[153.98200225830078`, 235.99870109558105`], List[195.`, 212.31669425964355`], List[195.`, 22.863691329956055`], List[30.928985595703125`, 117.59068870544434`], List[30.928985595703125`, 307.0436840057373`]], List[List[200.`, 410.42970085144043`], List[364.0710144042969`, 315.7036876678467`], List[241.01799774169922`, 244.65868949890137`], List[200.`, 220.97669792175293`], List[158.98200225830078`, 244.65868949890137`], List[35.928985595703125`, 315.7036876678467`], List[200.`, 410.42970085144043`]], List[List[376.5710144042969`, 320.03370475769043`], List[202.5`, 420.53370475769043`], List[200.95300006866455`, 421.42667961120605`], List[199.04699993133545`, 421.42667961120605`], List[197.5`, 420.53370475769043`], List[23.428985595703125`, 320.03370475769043`], List[21.882003784179688`, 319.1406993865967`], List[20.928985595703125`, 317.4896984100342`], List[20.928985595703125`, 315.7036876678467`], List[20.928985595703125`, 114.70369529724121`], List[20.928985595703125`, 112.91769218444824`], List[21.882003784179688`, 111.26669120788574`], List[23.428985595703125`, 110.37369346618652`], List[197.5`, 9.87369155883789`], List[198.27300024032593`, 9.426692008972168`], List[199.13700008392334`, 9.203690528869629`], List[200.`, 9.203690528869629`], List[200.86299991607666`, 9.203690528869629`], List[201.72699999809265`, 9.426692008972168`], List[202.5`, 9.87369155883789`], List[376.5710144042969`, 110.37369346618652`], List[378.1179962158203`, 111.26669120788574`], List[379.0710144042969`, 112.91769218444824`], List[379.0710144042969`, 114.70369529724121`], List[379.0710144042969`, 315.7036876678467`], List[379.0710144042969`, 317.4896984100342`], List[378.1179962158203`, 319.1406993865967`], List[376.5710144042969`, 320.03370475769043`]]]]], List[FaceForm[List[RGBColor[0.5529411764705883`, 0.6745098039215687`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[44.92900085449219`, 282.59088134765625`], List[181.00001525878906`, 204.0298843383789`], List[181.00001525878906`, 46.90887451171875`], List[44.92900085449219`, 125.46986389160156`], List[44.92900085449219`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6627450980392157`, 0.803921568627451`, 0.5686274509803921`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[355.0710144042969`, 282.59088134765625`], List[355.0710144042969`, 125.46986389160156`], List[219.`, 46.90887451171875`], List[219.`, 204.0298843383789`], List[355.0710144042969`, 282.59088134765625`]]]]], List[FaceForm[List[RGBColor[0.6901960784313725`, 0.5882352941176471`, 0.8117647058823529`], Opacity[1.`]]], FilledCurveBox[List[List[List[0, 2, 0], List[0, 1, 0], List[0, 1, 0], List[0, 1, 0]]], List[List[List[200.`, 394.0606994628906`], List[336.0710144042969`, 315.4997024536133`], List[200.`, 236.93968200683594`], List[63.928985595703125`, 315.4997024536133`], List[200.`, 394.0606994628906`]]]]]], List[Rule[BaselinePosition, Scaled[0.15`]], Rule[ImageSize, 10], Rule[ImageSize, 15]]], StyleBox[RowBox[List["ConvertArXivTeXSource", " "]], Rule[ShowAutoStyles, False], Rule[ShowStringCharacters, False], Rule[FontSize, Times[0.9`, Inherited]], Rule[FontColor, GrayLevel[0.1`]]]]], Rule[GridBoxSpacings, List[Rule["Columns", List[List[0.25`]]]]]], Rule[Alignment, List[Left, Baseline]], Rule[BaselinePosition, Baseline], Rule[FrameMargins, List[List[3, 0], List[0, 0]]], Rule[BaseStyle, List[Rule[LineSpacing, List[0, 0]], Rule[LineBreakWithin, False]]]], RowBox[List["PacletSymbol", "[", RowBox[List["\"Wolfram/ConvertArXivTeXSource\"", ",", "\"Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource\""]], "]"]], Rule[TooltipStyle, List[Rule[ShowAutoStyles, True], Rule[ShowStringCharacters, True]]]], Function[Annotation[Slot[1], Style[Defer[PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"]], Rule[ShowStringCharacters, True]], "Tooltip"]]], Rule[Background, RGBColor[0.968`, 0.976`, 0.984`]], Rule[BaselinePosition, Baseline], Rule[DefaultBaseStyle, List[]], Rule[FrameMargins, List[List[0, 0], List[1, 1]]], Rule[FrameStyle, RGBColor[0.831`, 0.847`, 0.85`]], Rule[RoundingRadius, 4]], PacletSymbol["Wolfram/ConvertArXivTeXSource", "Wolfram`ConvertArXivTeXSource`ConvertArXivTeXSource"], Rule[Selectable, False], Rule[SelectWithContents, True], Rule[BoxID, "PacletSymbolBox"]]["2410.05895", {"Section", 2}, {"ConvertArXivTeXSource", "EquationList" }, "Notebook", "LLMModel" -> <|"Service" -> "OpenAI", "Name" -> "gpt-4-turbo"|>]](https://www.wolframcloud.com/obj/resourcesystem/images/3ec/3ece8da9-9e25-4826-a64a-f72e7187cd39/1-0-0/4d94986e1e800e10.png)