Channel-Separated Video Action Classification Net

Trained on

Kinetics-400 Data

Inspired by 2D separable convolutions in image classification, the authors propose 3D Channel-Separated Networks (CSNs), in which all convolutional operations are separated into either pointwise 1×1×1 or depthwise 3×3×3 convolutions, resulting in a significant accuracy improvement on Sports-1M, Kinetics and Something-Something datasets while being two to three times faster.

Examples

Resource retrieval

Get the pre-trained net:

Basic usage

Classify a video:

Obtain the probabilities predicted by the net:

Feature extraction

Remove the last three layers of the trained net so that the net produces a vector representation of an image:

Get a set of videos:

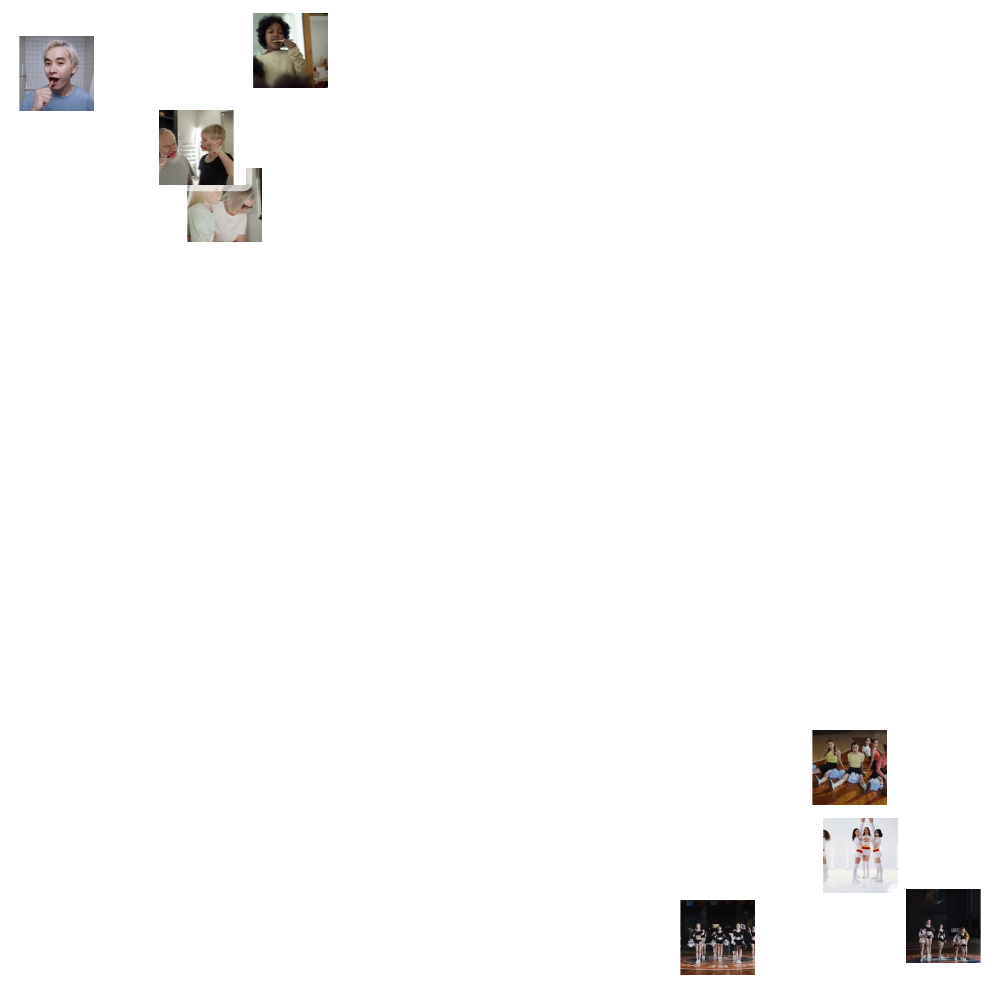

Visualize the features of a set of videos:

Transfer learning

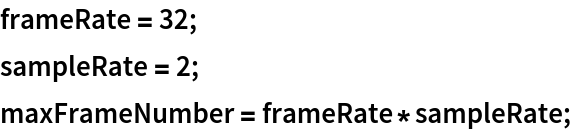

Use the pre-trained model to build a classifier for telling apart images from two action classes not present in the dataset. Create a test set and a training set:

Remove the linear and the softmax layers from the pre-trained net:

Create a new net composed of the pre-trained net followed by a LinearLayer and a SoftmaxLayer:

Train on the dataset, freezing all the weights except for those in the "Linear" layer (use TargetDevice-> "GPU" for training on a GPU):

Perfect accuracy is obtained on the test set:

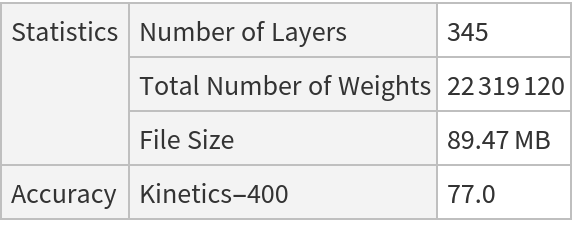

Net information

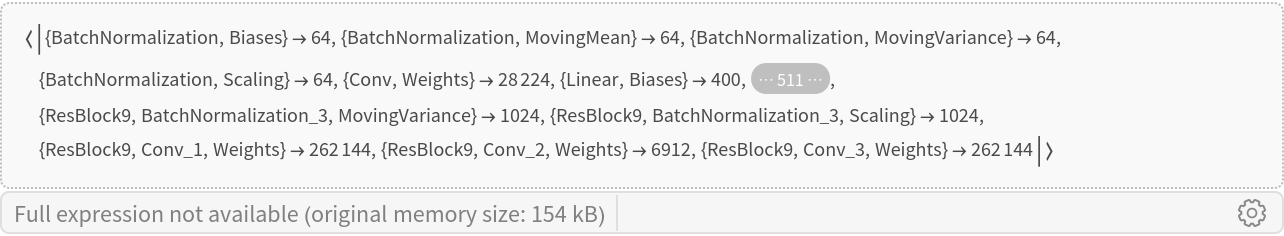

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

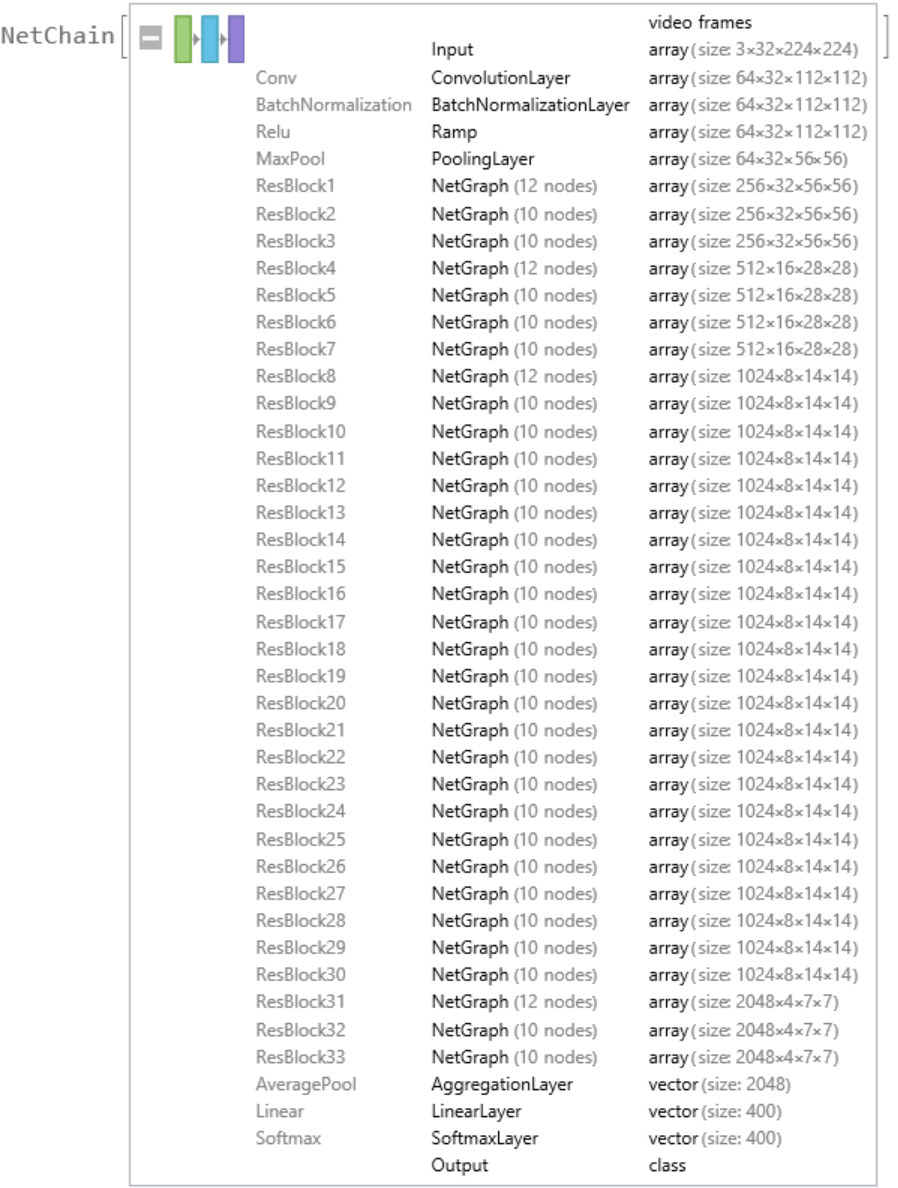

Display the summary graphic:

Resource History

Reference

![pred = NetModel[

"Channel-Separated Video Action Classification Net Trained on Kinetics-400 Data"][video]](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/52acfa6d6c4026e0.png)

![extractor = NetDrop[NetModel[

"Channel-Separated Video Action Classification Net Trained on Kinetics-400 Data"], -2]](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/091ffd68a35f5a21.png)

![FeatureSpacePlot[videos, FeatureExtractor -> (extractor[#] &), LabelingFunction -> (Placed[Thumbnail@VideoFrameList[#1, 1][[1]], Center] &), LabelingSize -> 50, ImageSize -> 500, Method -> "TSNE"]](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/13c0fbef85926d43.png)

![videos = <|

VideoTrim[ResourceData["Sample Video: Wild Ducks in the Park"], 10] -> "Wild Ducks in the Park", VideoTrim[ResourceData["Sample Video: Freezing Bubble"], 10] -> "Freezing Bubble"|>;](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/7412d8b96264e5b9.png)

![dataset = Join @@ KeyValueMap[

Table[VideoTrim[#1, {Quantity[i, "Frames"], Quantity[i + maxFrameNumber - 1, "Frames"]}] -> #2, {i, 1, Information[#1, "FrameCount"][[1]] - Mod[Information[#1, "FrameCount"][[1]], maxFrameNumber], Round[maxFrameNumber/4]}] &, videos];](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/483ee4b3da7cbaab.png)

![tempNet = NetDrop[NetModel[

"Channel-Separated Video Action Classification Net Trained on Kinetics-400 Data"], -2]](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/1efe3703ec7c7332.png)

![newNet = NetAppend[

tempNet, {"Linear" -> LinearLayer[2, "Input" -> {2048}], "SoftMax" -> SoftmaxLayer[]}, "Output" -> NetDecoder[{"Class", {"Wild Ducks in the Park", "Freezing Bubble"}}]]](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/2034f97bc0814aca.png)

![Information[

NetModel[

"Channel-Separated Video Action Classification Net Trained on Kinetics-400 Data"], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/4bac9dcdfdd85c78.png)

![Information[

NetModel[

"Channel-Separated Video Action Classification Net Trained on Kinetics-400 Data"], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/3dda00da8dc9874b.png)

![Information[

NetModel[

"Channel-Separated Video Action Classification Net Trained on Kinetics-400 Data"], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/22cdca31f30bb905.png)

![Information[

NetModel[

"Channel-Separated Video Action Classification Net Trained on Kinetics-400 Data"], "SummaryGraphic"]](https://www.wolframcloud.com/obj/resourcesystem/images/f8e/f8eddf03-d7e7-474e-bdca-a26c23282623/068bc8e2ad697f42.png)