Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data

Released in 2016, these models address pose and viewpoint variation in face recognition. Rather than transforming every face to a canonical frontal pose, as other approaches do, this family provides multiple pose-specific nets.

Number of models: 10

Examples

Resource retrieval

Get the pre-trained net:
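A minimal sketch of the retrieval call, assuming the resource name matches the page title. The first evaluation downloads the net, so it needs network access:

```wolfram
(* Load the default member of the net family; the result is cached locally after the first download. *)
net = NetModel["Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data"]
```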

NetModel parameters

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:
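One way to list the parameters is the "ParametersInformation" property of NetModel. This is a sketch; the names and allowed values it reports are whatever the resource defines and are not reproduced here:

```wolfram
(* Show each parameter of the family together with its default and allowed values. *)
NetModel["Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data", "ParametersInformation"]
```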

Pick a non-default net by specifying the parameters:
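Parameters are passed as rules in a list whose first element is the model name. The sketch below only illustrates the syntax: "Architecture" and "Pose" are the parameter names used by this page's evaluation function, and "VGG" is the architecture that function defaults to. To actually select a non-default net, substitute values reported by "ParametersInformation" (and add a "Pose" rule if needed):

```wolfram
modelName = "Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data";

(* "VGG" is used only because it is known to be an allowed value; replace it to pick another net. *)
NetModel[{modelName, "Architecture" -> "VGG"}]
```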

Pick a non-default uninitialized net:
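An uninitialized net can be requested with the "UninitializedEvaluationNet" property, which returns the architecture without trained weights. As a syntax sketch, the parameter value below is the "VGG" architecture used by this page's evaluation function; replace it with another allowed value to get a non-default net:

```wolfram
modelName = "Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data";

(* Same parameter specification as for a trained net, plus the property name as second argument. *)
NetModel[{modelName, "Architecture" -> "VGG"}, "UninitializedEvaluationNet"]
```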

Evaluation function

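Create an evaluation function that takes two facial images and a pose, and returns True if the images belong to the same person and False otherwise. The decision compares the correlation between the two feature vectors against a threshold (0.1 by default):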
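The definition is transcribed here from the image of the input cell on this page, with comments added. The comments reflect a reading of the code rather than documentation from the authors:

```wolfram
(* Returns True when the correlation between the two feature vectors reaches the threshold. *)
netevaluate[img1_, img2_, pose_, threshold_ : 0.1, architecture_ : "VGG"] := Block[
  {net, features},
  (* Select the pose-specific net for the requested architecture. *)
  net = NetModel[{"Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data",
     "Pose" -> pose, "Architecture" -> architecture}];
  (* Evaluate both images as one batch, giving one feature vector per image. *)
  features = net[{img1, img2}];
  (* Pearson correlation of the two vectors, compared against the threshold. *)
  Correlation @@ features >= threshold
]
```

A call has the form netevaluate[img1, img2, pose], where pose must be one of the allowed "Pose" values reported by "ParametersInformation".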

Basic usage

Use the evaluation function to predict whether two facial images show the same person:

Net information

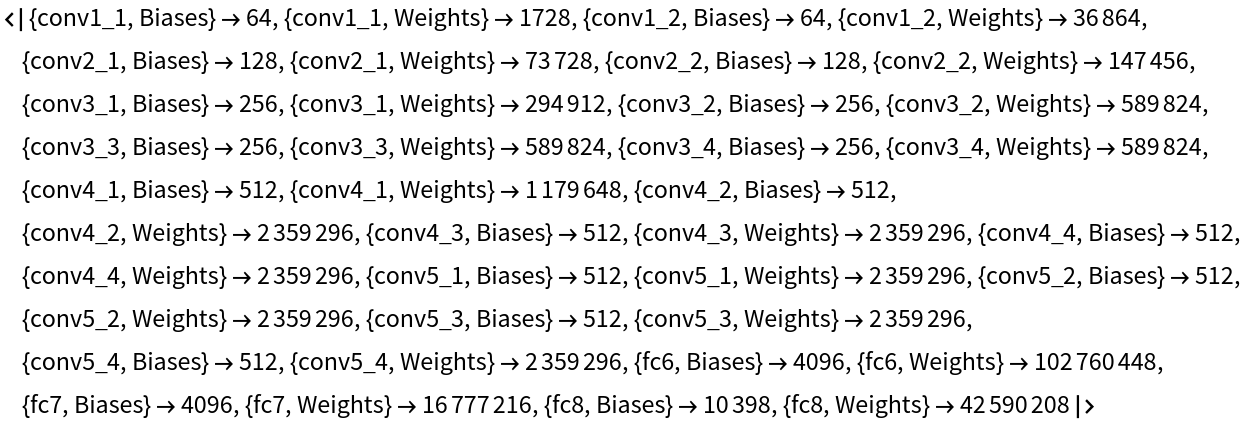

Inspect the number of parameters of all arrays in the net:
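The call below is transcribed from the image of the input cell on this page; it applies to the default net of the family:

```wolfram
(* Association from each array in the net to its element count. *)
Information[
 NetModel["Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data"],
 "ArraysElementCounts"]
```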

Obtain the total number of parameters:
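The call below is transcribed from the image of the input cell on this page; it applies to the default net of the family:

```wolfram
(* Sum of the element counts over all arrays in the net. *)
Information[
 NetModel["Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data"],
 "ArraysTotalElementCount"]
```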

Obtain the layer type counts:
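The call below is transcribed from the image of the input cell on this page; it applies to the default net of the family:

```wolfram
(* Association from each layer type to the number of such layers in the net. *)
Information[
 NetModel["Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data"],
 "LayerTypeCounts"]
```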

Export to MXNet

Export the net into a format that can be opened in MXNet:
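A minimal sketch of the export, assuming the temporary directory is an acceptable target and that net.json is the desired file name (both are illustrative choices):

```wolfram
(* Write the network definition as MXNet JSON; the path of the written file is returned. *)
jsonPath = Export[
  FileNameJoin[{$TemporaryDirectory, "net.json"}],
  NetModel["Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data"],
  "MXNet"]
```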

Export also creates a net.params file containing the parameters:
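The parameter file is written next to the JSON file. The sketch below assumes the net was exported to net.json in the temporary directory; adjust the directory if a different target was used:

```wolfram
(* Build the expected path of the parameter file that accompanies net.json. *)
paramPath = FileNameJoin[{$TemporaryDirectory, "net.params"}]
```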

Get the size of the parameter file:
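A sketch that again assumes the export went to the temporary directory. The existence check and the Missing value are illustrative additions so the cell does not fail when nothing has been exported yet:

```wolfram
paramPath = FileNameJoin[{$TemporaryDirectory, "net.params"}];

(* Size of the parameter file in bytes, or a Missing value if the export has not been run. *)
If[FileExistsQ[paramPath], FileByteCount[paramPath], Missing["NotExported"]]
```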

Requirements

Wolfram Language 12.0 (April 2019) or above

Reference

![netevaluate[img1_, img2_, pose_, threshold_ : 0.1, architecture_ : "VGG"] := Block[{net, features}, net = NetModel@{"Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data", "Pose" -> pose, "Architecture" -> architecture}; features = net@{img1, img2}; Correlation @@ features >= threshold]](https://www.wolframcloud.com/obj/resourcesystem/images/ece/ece2a2ff-bfc8-4d25-80ad-896c4ad7c90c/46cc56b13108c58a.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/d34c296c-5009-48d4-835b-2704bd767d51"]](https://www.wolframcloud.com/obj/resourcesystem/images/ece/ece2a2ff-bfc8-4d25-80ad-896c4ad7c90c/2d5bd5484eac596a.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/265a43b1-321d-4dc0-82cb-925be03dbb96"]](https://www.wolframcloud.com/obj/resourcesystem/images/ece/ece2a2ff-bfc8-4d25-80ad-896c4ad7c90c/2ad240986dbf07c2.png)

![Information[NetModel["Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data"], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/ece/ece2a2ff-bfc8-4d25-80ad-896c4ad7c90c/5b8f71f6e514daa1.png)

![Information[NetModel["Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data"], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/ece/ece2a2ff-bfc8-4d25-80ad-896c4ad7c90c/57730a9693872a33.png)

![Information[NetModel["Pose-Aware Face Recognition in the Wild Nets Trained on CASIA WebFace Data"], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/ece/ece2a2ff-bfc8-4d25-80ad-896c4ad7c90c/773fce3137e10a8c.png)