Resource retrieval

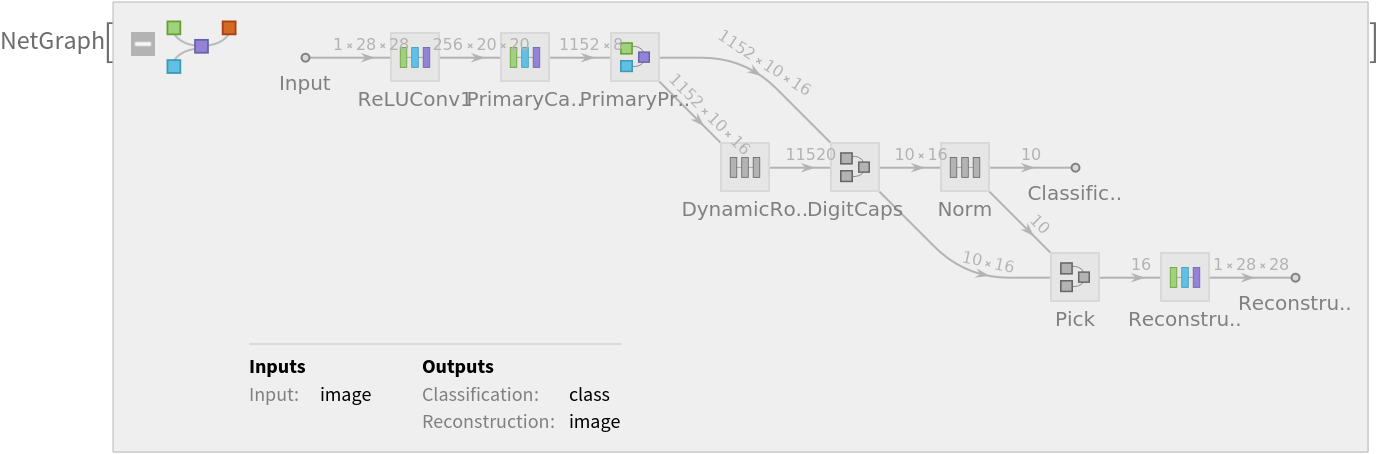

Retrieve the pre-trained net:
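
A minimal sketch of this step, using the model name that appears elsewhere on this page. The variable name `net` is only an illustrative choice:

```wolfram
(* Download the model on first use, then load it from the local cache *)
net = NetModel["CapsNet Trained on MNIST Data"]
```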

Basic usage

Apply the trained net to a set of inputs:

Give class probabilities for a single input:
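
The two calls above might look like the following sketch. It assumes the net has a single image input and a class decoder on its output (which the phrase "class probabilities" suggests), and it takes its example digits from the MNIST test data. The names `net`, `testData` and `inputs` are illustrative:

```wolfram
net = NetModel["CapsNet Trained on MNIST Data"];

(* The MNIST resource stores examples as image -> label rules *)
testData = ResourceData["MNIST", "TestData"];
inputs = Keys[Take[testData, 5]];

(* Apply the net to a list of inputs: one predicted class per image *)
net[inputs]

(* Ask for the per-class probabilities of a single input *)
net[First[inputs], "Probabilities"]
```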

Feature extraction

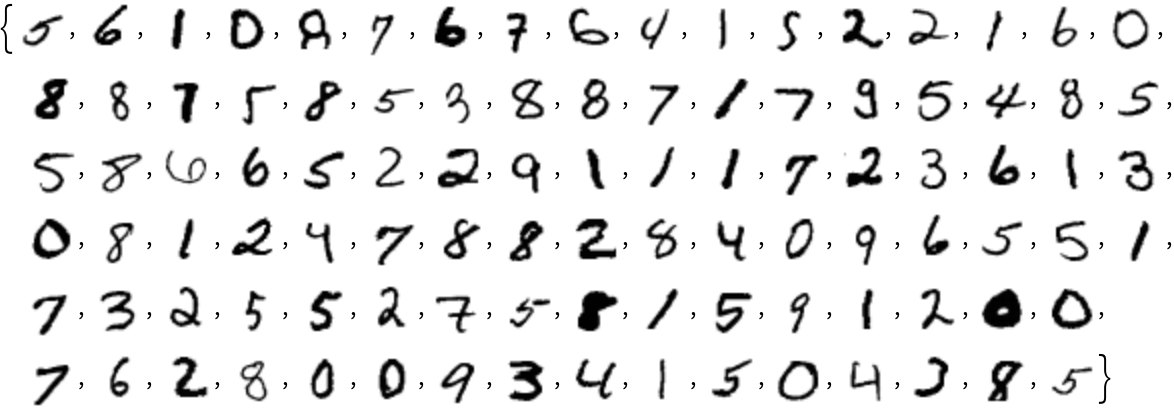

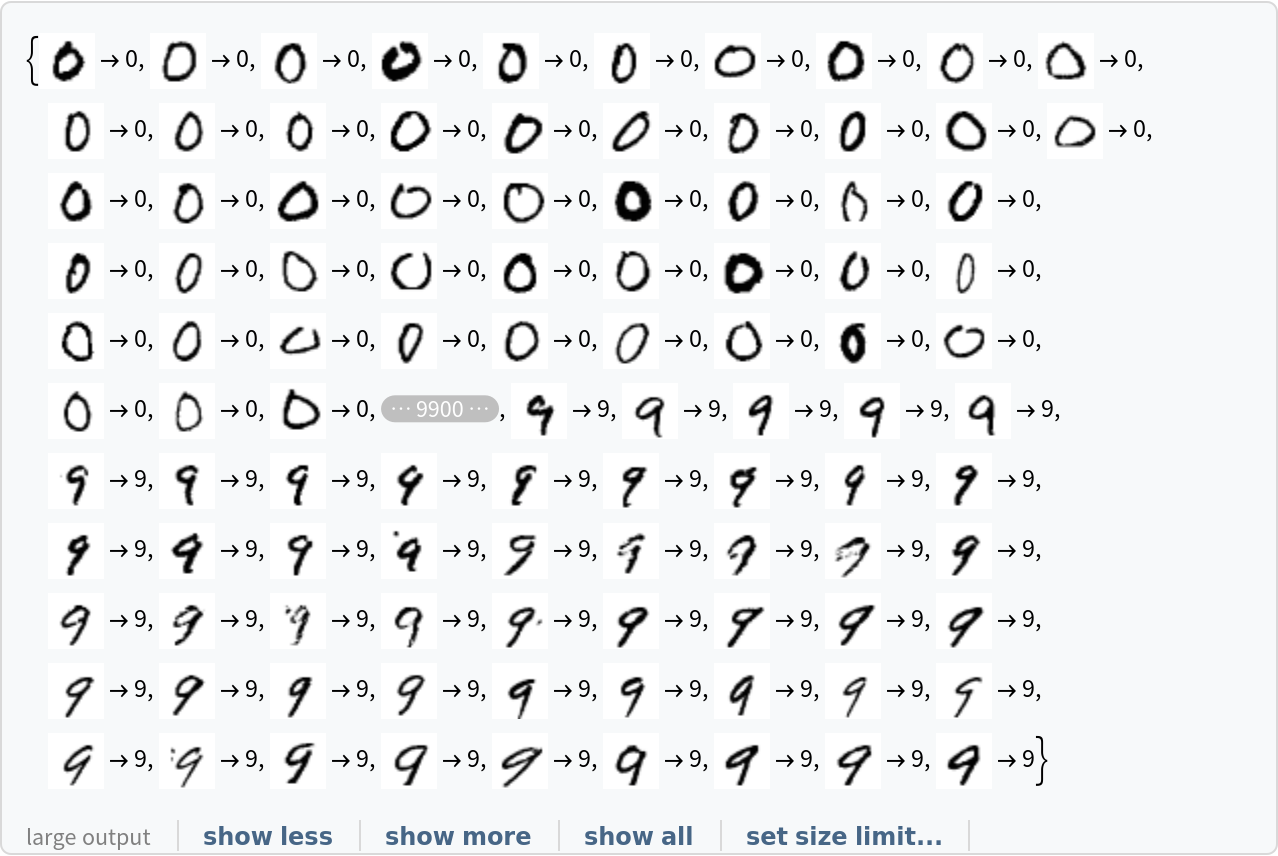

Create a subset of the MNIST dataset:
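
One way to build such a subset is sketched below. The subset size of 500 and the use of the test split are assumptions made for illustration, not values taken from this page:

```wolfram
(* Fix the seed so the same subset is drawn each time *)
SeedRandom[1234];

(* Draw 500 image -> label rules at random from the MNIST test data *)
sample = RandomSample[ResourceData["MNIST", "TestData"], 500];

(* Check how many examples of each digit were drawn *)
Counts[Values[sample]]
```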

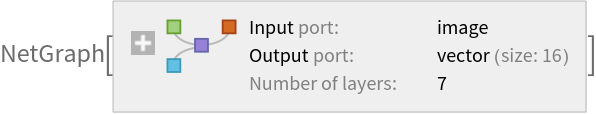

Remove the last linear layer of the net, which will be used as a feature extractor:

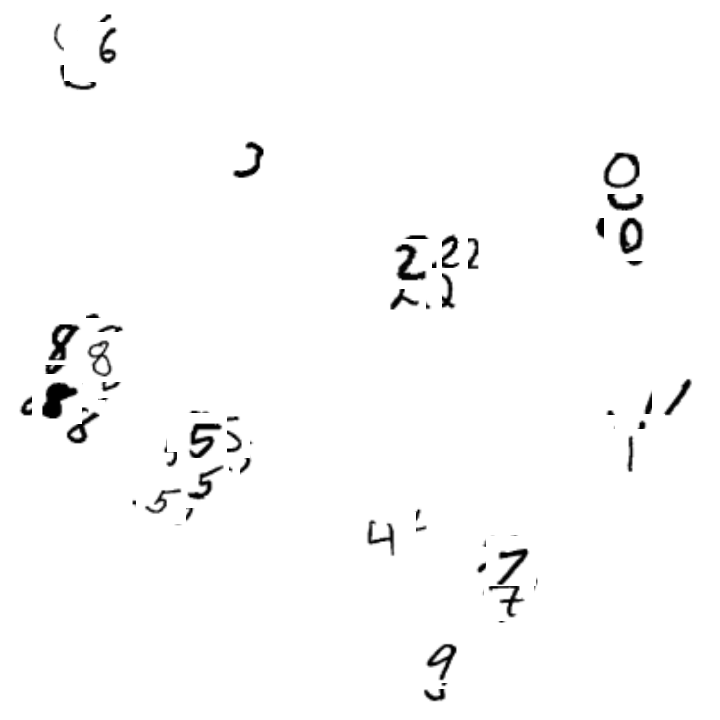

Visualize the features of a subset of the MNIST dataset:

Image generation

Extract the image reconstruction part:
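
The input cell recorded for this step on the page reads as follows. It pulls out the part named "Reconstruct" and attaches an image decoder to its output:

```wolfram
(* Extract the reconstruction subnet and decode its output as an image *)
reconstructor = NetReplacePart[
  NetExtract[NetModel["CapsNet Trained on MNIST Data"], "Reconstruct"],
  "Output" -> NetDecoder["Image"]
]
```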

Extract the DigitCaps feature vector for a given digit image:

Reconstruct the image from the feature vector:

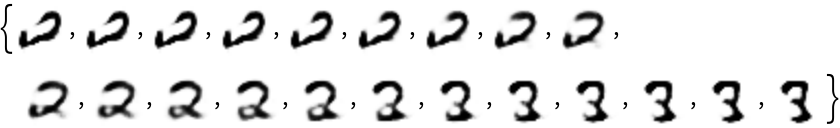

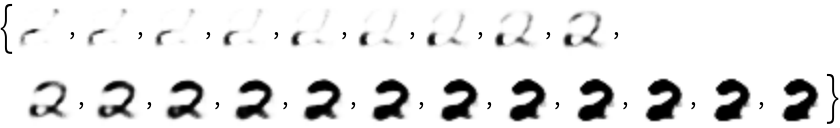

Experiment with changing the feature vector. Add a shift along a single coordinate at a time:
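
The perturbation itself can be sketched independently of the net. The example below uses a random 16-element stand-in vector and shifts in steps of 0.05 over the range -0.25 to 0.25. The vector length, the shift range and the helper `shiftAlong` are all assumptions for illustration; the actual feature array passed to the reconstruction part may have a different shape:

```wolfram
(* Stand-in for a real feature vector; replace with the extracted features *)
vec = RandomReal[{-1, 1}, 16];

(* Shifts to apply to one coordinate at a time *)
shifts = Range[-0.25, 0.25, 0.05];

(* Hypothetical helper: add d to coordinate i and leave the rest unchanged *)
shiftAlong[v_, i_, d_] := ReplacePart[v, i -> v[[i]] + d];

(* One row per coordinate, one column per shift value *)
variants = Table[shiftAlong[vec, i, d], {i, Length[vec]}, {d, shifts}];
Dimensions[variants]
```

Each perturbed vector would then be passed to the reconstruction net to see how that coordinate affects the generated digit.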

Training the uninitialized architecture

Retrieve the uninitialized training architecture:

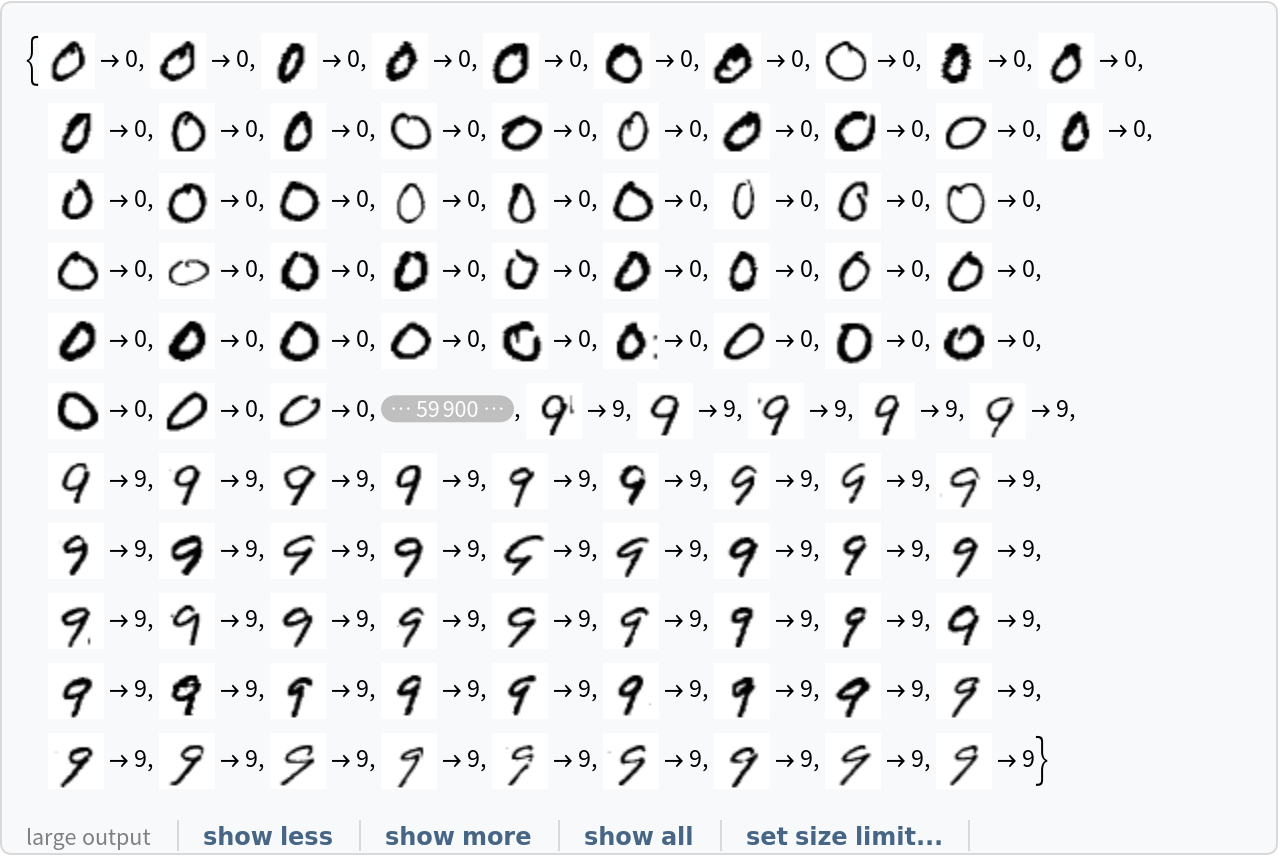

Retrieve the MNIST dataset:

Use the training dataset provided:

Use the test dataset provided:
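
A sketch of the dataset steps above. The names `trainSet` and `valSet` match those used in the training cell on this page; the intermediate variable `resource` is an illustrative choice. Whether the training net needs the data rearranged to match its port names is not shown here:

```wolfram
(* Retrieve the MNIST resource from the Wolfram Data Repository *)
resource = ResourceObject["MNIST"];

(* Training and test splits, each a list of image -> label rules *)
trainSet = ResourceData[resource, "TrainingData"];
valSet = ResourceData[resource, "TestData"];

(* Number of examples in each split *)
Length /@ {trainSet, valSet}
```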

Initialize the “W” matrices properly:
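
The input cell recorded for this step on the page reads as follows. It assumes `trainingNet` holds the uninitialized training architecture retrieved earlier, and it fills the prediction-vector array with small uniform random values:

```wolfram
(* Replace the "W" array with values drawn uniformly from [-0.005, 0.005] *)
trainingCapsNetInitialized = NetReplacePart[
  trainingNet,
  {"CapsNet", "PrimaryPredVects", 2, "Array"} ->
    RandomVariate[UniformDistribution[{-1, 1}*0.005], {1152, 10, 16, 8}]
]
```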

Train the net (if a GPU is available, setting TargetDevice -> "GPU" is recommended):
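
The input cell recorded for this step on the page reads as follows. It relies on `trainingCapsNetInitialized`, `trainSet` and `valSet` from the preceding steps, and weights the reconstruction loss at 0.392 relative to the classification loss:

```wolfram
trained = NetTrain[
  trainingCapsNetInitialized, trainSet,
  ValidationSet -> valSet,
  (* Combine both losses, with the reconstruction loss scaled down *)
  LossFunction -> {"ClassLoss" -> Scaled[1], "RecoLoss" -> Scaled[0.392]},
  MaxTrainingRounds -> 50,
  (* Switch to "GPU" if one is available *)
  TargetDevice -> "CPU"
]
```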

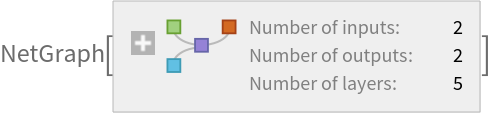

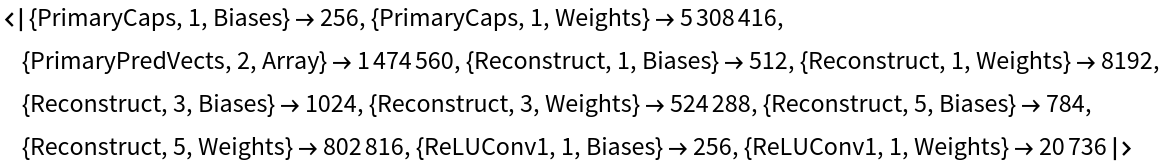

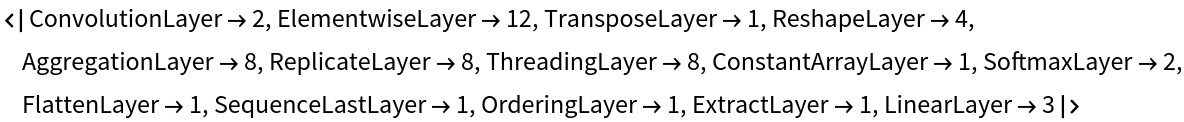

Net information

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

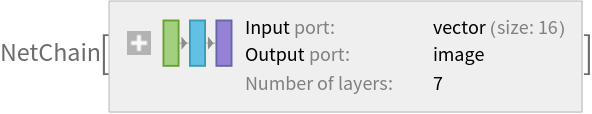

Display the summary graphic:
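
The four queries in this section can be sketched with `NetInformation`, assuming the pre-trained net is loaded as `net` (an illustrative name):

```wolfram
net = NetModel["CapsNet Trained on MNIST Data"];

(* Number of parameters in each array of the net *)
NetInformation[net, "ArraysElementCounts"]

(* Total number of parameters *)
NetInformation[net, "ArraysTotalElementCount"]

(* How many layers of each type the net contains *)
NetInformation[net, "LayerTypeCounts"]

(* Graphical summary of the architecture *)
NetInformation[net, "SummaryGraphic"]
```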

Export to MXNet

Export the net into a format that can be opened in MXNet:

Export also creates a net.params file containing parameters:

Get the size of the parameter file:

The size is similar to the byte count of the resource object:

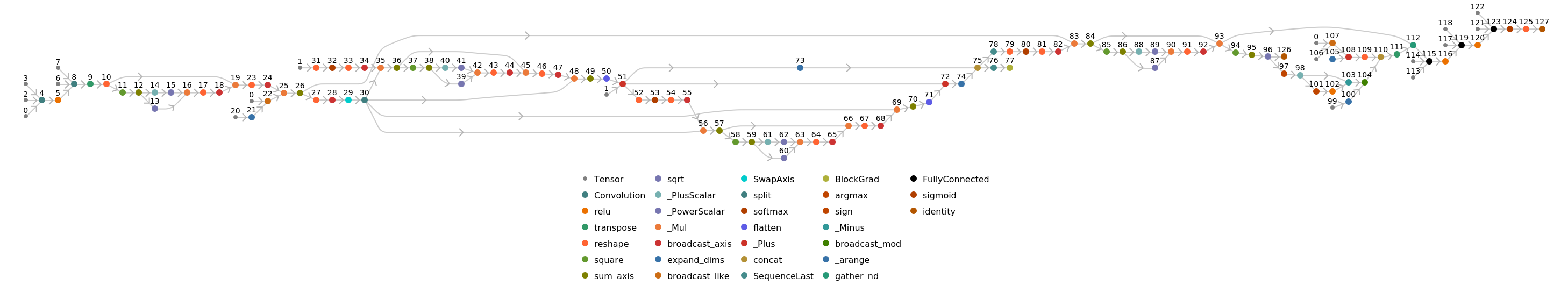

Represent the MXNet net as a graph:
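
The export steps in this section can be sketched as follows. The temporary-directory location and the names `jsonPath` and `paramPath` are illustrative choices, and MXNet export support may depend on the Wolfram Language version in use:

```wolfram
net = NetModel["CapsNet Trained on MNIST Data"];

(* Write the architecture to net.json; net.params is created alongside it *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"];
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}];

(* Size of the parameter file in bytes *)
FileByteCount[paramPath]

(* Byte count reported by the resource object, for comparison *)
ResourceObject["CapsNet Trained on MNIST Data"]["ByteCount"]

(* Plot the exported MXNet symbol as a node graph *)
Import[jsonPath, {"MXNet", "NodeGraphPlot"}]
```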

![reconstructor = NetReplacePart[NetExtract[NetModel["CapsNet Trained on MNIST Data"], "Reconstruct"], "Output" -> NetDecoder["Image"]]](https://www.wolframcloud.com/obj/resourcesystem/images/ebc/ebc8a2f1-bb8b-46de-9c35-2b7083e27916/547551f52ab16df6.png)

![trainingCapsNetInitialized = NetReplacePart[trainingNet, {"CapsNet", "PrimaryPredVects", 2, "Array"} -> RandomVariate[UniformDistribution[{-1, 1}*0.005], {1152, 10, 16, 8}]]](https://www.wolframcloud.com/obj/resourcesystem/images/ebc/ebc8a2f1-bb8b-46de-9c35-2b7083e27916/6423d673094b39c0.png)

![trained = NetTrain[trainingCapsNetInitialized, trainSet, ValidationSet -> valSet, LossFunction -> {"ClassLoss" -> Scaled[1], "RecoLoss" -> Scaled[0.392]}, MaxTrainingRounds -> 50, TargetDevice -> "CPU"]](https://www.wolframcloud.com/obj/resourcesystem/images/ebc/ebc8a2f1-bb8b-46de-9c35-2b7083e27916/009376e9d11215a1.png)