Pix2pix Street-Map-to-Photo Translation

Released in 2016, this model applies a general-purpose method for image-to-image translation based on conditional adversarial networks. Because the adversarial setup learns the loss function automatically, the same approach generalizes across a wide range of image translation tasks. The architecture aggregates features at multiple scales efficiently through skip connections with concatenation. This particular model was trained to generate a satellite photo from a street map.

Number of layers: 56

Parameter count: 54,419,459

Trained size: 218 MB

Examples

Resource retrieval

Get the pre-trained net:
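A minimal sketch of the retrieval step, assuming the resource is registered under this page's title (the name is an assumption and is not confirmed elsewhere on the page):

```wolfram
(* Assumption: the NetModel name matches the page title *)
net = NetModel["Pix2pix Street-Map-to-Photo Translation"]
```

The first call downloads the trained net (about 218 MB according to the figures above); later calls should reuse the local copy.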

Basic usage

Start with a street map:

Draw a satellite photo from a street map:
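One way this step might look is sketched below. It assumes that the first example-input cloud object referenced on this page holds the street map, that the net is registered under the page title, and that the net accepts an image and returns an image; none of these is confirmed by the text.

```wolfram
(* Assumption: this cloud object is the street-map example input *)
streetMap = CloudGet["https://www.wolframcloud.com/obj/d3c98920-5ed4-4aa3-ab0d-31984a34e913"];

(* Assumption: the NetModel name matches the page title *)
net = NetModel["Pix2pix Street-Map-to-Photo Translation"];

(* Assumption: the net maps an Image to an Image *)
prediction = net[streetMap]
```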

Evaluate accuracy

Overlay the street map and the prediction:
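A possible way to blend the two images is sketched here with `ImageCompose`. The input URL and the model name are assumptions carried over from this page's title and example-input reference, and the 50% opacity is an arbitrary illustrative choice.

```wolfram
(* Assumptions: example-input URL and model name as they appear on this page *)
streetMap = CloudGet["https://www.wolframcloud.com/obj/d3c98920-5ed4-4aa3-ab0d-31984a34e913"];
prediction = NetModel["Pix2pix Street-Map-to-Photo Translation"][streetMap];

(* Resize the map to the prediction's dimensions, then blend the prediction on top at 50% opacity *)
overlay = ImageCompose[
  ImageResize[streetMap, ImageDimensions[prediction]],
  {prediction, 0.5}
]
```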

Compare the generated satellite photo with the actual satellite photo:
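The comparison could be laid out side by side, as in the sketch below. It assumes the second example-input cloud object referenced on this page is the actual satellite photo for the same area, which the text does not confirm; the model name is likewise assumed from the page title.

```wolfram
(* Assumption: first cloud object is the street map, second is the real satellite photo *)
streetMap = CloudGet["https://www.wolframcloud.com/obj/d3c98920-5ed4-4aa3-ab0d-31984a34e913"];
actualPhoto = CloudGet["https://www.wolframcloud.com/obj/a676262c-04cb-4021-9fe7-f02045c18046"];

prediction = NetModel["Pix2pix Street-Map-to-Photo Translation"][streetMap];

(* Show the generated and the actual photo next to each other *)
comparison = Grid[{{"Generated", "Actual"}, {prediction, actualPhoto}}]
```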

Net information

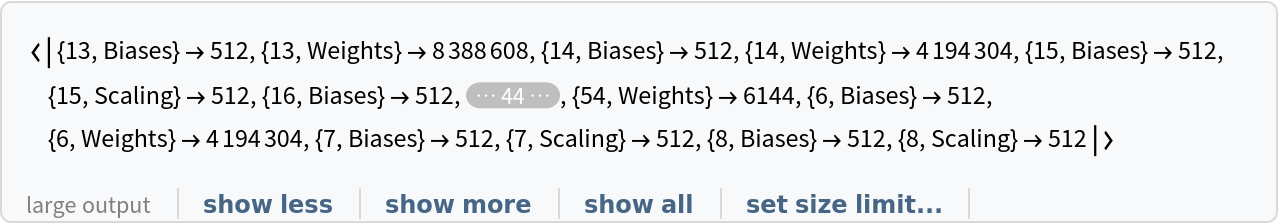

Inspect the number of parameters of all arrays in the net:
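A short sketch using `NetInformation`, again assuming the model name matches the page title:

```wolfram
(* Per-array element counts, keyed by array position in the net *)
arrayCounts = NetInformation[
  NetModel["Pix2pix Street-Map-to-Photo Translation"],
  "ArraysElementCounts"
]
```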

Obtain the total number of parameters:
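The total can be queried in the same way (model name assumed from the page title). If the name resolves to the net described here, the result should agree with the parameter count listed above.

```wolfram
(* Sum of the element counts over all arrays in the net *)
totalCount = NetInformation[
  NetModel["Pix2pix Street-Map-to-Photo Translation"],
  "ArraysTotalElementCount"
]
```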

Obtain the layer type counts:
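A sketch of the corresponding query, under the same naming assumption:

```wolfram
(* Number of layers of each type, e.g. convolution or batch normalization layers *)
layerCounts = NetInformation[
  NetModel["Pix2pix Street-Map-to-Photo Translation"],
  "LayerTypeCounts"
]
```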

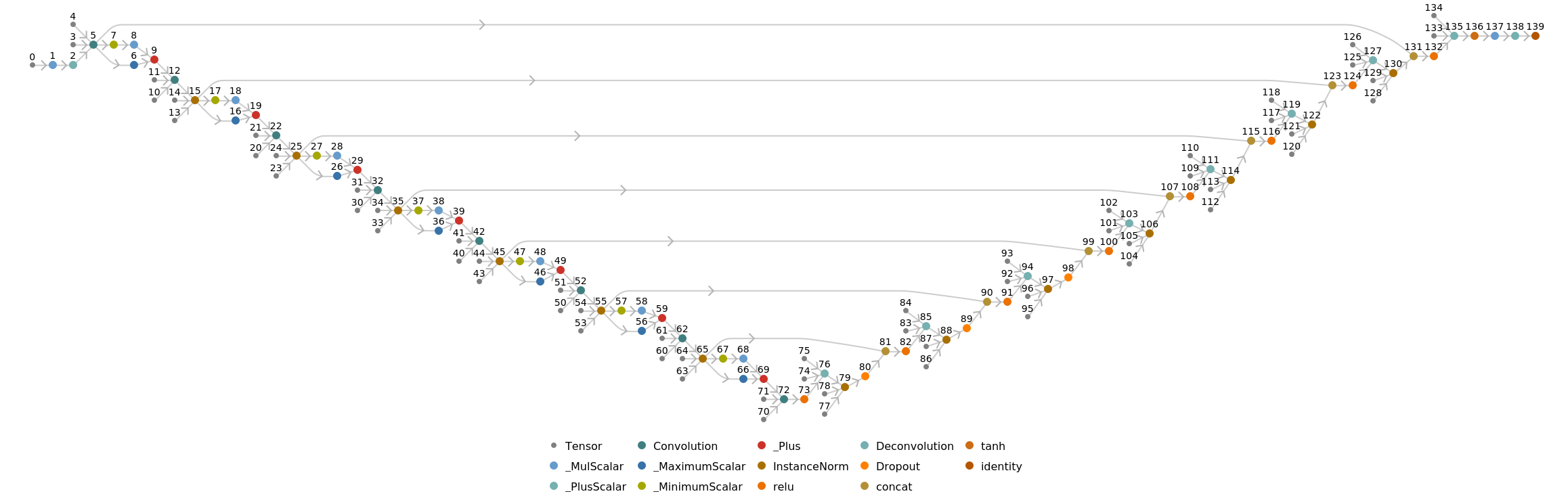

Display the summary graphic:
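The summary graphic is another `NetInformation` property; the model name below is assumed from the page title:

```wolfram
(* Compact diagram of the net's structure *)
NetInformation[
  NetModel["Pix2pix Street-Map-to-Photo Translation"],
  "SummaryGraphic"
]
```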

Export to MXNet

Export the net into a format that can be opened in MXNet:
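A minimal export sketch follows. The temporary-directory location and the `jsonPath` variable name are illustrative choices, and the model name is assumed from the page title.

```wolfram
(* Assumption: the NetModel name matches the page title *)
net = NetModel["Pix2pix Street-Map-to-Photo Translation"];

(* Write the network definition as MXNet JSON into the temporary directory *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"]
```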

Export also creates a net.params file containing parameters:

Get the size of the parameter file:
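The following sketch repeats the export so that it stands on its own, then measures the parameter file. It assumes the file is written next to the JSON file under the name net.params, as described above; the variable names are illustrative.

```wolfram
(* Export as MXNet; the model name is assumed from the page title *)
jsonPath = Export[
  FileNameJoin[{$TemporaryDirectory, "net.json"}],
  NetModel["Pix2pix Street-Map-to-Photo Translation"],
  "MXNet"
];

(* Assumption: the parameter file sits beside the JSON file as net.params *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}];

(* Size of the parameter file in bytes *)
paramBytes = FileByteCount[paramPath]
```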

The size is similar to the byte count of the resource object:
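The resource object's byte count might be read as sketched below, assuming the resource can be looked up by the page title and exposes a "ByteCount" property:

```wolfram
(* Assumption: the resource name matches the page title *)
resourceBytes = ResourceObject["Pix2pix Street-Map-to-Photo Translation"]["ByteCount"]
```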

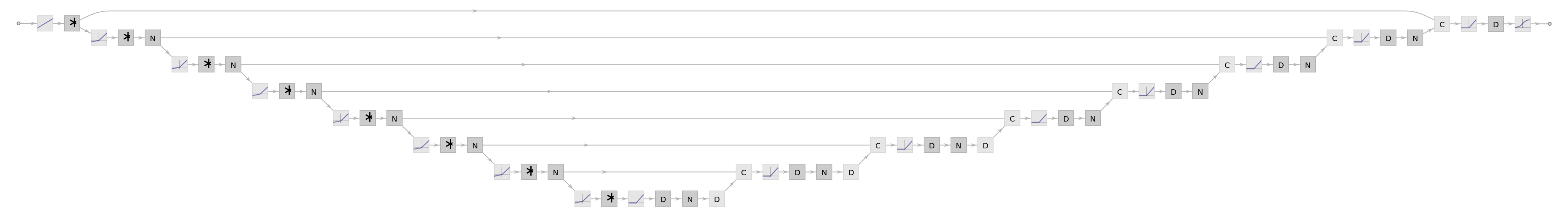

Represent the MXNet net as a graph:
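A sketch of the graph view, which re-exports the net so that it runs on its own. The path and variable names are illustrative, and the model name is assumed from the page title.

```wolfram
(* Export the net definition as MXNet JSON *)
jsonPath = Export[
  FileNameJoin[{$TemporaryDirectory, "net.json"}],
  NetModel["Pix2pix Street-Map-to-Photo Translation"],
  "MXNet"
];

(* Import the MXNet definition back as a plot of its node graph *)
Import[jsonPath, {"MXNet", "NodeGraphPlot"}]
```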

Requirements

Wolfram Language 11.2 (September 2017) or above

Resource History

Reference

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/d3c98920-5ed4-4aa3-ab0d-31984a34e913"]](https://www.wolframcloud.com/obj/resourcesystem/images/e38/e3859dc9-a7a2-47d2-99bf-355663dda194/71bec02a7ec3737c.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/a676262c-04cb-4021-9fe7-f02045c18046"]](https://www.wolframcloud.com/obj/resourcesystem/images/e38/e3859dc9-a7a2-47d2-99bf-355663dda194/0ac50a56505dbb4e.png)