Resource retrieval

Get the pre-trained net:
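A minimal sketch of this step, assuming the standard `NetModel` call with the model name used throughout this page. The variable name `net` is illustrative only:

```wolfram
(* Download (on first use) and load the default pre-trained MiDaS V2.1 net *)
net = NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"]
```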

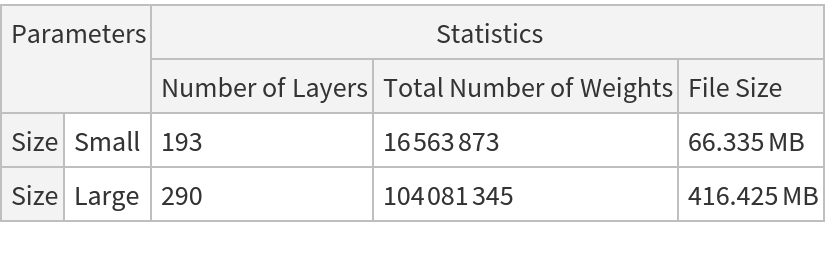

NetModel parameters

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:

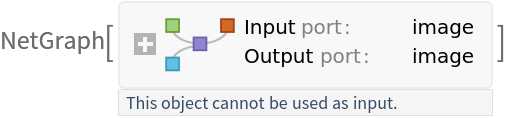

Pick a non-default net by specifying the parameters:
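A sketch assuming the `"Size" -> "Large"` combination, which is the one this page uses for its uninitialized net. Other valid values are whatever "ParametersInformation" lists. The name `largeNet` is hypothetical:

```wolfram
(* Select a specific family member by its parameter combination *)
largeNet = NetModel[{"MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets", "Size" -> "Large"}]
```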

Pick a non-default uninitialized net:

Basic usage

Define a test image:

Obtain the depth map of an image:
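A minimal sketch of this step. Because the page's own test image is loaded from a cloud object, this example swaps in a built-in image from `ExampleData` as a stand-in (an assumption). The names `testImage` and `depthMap` are illustrative:

```wolfram
(* Stand-in test image (assumption): a built-in example image *)
testImage = ExampleData[{"TestImage", "House"}];

(* The net's NetEncoder resizes the input; the output is a depth-map image *)
depthMap = NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"][testImage];

(* Inspect the size of the depth map produced by the default net *)
ImageDimensions[depthMap]
```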

Show the depth map:
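One way to display it, assuming `depthMap` from the previous step. `ImageAdjust` stretches the values over the full gray range so that relative depth differences are easy to see. This is a presentation choice, not necessarily the page's own:

```wolfram
(* Rescale the depth values to [0, 1] for display *)
ImageAdjust[depthMap]
```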

Visualize a 3D model

Get an image:

Obtain the depth map:

Visualize a 3D model using the depth map:

Adapt to any size

The net resizes the input image to 256x256 pixels and produces a depth map of the same size:

The recommended way to obtain a depth map with the same dimensions as the input image is to resample the depth map after the net evaluation. Get an image:

Obtain the depth map and resize it to match the original image dimensions:

Now modify the net, changing the image size in the NetEncoder. The new net natively produces a depth map of the original image's size:

Obtain the depth map from the new net and visualize it:
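A sketch of this step that reuses `img` and `resizedNet` from the cells for this example. The name `time2` is hypothetical, chosen to pair with `time1` from the first pipeline. `depthMap2` is the name the comparison collage expects:

```wolfram
(* Evaluate the full-resolution net, timing it for the comparison *)
{time2, depthMap2} = RepeatedTiming[resizedNet[img]];

(* The output should already match the input image dimensions *)
ImageDimensions[depthMap2] === ImageDimensions[img]

(* Rescale the depth values for display *)
ImageAdjust[depthMap2]
```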

Compare the results. The depth map obtained by resizing the net output (top-right corner, depthMap1) predicts the depth of the roof lamp and the carpet more accurately, but it is less accurate for the background furniture:

The first pipeline is also faster:
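A minimal way to check this, assuming `time1` from the resize-afterwards pipeline and a hypothetical `time2` holding the timing of the resized net (both in seconds, as returned by `RepeatedTiming`):

```wolfram
(* A ratio above 1 means the resize-afterwards pipeline was faster *)
time2/time1
```

Timings depend on hardware and on the input image size, so the exact ratio will vary.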

Net information

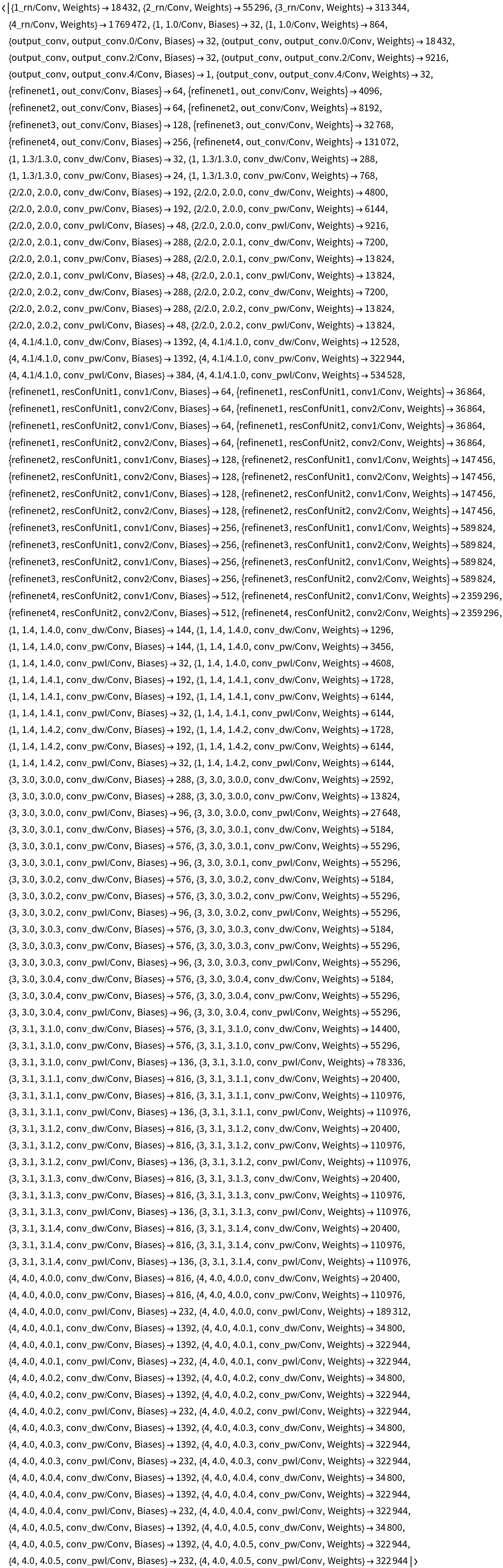

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

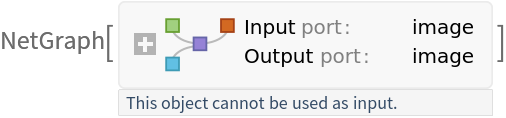

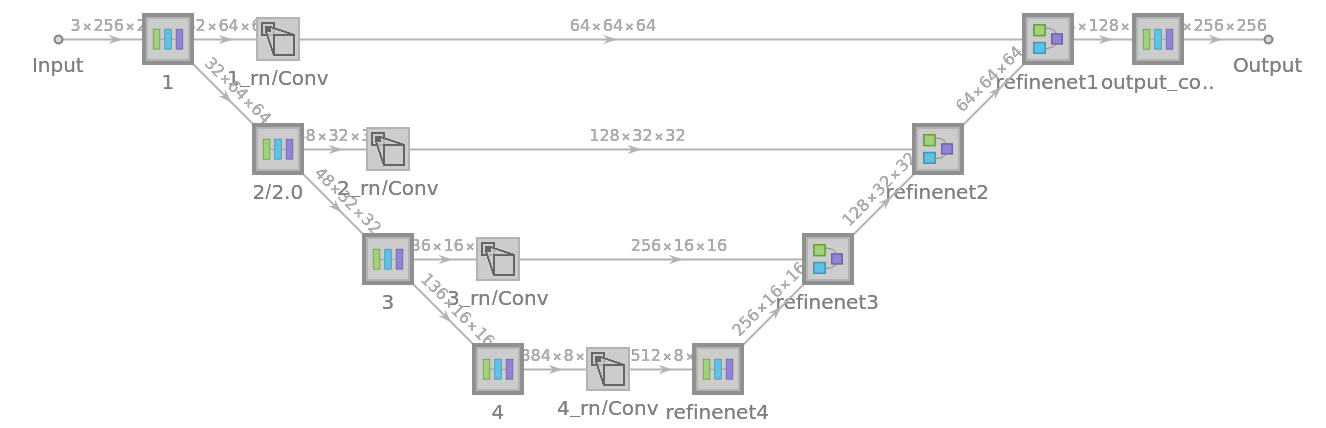

Display the summary graphic:

Export to ONNX

Export the net to the ONNX format:

Get the size of the ONNX file:
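A sketch assuming `onnxFile` holds the path returned by the `Export` call for this section:

```wolfram
(* Size of the exported ONNX file on disk, in bytes *)
FileByteCount[onnxFile]
```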

The size is similar to the byte count of the resource object:
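The page does not show which property it compares against. As an assumption, this sketch uses `ByteCount` on the loaded net as a proxy for the resource's byte count, which may differ somewhat from the figure stored in the resource metadata:

```wolfram
(* In-memory size of the loaded net (proxy for the resource byte count) *)
ByteCount[NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"]]
```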

Check some metadata of the ONNX model:
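A sketch assuming the ONNX importer exposes the "OperatorSetVersion" and "IRVersion" elements. If your version differs, `Import[onnxFile, "Elements"]` lists what is available. The names `opsetVersion` and `irVersion` are illustrative:

```wolfram
(* Operator-set and IR versions recorded in the exported file *)
{opsetVersion, irVersion} = {Import[onnxFile, "OperatorSetVersion"], Import[onnxFile, "IRVersion"]}
```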

Import the model back into the Wolfram Language. The NetEncoder and NetDecoder will be absent because ONNX does not support them:
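A minimal sketch, assuming `onnxFile` from the export step. The name `importedNet` is hypothetical:

```wolfram
(* Re-import the exported graph; without the image NetEncoder and NetDecoder,
   the imported net works on numeric arrays rather than images *)
importedNet = Import[onnxFile]
```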

![NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets", "ParametersInformation"]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/3128684b57dff4c0.png)

![NetModel[{"MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets", "Size" -> "Large"}, "UninitializedEvaluationNet"]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/149d3d7bd3006a8e.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/4bd7b1eb-424d-41d8-b189-dcf0e584f339"]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/6dd40f6358be37b8.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/fa4013e8-8644-41d9-bdd8-4f8a21785852"]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/428f603db58c330e.png)

![depthMap = ImageResize[NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"]@img, ImageDimensions@img];](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/7d87c2f9a14e4750.png)

![ListPlot3D[ImageData[depthMap], PlotStyle -> Texture[ImageReflect@img], PlotTheme -> {"Minimal", "NoAxes"}, ViewPoint -> {0.002, 0.8, 1.2}]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/04eb49c43bc4d1d3.png)

![NetExtract[NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"], {"Input", "ImageSize"}]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/278e23e30043b351.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/19297e82-8fa0-4b9d-b0c8-5163e68eb120"]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/75feb46506635f54.png)

![{time1, depthMap1} = RepeatedTiming@ImageResize[NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"][img], imgDims, Resampling -> "Cubic"];](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/70cb7f186ac70329.png)

![resizedNet = NetReplacePart[NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"], {"Input", "ImageSize"} -> imgDims]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/435b6639fc339452.png)

![ImageCollage[{0.8 -> img, 0.2 -> depthMap1, 0.2 -> depthMap2}]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/4d83a91ecbefcab1.png)

![Information[NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/69dfa3339b38e3fe.png)

![Information[NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/4295f3cc31e6820d.png)

![Information[NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/5b3675f545a83de2.png)

![Information[NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"], "SummaryGraphic"]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/172c5e38a396e0e3.png)

![onnxFile = Export[FileNameJoin[{$TemporaryDirectory, "net.onnx"}], NetModel["MiDaS V2.1 Depth Perception Nets Trained on Multiple-Datasets"]]](https://www.wolframcloud.com/obj/resourcesystem/images/d99/d990eb12-b645-498f-bbcb-601a25e0d8c9/541138cb5dcd39f9.png)