StarGAN Trained on CelebA Data

Released in 2017, StarGAN performs multi-domain image-to-image translation. StarGAN is adapted from CycleGAN. It uses the same generator architecture and a similar objective function, but it does not need a separate generator for each image translation task. Instead, a single generator handles all translations.
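This step makes the "single generator for all translations" idea concrete. The StarGAN paper feeds the generator the input image together with a target domain label. The label is spatially replicated and concatenated with the image as extra input channels. The Python sketch below shows only that input-construction step, using nested lists. The function name `condition_on_label`, the tiny 2x2 image and the 5-entry label are illustrative assumptions. They are not taken from the Wolfram model.

```python
from typing import List

# An image is stored channel-first as [channel][row][col].
Image = List[List[List[float]]]


def condition_on_label(image: Image, label: List[float]) -> Image:
    """Append one constant plane per label entry to a channel-first image.

    Illustrates tiling the target-domain label over the spatial grid and
    concatenating it with the image channels, so one generator can be told
    which translation to perform.
    """
    height = len(image[0])
    width = len(image[0][0])
    label_planes = [
        [[value] * width for _ in range(height)]  # fresh rows, no aliasing
        for value in label
    ]
    return image + label_planes


# Hypothetical 3-channel 2x2 image and a 5-entry target label.
rgb = [[[0.1, 0.2], [0.3, 0.4]] for _ in range(3)]
target = [0.0, 1.0, 0.0, 1.0, 1.0]
conditioned = condition_on_label(rgb, target)
print(len(conditioned))  # 8 channels: 3 image + 5 label
```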

Examples

Resource retrieval

Get the pre-trained net:

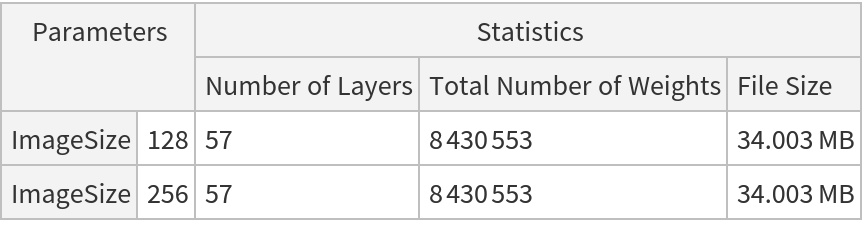

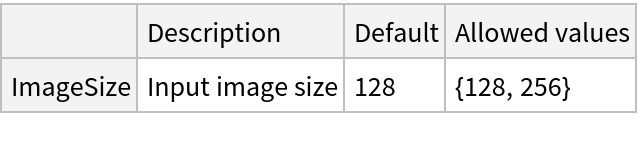

NetModel parameters

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:

Pick a non-default net by specifying the parameters:

Pick a non-default uninitialized net:

Basic usage

Evaluate a net on a photo:
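Evaluating the net requires a target attribute vector for the photo. In the authors' reference implementation, CelebA labels are binary attribute vectors. Selecting a hair color sets that entry to 1 and the other hair colors to 0. Any other attribute, such as Male or Young, is flipped. The Python sketch below reproduces that rule. The attribute order is the reference code's default and is only an assumption about how the Wolfram net orders its inputs. `target_label` is a hypothetical helper name.

```python
from typing import List

# Assumed attribute order (the default in the authors' reference code).
ATTRIBUTES = ["Black_Hair", "Blond_Hair", "Brown_Hair", "Male", "Young"]
HAIR_COLORS = {"Black_Hair", "Blond_Hair", "Brown_Hair", "Gray_Hair"}


def target_label(source: List[int], attribute: str) -> List[int]:
    """Return the label asking the generator to change one attribute."""
    label = list(source)
    i = ATTRIBUTES.index(attribute)
    if attribute in HAIR_COLORS:
        # Hair colors are mutually exclusive: turn the chosen one on
        # and every other hair color off.
        for j, name in enumerate(ATTRIBUTES):
            if name in HAIR_COLORS:
                label[j] = 1 if j == i else 0
    else:
        # Binary attributes such as Male or Young are simply flipped.
        label[i] = 1 - label[i]
    return label


# Hypothetical source labels: black hair, not male, young.
source = [1, 0, 0, 0, 1]
print(target_label(source, "Blond_Hair"))  # [0, 1, 0, 0, 1]
print(target_label(source, "Male"))        # [1, 0, 0, 1, 1]
```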

Attribute interpolation

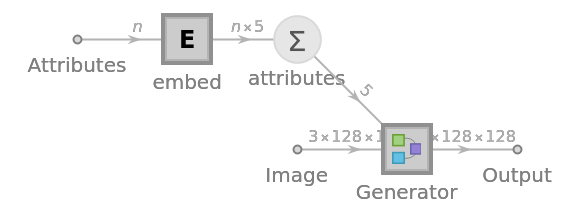

Remove the class encoder from the net:

Instead of using a one-hot representation for the class attributes, allow continuous inputs:
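Once the net accepts a real-valued attribute vector, intermediate values can be fed in to morph gradually between two attribute settings. Below is a minimal Python sketch, assuming plain linear interpolation between two label vectors. The helper name, step count and vectors are hypothetical.

```python
from typing import List


def interpolate_labels(start: List[float], end: List[float], steps: int) -> List[List[float]]:
    """Return `steps` evenly spaced vectors from `start` to `end`, inclusive."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    frames = []
    for k in range(steps):
        t = k / (steps - 1)  # 0.0 at the first frame, 1.0 at the last
        frames.append([(1 - t) * a + t * b for a, b in zip(start, end)])
    return frames


# Hypothetical: move gradually from black hair to blond hair.
black = [1.0, 0.0, 0.0, 0.0, 1.0]
blond = [0.0, 1.0, 0.0, 0.0, 1.0]
for frame in interpolate_labels(black, blond, 5):
    print(frame)
```

Each frame would be passed to the net together with the same photo to produce one image of the interpolation sequence.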

Net information

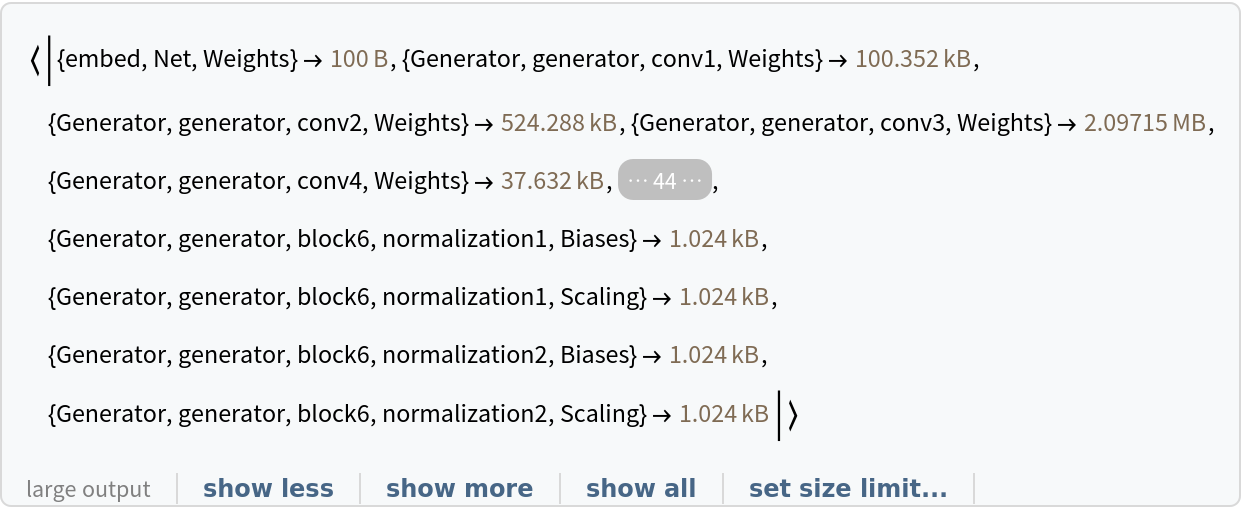

Inspect the sizes of all arrays in the net:

Obtain the total number of parameters:
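The total parameter count is the sum of the element counts of every learned array. Each array's element count is the product of its dimensions. The Python sketch below shows that arithmetic. The array names and shapes are hypothetical and are not read from the StarGAN net.

```python
from math import prod
from typing import Dict, Tuple


def total_parameters(array_shapes: Dict[str, Tuple[int, ...]]) -> int:
    """Sum the element counts of all learned arrays."""
    return sum(prod(shape) for shape in array_shapes.values())


# Hypothetical arrays: a 7x7 convolution (8 input channels -> 64 output,
# no bias) followed by a normalization layer with a learned scale and bias.
shapes = {
    "conv/Weights": (64, 8, 7, 7),
    "norm/Scaling": (64,),
    "norm/Biases": (64,),
}
print(total_parameters(shapes))  # 25216
```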

Obtain the layer type counts:
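Layer type counts tally how many layers of each kind appear in the net. The Python illustration below uses `collections.Counter`. The layer list is hypothetical and is not extracted from this model. It loosely echoes the building blocks the StarGAN paper describes for the generator: convolution, instance normalization, ReLU, transposed convolution and tanh.

```python
from collections import Counter

# Hypothetical layer sequence; the real net's layers differ.
layers = [
    "Convolution", "InstanceNorm", "ReLU",
    "Convolution", "InstanceNorm", "ReLU",
    "TransposedConvolution", "InstanceNorm", "ReLU",
    "Convolution", "Tanh",
]
counts = Counter(layers)
print(dict(counts))
```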

Display the summary graphic:

Export to ONNX

Export the net to the ONNX format:

Get the size of the ONNX file:

The size is similar to the byte count of the resource object:
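The two sizes agree because both are dominated by the stored weights. Assuming the weights are 32-bit floats, each parameter takes 4 bytes, so either size should be roughly 4 × (parameter count) plus some overhead for graph structure and metadata. Below is a Python sketch of that estimate. The float32 storage and the 10-million-parameter figure are assumptions for illustration.

```python
BYTES_PER_FLOAT32 = 4


def estimated_weight_bytes(parameter_count: int) -> int:
    """Approximate storage for float32 weights, excluding file overhead."""
    return parameter_count * BYTES_PER_FLOAT32


def megabytes(n_bytes: int) -> float:
    """Convert bytes to decimal megabytes."""
    return n_bytes / 1_000_000


# Hypothetical: a net with 10 million parameters.
size = estimated_weight_bytes(10_000_000)
print(f"{megabytes(size):.1f} MB")  # 40.0 MB
```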

Check some metadata of the ONNX model:

Import the model back into the Wolfram Language. However, the NetEncoder and NetDecoder will be absent because they are not supported by ONNX:
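The imported net has no NetEncoder or NetDecoder, so images must be converted to and from arrays by hand. The authors' reference code normalizes RGB values with mean 0.5 and standard deviation 0.5, which maps [0, 1] to [-1, 1], and its generator ends in tanh. The sketch below assumes the exported net uses the same convention, which is not confirmed here. It shows the per-channel conversion in Python. `encode_pixel` and `decode_pixel` are hypothetical helper names.

```python
def encode_pixel(value: int) -> float:
    """Map an 8-bit channel value (0..255) to the range [-1, 1]."""
    return (value / 255.0 - 0.5) / 0.5


def decode_pixel(value: float) -> int:
    """Map a generator output in [-1, 1] back to 0..255, clamping overshoot."""
    unit = min(max((value + 1.0) / 2.0, 0.0), 1.0)
    return round(unit * 255)


row = [0, 128, 255]
encoded = [encode_pixel(v) for v in row]
decoded = [decode_pixel(v) for v in encoded]
print(decoded)  # [0, 128, 255]
```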


Reference

- Y. Choi, M. Choi, M. Kim, J.-W. Ha, S. Kim, J. Choo, "StarGAN: Unified Generative Adversarial Networks for Multi-domain Image-to-Image Translation," arXiv:1711.09020 (2017)
- Available from: https://github.com/yunjey/stargan

Rights: MIT License

![NetModel["StarGAN Trained on CelebA Data"][<|"Image" -> \!\(\*

GraphicsBox[

TagBox[RasterBox[CompressedData["


tagO1K4K0ECGG1UFaud5qiY7KsI8NIv89WujrAq91PO8lL10uL20BeKt5cNN

QWrErSPBKszJzMbKxM7GzMzCwsLEEGAisdFXc/1+iQhC8OsJyP/Hmzfai9fq

U183pL5Cxi+Uhw5neUwXB6/UxC9URE8VBY/k+vSmuPQk2PUm2Y1kuPQn2fXE

WQ0m24+lOs7meS2XBW6gYrfRqQdtsLPegouBMvxoNRnAn6n7M/+q4/7yw77y

3c7izeb8aGt1DaFnwcaiPro8iAizEi95ZJhWY7RRgbtsjqssqLas5DkUBZlc

VLm8tXlc1NljLEWKArSLA/VgHqotmX4rQ61HBwfQoXzU6//J//85/6/FL+Hr

db3Qjb2gCqBSaZ8o2GsC9pGC+/094Y+3598dzN696iJOwm/Xh8YroloTbeqj

TJAReqgIo6oAnXwvtURb6VRH5bJgY0SMdaG3ZoGPWoSZcKCBYJKDQrKDbJiZ

hIU8hzw/CxAfNjYmYABGJhYjGfZcT92D8Rb6zjzu5djVytDpZMu7rvJVZOrL

2vi1mtgVePRsWdhyTTywyDI8arUqark8crk8bK0i9B0y6rgl/awt+7wj56Ij

B9eVje2AnbVnYruzz3ryLvqLr4Yr8WPVxIla4DOUKSQV0p8a7IvKg56S7a7i

7bbsjnRPFQEmOyVuHz3+ID0+RKhhsbsMJsYYEapT5KWY4yYHc5G1VGBXE2Zw

VOX00OJ1VeeMthDO81YvCTSCuaug4u1fDrceH+5fXBFwUPLz99/8/lP+X7f6

ginwZ/7QOZ90KvUDGXtHJX6kXv7hA/H39KP7t0M3a530xYabV/2jxSHdaQ4N

0aZVgRp1kcalQBJ9NRNspdOcVcpDTZGxNkU+WkW+GuAnocaiKY5KILWOs5Vz

VufXFmfjY2NkY2YE3v+cgUlTiivKTKzQ3/RsvpO2Pnm5NHA6077dV/sanfu6

Pv01Iv4NKuF1XdJ6Q9pbDOxNfeIGKvFdQ/JWY8JeU9Jxa9plVwF5sPx6pPpu

AnE7Xk0eKiMPl9PGqijj1TSgOVMo2lOpC41pUHPVXo1Vnw2W73UVrjdnvUYl

1MfYKfIyuGkLOqtyAv6oCONSL/mWeNNyP5VyP9USP9VMJxkbRTYNMUY7ZU4n

NU5XdY5QY16Ym1Khv0GSvRxIQV+OdOztbGFxBBy0C+nvL0H8Y/5fLz3/ev/U

BfSB/PTOnUKnkO6I59c0yhcaJD7/Rt2/Xe+jrrSfj8GJSx3TZaH9mc4oiLwy

OtYcHqhb4AVyTrkcL52qCEt0slORr3ZZgFaynWy0hXSmi1qKs3Kivby3jqCR

JJMUHwsHIzMby/PnjMziAuzJdlKRJkKlwRYXM53E1WHsfM/+KHq7F/6urXAT

k72ByXrfXrDbXbrTkb/VkrHbkrXbnL6LST1py8H1FENdo31lt2OIDzON91N1

d5Oo+5n6Dwvoh4Wm+wX03QIGWnmbb4CsMI0ijsKxL8qPeos3MZmL1XHvmlKa

Yu0VeJ656vA5KnGCqVoTYZRpJ4yJM8lzlS7xVs73Ukqzl7BXZNGWYLZW4rRR

YndV4wg24E51ks33M4i3lauNd3w52rW/u3WJ/9PdwT/B/9sXYX9rha/K85X/

JYH6lf/l0306V0TypxvyLRlHJuK/vcb91xfSv1EA/176WufJUDlhsX20wLcr

xQYRpl/hr9aSaFUVqJvvpZpkK1MaZFwXZ4dJdinx160I0kt3kk2yk8vx1Ep1

UU5ylA8yFrVWZFcWZuFhBfLPxMjEyMPGFGklmukkF24iUhFmfTzZcrnYdzDR

fDjauD9Yu9tbvttbcTSMPBtvOB6o3G/PPmqDHTRnHLVmnXcUEPsr6SM15MFK

2nDV/STydqLufhb9uABdePFxufVxqeV+seUG8J+towH44zXng6VHPcCmmXMV

kWBOHbbDGqJt5fmeO6lxuarxJNiIlwVoploLoiL1U2yFy3yVslykE+3EHZTZ

DaWYLBU4LORZXdQ5A/Q5kx1lc3z0Y2xkK8LNV4Y793a2v/J/6pi6/roX7x9c

kPFX/v+ng22/nvBJhBoOz6BuLtJ392Qa6YoM6l/A/zPpB9Lu3XovbbXteKDk

YqZ5uMC7P8upJkSvzFe9PcWuPFC3JEArw1mpKtQUk+TUmuJREWRUHqRfGqiZ

46lW4Keb7qoSaysZZi7moSOoIcYiwAX0n4mZlYGd6ZmPkWCOu1KKk1yMpURp

iOXhGPpyqfdgtOlkovloGAUGdrrlar7rfKzhqLMQ8D9uhR02Z150FhIHKuhj

tbTRmpuxmruJ2oephg+L7R8W24AJPq60f1hue1hsgZaap1GkseqLwfLDnsK3

6PTZ8vA3DSkHnTnHHVkNMXYyPM88tASBY8dYCBb7qGY5ileH6SRaC1b6q8Cc

JWJtxG2VOc1kWEDkslTkdNbg9tXhSHVRhHlpR1hIlAfqQ3uBd/eA/l9CS6C0

P52O/tSLTvzvhxL8Lf+f33mH+xN/6iUJOt6TRqF8vgUpEJ5CwP2WfvF/P5G+

w72+Xmu7nKzbasu4mG4aynEfzvNAhZsUuCl3pTmXB+hUhugX+ulUhpq2prr0

5gTURliUgSkQrF0eZFDoqwuiVYyVWIy1ZJCJuJ4ki6QAOxsLCAGMjAzPHDUF

8zyUM50V0p0U4qylc731NjrLAfDjsebT8VbcbDdx+QV5beRqtuO4t2yvJWu/

GXbannvVX0YZrqGNI6+hs+VRD1N1H+cwD/OtD/MtgP/jE/z7hebr+SYQfy+G

Ko57izaa0uYqIoAJTvpLjnsLT7tzAH9pwF9byFmFPdFWDOYkDdKeqmDNZBvh

Mh/lDEfJGGtxG0VWCwV2Axk2A2l2D20BHy2OZEeFJCdlwL8kQG9xoPXkCDqP

B0sgQ/5PokONKKRrwi+4I+nn/L9+xoLii0S8IpJuSMRHKp5EIlyT8b+lnv5/

n0ifT+fJCw3Hg1XvW9L2B6ra4s170m3qoowz7MQw8RZF3upVoaDs0q2JtOzI

8BguDq+LsYYHGZYHalYGG5UHGhX6a4PMOdFeJsJSylqVT5qflY8TUqBnDAzG

cry5bgpZLgqZLvKZroopDjIZzsqjlXFn0xjsbOfVUh/h5RB1fYKw2Acq1v2O

gv22nOP23MveUsowgjSCvJ6sv5uuh5R/sv5+ruVuFnM/hwHk7xcwN7NNlOmG

y5Hqo96S1/WpwPPXG1PPBsvOh8pPegtPurKREdZSXJD/OymzpzlKp9iIVvip

FHkrpTtIlPqoZDhKxVhJ2CqyAvHREGNWEWQAlvLV4U6wBbmEQqytTLGfzvKL

9otzLBaHB/SIVGgLGDAB8asE/TP+P1v2ofx84Ih4Ov6cCqYUifjxmvjjA/4/

HwnfnszRFhv3O3PWm5J2u4raE62Gcz3roswzncQboo3K/bXKg3VyvNRRcY7d

OT592T4NcTbVISagNKj016kOMykP1ouxEomyEou3kvDSF5IRZBLmYWdlYXjO

8FxZlCvHFSR7itnuStluSlluSmlOcvHWUvUJjrtDdfiVAdxyP+nVCOnl6OVk

+3FvxX5b/n5r/klHMX4Aju+vuh6vIw9VU4Zrrycbb2cwN9Po29nmm1nM9XQj

dRKFG6k96i19iUyaLgndaEw96iu6BEnpSNVJd8FpWwY81FyW57mHJo+9Ehvg

n2orBA/UyPdQyHaVKfUCyY90mImIhSybmTy7ljirIt8zd01eH22eaGu5cDPp

SCupinDjV5PDZ+cXZ1gcDur/fGL7iy+F+bv8n3Z7gWT0mEzE3dzQv3ug/tcX

6r/fnH06nLleaT7uzVuujdxuz+uHuYCBTrDOcBKvizCo8Ncq9lcvCtBpTHbr

y/fvzfJuSrCtCDRsirFAhBqUB+pVhegnO0hFWopmOMoFm4ioijILcjJzsDMy

MDyX4GNNcZDL81TJ8VDJ9VDJdlcGCV6mq3KSrWyej+5CUzZhZfByaRA733s2

3nbYW7XfUbTTUrDXWnDeXYF/UUMeRV0NVhOGEfQpNHm0DgzKRBN+tJ4wVocf

RR50Fi9Xx0PwG1JA8D0friCCKmC06qgr/7wDVhNqriLIaKfE5qjKnu4ok2DB

Vxuim+0sW+SjkueqCCwSaChkLMViJs+hJ8WhxP8cpKl+egJBphJBxuLh5mKI

RKe3S3PHJ2fYSzx0Pt7fnAL0a/lfkKAnCU+gE85IxMtrOu23H6//6zP5R9L2

p5Olu/We4z6I/2Zz1liBd1O0UX2Maa67bKm3WrGXKuCf7amGincaLg3pz/VF

J9mXBxigYy1Q4UbISHN4kH6ao0yEmVCeh3qkuZilEpcINxM3BzMDIwM/B1OM

tQxIXwF/UETneqpmeajA3MBQS3VSSHFSas8OOp5qwc51HQw1bvdUbrbkb2Ly

3qHzDjpKT3rh5wO1X8fFQO1ZH/ystwo7iDjurTroLttogs2XR63AY7aaM057

i7CDZfjJWtIU8mqkEvq2Mwvw1xJhNpRicVHjSLYRTbMTQ4YZJpgJZ7spJFtL

AMH00RUwlmIFkVdHgk1ViNlOmcNbR8BdWyDQWDTaTKS9OGbz1crh0fHlFfFr

8nlF/m8toP9Uf/588+yfBpZEOccT6UTiHZgHBNzNNf37z3f/fn/5u8vX312+

ob/q3mmDrdXFvWpIGy3wRoRqlQdo5Hko1EWY5rnKF/mqJdnL1ETbDuQH9mR5

oRPt4SHGoKIBEoSKMkeGG+d4KAfocmW7KCfaSbpr84MQwMXOxMLCzMbMEGAs

UeitBiroAm91MECBme2hBnNTBSbIdFVNsJcvD7dYbi48mWhd76xYQGQsVCUt

VaesITLeNuVvogveoQvW63M2GnLfofPB542GrFVE8lxF1Gx5xEZd8lFH3llv

0dVQOXG8ljxbB2ph3FD5GYi/7Zl1kZa6YswaokyemlwxpgIlfhogkY405Mtw

kk22kYg0E3LT4DaUYILe34kya0tw2Cqy+xmIuGjyBZmJJ1iLDdUVvlt/A/HH

E3+e/P9C/j/d/PtTn+FT5Xt1SyN9oJEpJPLdNfWHz3d/eLj8Ab/5m4vXd2td

ux25y7VRG82w4TwveJBqqS+IVmq1kcZ57vIF3qqRliL1cXb92UFtqc6NsUB/

DGrCDRviLOqiLKqDdHPcFYMN+NMdZFOdZQKMhLXF2TnYGDlYmZ8zMLjoiBb5

qBdBRbR6ia96vrdarpc6zEM1w0Upw0UlzUU12Uk5zV2zGRa41FzyqqV8Dp7R

Bwvth4WMFESO5EeM5oUN5wQN5QQM5waO5AWN5gdNFAWv1CZsteac9BReDJQC

wQf5P2WmgT7fSJlE4IfKT/vzjjrSW+JtVPmfaQozBujyBupxFHkrw1xkw4z5

0xxkkm0lIkwFHJXZTKVYDaQ5NcTYjaTZ3TR4fQ1EvfWE/Qwl0twU5rrR796+

PT67wF4RoeZn6l/055fczvN3+BPIBALxlkq6pRDodNrjLe3HL3d/vMN+j9v8

crxMX27YxCQs14RuYFJ7023L/RRqw/XKA7TBbM12lQU5JKikQOXbkeoJHL4p

HuIPD9GrjTBCRpjCg3Se+PMl2knA3BWCjESsVHn52RnY2JgYGJgsVfiB/5cG

6FQEaVcEaJf6axf5aeV6aYAo8JV/qotqurtaiotqtrdeXZJnd350f3FMd25o

e7pPa6oHJsGpJcGxPdGhO9V1NN9/sToWkD/uKz1/UXkxXIkbhQPNp87UX4Ny

eL6JNlNHHoOfDeQD/j3pzkq8z7RFGYP1ucIMOCv8VFNtxSJM+FNsJBKsRIMN

+SzlWIykWPUl2fSlOE2l2Vy1BVw0+b0MxIAVCoONX4717m1tPe1//F/gf/m0

1YhGodFBJku8oNMp3zze/Mc3d6Dy/d3FSxB/L0fLtpqTX6Eil2tjupKtKv2V

6mOMCzxVM5xksl1k8jyUQswEGhPsO1LdIbePtSz31wMzuiJYpz7GotxPA1qO

MxGJshTIcZf3NxBy0eGT4WcBJRjTM2ZdaY58L5WyAN2acANEmGFtmElViGGx

rw4wQZa7erqrWqqLGrBCpjuYFOqZ7ho53rrFAQaVocY1YeY1IaZ1EeZtSQ6D

Wd4gvYdqq+7Ci2E4SDvx44irsVrwJE/X0+fRN4vNNwvom/lGwP98sOCkK7Mv

002e+5meBEOYHkuiGX+Jl0KKlVCMGX+ihXC8pYivHi/4I0NpDsDfTI7TXIbF

WpHDTUfYTVc0wEgUmeq5Njt8sLuDw0PLlVC7IJX+E/9fIkF/uXn8yQSgiCYB

+FTKHQ0k/pc3N7TvPtz98cvtF+z6p/3JL0ez1CX0Vkvqm4bE5ZrY1jjjCl+F

5kRrgD3bRS7DUSbLXSncTKg2yrIpzqHAU7k8UKfET7vMT73MVxMTZ1cGyYty

vI1kkBEvyK5DjATddfm1JbiYWZ4zMjEpCrODsAuSWESESWOMeX20OQJMmRBD

UODkemlmeapnuKulu4CIoJHjpVXop18ZYlYdag6qp9ZEh65U56Fc7wV41HpT

5m5Hwdlg5dU4kjhVR5hAEiYQ+PFa0iTyyfnR14uYu+VmwB+Uw7ih0uNO2Giu

lxIvk4k0Q5AOU5IZX66TRKI5X7w5f5wJb6QJn70Ss64os4EUu44Eu5kMh70i

h6kcmwN0L5hcvL1CV3nym8Xp/e1t/NNLK4g/5S/HQf+Do7n/lv+TCaBWQzKF

ckun3NOhc07ubq9/A/h/IH+Lff1pd/J2c4S4gN7tzHrbnLlQFY2JNSxyl25N

sk+zl8i0l8pxU0p0kIowEy4LMWyMsyv2UikP0i0N0i72Vij1VKqPNC/31chy

loi3lQg0FMx0kow2E3LV5DdXFOBmZ3zG9FyClxVUXlVB2sgo8+Z4a0ycNUhc

6yPNasJNyoL0Qe2W6w3Ia+T5aBf665UFGyGjrVuSXHqzfMeLQxZrYsF/aber

8HSg6mocRZxqoMxiqHNNxEkkYbzmahROnkLS5+qv5xuB/98tY67n6qlTSBAR

Djtgi2UBBqIcljKMwXrsyZZ8adYCCWY80SbcUYackWYC5jIMeiKAP4u2BJuV

Ao+DEo+xDJu5AmeohXxBoOE4pnJzbfVo7wAP9Y087VX/V/lDN7sRyEQylUql

3d/Qb6lEwtXl/Q3t+w83/+cD8fPJ8vXGC8pK2+lw+flw5ZumtNnysJY4syIP

aSAy0WZ82a7yue7KURbCkRYiJYF6jfE2RZ6KlSE6JQGaRZ7yZT6KNSG6lQHa

6faiUeZCXtrcqQ6ScTZSNkqclip8Yrwg/j4X5GIEZW91iG5Tgk1nCuTS7Un2

LfG2wBDISFN4mGFZoC4oNksD9CpDjGojLVpT3F7kBkyXRb2qz9zuKD4fqsGN

1RGn6qFWt5km2lwzZbYROD/gD+BTZ+roc1DkvV1qvl6AluOgFzEjVQftmeuI

8EBdESPRZ8G6HLEm3LEmXOEGHCAQRBpxgVTBWJJRW+S5oTSzoSSzvZqAhTyX

hSyHlQJXlI1cdZzD0kDr1sab46NjHOFPG1Wuni4l+YXw/8r/ofKNRKFQqHQK

iU66olFIjzeU7+8pP1KPvxwuUF/3X00hD3ryzkdqpsoD+zIdawI14UEqDfFW

0ZYC6Y5SMGf5SHOhMBPBkiAQcE0KPORLAkA+qVHkqVLiqVgCpoOvRrazZKyV

qL+hYKylSKy1lIUCu7kCh5wAGzMjAy8nQ5KdNOAPbAdiYn+m+3Cez0CWR3ea

c0eyQ0uibWOsJTLcBBFmXB9l3p7qOpQftFSd8A6TfdBdcj5cDXyeNo8B8Klz

GNp8MxiUWcj/odeOM3W0Wcj575db7lfabhfRwP9p0yjCKPyoM/t9fTQ8QFNP

8JmfKrOfOmO4AXuwLpufJku4EberMpuBODOk/zKM1gpstqogFrBZy/O4agvH

2cuhM/1eTw7v7WyfnJ6eYq++bhT9tfyf3P4n8QH5JuBPppChAuyaTv1wQ/n9

I/EPt2cfdmcIC81XU6ijvsLj/pKRHHeApTJAozJAFUh6gpVwqr1MmpNspKlg

kJFgrrcaMEq+pzJIRzOd5cDUyHeTy3GWznWTz3KUTLIR89PlDzIWApHCSoHd

UJZdUQhkQM842RliraVrQ/TRcTYDMI/xAv+JwoCp4qCxAv+RfL+BLM+eNJeO

FPu2JLv2ZLuBbK/ZypjXDRm7ncUnA5W4MQRpphEIDmWmkTaPps2hqVDDbRNl

up4yBeA3XC803a+0AOW/XcIAQzz1v9WBpOi4p+AdKmqu0M1NhcNVnslFgdlP

iyVIlz1Qh91Ph8tEihGIv7YYi4Ekq7smr6U8p74kh72GkI++SLaXVm9l2ur0

0NHh6enZxcn5BbRdC4QAaOvo9c8vBf7HFxT+xB+6TYNEgbqtqFQSEU8k4qkU

0gON8OP91Y/Ug+vNkYup+ssJ4P8FR31FU0V+XakuoPKqCFCHVsitRfO8tWBu

yjGWor56/OkuClWhBumOstluiqn2Uqn2kjmushn24plOUinWIKkQ8tXld1Tj

jLQQtlfh1hRjUhRi4WJjYGd9DvjXBOth4qz6Mt1nSkMWKiIWKiMW4dGv6pIW

4THTpaGjud5DMI8XmW7AKAvVMRtQtC06Gai6GKklTDaQAfy5JpDkQGOukTqN

As4PBmAO4D++bLsH/BfRkBDNNQBRuhyFn/UXv6uLfY8Kg/trGIg+MxRjdlNm

CtNm8tfjMpdl1pNkU+Zn0BBiNJNiDDUWsZJlt1LktVHmjrSUqYyw6K1MX556

cXx8dnp2eXaBw1P+Rf5fTfAkPkD76TQaFSg/MAGVSobaDq/Pv718S309cDJa

i5tCHfcXv21OH87zbE9xrgrVqQjUynSWiTIThLmDnFAFBF83Le5Ee5myAB1o

GcFdJdVBJs5KFKT9qXbiKXZiiVaC4cbcvvp8FrKsQSb8NsqcIK4pi7LxcbE8

8ZesCdFBx1n0ZbhOFgW+RMS9rksEyeQmJmMDnb7emPqyNm6mJGQ8zw/86SI8

ahOdsduWD/z/chQB9Ic83fhUXgH/b3rqcEMQJ2oo08ivnv+w0gJ9WMIA+ECO

yJOIy5Eq7GDpdmPCO2TkywovWzk2eb7njorMAdocZjKsWsJMykIcEhwM+uJM

Tqos4cZi1oo8xrKsLurc+f6GBQGG9dlhr2anjo9PzrE44P5XT/nPJemvL8X4

KRb/7Q/B+Ik/VHaRgPRQSSQimYgnkwgUEuGBfPEbwu63F29uNl6cjNRix2p3

OrK32rJe5LjXhhsX+Slnucgm2YmHGPEkOShmuKpEmIs5QGdhScBDjSuCjbJc

VJLtZYDUg+os2kwg1lIwzkow2JDTU5vTRJLZV5/bWplTVZhJQZCFh5OZk/V5

op00MkyvMca0J91psjhotTZ2ozFlpz17pz1npz13qzULskJT2lxl1FRJ8HJ1

NLDIVnP2cX8F4P/ntAeaAsAKVOidL4o2WwfUBojP3ZP/P6y2PSy33Cw0gR8C

64C8CDdcvodJ3kREH6Bj60P11QWe2auy6EuySHMxqgmwCHMwC3MwmcqwuKqz

BhtJGslwG8oyx9jI5vvpg2IEnR/1ZnFub2/34hI6ggniT/7V/KET1J8uUwP8

yRQakUi8vMQRCXgqmUAjEa5xB5+PF39z8Zqw3HHyovJ8qPIdJu09JqU/06XM

Xy3FXjjJVjTGXNhfly3JXjHdSTHURMJOiSvaXLTUXyPHE1q3ibORjLcSzXCW

CzcVCjPlB8NPh9VVlVVXjNFDi9tBhUuWl0GOn42LjVmYiwEEi/pIY0y8WUeq

3XhRwAoidr0pZbcz56S/5KS/dKc9/11zFhgbTRmriISV2ri36My9zsLTwSrc

GBLwJ4Pgu9BMBcozW0956jaB4IOcc6n5/mX7w1oHeN4tt0HrDzPAWAgcxL9i

vwP2vj72sClppy46y15Kkfe5ACeLLDeDBDczLwuzAj+LhTybjTybu6awqRyH

t4FQgp1iqptWgqMqIsXv5dzEzu7OxcXlKfbi4ulCrss/3wv/V+R/Pv6a/1Or

5xN/KtRrDr6gd494Gplwc7l/vzP54XCBuNy+31MInH+jIX6u1L8l3jzPTS4e

8meRUCOAlCPVWTXDRSXERNxGkTPORrrQF9RKismOCqEmwjEWonFW4sGGAgEG

vAH6vL7aHHaKzJqiDNBLJXl2GT5mCV4WDiYmZWHmPHf5pmiztkSbrjTHoRzv

xeqoDXTK+9bMwx5QzFad9JfvtOdtYmBgvEVnAFHaas3Z7yp6ir9IkHPSF1qo

iyDzAfoP+Tx4AkPcLKK/wv8z/1YaEP+f8T/uzt1Cx592pB+jk16XBzor8XM+

fy7Eyc7PySbKyaQtyqQrxaEpxmoux+mgLuClKxRiqRDtoOZjIJIXZLk6+WJ/

f+/kFAv0/+mIPOj8n1/F/+vxntDaBZkGkh/AHxQABDweSBDIQq/Pd6hvBj8e

zFFX23c6st42Jb1BRr7IsGuI1Ic5SceYCiTaScZYSXhoMKe4aqQ4KfrqCdiq

cCc7K8Dc5VOc5OLtZPz1+aPMRWKtxAMMBd01OH10+dzU2K3lWVWEGc3lQFLN

IsXHKsLDxMnAbC7HXuKt0BJn2Z3s2JfpBurZ6bKQV/WJ71oydjtygf9jh6qO

+0pBebvdlvuuGQbk6LCn+HQAzMpqwgSU9l8vttKWWuiLGBBwb5bQtIXGm2XM

/cvWx1d/gn+/CvEHFqHM1hEna/HjNbiRCmx/4UFz6nE37Kgz8wCdulLsEawr

zMP4XJCDWZKHWV0M2vmuI8Zuq85nocpnqcznqCfhYyrjpiMA89ZfeNF+dHR0

co47v7yC9r//jP8vGYD/OYCPJ+GAv1NoBCL5yQSgBgBZENT2Tzxcp73q+bg/

g5vDvGtNfYUKny307Eo0rQ3WSLMTCTfmibcVBwWXvQJjooNyrI2Mkzq3nQpn

misohKVBMI22kgwyFAoxFgm3EPXX57NRZHbR5HZUZjFXYFcUZNSVZFYTBgLL

wsf1nJuF2U9XoNJPpTPZrj/TbTDbEyT/XyVoE5O+255z3Ft8/qLitL/0sLvw

oKsADGCUvc6Cs8EqIP64CRR5Dk1fbKUvNl8vYsC4WcLcrUDZJnjev+x4fNV9

v9YJPtwut9CA+M/Wkaah0hg3UnU5VH7ckX3SnXvam3fQkraPjnsDDyh2VVbl

ecbH8kxMgFVNlF1bnMNEnk9ZhENBmENVhM1CTdhRVyzH3xTw39t+d3IG/B9/

iYfOx8P9YvhfB+APBp4IMp9rPIFEIEJTgE6FqgAyAU88eENdab1+O3w22bhW

H7MMDxjLcuyINynzVky2EQg15g414QvQ57aQYUp2Ug03lwT5jIsGd4qTQpiZ

eIS5eKCRcIiRiJ+eiJcur7cOt4kMq5kcu7kMq4Eks4YYo4oQkwQPMzc7EwcL

k5wAc4adRHWAZleqw3Ce90i+L+A/Vug/XxXxui5ppzX7sLvgbKDkpK/4sCv/

sDt/vzMP/OSor/T0BRB/KPJS55uvl1qvl5pvllvuVtvuV9u+Zvug5npc6/zw

uvt2pR1MgduVZup8A2WunjBZC/iTntrhoBcBPbkXvbmHranH7Wn7mMSduojB

NOtAXSEFnufCHM9kBLlkRXn4oc07XMK83IJ8HDKiPJ4WWjP9LVtbW2cXV+dY

PPaK/HX36L/A/4oIMn8KtOedQKLT6cAEV7gLOgFLOXh9PoagvRnc7i2fKvab

LPBojdXtSLKo9FPNcBQLMuDy0ASBicNIijHQWDLMQtpUlsVFiz/BQSHASCzA

WNRbTyjQRBRopqMGj6smn444k5Yog7E0i5rgMzURkPZDN8WwsTPys7K4a3AX

u0uhwvT7s1zHCv2mSkMAfOD/MxWhq7Vxm+h0APy4twjwB8+jnkLw7X5XPpAj

7HD15UgtVPzOAZ9vBfBvV1pvIfgtIARA/Fdb71daH4D4rADlwVAXGoDzA/6k

aRR5po4MaoRpJG648rS34Lwv/7w3D4yzTthhS8oBOn6r1r8/2SLaVFqRj1GE

j5uTi5udjZmdjYWZhUGAl0taXNjH2Xp94+0VkXoGXUpOBnrya/n//5Jbtjw=

"], {{0, 48.375352736947036`}, {48.000350002552096`, 0}}, {0, 255},

ColorFunction->RGBColor,

ImageResolution->{191.9986, 191.9986}],

BoxForm`ImageTag["Byte", ColorSpace -> "RGB", Interleaving -> True],

Selectable->False],

DefaultBaseStyle->"ImageGraphics",

ImageSizeRaw->{48.000350002552096`, 48.375352736947036`},

PlotRange->{{0, 48.000350002552096`}, {0, 48.375352736947036`}}]\), "Attributes" -> {"black", "young"}|>]](https://www.wolframcloud.com/obj/resourcesystem/images/c74/c7481216-d36b-4ff2-95d5-a5c7cfb9e1fb/13e75678c12617dd.png)

![Manipulate[

generator[<|"Attributes" -> {black, blonde, brunette, male, young}, "Image" -> \!\(\*

GraphicsBox[

TagBox[RasterBox[CompressedData["


W5C8teqM5crUvdbCA2QZpaeOjoEcDUGPh+GH2IZDbP0hpobZW0HrKSF3lODa

S2ZrUppCLRZLIjBxDl6KnGZibGF6zyL1ebKdZcuCdGE5oZury7t7e8AKsq5G

3W/KYhyevV8O+kf5P6SAwbrUC2IftF001mNkaHQGCdTfpVHqMGQLnr4CTetI

dfTRfPnaQMBbR8hTSyjGRMJbmctG8hnoVhJt5PREn8S4mnUUxDNWRn57yrjE

bxwvD+H6mzeQFSuw0oXmgg10wxa2bbuvdaYmDZsW2Jsc0Jnwerr4zUxZ8kx5

6iokf72hAFTVvV7Y/gBqv78dkx/fEGRZ729c/9qkI9JhPCPgoD6dAs1lthVQ

Ebn41mx8Sy6ppYCIKKViGmn9CGJPO6G7ldLTSupqwCGrqehGBqbleBhxPIK4

mGi/muw4H4OfDbWcDEBOsfWn2NrD3jJWLWgrma9Lbwgxm8kP6o93DFTlshR7

EmP0KlT3VYYjKMFajcneG0vzBzg8Dg/8OBBn5ld3R/7lqR0faIe+t+E9IVOZ

RBIwPFTi/Y4LCo1BpzOpZOLBGJI51rjXVbjVWdKV4+OhxvFaXyDAQMRTgz/V

Rj5IW8hGki1Clz/dRsZFmTPJw3SipeSKsP77y9M78t7RfD+xt36vrWylKXe1

OW8FXrKMKF9uLZqtyZgoiZ8rT1qrSd9qyNpqzttFVhx0NRD64dThTsoImjDY

tdJS0p3o2Rpi3hRo3hxo3hZuN5UbetCQddhRdgzMGLKAAMsjwfNJ0Fx8YyYF

UczENB+OdJD728h9CDoWMO84GUWdTnafz/SczfScT3VdTCBvJhBXI1CWicLW

n2FrTjAVDFQZuaNssT4D8J8vDhtOcovR4bOVeBRjxBes+yrRSrTMX6P5jcvc

xAgY+/sHONZNGdRDEPTDs4e1IPoHN8Lfsc7DWuphpRXAJ1HxRCr54eGxQHyY

R1T8LnG44WgMsttZOFOXgC0Oc1F5GWQoEGEm6a0pEGsmGW8i5SDJFm8smmYj

FWIsFGomNwMrfUvd+/O7Gxb/uT5Sb90eoni1IXP1IQWNOcuQrJXG3NWmvG1Y

Ca6tgtBdS8I0UwaRtFE0ZRxDn+inDbTj2qpni2J64hwR4VatIZYtgRatoTab

dcm4hjRGW+EJuuKwqwLfnEOE5jG6ysmwfGpr/mFnxUl/4+EQ7HAQwRyAHY+0

n02gjye6TqfRx+OdF5Odl+PIm3HAv+UM23A91HI12HiBrWWgSojtpQv16S0R

lovFESNvXNKMBJ2kPg7XfRms9yrWQqjktUZjguMUtpMIZBlHJFLoFPoxnkQH

8OmH3z7gvysF380fJJTG2mhBY+1zuN/rdUSl39f6nWX6eBNtoArfUzxaHABP

dLKSfRpswBNjJR2oL+6rI5BqKe8h9yLNSjrDQT7YSCjcTBZI/Ts67s+/eXtL

3T+aHyD1NRy0law3Zi7XZy1DMlcgmWuN2duwQlxHFb6rntDTSMRCycNt1GEU

ZaiLPtoJLD2uowZY/emc4O5Ye2SENSzMutrHCBHtjGvN3q9NpEKzT3pqTnvr

qIgCQmsus6uSjiqjIwuP2opOumuO+urPh6Fnw/DjQfjREOJkBHE23nY50XY5

Brseg92A40jT1VDzzTDsaqjlchByiC4nospnazM6EtxXi2OH42wzTfg95D4J

1gKi+jLalL/AVw0SazfT27q3jwfqj2fdmnFMILPuy6D/pRH+R/jf72wBc4rO

ehgO7avdhqziCySIQmVszjAmYcTeEspA2UR5WG2YmbnU40BdrihziRBjGQfF

V8lWCiFaPEWuKjlOSq+1+aMtlIHJ/+IQ919//N1nx9SjpVFiXxOuvWwTkrZW

k7xem7pWm7rdmLnfmgccI76zBt9VR+lrpmKhjCEEOFLRteSuahy0YKMybizT

pyvGBvBvCDQvctUayQ0lQnMPIOnHqIrznrpTdDWjo5AAzaahSo/QFYy2/ENk

wQm6+qwXcoltvhiEXg7DL4ZbLoebr4ca78ahb8dgb0dbbocgb4eargearoda

gRCdDzYye6oIgH9N9mhuxFpRNCbCNM2Ax1P+cYjW82AdjhgT3kIvpdooq2Fo

2cHeLoFMxZOoQHzAFABHKvP0Wzn/HeOfTAPTirXPjQWfeQyUnwLqC4l4vDF+

udzDHKnZ7sgaLgyqj7AA/EMMeWMtJcNM5cykXkToi8YbC5Z5aKRZyfqocbkq

cNbHuJzuz/3Xn/8EJOhye4E+0raPKNmoTtqsStyqSdqsfoNryiTB8sjtwHuU

UburGT319O5aZm8trbuc2lFMai85aM7ZrI6fKXjdl+jQHmlf5qlb7W+yD83D

QTJILTmHqNLjztIjVPFRWz6hMY0MzTnuLj/uKjpsyz/tqT5H1130QC4xzbdD

sKuBxsveuouOsuve+mts87vBlrf9kNu++tsBMP6hF8OtlyOtzN4afGfVbF3a

SLbvSkHYaJxtkg6nu8yjYM1nr9WfxJsB/op1UZY9lWl72+sHFFY7+qD/D5vS

vxX1h/N/L/5MUMSZR/cbHY+ph8BcgXb4iEkhnm+Nvd0coA/XztXF9Od6V4SZ

mkt+EmkmEm8pGWIsoSfOYS3LnWIiUu9vmGYj76vC5avOl+mufTAE+3//+If/

+PLdb45pZwvDhO66vYasnco3u7UpuAbAMJvcmkND5NDb8omt2cyOUkZ7Ka2t

iN5WBF5TYPn4puzduuSFoqDBJA/Q9ubYyc0URVHaS3br02nw3OPusuOu8rOu

sjN0GbOtkNCYRYHnn2PKT9HFl33VNwONF72Q6/6WGyz0Gtty1lV3iaq96oac

d9XdYVu/GIbfDUJZqRlsvQQyNdDC7KkjdFfP16fNFQVToGmLBYHeMs8cZB6H

aj31U3scby5Q7KNSE2HaURy7vbYEZJ9EppIo988oAC3Y4dnDWvSHwP/28c8q

tcf0o1MG8FGMI9b6xj1/GvMY+B8Gfvtye/Ryrfd6GbXSlDha4l/gp+ugyBZn

LRVvKeGvJ2wo9UqJ+9MQDd6GAOMcB+UAdb5wI6kIU2lMSdyfP7/985e/+dO7

t1+Qd4+mevahRdvVyXuVCfjaN9SmDGpzBrExlQbLIbVmAxmnIYsocNaR0VZC

gxeRoQUHDenLZaHDqW4VHupl3jo0dA0emkNoyqAjC876669H4NfDraeY6tOe

KlJrHr4p86ir+Ha49gpbczfa+m4U/vlY280A9BrTfNnTeNPXfN3XdIttuelv

fjsAfTsIux5oZfEH7UA/0J96XGfFPMgsqoiGKsyxkLQS+LW9zLMQjWc+Kk9i

zfgLPBVrwk3hOcHrC9N4Io1MY+BJFByZ9exE8l+uxfzd/EEwTs6ZJ+c0OjC0

J7RDVhdAZRzePxXw6HB38d3++PUG5majbwOaPF7il+OhEWIsnmgrk2gtFWwk

biLNJfzikbUUe62XVq6dgq8Kp5cqf5C+VOFr41vqzn/94Y//8dndb0AjsDUP

SupOfeZ2eQKhNo3alE1rBpFFac6mwwtpsEJyax4NXsh6jSw5AorUUU5ozduo

ie1LtEs1E+3PCT+d6j1oyiU3Zpx2116MoL7YW/ryYJH1FAJsAzCiOEg6qTXr

or/ydqzp7Rj0bgx+N4a8wrYA+GAiANq3g7DPRtveDiNuh+CA/yWmCfA/7W86

6msA4/8AVb7SkEFFFa/WJTf5anvIPreS/CRQ44mn8ieRRtw5rrJVYcbwzNfL

49j9AyJrO9w9f+pfxv/fXIL8gfyPGMdnzOMzCpUJxjwY/KAE0A5Z7QCFzrzE

LX5JnD5f7r5ax6y3JI4X+Rb76YabSmS4qKQ5KgYbSRhJvOTneKzC8yjXWrrU

WcVP+aWjFLu3mlC4sfD+OOr/+8Mf/3R9/vnZ4TVug4yF7TTlblWlboOetDYT

V59FqE0lN2aRm3PITTk0aB4TWXzUXgYMzDEIdC0VWbLXkNoSaJBjI48f6fzd

BY2IhuCashi9VeejyJsZ7N3C8Mko7AQLOeouJTZnkpoy6R0Fn88gPp9uu5tA

vAVWcwj2bhAOpOZmGEwWkJGOu7H2m2HENbb1ZhB2gW05wTQeYhoYPXW77aWr

kGRCa8ZSVfxAvI2N6DMDwY/91Z95Kj8O0+fMdZMrDzaEpXtPoOEHB0QciQb4

7xMorIcYf/Xs7n+APxj2QO2Pz0Baqff8H+ovkcakE/feUta+IC9er3RfrnbP

14Vhstzz3VXA4M/31k1zVXttIGosxSnE+Ynsq8ehmtzl7sq5DsrBmryucs9d

ZT4dby74X5/f/v7i6DcntFvCJm28e7+jcq0qZb00casc1OLk/ZpkAiQdBB2e

f4QqBdblFNMACijwkye9taAWbNTGFTsqwuI8/+PdJWNldANWvt2QR+4oB4P2

BNvC7Gtm9DSA7okEyyXBs+nIXDoy/+1o42ezbXeTyLsJ5LuJts+m2u/GEJ+N

Ij6faL8bb3t3f7wG1miw9WKg5QTbdIhtpPbWbcCLdqGZoKHerkvGxNjIcz7W

4P/YW+WJi+xHUUbc+R4KpUEGzUmeYx0QHA6HBy6dylolAMOV9rVVuL/z+u89

fyD77/lT7ssxiEPSwW+Y219SV+42eq6XO5Yb4wbzffI9lJPsZcD4T7SX89Hm

N5Jkl+T+VILzUbSxRKqRQLqleL6TYoqVlJ/S0/asgH+/OQbi845BOt9ZZk5j

9rvqV+uyVsqSNsqTtkAtqEs7YPmZ3ENU+VF31Tmm/ry//rSv9mKw+bS/gY4q

n84PzrMQxxTGnW3NrtZmEDFN6y15W5AMalc1DV1H64FQuupI7eUEaC4VCUxR

IbO98Hqo/m4K9m4ScTcG+3yy/fMZFEjBu3EkKwD8ifa3Y8jbUQSAfzEEPR1s

YfQ3kLqr12EFFFQxA5lLac3ui3MRfPaREt8nTorPnBQex5nx57rLlwTqNya4

DMEq93Z3iMD/398NAUDRjv4qPn83/4eC+8D8K/g0JvCipzTin04OvmSs3ax3

3K227bZldyQ7ptqJp7sqZLooJ9pI+esKmMtwKvI/533+q9eawpVuKpFaL9Ot

JEq8NFNtpUu8te6ou19eHN9S9i92F0+XxohY5Da8crM+Z6s6dbs2ab8xiwgr

oLWXMLurjzCQM0z9aU/l5UDDxTD0dKCZhCwG5ifXXKr+tUF/dhgi3qsjJ2EL

WbFU+Wa1Pn0bWoBDFs+UxMYayOfZyG/XxtCQuTRE7tVQ/c1Y07sJ6Gdj0Lej

0LsZ1OezqHeT7Z9NtLP4g6IMCvcIHMA/GWw5Hmxh9rM2Uay05J5g684wZUxY

bleCF++zj+R5HtnJPXFSfJxkLZzlLFP4Wqc+1qG/qXhzbQVPIFKZrPugWUJ9

dPr1S8B/B39QZ4HtobKeuMW67PiQBcCfRmdcM0l/uiD/hrJ8uYK6W4LvtmXV

hRkC/jnuKnmeagk20q91BWwUuTTFOAQ4/v/W3jo6zuTK+7fFzMzMzMzMzMzQ

YqaW1N2ibjFLFjPLsi1ZtpjVUqu5W2yamSQzO9lNdt/39/evHjmZzCbZZCbv

6tR5Tks+9rE+99b33lvPrSpmY1HWGh/NIie5eBOBXFflEk8tmLXkyeLgv91T

P1zsPZxs0jaXrpbHzkbb9juq9zHF+035h82FZyDsDtTSJzDX0y0Pc223U2hQ

kz4u9V/PdoLqYL4oOs1crsJDoy/WPtZAXJ3lWaix1uuGvDV40kpl8rvGgqWi

2HInPaSj8qt8/9OWPEJP5eMM5sN888e5li+LUMH1eRWEg8FvXw5+uwYmwiAI

Co8LoATuBYGbPtdOn20nTKAP+hHr6FziEPxhCkUerKkJsRNkeaYpwuqkzOKh

zpLlJFXopQwPM8Eku443ley8fQMkCCQqOGgViAz89qcXAf9y/wNwdTyB8pX/

V/8HHyhk0g+PlN9/IH2Pf/txb/Sbd/3nQ5XNiVZ5bgqVgYalAbpJdnLRVtKe

uiIGsjxKQmxgziZZyJQ5yyaaCeU6KxY5KaWbi83V531Hwd6fbj0cv73ZeUle

n72c7jl7gT7qqjlsKwe18AkIvv01tLFG4Pwgk7mbab2dab9f7L1d6CKNoDZq

U+u9DZMNhPMdlLKsFSP1JKIMJKoCLIbS/aayw942Fl8ON5FGGg7r0tfyQy/a

Su7HGj/ONX1exHyeb/403/55tefLavc3L/uBCb68BEGh/9NKH6h5Hxa66TNt

5KlmCiQ+9ceDiE5YSLqDzgjMZzDLO8xYRoKTSUOE1UaeyRP4v4t0nqciPNiw

KcHpRS3s7fIs9gxLJF9fEihfe7GuKPRf/iLm7y17/pn/U+SF1hye7uagUUg/

PBC/v8Z+c776eX/s43r3cX9ZW4ptrqs8PNi4MsQQ8E92VHbTFtKWYFcTYhLl

ZjWX5sq2ky5yUylzUyl1Vcm3l+3I8P2M23s4274/2rjdXaNuzF3O9Z+NtZ0M

Nhx3ww/bSo47y3CDtZSxBup44+108/0sSAtbHxZAWdpOHUOcNGe/ygnpjXIo

dtGNN5TOsFJsCLIcygpcKEvcaiw4bK/A9iIuexDH9Tkgm/0wgfkwDeA3fLPS

9M1i86fFlk8rnZ9f9n5ZG/gCovAqSIr6Hhe7n9Yc2qjTzcSJJuD8Z0PIw77K

yigPGeZnPlqsGc5S8XZKCvzMSkLMVvLMftoc6Q7iWS4y1SFGmGS3vvKktcnB

0+MzIuUGcn4SnfA/rL/9Kv+/xJOI0P76P4WAr+OaSvzDZ9oP12ffnK795niJ

stCw05XRFGea4yxX6KlR4q+T6qiY7KTsoSuiL8OpJPhcVYRFno8pSF+4wl2t

3l8P4a9T6alW5adP3Vp8xO5e772iv18G/n+1Moqd7cFOABPUHXWWgXExUE0e

baCNNd1MNQP/f5jBPMy13sygaSNV+K78PVTqRlXSUmFsT6JbhZf2QIrPEdS6

VnvYUU0ZbQRWu3qBJPbBP0ygPs80fZrFfLPU/M1K45fFxm9XWj+tdnx+1ftp

rf/jk+eDcTPbfjPTRp/GUKaaiBMNuBHU0WDtdl91Zah1gC5XZYAaMsyowE9P

S4JdVoDZXIbFX4sjxUY400kK+H9DgktfadxCP+bo8ARPur54OqOP+Mvazv+h

/9MvQClBAMkPdJIwjkjGE8kUCuWRTvyPz9e/oR79jvT+u+NF8nzj8VBpU4wp

8P8CT9V8L7UUB4VEe0VvfTEDGS5lIWY9KQ4FASYLWe6GQN2OSNPWCPOmUKMY

Y5HlLuR3pFPa7kv61jJlY5a4Nn61MHAx3Xk81LTfVXXUWYHtq7kaQpFG625m

MHcz6FvwnMXcjCNoL8qveoqOWrL3GjPeo9JWK2NHYF7LlZH4FwjKZOtZXw1t

svF2vvlmDk2fqnuYrn+cafgw2wjGx/mGL0tNX5bQX5abPy63flxp/7jS8wE4

//KT7E+3gr9IGm+4Gq3HDiMPBmrfd1XAw4zzXCSbIvRaE6yrwswMpTjkeFkB

/0A97gRr4XQHyfJg/fpEx76S6OWBltPjY+CxIAvFk+m/Snz+Af8rAhFaAiVQ

oMVtPJFEIn6kY//9A/nfHwi/p+1/szdGmUFcjlagY0zL/NQLvdQyneUTbWVS

nVTcgf6Is2iKs5jIcwMhkuZhDDWWbgwzRfrrxlrIi7I+K4nx/gZ/SNt7ebO9

Ql6fpm7MEFZHrhb6T0Zb9rrh+21lZ91w7AAwQS19suFmso42ibqGDk2qIPYX