2D Face Alignment Net Trained on 300W Large Pose Data

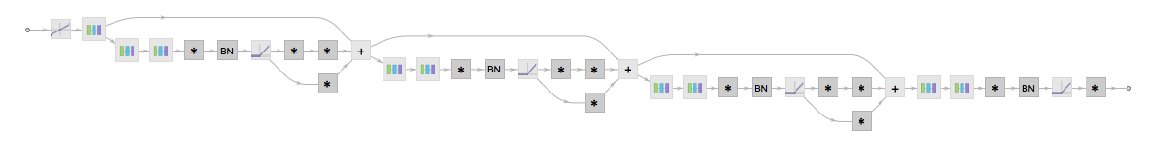

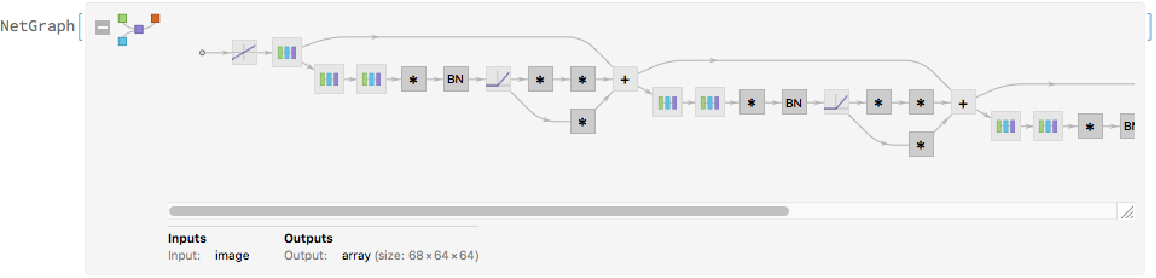

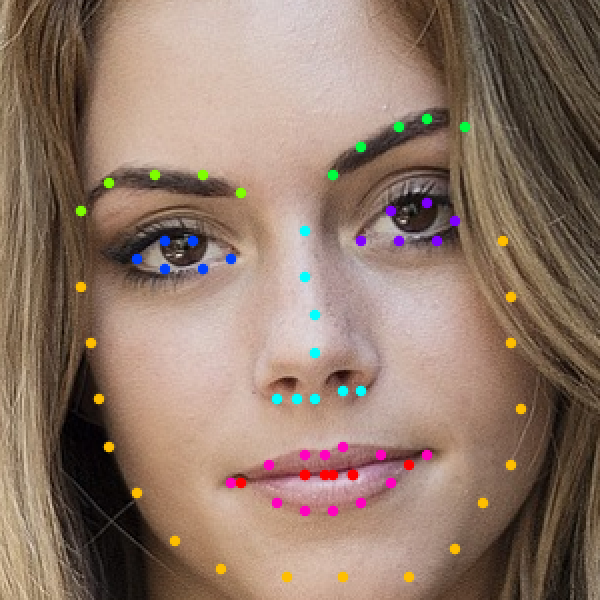

Developed in 2017 at the Computer Vision Laboratory at the University of Nottingham, this net predicts the locations of 68 2D keypoints (17 for the face contour, 10 for the eyebrows, 9 for the nose, 12 for the eyes and 20 for the mouth) from a facial image. For each keypoint, it produces a heat map of the keypoint's location. Its architecture combines hourglass modules with multiscale parallel blocks.

Number of layers: 967

Parameter count: 23,874,320

Trained size: 97 MB

Examples

Resource retrieval

Get the pre-trained net:
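A minimal sketch of the retrieval step, using the model name that appears in this page's other cells. The variable name `net` is chosen here for illustration:

```wolfram
(* Fetch the pre-trained net from the repository; it is downloaded on first use and cached afterwards. *)
net = NetModel["2D Face Alignment Net Trained on 300W Large Pose Data"]
```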

Basic usage

This net outputs a 64x64 heat map for each of the 68 landmarks:

Obtain the dimensions of the heat map:

Visualize heat maps 1, 12 and 29:
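The three steps above can be sketched together as follows. This assumes that the cloud object from the page's first example-input cell is the face image used here; `net`, `img` and `heatmaps` are names chosen for illustration:

```wolfram
(* Load the pre-trained net. *)
net = NetModel["2D Face Alignment Net Trained on 300W Large Pose Data"];

(* Assumption: this cloud object holds the cropped face image for this example. *)
img = CloudGet["https://www.wolframcloud.com/obj/8e5bda12-6fb3-41c3-b8b4-6143a4473f94"];

(* Apply the net directly to the image to get one heat map per landmark. *)
heatmaps = net[img];

(* Dimensions of the output array. *)
Dimensions[heatmaps]

(* Plot heat maps 1, 12 and 29. *)
ArrayPlot /@ heatmaps[[{1, 12, 29}]]
```

Given the description above, the dimensions should come out as {68, 64, 64}: 68 landmarks, each with a 64x64 heat map.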

Evaluation function

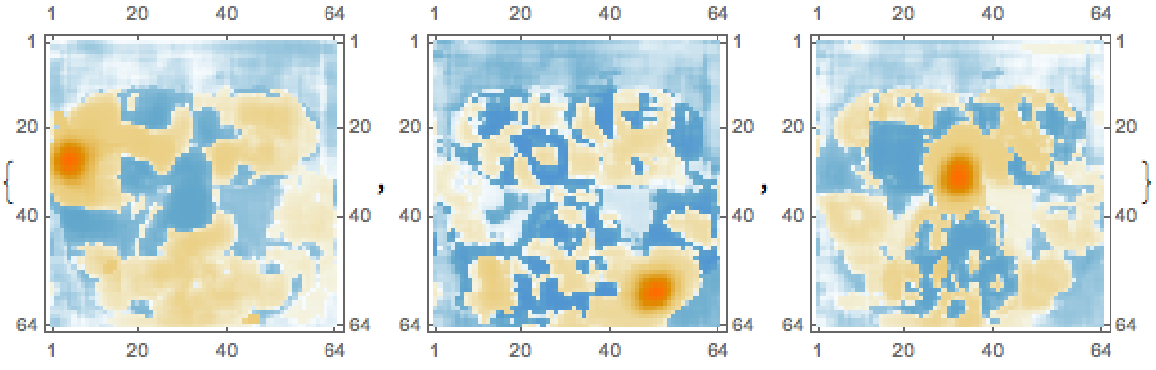

Write an evaluation function that picks the maximum position of each heat map and returns a list of landmark positions:

Landmark positions

Get the landmarks using the evaluation function. Coordinates are rescaled to the input image size so that the bottom-left corner is identified by {0, 0} and the top-right corner by {1, 1}:
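To make the rescaling concrete, here is a small worked sketch on a synthetic heat map rather than on real net output. The peak position (row 10, column 20) is a made-up value; the arithmetic mirrors the `netevaluation` definition recorded in this page's cell data:

```wolfram
(* Synthetic 64x64 heat map with a single peak at row 10, column 20 (rows counted from the top). *)
heatmap = Normal@SparseArray[{{10, 20} -> 1.}, {64, 64}];

(* Position of the maximum in the flattened, row-major array. *)
pos = First@Ordering[Flatten[heatmap], -1];

(* Convert back to 1-based {row, column} indices. *)
{row, col} = QuotientRemainder[pos - 1, 64] + 1;

(* Take the pixel center, flip the vertical axis so that y grows upward, and scale to the unit square. *)
({col, 64 - row + 1} - 0.5)/64.
```

The flattened position is 596, which maps back to {10, 20}, and the final result is {19.5, 54.5}/64, roughly {0.305, 0.852}. A peak near the top of the heat map therefore gets a y coordinate close to 1.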

Group landmarks associated with different facial features by colors:
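The original grouping cell is not recoverable from this page. The sketch below assumes the usual 68-point annotation order, which is consistent with the per-feature counts in the description and with the eight colors used in the visualization cells. The split of the eyebrows, nose and eyes into two groups each is part of that assumption:

```wolfram
(* Assumed index ranges for each facial feature. *)
groupings = {
   Range[1, 17],   (* face contour *)
   Range[18, 22],  (* first eyebrow *)
   Range[23, 27],  (* second eyebrow *)
   Range[28, 31],  (* nose bridge *)
   Range[32, 36],  (* lower nose *)
   Range[37, 42],  (* first eye *)
   Range[43, 48],  (* second eye *)
   Range[49, 68]   (* mouth *)
   };

(* Sanity check: every landmark index appears exactly once. *)
Sort[Join @@ groupings] === Range[68]

(* One color per group, as in the page's visualization cells. *)
colors = Thread@Hue[Range[8]/8.]
```

The sanity check evaluates to True, and the group sizes add up to 17, 10, 9, 12 and 20 landmarks for the contour, eyebrows, nose, eyes and mouth.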

Visualize the landmarks:

Preprocessing

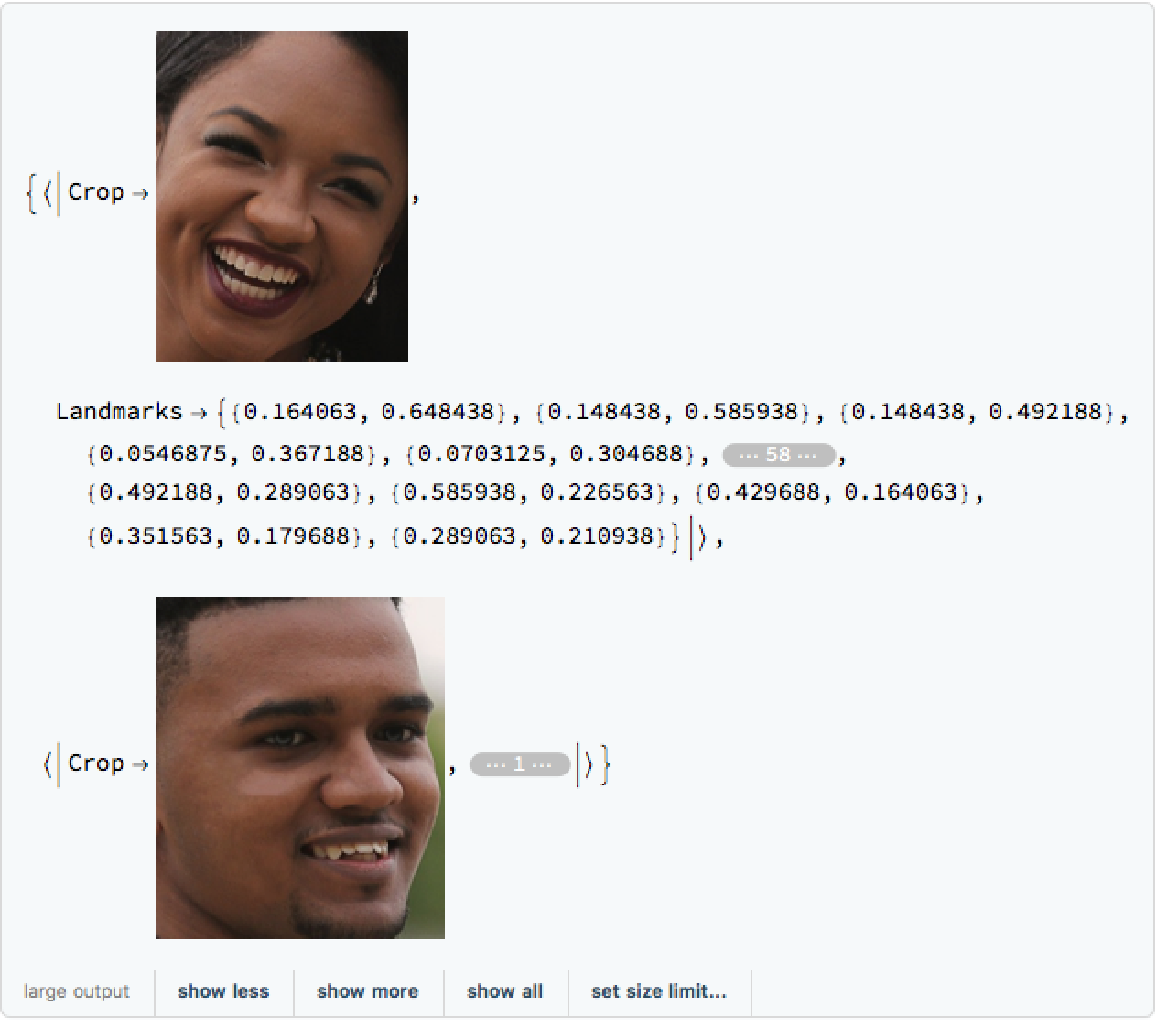

The net must be evaluated on facial crops only. Get an image with multiple faces:

Write an evaluation function that crops the input image around faces and returns the crops and facial landmarks:

Evaluate the function on the image:

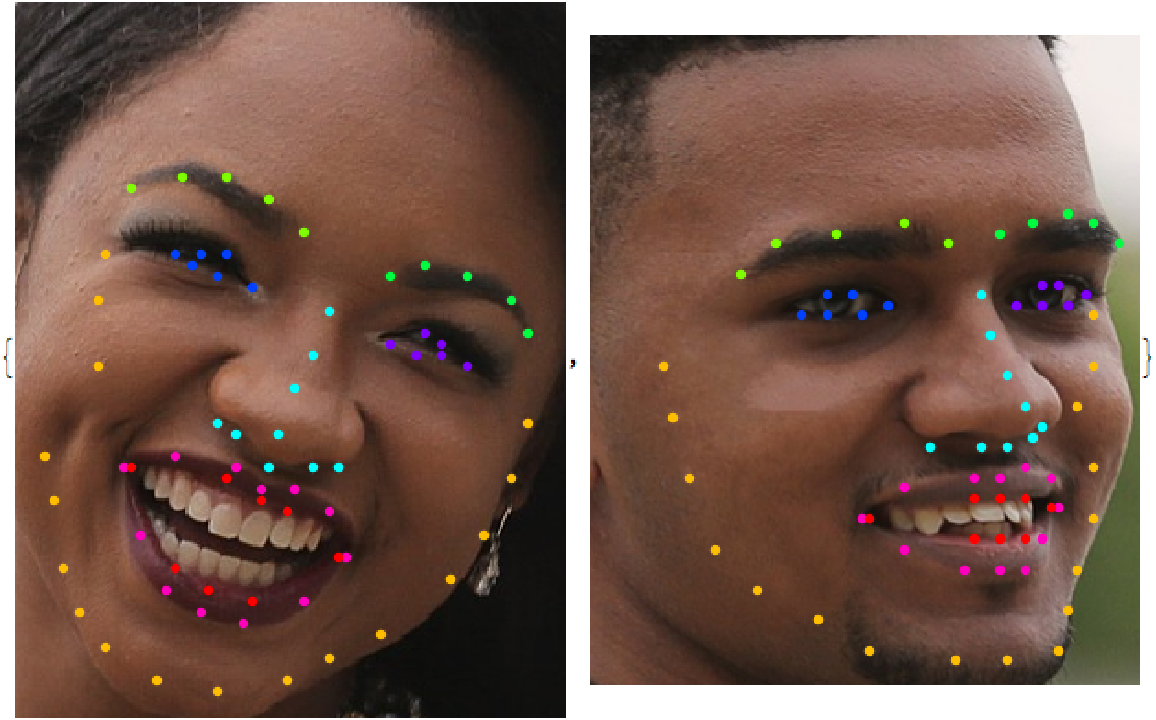

Visualize the landmarks:

Robustness to facial crop size

Get an image:

Crop the image at various sizes:
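The original crop sizes are not recoverable, so the following is only a sketch with made-up crop fractions. It assumes that the cloud object from the example-input cell preceding the crop visualization is the image used here:

```wolfram
(* Assumption: this cloud object holds the face image for this example. *)
img = CloudGet["https://www.wolframcloud.com/obj/3aba6a35-a7f4-4742-9be1-0f52cf395c21"];

(* Side of the largest centered square that fits in the image. *)
side = Min[ImageDimensions[img]];

(* Hypothetical crop fractions, from the full square down to a tight crop. *)
fractions = {1., 0.85, 0.7, 0.55};

(* ImageCrop keeps the center of the image, so each crop is a tighter view of the same region. *)
crops = Table[ImageCrop[img, Round[f side] {1, 1}], {f, fractions}]
```

The name `crops` matches the variable that the page's visualization cell maps over.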

Inspect the network performance across the crops:

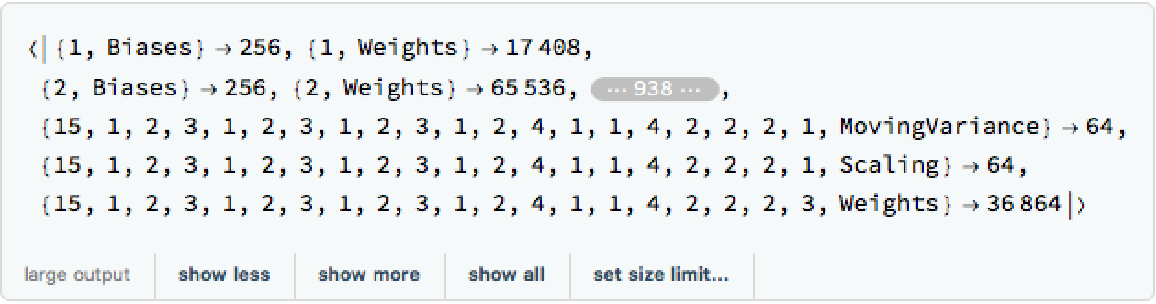

Net information

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

Display the summary graphic:

Export to MXNet

Export the net into a format that can be opened in MXNet:

Export also creates a net.params file containing parameters:

Get the size of the parameter file:

The size is similar to the byte count of the resource object:
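The export steps in this section can be sketched together as follows. The temporary directory and the variable names are arbitrary choices, and the "ByteCount" property is assumed to be available on the resource object:

```wolfram
net = NetModel["2D Face Alignment Net Trained on 300W Large Pose Data"];

(* Write the architecture as a JSON file in MXNet format. *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"]

(* The parameter file is written next to the JSON file. *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}]

(* Size of the parameter file in bytes. *)
FileByteCount[paramPath]

(* Byte count reported for the repository resource, for comparison. *)
ResourceObject["2D Face Alignment Net Trained on 300W Large Pose Data"]["ByteCount"]
```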

Requirements

Wolfram Language 11.2 (September 2017) or above


![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/8e5bda12-6fb3-41c3-b8b4-6143a4473f94"]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/1e5d294a7e972496.png)

![netevaluation[img_] := Block[
{heatmaps, posFlattened, posMat},
heatmaps = NetModel["2D Face Alignment Net Trained on 300W Large Pose Data"][img];
posFlattened = Map[First@Ordering[#, -1] &, Flatten[heatmaps, {{1}, {2, 3}}]];
posMat = QuotientRemainder[posFlattened - 1, 64] + 1;
1/64.*Map[{#[[2]], 64 - #[[1]] + 1} - 0.5 &, posMat]
]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/29bc64a6697fae78.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/35f10b4e-edcc-4d7a-9c1c-6a65d21e4dc1"]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/1ea5ba76913777d6.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/3c9c937b-280a-4b5a-a4d1-33fa50fc86b5"]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/2a9f0ea57f5f9b9d.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/a81c6a86-8b4b-4992-8a2c-79119765e53a"]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/55df4f8becf2d955.png)

![findFacialLandmarks[img_Image] := Block[
{crops, points},
crops = ImageTrim[img, #] & /@ FindFaces[img];
points = If[Length[crops] > 0, netevaluation /@ crops, {}];
MapThread[<|"Crop" -> #1, "Landmarks" -> #2|> &, {crops, points}]
]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/0c4ac7c45342fa71.png)

![HighlightImage[#Crop, Graphics@
Riffle[Thread@Hue[Range[8]/8.], Map[Point, Function[p, Part[#Landmarks, p]] /@ groupings]], DataRange -> {{0, 1}, {0, 1}}, ImageSize -> 300] & /@ output](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/4f9fa760fa4ba139.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/3aba6a35-a7f4-4742-9be1-0f52cf395c21"]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/793c003458e05c4e.png)

![HighlightImage[#, Graphics@
Riffle[Thread@Hue[Range[8]/8.], Map[Point, Function[p, Part[netevaluation[#], p]] /@ groupings]],
DataRange -> {{0, 1}, {0, 1}}, ImageSize -> 250] & /@ crops](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/1fb1bf99a94e4699.png)

![NetInformation[NetModel["2D Face Alignment Net Trained on 300W Large Pose Data"], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/7af92d29e0474399.png)

![NetInformation[NetModel["2D Face Alignment Net Trained on 300W Large Pose Data"], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/292ffdf6166dc429.png)

![NetInformation[NetModel["2D Face Alignment Net Trained on 300W Large Pose Data"], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/0b810e7c45c025ae.png)

![NetInformation[NetModel["2D Face Alignment Net Trained on 300W Large Pose Data"], "SummaryGraphic"]](https://www.wolframcloud.com/obj/resourcesystem/images/c35/c35b20c0-e8eb-47ca-a60e-a0ce1f2f6102/513d4aa33be5f875.png)