Resource retrieval

Get the pre-trained net:
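A minimal sketch of this step. The model name is the one used elsewhere on this page, and the first evaluation downloads the net:

```wolfram
(* Download (on first use) and load the trained DenseNet-121 *)
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"]
```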

Basic usage

Classify an image:

The prediction is an Entity object, which can be queried:

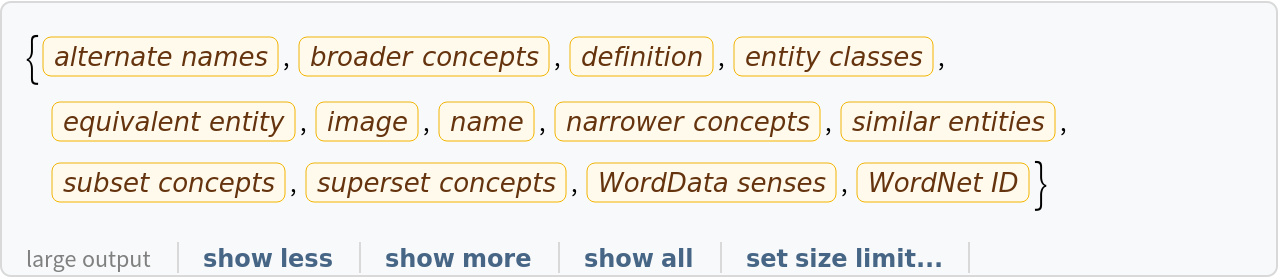
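A sketch that classifies an image and queries the resulting Entity. The input `ExampleData[{"TestImage", "Mandrill"}]` is an assumption chosen for illustration, and any Image works in its place. The "Name" property is the same one this page uses to list the class names:

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
(* Illustrative input: a built-in test image *)
img = ExampleData[{"TestImage", "Mandrill"}];
(* Applying the net to an image returns an Entity for the predicted class *)
pred = net[img]
(* Query one property of the predicted Entity *)
EntityValue[pred, "Name"]
```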

Get a list of available properties of the predicted Entity:

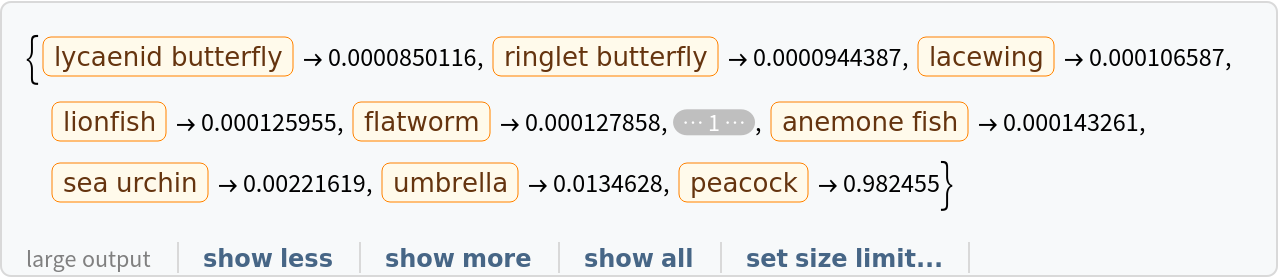
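A sketch that calls `EntityValue` with the "Properties" argument on the Entity returned by the net. It uses the same illustrative test image as above:

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
pred = net[ExampleData[{"TestImage", "Mandrill"}]];
(* List every property that can be queried for the predicted Entity *)
props = EntityValue[pred, "Properties"]
```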

Obtain the probabilities of the 10 most likely entities predicted by the net:
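The net's "Class" output decoder accepts a property specification as a second argument. A sketch using the same illustrative test image:

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
img = ExampleData[{"TestImage", "Mandrill"}];
(* {"TopProbabilities", n} gives the n most likely classes with their probabilities *)
top10 = net[img, {"TopProbabilities", 10}]
```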

Because the net can only answer with one of the ImageNet classes, an object outside that list will be misidentified:

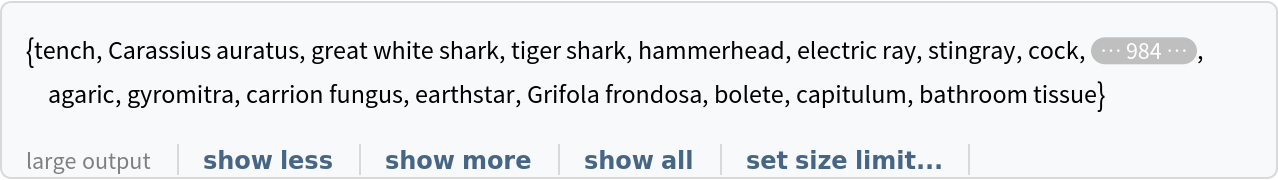

Obtain the list of names of all available classes:
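A sketch that reads the class labels stored in the net's "Output" decoder and converts each Entity to its name. The ImageNet competition data has 1,000 classes:

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
(* The "Class" decoder attached to the output port stores the label list *)
labels = NetExtract[net, "Output"][["Labels"]];
(* Convert the class Entities to their names *)
names = EntityValue[labels, "Name"];
Length[names]
```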

Feature extraction

Remove the last three layers of the trained net so that the net produces a vector representation of an image:
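A sketch using `NetDrop`, which removes layers from the end of a net when given a negative count. This assumes a Wolfram Language version that provides `NetDrop`; for a NetChain, `Take[net, {1, -4}]` is an alternative. The count of three comes from the text above:

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
(* Drop the last three layers so the net outputs a feature vector *)
extractor = NetDrop[net, -3];
features = extractor[ExampleData[{"TestImage", "Mandrill"}]];
Dimensions[features]
```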

Get a set of images:

Visualize the features of a set of images:

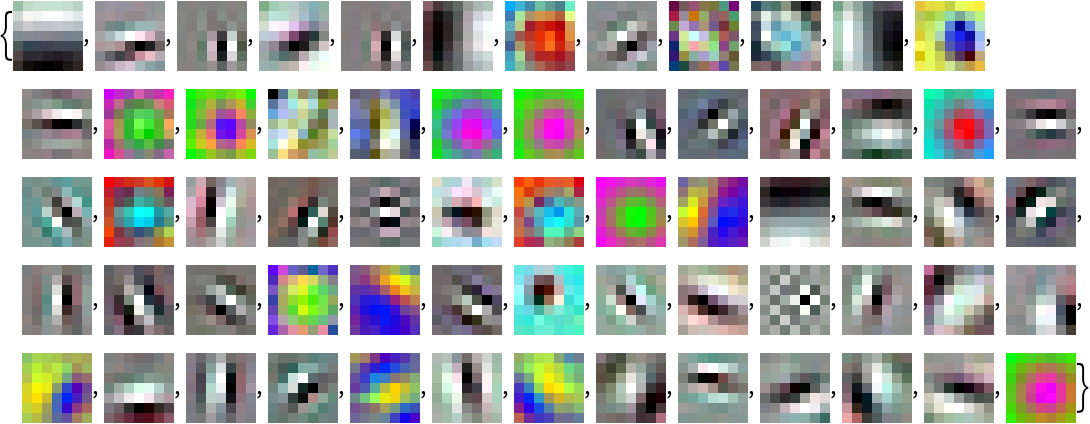
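A sketch of both steps. The image set here, the first ten built-in test images, is an assumption that stands in for the page's own example images. `FeatureSpacePlot` embeds them using the truncated net as its feature extractor:

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
extractor = NetDrop[net, -3];
(* Illustrative image set: the first ten built-in test images *)
imgs = ExampleData /@ Take[ExampleData["TestImage"], 10];
(* Project the DenseNet features to 2D and place each image at its position *)
FeatureSpacePlot[imgs, FeatureExtractor -> extractor, ImageSize -> 600]
```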

Visualize convolutional weights

Extract the weights of the first convolutional layer in the trained net:

Show the dimensions of the weights:
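A sketch that extracts the kernel array with `NetExtract`. The layer name "conv1" is a hypothetical placeholder. Read the real name of the first convolution layer from the net's summary (see Net information) and substitute it:

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
(* "conv1" is a hypothetical layer name; replace it with the actual first ConvolutionLayer *)
weights = NetExtract[net, {"conv1", "Weights"}];
(* Layout: {output channels, input channels, height, width} *)
Dimensions[weights]
```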

Visualize the weights as a list of 64 images of size 7⨯7:
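A sketch that turns each 3⨯7⨯7 kernel into a small RGB image, treating the first dimension as color channels, and rescales the values for display. It again relies on the hypothetical layer name "conv1":

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
weights = NetExtract[net, {"conv1", "Weights"}]; (* hypothetical layer name *)
(* Interleaving -> False: the first array dimension holds the 3 color channels *)
kernelImages = ImageAdjust[Image[#, Interleaving -> False]] & /@ Normal[weights];
(* Enlarge the 7x7 kernels so they are visible *)
ImageResize[#, 40] & /@ kernelImages
```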

Transfer learning

Use the pre-trained model to build a classifier for telling apart images of sunflowers and roses. Create a test set and a training set:
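A sketch of the split. It assumes two hypothetical lists of Image objects, `sunflowerImages` and `roseImages`, which you supply yourself; the page's own example images are not reproduced here. The 80/20 split ratio is also an assumption:

```wolfram
(* sunflowerImages and roseImages are hypothetical lists of Image objects *)
data = RandomSample[Join[
    Thread[sunflowerImages -> "sunflower"],
    Thread[roseImages -> "rose"]]];
(* Hold out 20% of the labeled examples for testing *)
{trainSet, testSet} = TakeDrop[data, Floor[0.8 Length[data]]];
```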

Remove the last two layers from the pre-trained net:
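A sketch using `NetDrop`. The name `tempNet` matches the one used in this page's `NetChain` code for the new net:

```wolfram
(* Keep everything except the final two layers of the pre-trained classifier *)
tempNet = NetDrop[NetModel["DenseNet-121 Trained on ImageNet Competition Data"], -2]
```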

Create a new net composed of the pre-trained net followed by a linear layer and a softmax layer:
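A sketch of the new chain. The linear layer is named "linearNew" to match the layer name used in the training step. Its output size is inferred from the two-class output decoder:

```wolfram
tempNet = NetDrop[NetModel["DenseNet-121 Trained on ImageNet Competition Data"], -2];
(* Pre-trained body + new linear layer + softmax, decoded into two class labels *)
newNet = NetChain[<|
    "pretrainedNet" -> tempNet,
    "linearNew" -> LinearLayer[],
    "softmax" -> SoftmaxLayer[]|>,
  "Output" -> NetDecoder[{"Class", {"sunflower", "rose"}}]]
```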

Train on the dataset, freezing all the weights except for those in the "linearNew" layer (use TargetDevice -> "GPU" for training on a GPU):
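A sketch of the training call. It assumes `newNet` from the previous step and a hypothetical `trainSet` list of `image -> label` rules. `LearningRateMultipliers` sets the learning rate of "linearNew" to 1 and of every other layer to 0, which freezes those layers:

```wolfram
(* Only "linearNew" is updated; the pattern _ -> 0 freezes all remaining layers *)
trainedNet = NetTrain[newNet, trainSet,
  LearningRateMultipliers -> {"linearNew" -> 1, _ -> 0}
  (* add TargetDevice -> "GPU" here to train on a GPU *)]
```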

Accuracy obtained on the test set:
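A sketch using `ClassifierMeasurements`. It assumes `trainedNet` and the hypothetical held-out `testSet` from the earlier steps:

```wolfram
(* Fraction of test images whose predicted label matches the true label *)
accuracy = ClassifierMeasurements[trainedNet, testSet, "Accuracy"]
```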

Net information

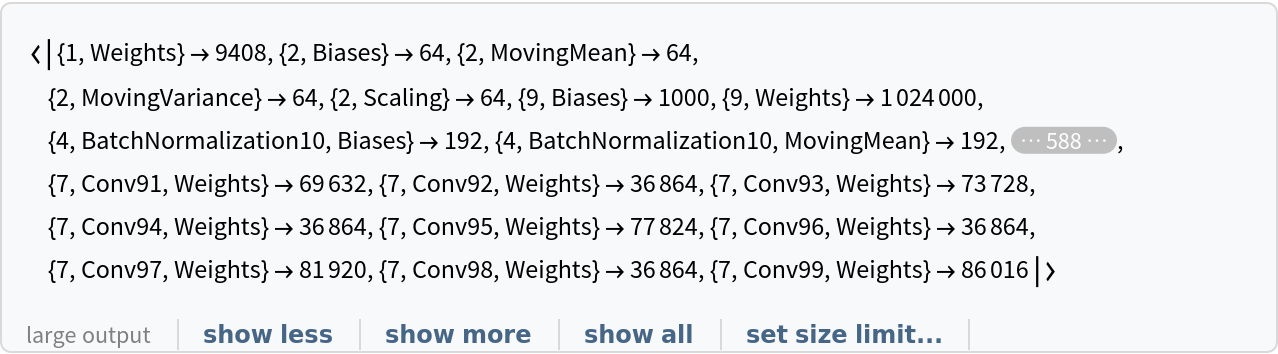

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:
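A sketch of both parameter queries with `NetInformation`. Newer versions may expose the same property names through `Information` instead:

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
(* Number of elements in every learned array *)
NetInformation[net, "ArraysElementCounts"]
(* Total number of learned parameters *)
totalParams = NetInformation[net, "ArraysTotalElementCount"]
```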

Obtain the layer type counts:

Display the summary graphic:
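A sketch of the layer-count and summary queries, again with `NetInformation`:

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
(* How many layers of each type the net contains *)
layerCounts = NetInformation[net, "LayerTypeCounts"]
(* Graphical overview of the architecture *)
NetInformation[net, "SummaryGraphic"]
```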

Export to ONNX

Export the net to the ONNX format:
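A sketch of the export. The file name in the temporary directory is an assumption; the `.onnx` extension is what selects the format:

```wolfram
net = NetModel["DenseNet-121 Trained on ImageNet Competition Data"];
(* Export infers the ONNX format from the file extension and returns the file path *)
onnxFile = Export[FileNameJoin[{$TemporaryDirectory, "densenet121.onnx"}], net]
```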

Get the size of the ONNX file:

The size is similar to the byte count of the resource object:
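A sketch of the size comparison. It assumes the file was written to the temporary-directory path used in the export sketch:

```wolfram
onnxFile = FileNameJoin[{$TemporaryDirectory, "densenet121.onnx"}]; (* assumed export path *)
(* Size of the file on disk, in bytes *)
onnxBytes = FileByteCount[onnxFile]
(* Memory footprint of the in-session net object, in bytes *)
netBytes = ByteCount[NetModel["DenseNet-121 Trained on ImageNet Competition Data"]]
```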

Check some metadata of the ONNX model:
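A sketch that lists the elements `Import` can read from the file, assuming the same export path. Pick the metadata fields to inspect, such as version information, from that list rather than guessing them:

```wolfram
onnxFile = FileNameJoin[{$TemporaryDirectory, "densenet121.onnx"}]; (* assumed export path *)
(* Names of the elements Import can extract from this ONNX file *)
elements = Import[onnxFile, "Elements"]
```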

Import the model back into the Wolfram Language. However, the NetEncoder and NetDecoder will be absent because they are not supported by ONNX:
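A sketch of the round trip, assuming the same export path. Because the file does not store the image encoder or the class decoder, the imported net takes and returns raw arrays:

```wolfram
onnxFile = FileNameJoin[{$TemporaryDirectory, "densenet121.onnx"}]; (* assumed export path *)
(* Import rebuilds a Wolfram Language net from the ONNX graph *)
importedNet = Import[onnxFile]
```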

![EntityValue[NetExtract[NetModel["DenseNet-121 Trained on ImageNet Competition Data"], "Output"][["Labels"]], "Name"]](https://www.wolframcloud.com/obj/resourcesystem/images/ab9/ab94ed52-aebb-440c-878b-c7d5621e7e12/1b0bb2240e177b58.png)

![newNet = NetChain[<|"pretrainedNet" -> tempNet, "Agg" -> AggregationLayer[Mean], "linear" -> LinearLayer[], "softmax" -> SoftmaxLayer[]|>, "Output" -> NetDecoder[{"Class", {"sunflower", "rose"}}]]](https://www.wolframcloud.com/obj/resourcesystem/images/ab9/ab94ed52-aebb-440c-878b-c7d5621e7e12/6dd031610463d5d4.png)