YOLOX Trained on MS-COCO Data

YOLO (You Only Look Once) Version X (YOLOX) is a family of object detection models published in August 2021. It revisits the YOLOv3-DarkNet53 model with several architectural and training improvements: decoupled classification and regression heads, an anchor-free pipeline, an advanced label-assignment strategy named SimOTA (Simplified Optimal Transport Assignment) and strong data augmentation.

Examples

Resource retrieval

Get the pre-trained net:
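A minimal sketch, assuming a Wolfram Language session with access to the Wolfram Neural Net Repository; the model name is the one used elsewhere on this page, and the first call downloads the weights:

```wolfram
(* retrieve the default YOLOX net; it is downloaded on first use *)
net = NetModel["YOLOX Trained on MS-COCO Data"]
```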

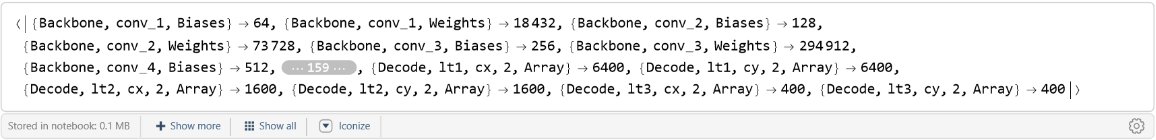

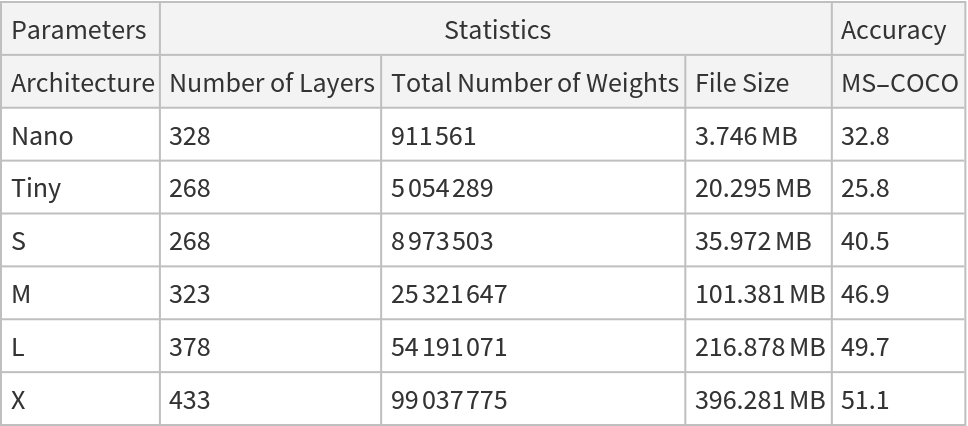

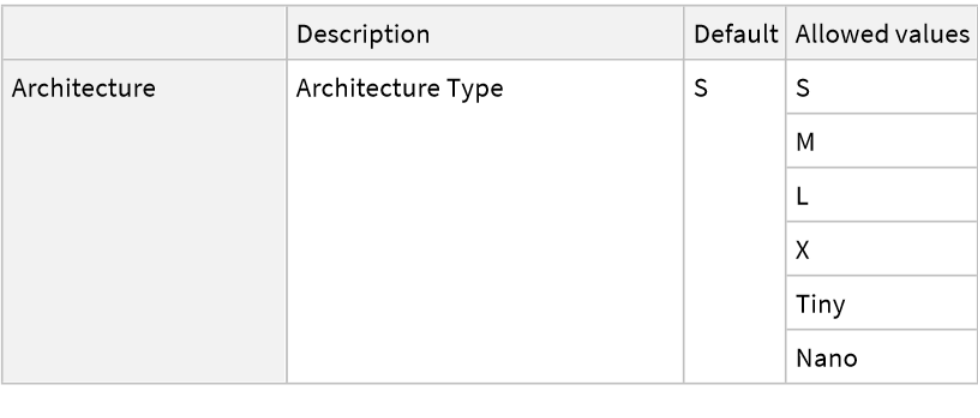

NetModel parameters

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:
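The parameter table can likely be listed through the `"ParametersInformation"` property of `NetModel`; this sketch assumes the property is available for this entry, as it is for other multi-net repository models:

```wolfram
(* list the parameters that identify each net in the YOLOX family *)
params = NetModel["YOLOX Trained on MS-COCO Data", "ParametersInformation"]
```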

Pick a non-default net by specifying the parameters:
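For example, the `"L"` architecture (the parameter value that also appears in the uninitialized-net cell on this page) can be requested by pairing the model name with the parameter rule. Other accepted values should be read from the parameter table rather than assumed:

```wolfram
(* select the "L" variant of the family by its parameter value *)
netL = NetModel[{"YOLOX Trained on MS-COCO Data", "Architecture" -> "L"}]
```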

Pick a non-default uninitialized net:

Evaluation function

Write an evaluation function that rescales the boxes to the input image size, discards low-probability detections and removes overlapping boxes with non-maximum suppression:

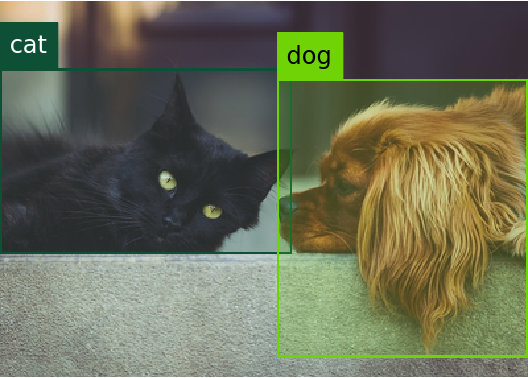
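The coordinate step of such a function can be checked without running the net. The sketch below uses a hypothetical helper, `toImageRectangle`, that reproduces the rescaling arithmetic of `netevaluate` for a single box. It assumes boxes arrive as `{cx, cy, width, height}` in the 640x640 net frame with y measured from the top, and that the net input was padded to a square, as the `padding` computation in `netevaluate` implies:

```wolfram
(* hypothetical helper mirroring the box transform inside netevaluate:
   {cx, cy, bw, bh} in the square net frame (y from the top) becomes a
   Rectangle in the original image frame (y from the bottom) *)
toImageRectangle[{cx_, cy_, bw_, bh_}, {w_, h_}, imgSize_ : 640] :=
 Module[{max, scale, padding},
  max = Max[w, h];
  (* factor from net pixels back to original image pixels *)
  scale = max/imgSize;
  (* padding assumed to be present in the net input, in net pixels *)
  padding = imgSize*(1 - {w, h}/max);
  (* horizontal padding is halved, following netevaluate *)
  padding[[1]] = padding[[1]]/2;
  Rectangle[
   scale*({cx - bw/2, imgSize - cy - bh/2} - padding),
   scale*({cx + bw/2, imgSize - cy + bh/2} - padding)]
  ]
```

For a 1280x640 image, `toImageRectangle[{320, 160, 100, 50}, {1280, 640}]` gives `Rectangle[{540, 270}, {740, 370}]`: the scale factor is 2, and the 320 net pixels of vertical padding are removed before scaling.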

Basic usage

Obtain the detected bounding boxes with their corresponding classes and confidences for a given image:
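A usage sketch, assuming `netevaluate` from the evaluation function and `testImage` from the example-input cell on this page have already been evaluated. Following the structure of `finals` inside `netevaluate`, each result is a `{Rectangle[...], class, score}` triple:

```wolfram
(* run detection with the default thresholds (0.5 objectness, 0.5 overlap) *)
detection = netevaluate[NetModel["YOLOX Trained on MS-COCO Data"], testImage]
```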

Inspect which classes are detected:
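Assuming `detection` holds the `{box, class, score}` triples returned by `netevaluate`, the class names sit in the second position and can be tallied:

```wolfram
(* count how many boxes were kept for each class name *)
classCounts = Counts[detection[[All, 2]]]
```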

Visualize the detection:
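One simple way to draw the kept boxes, again assuming `detection` (the output of `netevaluate`) and `testImage` as defined on this page. This version outlines the boxes only and does not print class labels:

```wolfram
(* outline every detected box on the original image *)
highlighted = HighlightImage[testImage, detection[[All, 1]]]
```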

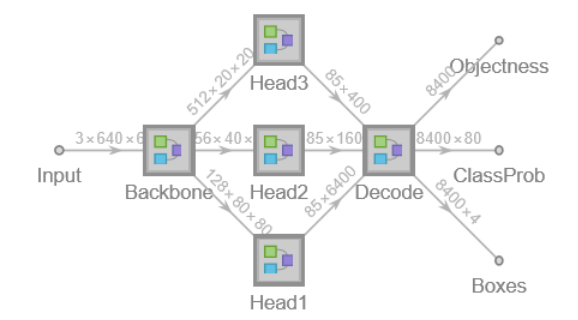

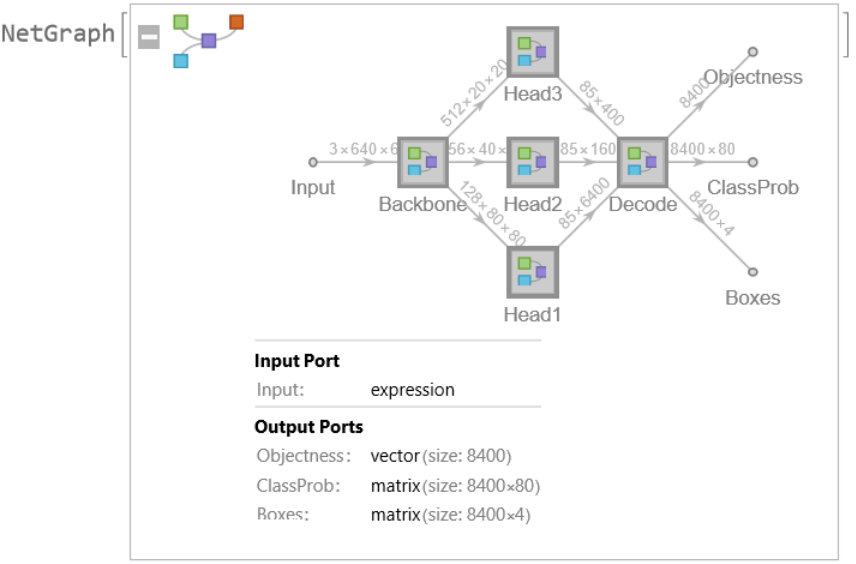

Network result

The network computes 8,400 bounding boxes, an "objectness" score for each box and the probability that the object in each box belongs to each class:

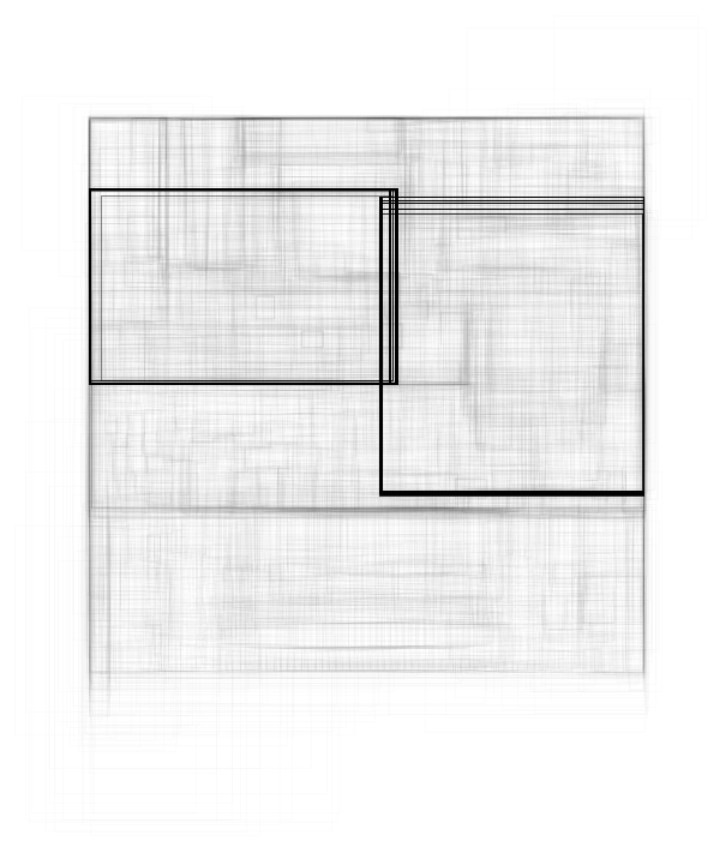
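The figure of 8,400 can be checked with a short calculation, assuming a 640x640 input and the three feature-map strides 8, 16 and 32 used by YOLOX. Because the pipeline is anchor-free, each grid cell predicts a single box:

```wolfram
(* assumptions: 640x640 input, strides 8, 16 and 32, one prediction per grid cell *)
inputSize = 640;
strides = {8, 16, 32};
gridSides = inputSize/strides   (* {80, 40, 20} *)
Total[gridSides^2]              (* 6400 + 1600 + 400 = 8400 *)
```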

Rescale the bounding boxes to the coordinates of the input image and visualize them scaled by their "objectness" measures:

Visualize all the boxes scaled by the probability that they contain a cat:

Superimpose the cat prediction on top of the scaled input received by the net:

Net information

Inspect the number of parameters of all arrays in the net:
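A sketch assuming a Wolfram Language version in which `Information` accepts neural-net properties (older versions expose the same property names through `NetInformation`):

```wolfram
(* element count of every weight array, keyed by its position in the net *)
arrayCounts = Information[NetModel["YOLOX Trained on MS-COCO Data"], "ArraysElementCounts"]
```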

Obtain the total number of parameters:
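Assuming `Information` supports net properties in the running version, the total is a single property lookup:

```wolfram
(* total number of learned parameters across all arrays *)
totalParams = Information[NetModel["YOLOX Trained on MS-COCO Data"], "ArraysTotalElementCount"]
```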

Obtain the layer type counts:
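A similar sketch for layer types, again assuming `Information` accepts net properties in the running version:

```wolfram
(* how many layers of each type (convolution, batch normalization, ...) the net contains *)
layerCounts = Information[NetModel["YOLOX Trained on MS-COCO Data"], "LayerTypeCounts"]
```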

Display the summary graphic:
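The summary graphic can presumably be requested through the same `Information` mechanism, assuming it supports net properties in the running version:

```wolfram
(* graphical overview of the net's layer structure *)
summary = Information[NetModel["YOLOX Trained on MS-COCO Data"], "SummaryGraphic"]
```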


Reference

- Z. Ge, S. Liu, F. Wang, Z. Li, J. Sun, "YOLOX: Exceeding YOLO Series in 2021," arXiv:2107.08430 (2021)

- Available from:

Rights:

Apache License

![NetModel[{"YOLOX Trained on MS-COCO Data", "Architecture" -> "L"}, "UninitializedEvaluationNet"]](https://www.wolframcloud.com/obj/resourcesystem/images/69e/69ec7641-a8bc-4c3b-a4a2-2f4195d2ef3b/719105f4f651b222.png)

![netevaluate[model_, img_, detectionThreshold_ : .5, overlapThreshold_ : .5] := Module[{imgSize, classes, coords, obj, scores, bestClass, probable, probableClasses, probableScores, probableBoxes, h, w, max, scale, padding, nms, finals},
imgSize = Last@NetExtract[model, {"Input", "Output"}];
{obj, classes, coords} = Values@model[img];
(*each class probability is rescaled with the box objectness*)
scores = classes*obj;
bestClass = Last@*Ordering /@ scores;
(*filter by probability: boxes whose objectness is below detectionThreshold are discarded*)
(*labels is assumed to be the list of MS-COCO class names used by the net*)
probable = UnitStep[obj - detectionThreshold];
{probableClasses, probableBoxes, probableScores} = Map[Pick[#, probable, 1] &, {labels[[bestClass]], coords, obj}];
If[Length[probableBoxes] == 0, Return[{}]];
(*transform coordinates into rectangular boxes*)
{w, h} = ImageDimensions[img];
max = Max[{w, h}];
scale = max/imgSize;
padding = imgSize*(1 - {w, h}/max);
padding[[1]] = padding[[1]]/2;
probableBoxes = Apply[
Rectangle[
scale*({#1 - #3/2, imgSize - #2 - #4/2} - padding),
scale*({#1 + #3/2, imgSize - #2 + #4/2} - padding)
] &, probableBoxes, 1];
(*perform non-maximum suppression over all boxes, regardless of class, using overlapThreshold*)
nms = ResourceFunction["NonMaximumSuppression"][probableBoxes -> probableScores, "Index", MaxOverlapFraction -> overlapThreshold];
finals = Transpose[{probableBoxes, probableClasses, probableScores}];
Part[finals, nms]
];](https://www.wolframcloud.com/obj/resourcesystem/images/69e/69ec7641-a8bc-4c3b-a4a2-2f4195d2ef3b/4929e7048b7caecc.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/96f1ffd2-3499-4db5-beaa-3345691fceaf"]](https://www.wolframcloud.com/obj/resourcesystem/images/69e/69ec7641-a8bc-4c3b-a4a2-2f4195d2ef3b/32356a11a5e32496.png)

![(*res is assumed to hold the raw net output, e.g. res = NetModel["YOLOX Trained on MS-COCO Data"][testImage]*)
rectangles = Block[
{w, h, max, imgSize, scale, padding},
{w, h} = ImageDimensions[testImage];
max = Max[{w, h}];
imgSize = 640;
scale = max/imgSize;
padding = imgSize*(1 - {w, h}/max);
Apply[
Rectangle[
scale*({#1 - #3/2, imgSize - #2 - #4/2} - padding),
scale*({#1 + #3/2, imgSize - #2 + #4/2} - padding)
] &,
res["Boxes"],
1
]
];](https://www.wolframcloud.com/obj/resourcesystem/images/69e/69ec7641-a8bc-4c3b-a4a2-2f4195d2ef3b/601cec0924485888.png)

![Graphics[
(*idx is the position of the "cat" class among the class probabilities*)
MapThread[{EdgeForm[Opacity[#1 + .01]], #2} &, {res["Objectness"]*Extract[res["ClassProb"], {All, idx}], rectangles}],
BaseStyle -> {FaceForm[], EdgeForm[{Thin, Black}]}
]](https://www.wolframcloud.com/obj/resourcesystem/images/69e/69ec7641-a8bc-4c3b-a4a2-2f4195d2ef3b/1b09c2017b3c3924.png)

![HighlightImage[testImage, Graphics[
MapThread[{EdgeForm[{Thickness[#1/100], Opacity[(#1 + .01)/3]}], #2} &, {res["Objectness"]*Extract[res["ClassProb"], {All, idx}], rectangles}]], BaseStyle -> {FaceForm[], EdgeForm[{Thin, Red}]}]](https://www.wolframcloud.com/obj/resourcesystem/images/69e/69ec7641-a8bc-4c3b-a4a2-2f4195d2ef3b/0d00fe5fa3ffcaac.png)