Resource retrieval

Get the pre-trained net:
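A minimal sketch of the retrieval call, using the model name that appears in the code on this page. The variable name `net` is only illustrative, and the first evaluation downloads the weights. Judging from `getLanguage`, which feeds audio directly to this net to obtain features, the default net appears to be the audio encoder:

```wolfram
(* Download (on first use) and load the default pre-trained net. *)
net = NetModel["Whisper-V1 Multilingual Turbo"]
```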

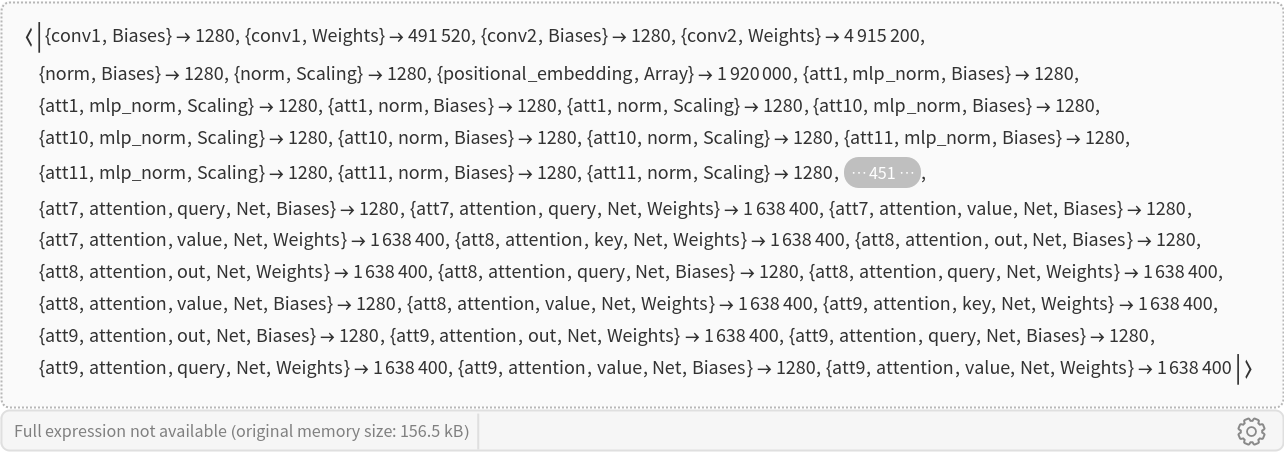

NetModel parameters

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:
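As a sketch, the parameter listing can be requested with the "ParametersInformation" property that NetModel provides for parameterized models. The variable name `params` is illustrative, and the exact form of the result is not assumed here:

```wolfram
(* List the parameters (and their allowed values) that select a net from the family. *)
params = NetModel["Whisper-V1 Multilingual Turbo", "ParametersInformation"]
```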

Pick a non-default net by specifying the parameters:
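For example, the "Part" parameter used in the code on this page selects the text decoder instead of the default net. This is a sketch with an illustrative variable name; other parameter values are not assumed:

```wolfram
(* Select the decoder net of the family via the "Part" parameter. *)
textDecoder = NetModel[{"Whisper-V1 Multilingual Turbo", "Part" -> "TextDecoder"}]
```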

Pick a non-default uninitialized net:

Get the labels:
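A short sketch using the "Labels" property, as `getLanguage` does on this page. The labels are used there as a list indexed by token code, so `Length` gives the vocabulary size:

```wolfram
(* Retrieve the token labels; a token code is a position in this list. *)
labels = NetModel["Whisper-V1 Multilingual Turbo", "Labels"];
Length[labels]
```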

Evaluation function

Write an evaluation function to combine the encoder and decoder nets into a full transcription pipeline:

Basic usage

Transcribe speech in English:

Transcribe speech in Spanish:

Whisper can detect the language of the audio sample automatically, but the "Language" option can be used to specify it in advance. Transcribe speech in Japanese:

Set the option "IncludeTimestamps" to True to add timestamps at the beginning and end of the audio sample:

Feature extraction

Get a set of audio samples with human speech and background noise:

Define a feature extraction using the Whisper encoder:

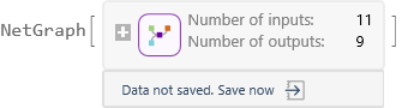

Visualize the feature space embedding performed by the audio encoder. Notice that the audio samples from the same class are clustered together:

Get a set of English and German audio samples:

Visualize the feature space embedding and observe how the audio samples cluster by language:

Language identification

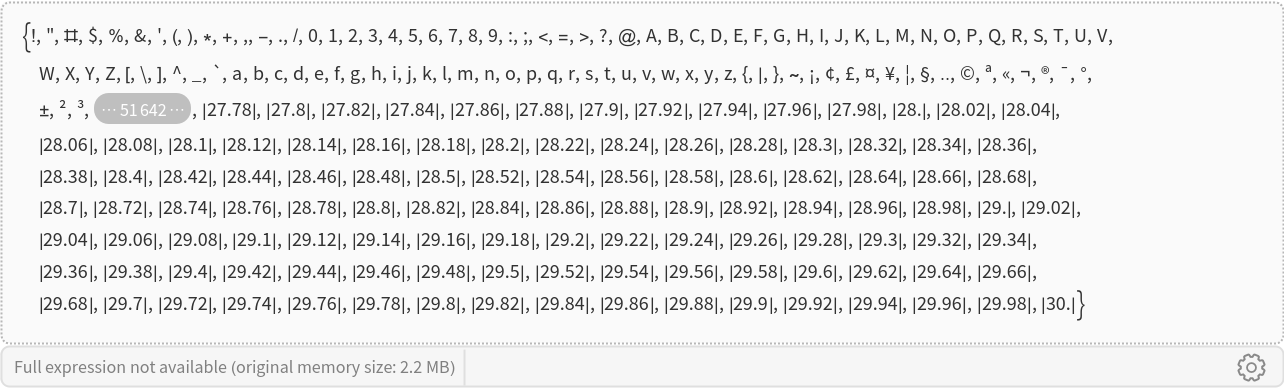

Whisper can transcribe speech in one hundred different languages. Retrieve the list of available languages from the label set:

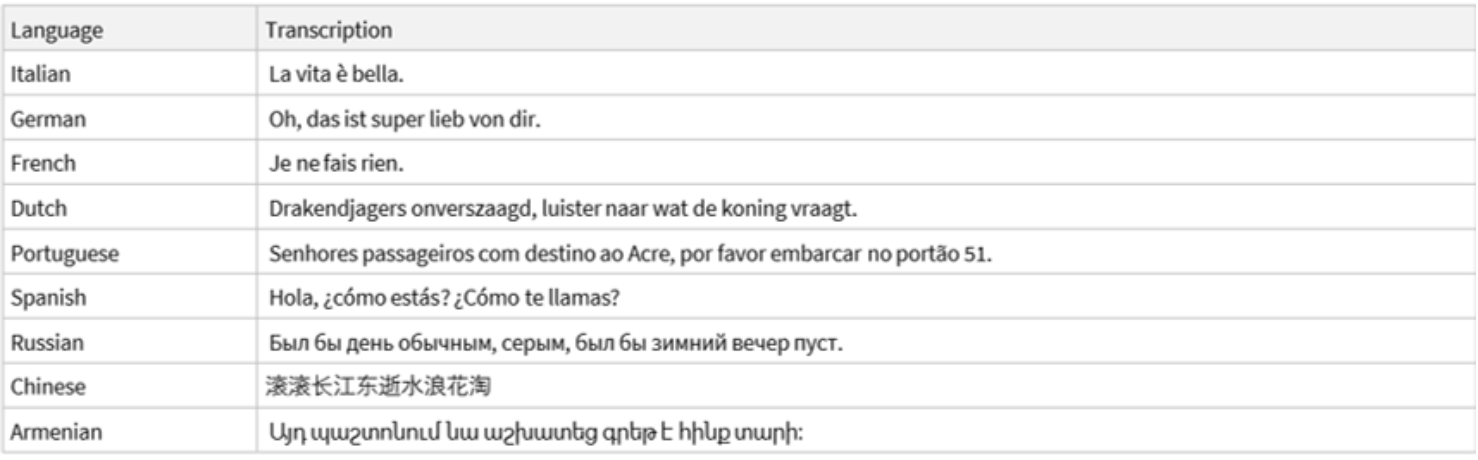

Obtain a collection of audio samples featuring speakers of different languages:

Define a function to detect the language of the audio sample. Whisper determines the language by selecting the most likely language token after the initial pass of the decoder (the following code needs definitions from the "Evaluation function" section):

Detect the languages:

Transcribe the audio samples:

Transcription generation

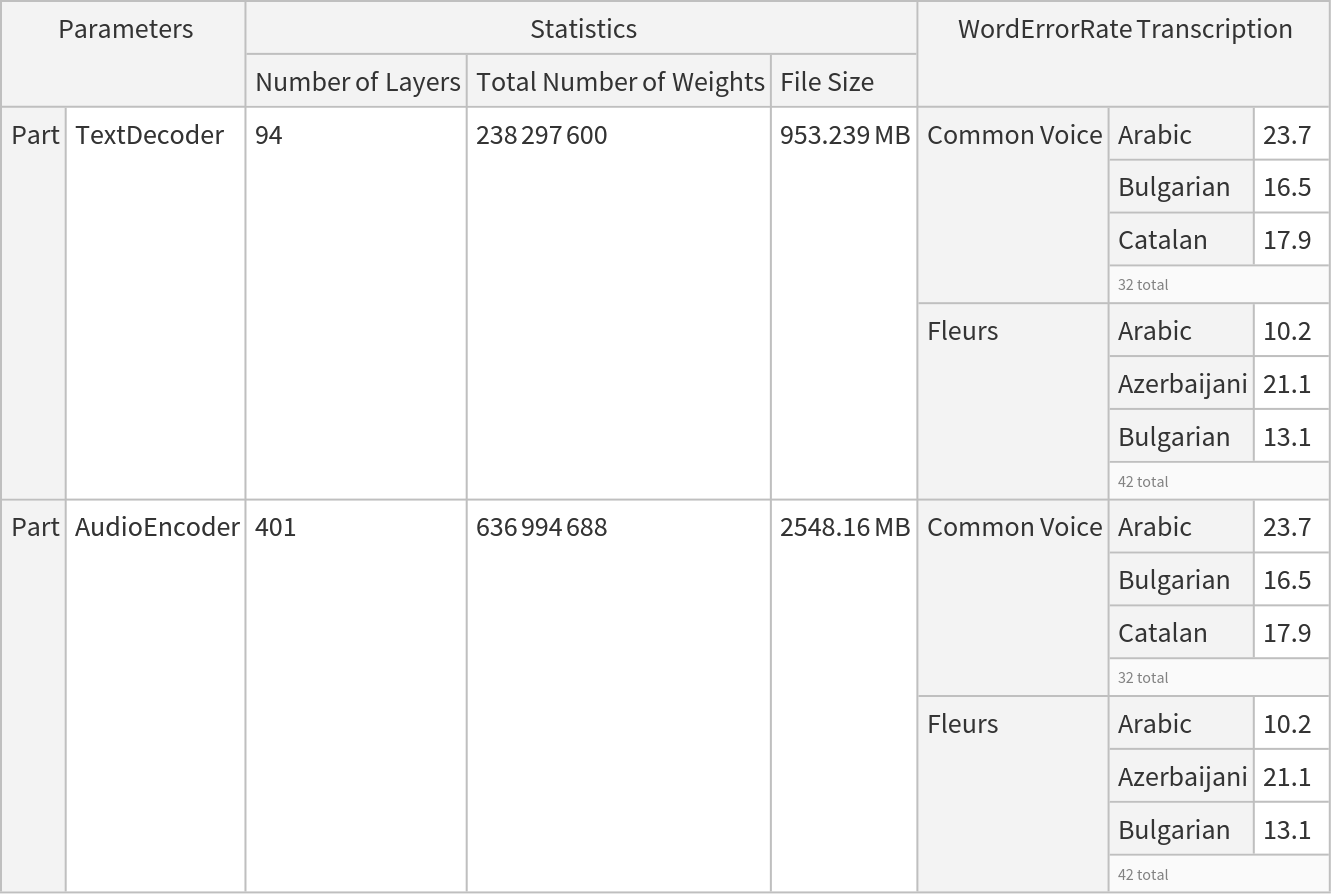

The transcription pipeline makes use of two separate transformer nets, encoder and decoder:

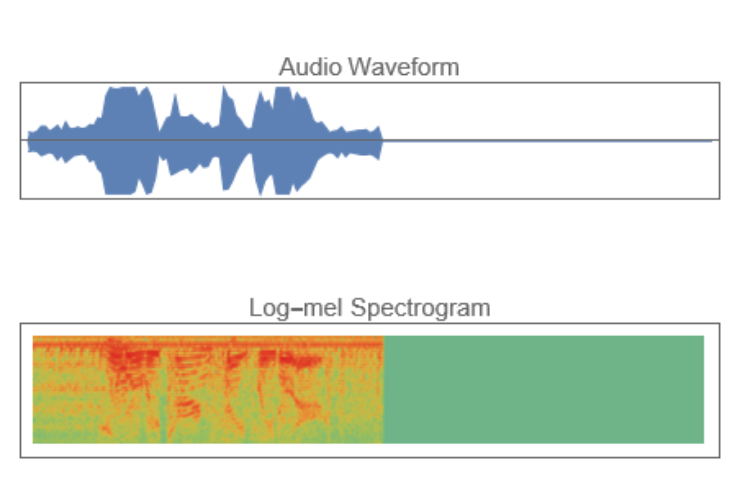

The encoder preprocesses the input audio into a log-Mel spectrogram, capturing the signal's frequency content over time:

Get an input audio sample and compute its log-Mel spectrogram:

Visualize the log-Mel spectrogram and the audio waveform:

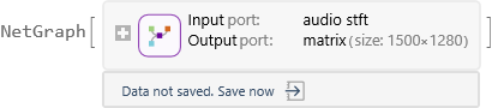

The encoder processes the input once, producing a feature matrix of size 1500×1280:

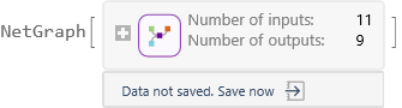

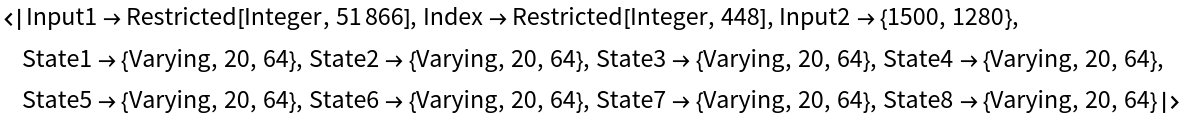

The decoding step involves running the decoder multiple times recursively, with each iteration producing a subword token of the transcribed audio. The decoder receives several inputs:

• The port "Input1" takes the subword token generated by the previous evaluation of the decoder.

• The port "Index" takes an integer keeping count of how many times the decoder was evaluated (positional encoding).

• The port "Input2" takes the encoded features produced by the encoder. The data fed to this input is the same for every evaluation of the decoder.

• The ports "State1", "State2", ... take the self-attention key and value arrays for all the past tokens. Their size grows by one at each evaluation. The decoder has four attention blocks, which makes for eight states: four key arrays and four value arrays.
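Since no past tokens exist before the first evaluation, every state port can start as an empty list. The sketch below builds such an association from a list of port names. The helper name `emptyStates` and the hard-coded port names are illustrative assumptions that follow the description above:

```wolfram
(* Build empty initial states from a list of input port names.
   The helper name emptyStates is illustrative, not part of the model. *)
emptyStates[portNames_List] :=
  AssociationMap[Function[name, {}], Select[portNames, StringStartsQ["State"]]];

(* Hypothetical port names matching the description above:
   three fixed inputs plus eight state ports. *)
ports = Join[{"Index", "Input1", "Input2"}, Table["State" <> ToString[k], {k, 8}]];

emptyStates[ports]
```

With the actual decoder, the port names can be read with `Information[textDecoder, "InputPortNames"]`, which is how `getLanguage` on this page initializes its states.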

The initial prompt is a sequence of context tokens that guides Whisper's decoding process by specifying the task to perform and the audio's language. These tokens can be hard-coded to explicitly control the output or left flexible, allowing the model to automatically detect the language and task. Define the initial prompt for transcribing audio in Spanish:

Retrieve the integer codes of the prompt tokens:

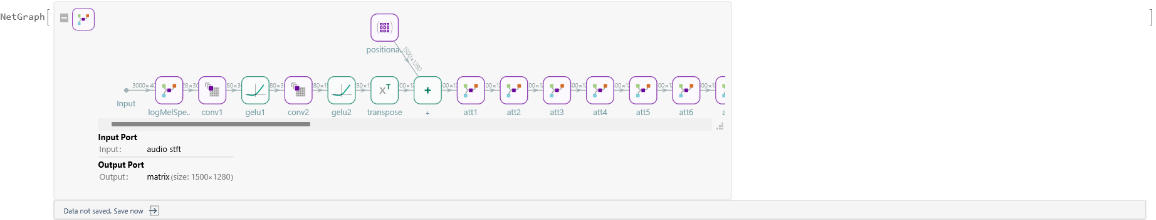

Before starting the decoding process, initialize the decoder's inputs:

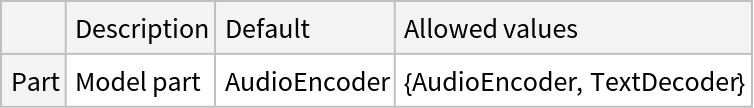

Use the decoder iteratively to transcribe the audio. The recursion keeps going until the EndOfString token is generated or the maximum number of iterations is reached:

Display the generated tokens:

Obtain a readable representation of the tokens by decoding the token text as UTF-8:
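A minimal sketch of this conversion, modeled on the byte handling in `compressionRatio` on this page. It assumes each label character stands for one byte, so a multi-byte character may be split across tokens. The helper name `tokensToText` and the toy vocabulary are illustrative and not the real label set:

```wolfram
(* Illustrative helper: concatenate the character codes of the selected labels
   and reinterpret them as a UTF-8 byte sequence. *)
tokensToText[tokens_List, labels_List] :=
  FromCharacterCode[Flatten@ToCharacterCode[labels[[tokens]], "Unicode"], "UTF8"];

(* Toy vocabulary: the character "ñ" is split into its two UTF-8 bytes, 195 and 177. *)
toyLabels = {"Hola", " se", FromCharacterCode[195], FromCharacterCode[177], "or"};

tokensToText[{1, 2, 3, 4, 5}, toyLabels]
```

Decoding all tokens at once, rather than one label at a time, is what lets the two byte-level tokens recombine into a single character.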

Net information

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

Display the summary graphic:
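The four inspections above can be sketched with the standard `Information` properties for nets. The variable name `net` is illustrative and the outputs are not reproduced here:

```wolfram
net = NetModel["Whisper-V1 Multilingual Turbo"];

Information[net, "ArraysElementCounts"]      (* number of parameters in each array *)
Information[net, "ArraysTotalElementCount"]  (* total number of parameters *)
Information[net, "LayerTypeCounts"]          (* number of layers of each type *)
Information[net, "SummaryGraphic"]           (* summary graphic *)
```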

![NetModel[{"Whisper-V1 Multilingual Turbo", "Part" -> "TextDecoder"}, "UninitializedEvaluationNet"]](https://www.wolframcloud.com/obj/resourcesystem/images/c10/c10dad08-bd7e-4e24-960c-3b22cf1eb532/7216b91f676eb626.png)

Define helper functions to suppress tokens, rescale logits by temperature and sample a token:

```wolfram
suppress[logits_, tokenIds_ : {}] := ReplacePart[logits, Thread[tokenIds -> -Infinity]];

rescore[logits_, temp_ : 1] := Block[{expRescaledLog, total},
  expRescaledLog = Quiet[Exp[logits/temp]] /. Indeterminate -> 0.;
  total = Total[expRescaledLog, {-1}] /. 0. -> 1.;
  expRescaledLog/total
];

sample[probs_, temp_, tokenIds_ : {}] := Block[{weights, suppressLogits},
  suppressLogits = suppress[Log[probs], tokenIds];
  weights = Quiet@rescore[suppressLogits, temp];
  First@If[Max[weights] > 0,
    RandomSample[weights -> Range@Length@weights, 1],
    FirstPosition[#, Max[#]] &@Exp[suppressLogits] (* low temperature cases *)
  ]
];

sample[probs_, 0., tokenIds_ : {}] := First@FirstPosition[#, Max[#]] &@suppress[probs, tokenIds];

sample[probs_, 0, tokenIds_ : {}] := sample[probs, 0., tokenIds];
```

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/a799483a-6a27-440c-95b5-dcf17992812d"]](https://www.wolframcloud.com/obj/resourcesystem/images/c10/c10dad08-bd7e-4e24-960c-3b22cf1eb532/73d4d8859573cd6e.png)

Define the fallback logic, which decodes a chunk again at increasing temperatures when the output looks unreliable:

```wolfram
(* Define fallbackQ function *)
fallbackQ[noSpeech_, compressionRatio_, compressionRatioThresh_, avgLogProb_, logProbThresh_] := Which[
  And[noSpeech, avgLogProb < logProbThresh], False, (* silent *)
  avgLogProb < logProbThresh, True, (* average log probability is too low *)
  compressionRatio > compressionRatioThresh, True, (* too repetitive *)
  True, False
];

(* Define compressionRatio function *)
compressionRatio[tokens_, labels_] := With[
  {textBytes = StringToByteArray[
     StringJoin@FromCharacterCode[
       Flatten@ToCharacterCode[labels[[tokens]], "Unicode"], "UTF8"],
     "UTF-8"]},
  N@Length[textBytes]/StringLength[Compress[textBytes]]
];

(* Define decodeWithFallback function *)
Options[decodeWithFallback] = {"Language" -> Automatic, "IncludeTimestamps" -> False,
   "SuppressSpecialTokens" -> False, "LogProbabilityThreshold" -> -1,
   "CompressionRatioThreshold" -> 2.4, "Temperature" -> 0, MaxIterations -> 224,
   TargetDevice -> "CPU"};

decodeWithFallback[features_, textDecoder_, initStates_, outPorts_, labels_, prev_, opts : OptionsPattern[]] := Module[
  {tokens, noSpeech, avgLogProb, compressRatio, needsFallback = True, temperatures, i = 1},
  temperatures = Range[OptionValue["Temperature"], 1, 0.2];
  (* while needsFallback is True, iterate over increasing temperatures *)
  While[i <= Length[temperatures],
    {tokens, noSpeech, avgLogProb} = generate[features, prev, textDecoder, initStates, outPorts, labels,
      "Language" -> OptionValue["Language"],
      "IncludeTimestamps" -> OptionValue["IncludeTimestamps"],
      "SuppressSpecialTokens" -> OptionValue["SuppressSpecialTokens"],
      "Temperature" -> temperatures[[i]],
      MaxIterations -> OptionValue[MaxIterations],
      TargetDevice -> OptionValue[TargetDevice]];
    (* update iterator *)
    i++;
    (* update needsFallback *)
    compressRatio = compressionRatio[tokens, labels];
    needsFallback = fallbackQ[noSpeech, compressRatio, OptionValue["CompressionRatioThreshold"],
      avgLogProb, OptionValue["LogProbabilityThreshold"]];
    If[! needsFallback, Break[]];
  ];
  tokens (* return the generated tokens for this chunk *)
];
```

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/5a4255ca-73e7-4e4e-a656-f04d95d7425e"]](https://www.wolframcloud.com/obj/resourcesystem/images/c10/c10dad08-bd7e-4e24-960c-3b22cf1eb532/30448e2a6a0da227.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/747daee0-22d6-471f-9f6b-eb34154e56b8"]](https://www.wolframcloud.com/obj/resourcesystem/images/c10/c10dad08-bd7e-4e24-960c-3b22cf1eb532/59e57bf5aa5c569c.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/dc539faa-6b37-47ea-a2c4-c3f2f10a0e4e"]](https://www.wolframcloud.com/obj/resourcesystem/images/c10/c10dad08-bd7e-4e24-960c-3b22cf1eb532/6dadbad3a9c82c35.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/83ad2453-cfd9-4ec1-86a7-f28259b2d5d8"]](https://www.wolframcloud.com/obj/resourcesystem/images/c10/c10dad08-bd7e-4e24-960c-3b22cf1eb532/158b3adbf4205426.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/a87ff8bf-5079-4b85-907b-38899c88f18e"]](https://www.wolframcloud.com/obj/resourcesystem/images/c10/c10dad08-bd7e-4e24-960c-3b22cf1eb532/6cf8aec66669d06f.png)

Define the language detection function:

```wolfram
getLanguage[audio_] := Module[
  {encoded, labels, languages, sosCode, textDecoder, initStates, init, probs, language, aud},
  (* pad the audio to 30 seconds *)
  aud = AudioPad[audio, 30 - Min[30, QuantityMagnitude[Duration[audio]]]];
  encoded = NetModel["Whisper-V1 Multilingual Turbo"][aud, TargetDevice -> "GPU"];
  labels = NetModel["Whisper-V1 Multilingual Turbo", "Labels"];
  languages = <|50260 -> "|English|", 50261 -> "|Chinese|", 50262 -> "|German|", 50263 -> "|Spanish|", 50264 -> "|Russian|", 50265 -> "|Korean|", 50266 -> "|French|", 50267 -> "|Japanese|", 50268 -> "|Portuguese|", 50269 -> "|Turkish|", 50270 -> "|Polish|", 50271 -> "|Catalan|", 50272 -> "|Dutch|", 50273 -> "|Arabic|", 50274 -> "|Swedish|", 50275 -> "|Italian|", 50276 -> "|Indonesian|", 50277 -> "|Hindi|", 50278 -> "|Finnish|", 50279 -> "|Vietnamese|", 50280 -> "|Hebrew|", 50281 -> "|Ukrainian|", 50282 -> "|Greek|", 50283 -> "|Malay|", 50284 -> "|Czech|", 50285 -> "|Romanian|", 50286 -> "|Danish|", 50287 -> "|Hungarian|", 50288 -> "|Tamil|", 50289 -> "|Norwegian|", 50290 -> "|Thai|", 50291 -> "|Urdu|", 50292 -> "|Croatian|", 50293 -> "|Bulgarian|", 50294 -> "|Lithuanian|", 50295 -> "|Latin|", 50296 -> "|Maori|", 50297 -> "|Malayalam|", 50298 -> "|Welsh|", 50299 -> "|Slovak|", 50300 -> "|Telugu|", 50301 -> "|Persian|", 50302 -> "|Latvian|", 50303 -> "|Bengali|", 50304 -> "|Serbian|", 50305 -> "|Azerbaijani|", 50306 -> "|Slovenian|", 50307 -> "|Kannada|", 50308 -> "|Estonian|", 50309 -> "|Macedonian|", 50310 -> "|Breton|", 50311 -> "|Basque|", 50312 -> "|Icelandic|", 50313 -> "|Armenian|", 50314 -> "|Nepali|", 50315 -> "|Mongolian|", 50316 -> "|Bosnian|", 50317 -> "|Kazakh|", 50318 -> "|Albanian|", 50319 -> "|Swahili|",
    50320 -> "|Galician|", 50321 -> "|Marathi|", 50322 -> "|Punjabi|", 50323 -> "|Sinhala|", 50324 -> "|Khmer|", 50325 -> "|Shona|", 50326 -> "|Yoruba|", 50327 -> "|Somali|", 50328 -> "|Afrikaans|", 50329 -> "|Occitan|", 50330 -> "|Georgian|", 50331 -> "|Belarusian|", 50332 -> "|Tajik|", 50333 -> "|Sindhi|", 50334 -> "|Gujarati|", 50335 -> "|Amharic|", 50336 -> "|Yiddish|", 50337 -> "|Lao|", 50338 -> "|Uzbek|", 50339 -> "|Faroese|", 50340 -> "|Haitian creole|", 50341 -> "|Pashto|", 50342 -> "|Turkmen|", 50343 -> "|Nynorsk|", 50344 -> "|Maltese|",
    50345 -> "|Sanskrit|", 50346 -> "|Luxembourgish|", 50347 -> "|Myanmar|", 50348 -> "|Tibetan|", 50349 -> "|Tagalog|",
    50350 -> "|Malagasy|", 50351 -> "|Assamese|", 50352 -> "|Tatar|", 50353 -> "|Hawaiian|", 50354 -> "|Lingala|", 50355 -> "|Hausa|", 50356 -> "|Bashkir|", 50357 -> "|Javanese|", 50358 -> "|Sundanese|"|>;
  (* 50259 is the start-of-sequence code (sosCode in the decoder initialization on this page) *)
  sosCode = 50259;
  textDecoder = NetModel[{"Whisper-V1 Multilingual Turbo", "Part" -> "TextDecoder"}];
  initStates = AssociationMap[Function[x, {}],
    Select[Information[textDecoder, "InputPortNames"], StringStartsQ["State"]]];
  init = Join[
    <|
      "Index" -> 1,
      "Input1" -> sosCode,
      "Input2" -> encoded
    |>,
    initStates
  ];
  probs = textDecoder[init, NetPort[{"softmax", "Output"}], TargetDevice -> "GPU"];
  (* suppress every token that is not a language token, then take the most likely one *)
  language = sample[probs, 0, Complement[Range[Length[labels]], Keys[languages]]];
  labels[[language]]
];
```

Plot the audio waveform and the log-Mel spectrogram:

```wolfram
GraphicsColumn[{
  AudioPlot[audio, PlotRange -> {0, 5}, PlotLabel -> "Audio Waveform",
    FrameTicks -> None, ImageSize -> {300, 100}],
  MatrixPlot[logMelSpectrogram, PlotLabel -> "Log-mel Spectrogram",
    ColorFunction -> "Rainbow", FrameTicks -> None, ImageSize -> {300, 100},
    PlotRange -> {{0, 80}, {0, 500}}]
}, ImageSize -> Medium]
```

Build the initial decoder input:

```wolfram
index = 1;
sosCode = 50259;

init = Join[
  <|
    "Index" -> index,
    "Input1" -> sosCode,
    "Input2" -> audioFeatures
  |>,
  initStates
];
```

Run the decoding loop:

```wolfram
eosCode = 50258;
isGenerating = False;
tokens = {};

NestWhile[
  Function[
    If[SameQ[index, Length[prompt]], isGenerating = True];
    netOut = textDecoder[#];
    If[isGenerating, AppendTo[tokens, netOut["Output"]]];
    Join[
      KeyMap[StringReplace["OutState" -> "State"], netOut], (* include last states *)
      <|
        "Index" -> ++index, (* update index *)
        "Input1" -> If[isGenerating, netOut["Output"], promptCodes[[index]]], (* input last generated token *)
        "Input2" -> audioFeatures (* audio features for transcription *)
      |>
    ]
  ],
  init,
  #Input1 =!= eosCode &, (* stops when EndOfString token is generated *)
  1,
  100 (* max iterations *)
];
```