Very Deep Net for Super-Resolution

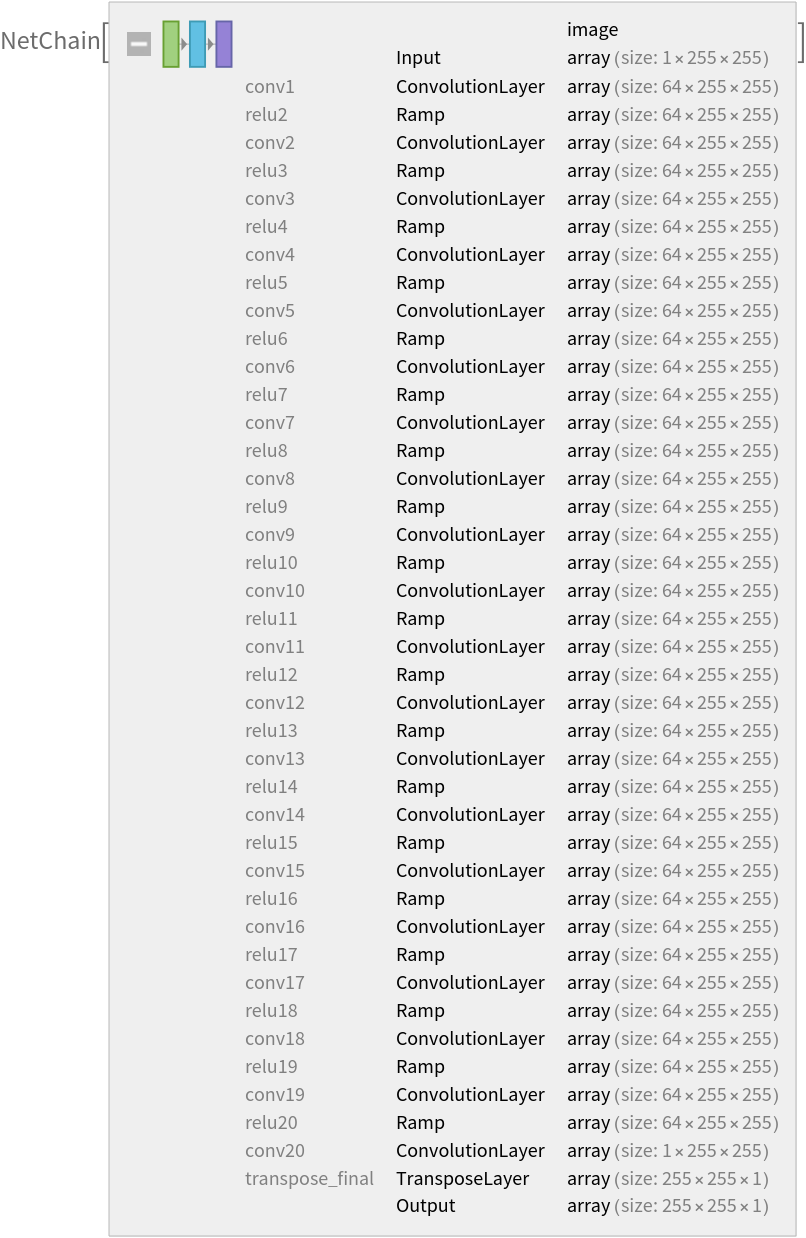

Released in 2016, this net uses a VGG-inspired architecture to perform image super-resolution. It takes a low-resolution image that has already been upscaled by interpolation and refines the details to produce a sharp upsampled result.

Number of layers: 40

Parameter count: 665,921

Trained size: 3 MB

Examples

Resource retrieval

Get the pre-trained net:
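A minimal sketch of this step, using the same resource name that the evaluation function on this page passes to `NetModel`. The variable name `net` is only illustrative, and the first call assumes network access so the net can be downloaded:

```wolfram
(* Retrieve the pre-trained net by its resource name *)
net = NetModel["Very Deep Net for Super-Resolution"]
```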

Evaluation function

Write an evaluation function to handle net resizing and color conversion:

Basic usage

Get an image:

Downscale the image by a factor of 3:
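One way to do this is with `ImageResize` and a `Scaled` size specification. The names `img` and `small` are illustrative; `img` is assumed to hold the example image from the previous step:

```wolfram
(* Shrink both dimensions to one third of the original size *)
small = ImageResize[img, Scaled[1/3]]
```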

Upscale the downscaled image using the net:
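Assuming the `netevaluate` function defined on this page has been evaluated and `img` holds the example image, the call might look like the following. The second argument is the scale factor, chosen here to undo the downscaling by 3; `small` and `upscaled` are illustrative names:

```wolfram
(* Downscale by 3, then let the net restore the original scale *)
small = ImageResize[img, Scaled[1/3]];
upscaled = netevaluate[small, 3]
```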

Compare the details with a naively upscaled version:
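A possible comparison, again assuming `img` and `netevaluate` as above. The naive version here uses plain cubic interpolation, which is the same interpolation `netevaluate` applies before the net refines the result, so any visible difference comes from the net:

```wolfram
small = ImageResize[img, Scaled[1/3]];
(* Naive upscaling: cubic interpolation only *)
naive = ImageResize[small, Scaled[3], Resampling -> "Cubic"];
(* Net-based upscaling *)
upscaled = netevaluate[small, 3];
(* Show both results side by side *)
{naive, upscaled}
```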

Evaluate the peak signal-to-noise ratio:
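The original cell is not reproduced here, so the following is a sketch rather than the page's own code. It uses the standard definition PSNR = 10 log10(MAX^2 / MSE) with MAX = 1, which fits the normalized pixel values that `ImageData` returns for byte and real images. The helper name `psnr` is hypothetical, and both images are assumed to have the same dimensions and channels:

```wolfram
(* Peak signal-to-noise ratio in dB for pixel values in the range 0 to 1 *)
psnr[a_Image, b_Image] := Module[{mse},
  (* mean squared error over all pixels and channels *)
  mse = Mean[Flatten[(ImageData[a] - ImageData[b])^2]];
  (* identical images give mse = 0 and a division by zero here *)
  10 Log10[1/mse]
]
```

If an upscaled image differs from the original by a pixel because of rounding during downscaling, one option is to resize it with `ImageResize[upscaled, ImageDimensions[img]]` before calling `psnr[img, upscaled]`.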

Net information

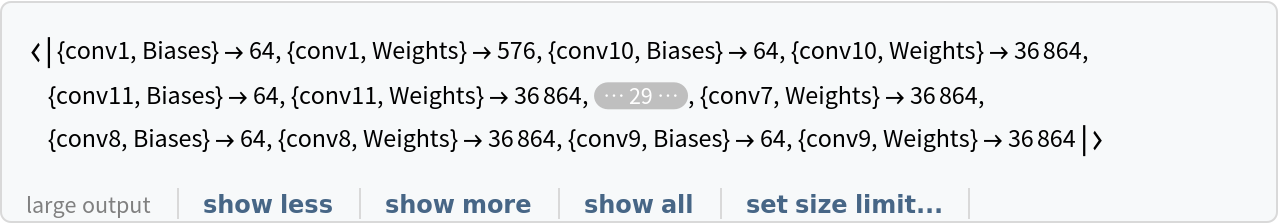

Inspect the number of parameters of all arrays in the net:
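This can be sketched with `NetInformation`, which is available in the Wolfram Language version listed under Requirements. The call below assumes the net has been downloaded or can be downloaded:

```wolfram
(* Element count of every weight and bias array, keyed by its position in the net *)
NetInformation[NetModel["Very Deep Net for Super-Resolution"], "ArraysElementCounts"]
```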

Obtain the total number of parameters:
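A sketch of the corresponding query, under the same assumptions as the other `NetInformation` calls:

```wolfram
(* Sum of the element counts over all arrays in the net *)
NetInformation[NetModel["Very Deep Net for Super-Resolution"], "ArraysTotalElementCount"]
```

The result should agree with the parameter count of 665,921 listed at the top of this page.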

Obtain the layer type counts:
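The layer breakdown can likely be obtained with the `"LayerTypeCounts"` property, shown here as a sketch:

```wolfram
(* Number of layers of each type in the net *)
NetInformation[NetModel["Very Deep Net for Super-Resolution"], "LayerTypeCounts"]
```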

Display the summary graphic:
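As a sketch, the `"SummaryGraphic"` property returns a graphical overview of the net's structure:

```wolfram
(* Graphical summary of the layers and their connections *)
NetInformation[NetModel["Very Deep Net for Super-Resolution"], "SummaryGraphic"]
```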

Export to MXNet

Export the net into a format that can be opened in MXNet:
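A sketch of the export step. The output location in `$TemporaryDirectory` and the name `jsonPath` are arbitrary choices for illustration; the file name `net.json` is chosen so that the parameter file is named `net.params`, as described in the next step:

```wolfram
(* Write the net architecture as an MXNet JSON file and keep the resulting path *)
jsonPath = Export[
  FileNameJoin[{$TemporaryDirectory, "net.json"}],
  NetModel["Very Deep Net for Super-Resolution"],
  "MXNet"
]
```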

Export also creates a net.params file containing parameters:
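Assuming the net was exported to `net.json` in `$TemporaryDirectory` (an illustrative location, not one prescribed by this page), the path of the parameter file can be built from the path of the JSON file:

```wolfram
(* The parameter file sits next to the exported JSON file *)
jsonPath = FileNameJoin[{$TemporaryDirectory, "net.json"}];
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}]
```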

Get the size of the parameter file:
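A minimal sketch, assuming the export step has already written `net.params` to `$TemporaryDirectory` (an illustrative location). `FileByteCount` fails if the file does not exist:

```wolfram
(* Size of the exported parameter file in bytes *)
paramPath = FileNameJoin[{$TemporaryDirectory, "net.params"}];
FileByteCount[paramPath]
```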

The size is similar to the byte count of the resource object:
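The byte count can probably be read from the resource object as a property. This is a sketch that assumes the `"ByteCount"` property is available for this resource:

```wolfram
(* Byte count reported by the resource object for this net *)
ResourceObject["Very Deep Net for Super-Resolution"]["ByteCount"]
```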

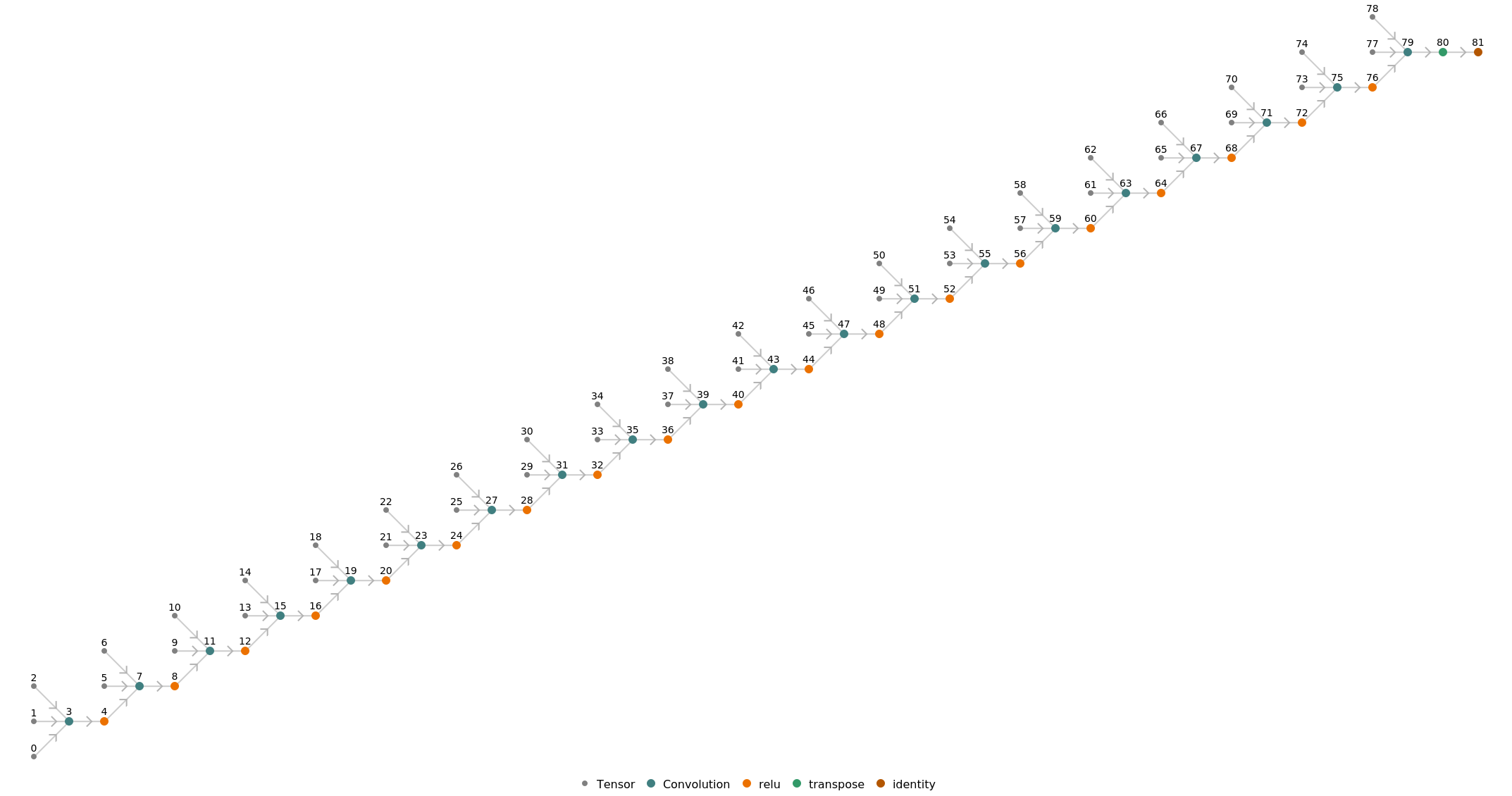

Represent the MXNet net as a graph:
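A sketch of the import step, assuming the net was previously exported to `net.json` in `$TemporaryDirectory` (an illustrative location):

```wolfram
(* Read the exported JSON file back and plot its computation graph *)
Import[FileNameJoin[{$TemporaryDirectory, "net.json"}], {"MXNet", "NodeGraphPlot"}]
```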

Requirements

Wolfram Language 11.3 (March 2018) or above


Reference

- J. Kim, J. Kwon Lee and K. Mu Lee, "Accurate Image Super-Resolution Using Very Deep Convolutional Networks," Proc. of IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

- Available from: https://github.com/huangzehao/caffe-vdsr

- Rights: MIT License

Evaluation function definition (for the "Evaluation function" step above):

```wolfram
netevaluate[img_, imgScale_] := Block[
  {net, interpolated, ycbcr, channels, resizedNet, diff, rgb},
  net = NetModel["Very Deep Net for Super-Resolution"];
  (* upscale to the final size *)
  interpolated = ImageResize[img, Scaled[imgScale], Resampling -> "Cubic"];
  (* convert RGB to YCbCr *)
  ycbcr = ImageApply[
    {{0.257, 0.504, 0.098}, {-0.148, -0.291, 0.439}, {0.439, -0.368, -0.071}}.# + {0.063, 0.502, 0.502} &,
    interpolated];
  channels = ColorSeparate[ycbcr];
  (* resize the net input to the dimensions of the interpolated image *)
  resizedNet = NetReplacePart[net, "Input" -> NetEncoder[{"Image", ImageDimensions@interpolated, ColorSpace -> "Grayscale"}]];
  (* apply the net to the Y channel and add its output back to that channel *)
  diff = Image@resizedNet[channels[[1]]];
  ycbcr = ColorCombine[{channels[[1]] + diff, channels[[2]], channels[[3]]}];
  (* convert YCbCr back to RGB *)
  rgb = ImageApply[
    {{1.164, 0., 1.596}, {1.164, -0.392, -0.813}, {1.164, 2.017, 0.}}.# + {-0.874, 0.532, -1.086} &,
    ycbcr];
  rgb
]
```

Example input (for the "Get an image" step above):

```wolfram
(* Evaluate this cell to get the example input *)
CloudGet["https://www.wolframcloud.com/obj/428e8456-38ea-4841-977d-e0422f89dde5"]
```