Vanilla CNN for Facial Landmark Regression

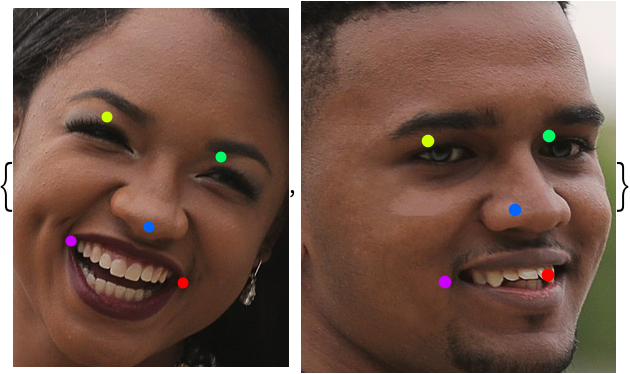

Released in 2015, this net is a regressor that locates five facial landmarks in a facial image: the two eyes, the nose and the two mouth corners. The net outputs the positions of {EyeLeft, EyeRight, Nose, MouthLeft, MouthRight}. The coordinates are rescaled to the input image size, so the bottom-left corner is {0, 0} and the top-right corner is {1, 1}.
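Because the coordinates are normalized, you can convert them back to pixel positions by scaling each {x, y} pair by the image width and height. The sketch below uses a hypothetical helper name, toImageCoordinates. It relies on two facts: ImageDimensions returns {width, height}, and Wolfram Language image coordinates also place the origin at the bottom-left corner.

```wolfram
(* Hypothetical helper: scale normalized {x, y} landmarks by the image's {width, height} *)
toImageCoordinates[landmarks_, img_Image] := Map[# * ImageDimensions[img] &, landmarks]

(* A blank 200 x 100 image and one made-up normalized point *)
toImageCoordinates[{{0.5, 0.25}}, ConstantImage[0, {200, 100}]]
(* {{100., 25.}} *)
```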

Number of layers: 24

Parameter count: 111,050

Trained size: 485 KB

Examples

Resource retrieval

Get the pre-trained net:
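In the Wolfram Language, you can retrieve the net by its repository title. This minimal sketch assumes network access on first use, because NetModel downloads the trained net once and then caches it locally:

```wolfram
(* Downloads the trained net on first use, then loads it from the local cache *)
NetModel["Vanilla CNN for Facial Landmark Regression"]
```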

Basic usage

Get a facial image and the net:

Get the locations of the eyes, nose and mouth corners:

Show the prediction:
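The three basic-usage steps can be sketched as follows. The file name face.jpg is a hypothetical placeholder for any image showing a single face. The output should be five {x, y} pairs in the normalized coordinates described at the top of this page, which is why HighlightImage is given DataRange -> {{0, 1}, {0, 1}}.

```wolfram
(* Hypothetical input: any image showing a single face *)
img = Import["face.jpg"];

(* Load the pre-trained regressor *)
net = NetModel["Vanilla CNN for Facial Landmark Regression"];

(* Five {x, y} pairs (eyes, nose, mouth corners) in [0, 1] coordinates *)
landmarks = net[img]

(* Overlay the points, mapping the unit square onto the full image *)
HighlightImage[img, {PointSize[0.04], landmarks}, DataRange -> {{0, 1}, {0, 1}}]
```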

Preprocessing

The net must be evaluated on facial crops only. Get an image with multiple faces:

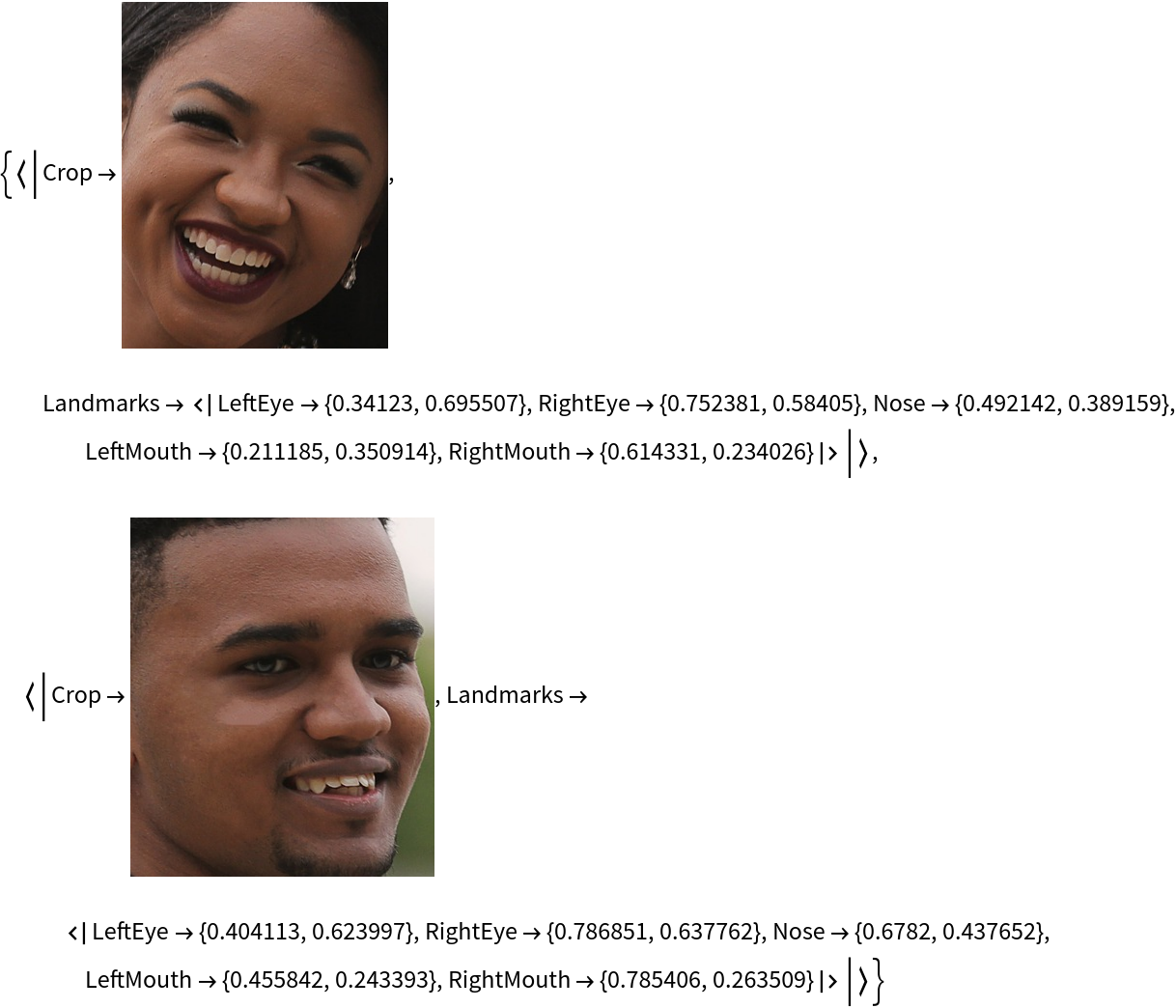

Write an evaluation function that crops the input image around faces and returns the crops and facial landmarks:

Evaluate the function on the image:
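This sketch assumes that findFacialLandmarks has already been evaluated; its definition is among the code cells at the end of this page. That function refers to net as a global symbol, so net is bound first. The file group.jpg is a hypothetical placeholder for an image with several faces.

```wolfram
(* The regressor that findFacialLandmarks uses as a global symbol *)
net = NetModel["Vanilla CNN for Facial Landmark Regression"];

(* Hypothetical input containing several faces *)
group = Import["group.jpg"];

(* One association per detected face, with "Crop" and "Landmarks" keys *)
results = findFacialLandmarks[group];
Length[results]  (* number of faces found by FindFaces *)
```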

Write a simple function to show the landmarks:

Evaluate the function on the previous output:
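This sketch chains the two functions and assumes that findFacialLandmarks and showLandmarks have been evaluated; both are defined among the code cells at the end of this page. showLandmarks reads a global colorCodes association that the source never defines, so the palette below is an assumption. Its keys follow the same order as the "Landmarks" association, so Riffle pairs each color with the matching point. The file group.jpg is a hypothetical placeholder.

```wolfram
(* The regressor that findFacialLandmarks uses as a global symbol *)
net = NetModel["Vanilla CNN for Facial Landmark Regression"];

(* Assumed palette: one color per landmark, same key order as "Landmarks" *)
colorCodes = <|"LeftEye" -> Red, "RightEye" -> Green, "Nose" -> Yellow,
   "LeftMouth" -> Magenta, "RightMouth" -> Cyan|>;

(* One highlighted crop per detected face *)
highlighted = showLandmarks[findFacialLandmarks[Import["group.jpg"]]]
```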

Net information

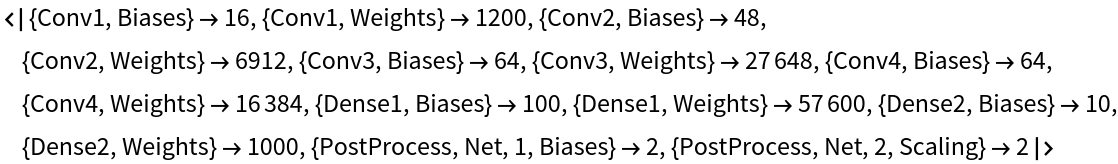

Inspect the number of parameters of all arrays in the net:
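A sketch using NetInformation, assuming the property names available in Wolfram Language 11.2:

```wolfram
(* Element count of every learned array, keyed by its position in the net *)
NetInformation[NetModel["Vanilla CNN for Facial Landmark Regression"], "ArraysElementCounts"]
```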

Obtain the total number of parameters:
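Summing over all arrays gives the total. As a sketch with the same NetInformation property naming as in 11.2:

```wolfram
(* Total number of learned parameters across all arrays *)
NetInformation[NetModel["Vanilla CNN for Facial Landmark Regression"], "ArraysTotalElementCount"]
```

The result should agree with the parameter count of 111,050 listed at the top of this page.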

Obtain the layer type counts:
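A sketch, again assuming the 11.2 NetInformation property names:

```wolfram
(* How many layers of each type (for example ConvolutionLayer) the net contains *)
NetInformation[NetModel["Vanilla CNN for Facial Landmark Regression"], "LayerTypeCounts"]
```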

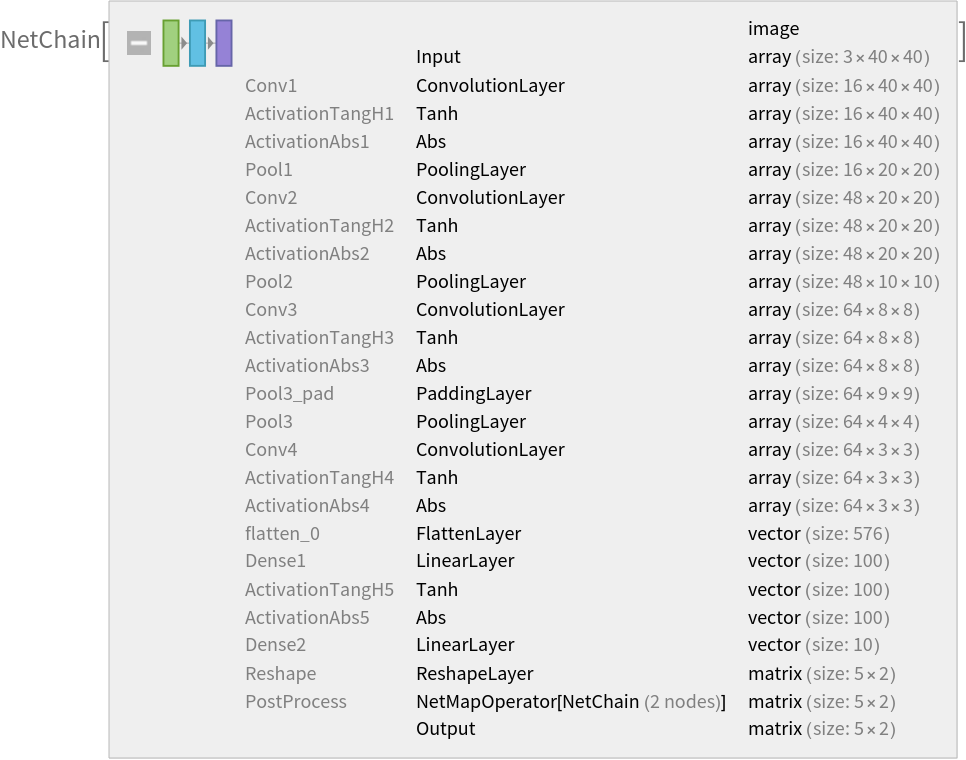

Display the summary graphic:
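A sketch, assuming the "SummaryGraphic" property of NetInformation in 11.2:

```wolfram
(* Graphical overview of the layers and their connections *)
NetInformation[NetModel["Vanilla CNN for Facial Landmark Regression"], "SummaryGraphic"]
```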

Export to MXNet

Export the net into a format that can be opened in MXNet:

Export also creates a net.params file containing parameters:

Get the size of the parameter file:
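The export and the parameter-file check can be sketched as follows. The target directory $TemporaryDirectory and the file name net.json are assumptions of this sketch. Export returns the path of the JSON file that describes the network structure, and the parameters go to a net.params file in the same directory.

```wolfram
(* Write the structure (JSON) in MXNet format; Export returns the JSON path *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}],
   NetModel["Vanilla CNN for Facial Landmark Regression"], "MXNet"];

(* The parameters are written to net.params next to the JSON file *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}];

(* Size of the parameter file in bytes *)
FileByteCount[paramPath]
```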

The size is similar to the byte count of the resource object:
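A sketch that looks up the resource by the same repository title used with NetModel:

```wolfram
(* Byte count reported by the repository resource for this net *)
ResourceObject["Vanilla CNN for Facial Landmark Regression"]["ByteCount"]
```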

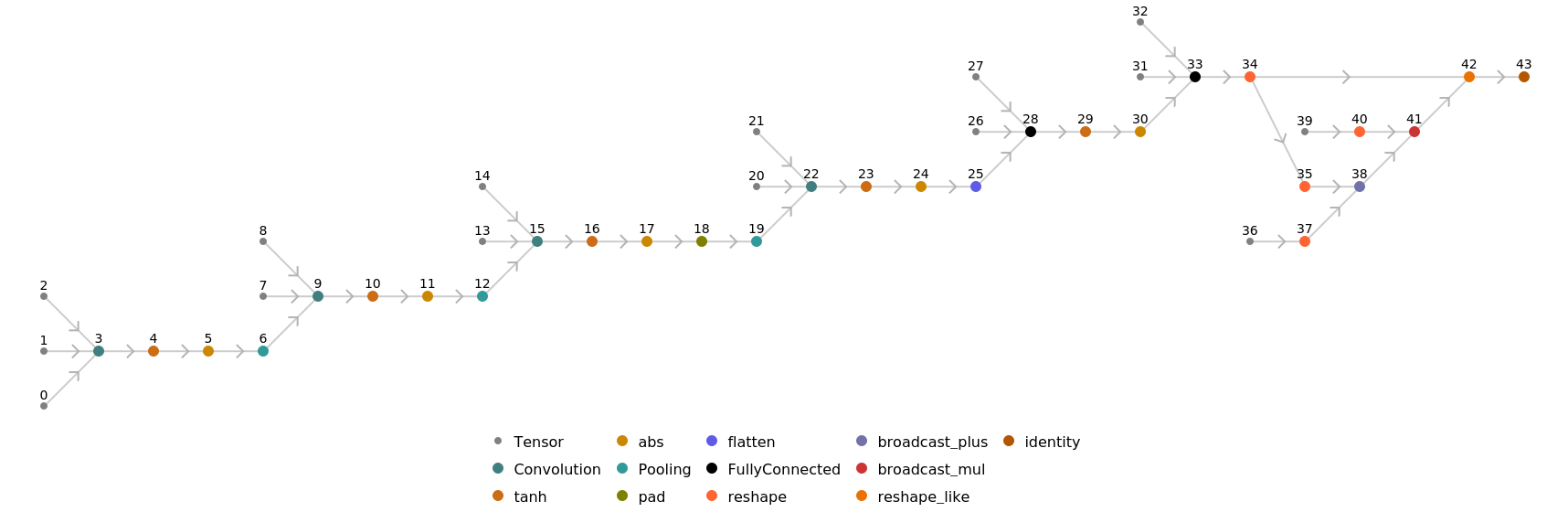

Represent the MXNet net as a graph:
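A sketch that plots the exported structure. It assumes the JSON file lives at net.json in $TemporaryDirectory (a hypothetical location) and exports the net there first if the file is missing, so the cell also works on its own.

```wolfram
jsonPath = FileNameJoin[{$TemporaryDirectory, "net.json"}];

(* Export only if the JSON file is not already there *)
If[! FileExistsQ[jsonPath],
  Export[jsonPath, NetModel["Vanilla CNN for Facial Landmark Regression"], "MXNet"]];

(* Plot the node graph stored in the MXNet JSON file *)
Import[jsonPath, {"MXNet", "NodeGraphPlot"}]
```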

Requirements

Wolfram Language 11.2 (September 2017) or above


Reference

- Y. Wu, T. Hassner, K. Kim, G. Medioni, P. Natarajan, "Facial Landmark Detection with Tweaked Convolutional Neural Networks," arXiv:1511.04031 (2015)
- Available from: https://github.com/ishay2b/VanillaCNN
- Rights: Unrestricted use


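Code for the evaluation function described under Preprocessing:

```wolfram
(* The landmark regressor; findFacialLandmarks refers to it as a global symbol *)
net = NetModel["Vanilla CNN for Facial Landmark Regression"];

(* Crop every face found by FindFaces, run the net on all crops at once,
   and pair each crop with its five named landmarks *)
findFacialLandmarks[img_Image] := Module[{crops, points},
  crops = ImageTrim[img, #] & /@ FindFaces[img];
  points = If[Length[crops] > 0, net[crops], {}];
  MapThread[<|"Crop" -> #1, "Landmarks" -> AssociationThread[
       {"LeftEye", "RightEye", "Nose", "LeftMouth", "RightMouth"}, #2]|> &,
   {crops, points}]
  ]
```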

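Code for the display function described under Preprocessing:

```wolfram
(* Assumed palette: colorCodes is not defined in the source; keys follow the "Landmarks" order *)
colorCodes = <|"LeftEye" -> Red, "RightEye" -> Green, "Nose" -> Yellow, "LeftMouth" -> Magenta, "RightMouth" -> Cyan|>;
(* Draw each face's landmarks on its crop; Riffle interleaves colors and points *)
showLandmarks[data_] := Table[HighlightImage[face["Crop"], {PointSize[0.04], Riffle[Values@colorCodes, Values@face["Landmarks"]]}, DataRange -> {{0, 1}, {0, 1}}], {face, data}]
```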