U2-Net Trained on DUTS-TR Data

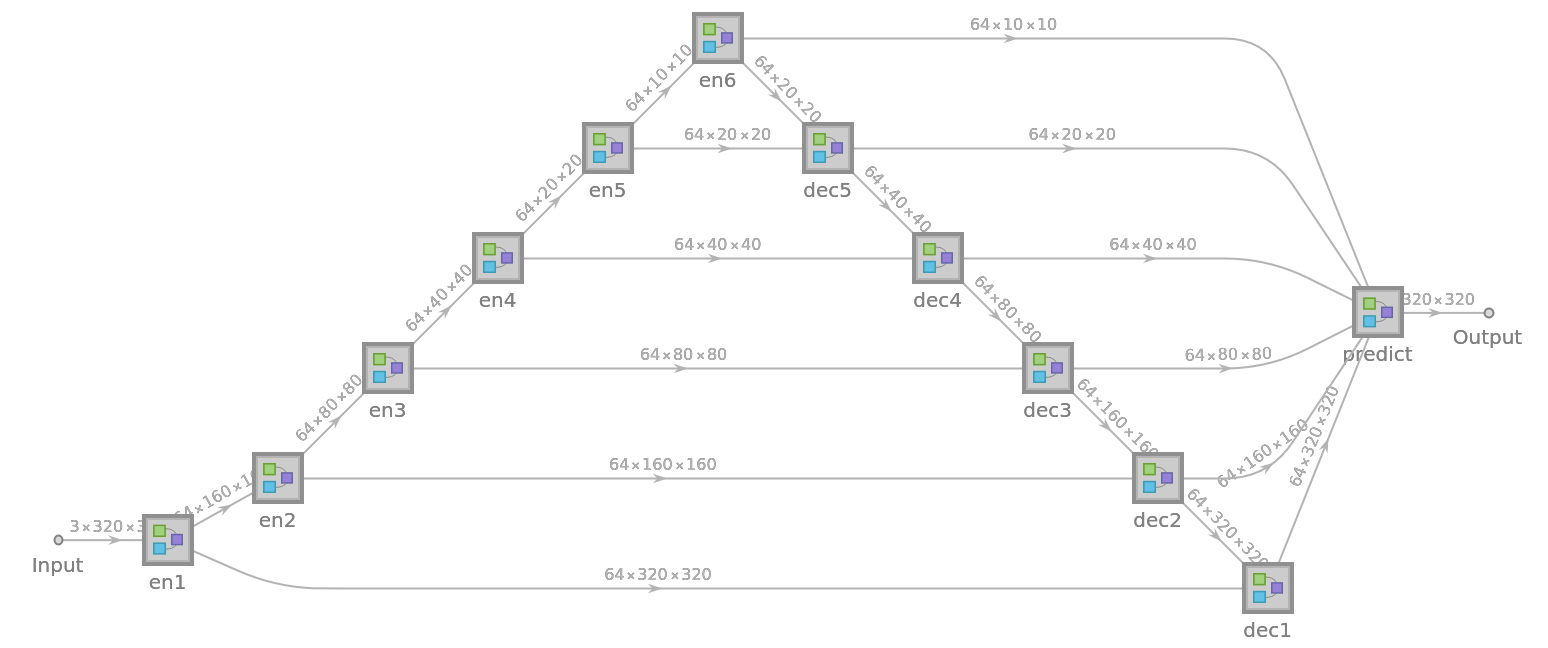

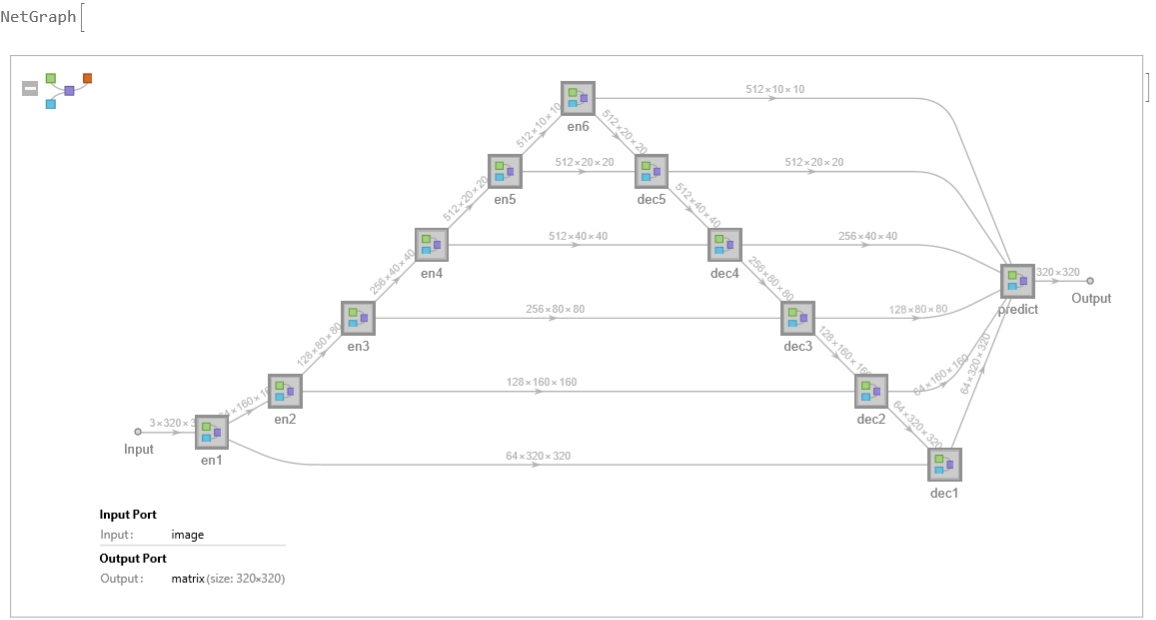

The architecture of this model features a two-level nesting of U-structures, where each node of the top-level U-Net is itself a U-Net. Thanks to the mixture of receptive fields of different sizes in the proposed ReSidual U-blocks (RSU), this design captures more contextual information at different scales. Because of the pooling operations used in the RSU blocks, it also increases the depth of the whole architecture without significantly increasing the computational cost. This makes it possible to train a deep network from scratch, without relying on backbones pre-trained for image classification.
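Why pooling keeps the extra depth cheap can be seen with a deliberately simplified cost model. This is an assumption for illustration, not the paper's own accounting: suppose each level of an RSU block runs one convolution with the same kernel and channel counts, and each 2x2 pooling step halves height and width, so a convolution one level down touches a quarter as many positions. Summing over levels gives a geometric series that stays below 4/3 of a single full-resolution convolution, no matter how many levels are stacked. The function names are hypothetical.

```python
def pooled_cost(levels: int) -> float:
    """Cost of `levels` convolutions, one per resolution level, relative to a
    single convolution at full resolution.

    Simplified model (assumption): identical kernels and channel counts at every
    level; each pooling step halves height and width, so level k costs
    (1/4) ** k of the full-resolution convolution.
    """
    return sum(0.25 ** k for k in range(levels))


def unpooled_cost(levels: int) -> float:
    """Cost of the same number of convolutions if all ran at full resolution."""
    return float(levels)


if __name__ == "__main__":
    # Depth grows linearly, but the pooled cost stays bounded by 4/3.
    for levels in (1, 2, 4, 7):
        print(levels, unpooled_cost(levels), round(pooled_cost(levels), 4))
```

Real RSU blocks also have a decoder path, dilated convolutions and differing channel widths, so the actual ratio differs; the sketch only shows the geometric-series effect of working at progressively lower resolutions.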

Examples

Resource retrieval

Get the pre-trained net:

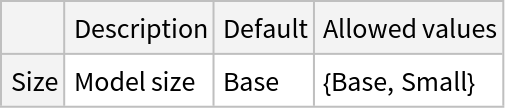

NetModel parameters

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:

Pick a non-default net by specifying the parameters:

Pick a non-default uninitialized net:

Evaluation function

Define an evaluation function to resize the net output to the input image dimensions and round it to obtain the segmentation mask:
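The Wolfram Language netevaluate definition resamples the net's probability map to the image size with bilinear interpolation and rounds every value to 0 or 1. The Python sketch below mirrors that logic on plain nested lists. It assumes corner-aligned bilinear interpolation, whose edge handling may differ from ArrayResample's; the names bilinear_resize and evaluate_mask are hypothetical. Python's built-in round, like Wolfram's Round, sends exact ties to the nearest even integer, so a value of exactly 0.5 becomes 0.

```python
def bilinear_resize(grid, out_h, out_w):
    """Resize a 2D list of floats to out_h x out_w (corner-aligned bilinear)."""
    in_h, in_w = len(grid), len(grid[0])
    out = []
    for i in range(out_h):
        # Map the output row to a fractional input row; corners line up.
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            # Interpolate along x on the two neighbouring rows, then along y.
            top = grid[y0][x0] * (1 - dx) + grid[y0][x1] * dx
            bottom = grid[y1][x0] * (1 - dx) + grid[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


def evaluate_mask(prob_map, image_width, image_height):
    """Hypothetical stand-in for netevaluate: resize, then round to a 0/1 mask."""
    # Arrays are indexed (rows, columns) = (height, width), which is why the
    # Wolfram code applies Reverse to ImageDimensions, which gives {width, height}.
    resized = bilinear_resize(prob_map, image_height, image_width)
    return [[round(v) for v in row] for row in resized]
```

For example, evaluate_mask([[0.0, 1.0], [0.0, 1.0]], 3, 2) returns a 2-row, 3-column mask in which the interpolated middle column (exactly 0.5) rounds to 0.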

Basic usage

Obtain the segmentation mask for the most salient object in the image:

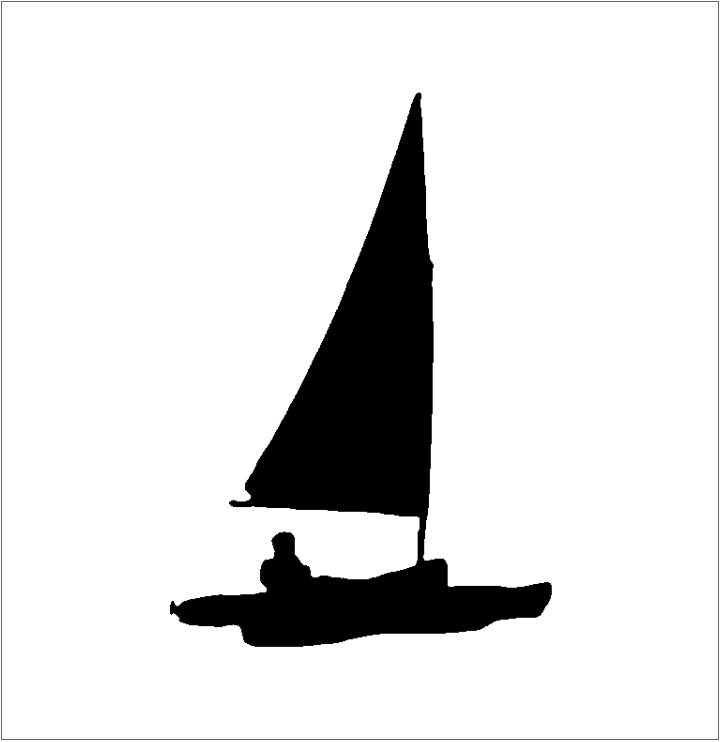

Visualize the mask:

The mask is a matrix of 0s and 1s whose size matches the dimensions of the input image:

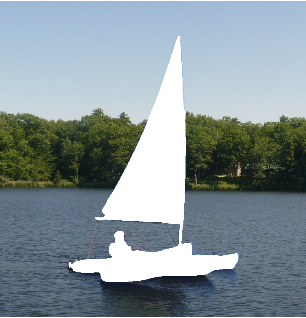

Overlay the mask on the input image:

Convert the mask to an image:

Crop the object from the image:

Delete the object from the image:

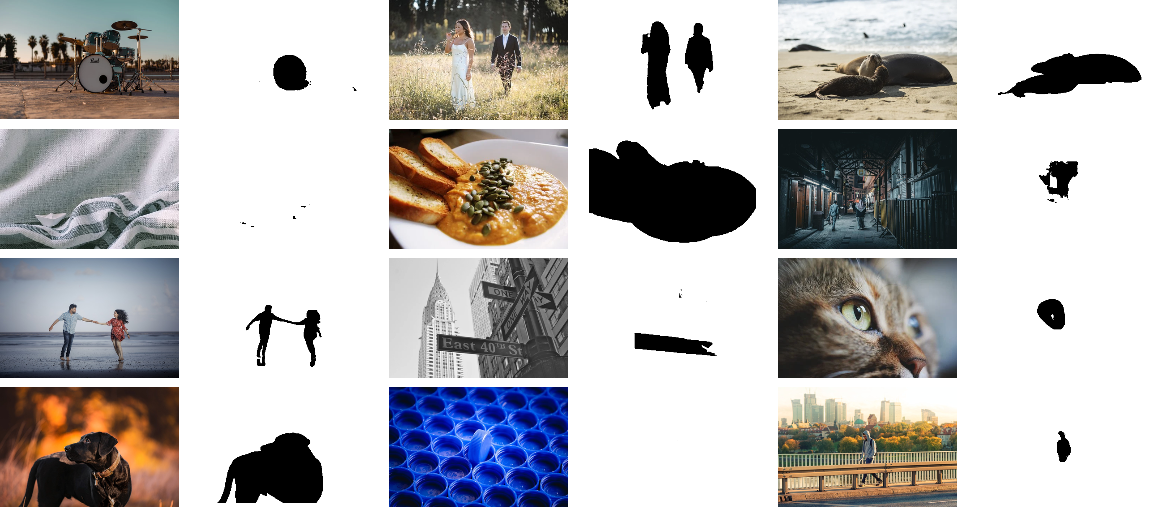
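Overlaying, cropping and deleting are all pixel-wise uses of the 0/1 mask. The Python sketch below shows one way to express them, assuming an image stored as a list of rows of RGB tuples and a mask with the same height and width. The function names, the highlight color, the 50% opacity and the white fill are hypothetical choices; the page itself performs these steps with Wolfram Language image functions that are not reproduced here.

```python
def overlay(image, mask, color=(255, 0, 0), opacity=0.5):
    """Blend `color` into the pixels where mask == 1."""
    out = []
    for img_row, mask_row in zip(image, mask):
        row = []
        for px, m in zip(img_row, mask_row):
            if m == 1:
                px = tuple(round((1 - opacity) * c + opacity * h)
                           for c, h in zip(px, color))
            row.append(px)
        out.append(row)
    return out


def delete_object(image, mask, fill=(255, 255, 255)):
    """Replace the object's pixels (mask == 1) with `fill`."""
    return [[fill if m == 1 else px for px, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


def crop_object(image, mask, fill=(255, 255, 255)):
    """Blank the background, then trim to the mask's bounding box."""
    rows = [i for i, mask_row in enumerate(mask) if any(mask_row)]
    if not rows:
        return []  # no salient pixels: nothing to crop
    cols = [j for j in range(len(mask[0])) if any(r[j] for r in mask)]
    top, bottom, left, right = rows[0], rows[-1], cols[0], cols[-1]
    return [[px if m == 1 else fill
             for px, m in zip(image[i][left:right + 1], mask[i][left:right + 1])]
            for i in range(top, bottom + 1)]
```

Cropping here keeps the bounding box of all mask pixels; if the mask contains several disconnected regions, they all end up in the same crop.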

Results showcase

Take a list of images and obtain their segmentation masks:

Inspect the results. Some images are more challenging than others, and salient object detection is inherently ambiguous, so the results may not always match expectations:

Net information

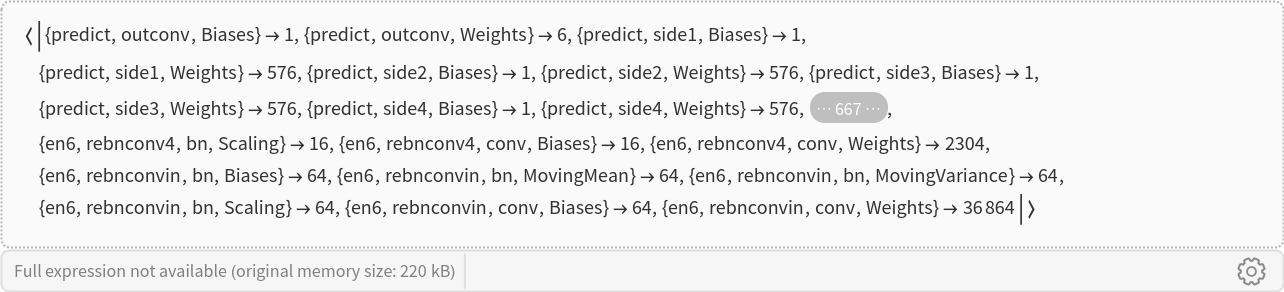

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:
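These three quantities are simple bookkeeping over the net's arrays: an array's parameter count is the product of its dimensions, the total is the sum of those counts, and the layer type counts are a tally of layer kinds. The Python sketch below uses a small hypothetical net description; the layer names, types and shapes are made up for illustration and are not U2-Net's. It counts every array element, including batch-normalization running statistics that are not updated by gradient descent.

```python
from collections import Counter
from math import prod

# Hypothetical toy net: (layer name, layer type, {array name: shape}).
toy_net = [
    ("conv1", "Convolution", {"Weights": (64, 3, 3, 3), "Biases": (64,)}),
    ("bn1", "BatchNormalization", {"Scaling": (64,), "Biases": (64,),
                                   "MovingMean": (64,), "MovingVariance": (64,)}),
    ("relu1", "Ramp", {}),
    ("conv2", "Convolution", {"Weights": (64, 64, 3, 3), "Biases": (64,)}),
]


def array_element_counts(net):
    """Number of elements in each array, keyed by (layer, array name)."""
    return {(layer, name): prod(shape)
            for layer, _, arrays in net
            for name, shape in arrays.items()}


def total_parameters(net):
    """Sum of all array element counts."""
    return sum(array_element_counts(net).values())


def layer_type_counts(net):
    """How many layers of each type the net contains."""
    return Counter(kind for _, kind, _ in net)
```

For this toy net, conv1 contributes 64 x 3 x 3 x 3 = 1728 weights plus 64 biases.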

Display the summary graphic:


Reference

![netevaluate[net_, img_, device_ : "CPU"] := Round@ArrayResample[net[img, TargetDevice -> device], Reverse@ImageDimensions[img], Resampling -> "Bilinear"];](https://www.wolframcloud.com/obj/resourcesystem/images/613/6135a8ae-7479-4921-a36f-80c2a8ea128d/51320ad4f4257e2d.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/a0233609-fe9a-4ad6-bd45-9785becb66ce"]](https://www.wolframcloud.com/obj/resourcesystem/images/613/6135a8ae-7479-4921-a36f-80c2a8ea128d/77ddccf6c0d2e6b7.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/4d4650ad-fcc8-4d5f-9b93-c60c306ce150"]](https://www.wolframcloud.com/obj/resourcesystem/images/613/6135a8ae-7479-4921-a36f-80c2a8ea128d/003fb75202947bce.png)

![results = Transpose@{imgs, Map[ArrayPlot[netevaluate[NetModel["U2-Net Trained on DUTS-TR Data"], #], Frame -> False] &, imgs]};](https://www.wolframcloud.com/obj/resourcesystem/images/613/6135a8ae-7479-4921-a36f-80c2a8ea128d/2a9407d5270536f7.png)