Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data

Released in 2016, this neural net predicts a relative depth map from a single image. It was trained with a novel technique based on sparse ordinal annotations: each training example needs only a pair of points annotated with their relative distance to the camera. After training, the net can reconstruct a full depth map. Its architecture is based on the "hourglass" design.
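Training from ordinal pairs can be made concrete with a pairwise ranking loss. The formulation below is a sketch of one common choice, offered as an assumption rather than as the loss confirmed by this page. The symbols $z$ (predicted depth map), $(i_k, j_k)$ (an annotated pair of pixels) and $r_k$ (its ordinal label) are introduced here only for illustration.

```latex
\[
L(z) = \sum_{k=1}^{K} \psi_k(z), \qquad
\psi_k(z) =
\begin{cases}
\log\bigl(1 + \exp(-z_{i_k} + z_{j_k})\bigr), & r_k = +1,\\[2pt]
\log\bigl(1 + \exp(z_{i_k} - z_{j_k})\bigr), & r_k = -1,\\[2pt]
\bigl(z_{i_k} - z_{j_k}\bigr)^2, & r_k = 0.
\end{cases}
\]
```

In this sketch, $r_k = +1$ means the annotation ranks the predicted value at $i_k$ above the one at $j_k$, $r_k = -1$ means the reverse, and $r_k = 0$ means the two points are judged equally distant. Only differences between predicted values enter the loss, so the output is constrained in its ordering rather than in absolute scale, which is consistent with a relative depth map.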

Number of layers: 501

Parameter count: 5,385,185

Trained size: 23 MB

Examples

Resource retrieval

Get the pre-trained net:
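A minimal sketch of the retrieval step, using the model name that appears in this page's input cells. The variable name `net` is an assumption made for illustration, and the first evaluation needs network access to download the model.

```wolfram
(* Download (on first use) and load the pre-trained net from the Wolfram Neural Net Repository *)
net = NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"]
```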

Basic usage

Obtain the depth map of an image:

Show the depth map:
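The two steps above might look like the following sketch. The test image from `ExampleData` is an assumption standing in for the page's own example input, and the names `net`, `img`, `depthMap` and `depth` are illustrative. The exact output shape of the net is not stated here, so the code drops a leading dimension if one is present.

```wolfram
net = NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"];

(* Assumption: a built-in test image replaces the page's example input *)
img = ExampleData[{"TestImage", "House"}];

(* Apply the net directly to the image *)
depthMap = net[img];

(* Assumption: if the output is a rank-3 array, keep its first slice as the matrix *)
depth = If[ArrayDepth[depthMap] == 3, First[depthMap], depthMap];

(* Inspect the size of the map, then show it with a color scale *)
Dimensions[depth]
ArrayPlot[depth, ColorFunction -> "Rainbow", Frame -> False]
```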

Visualize a 3D model

Get an image:

Obtain the depth map:

Visualize a 3D model using the depth map:
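One way to sketch the 3D visualization is to treat the depth map as a height field and drape the image over it as a texture. The test image, the variable names and the plot options are assumptions, as is the sign convention: the code negates the output on the assumption that larger values mean farther from the camera.

```wolfram
net = NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"];

(* Assumption: a built-in test image replaces the page's example input *)
img = ExampleData[{"TestImage", "House"}];
{width, height} = ImageDimensions[img];

depthMap = net[img];
depth = If[ArrayDepth[depthMap] == 3, First[depthMap], depthMap];

(* Reverse the rows so the top of the image ends up at the top of the plot;
   negate so that farther points sit lower (flip the sign if the convention differs) *)
ListPlot3D[-Reverse[depth],
 PlotStyle -> Texture[img],
 Mesh -> None,
 Lighting -> "Neutral",
 Axes -> False,
 Boxed -> False,
 BoxRatios -> {1, height/width, 1/3}]
```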

Adapt to any size

The recommended way to deal with image sizes and aspect ratios is to resample the depth map after the net evaluation. Get an image:

Obtain the dimensions of the image:

Obtain the depth map:

Resample the depth map and visualize it:
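The resampling workflow described in this section can be sketched as follows. The test image and variable names are assumptions. Note that `ImageDimensions` returns `{width, height}` while `ArrayResample` expects `{rows, columns}`, so the order is swapped.

```wolfram
net = NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"];

(* Assumption: a built-in test image replaces the page's example input *)
img = ExampleData[{"TestImage", "House"}];

(* Image size as {width, height} *)
{width, height} = ImageDimensions[img];

depthMap = net[img];
depth = If[ArrayDepth[depthMap] == 3, First[depthMap], depthMap];

(* Resample the net output to the original image size: {rows, columns} = {height, width} *)
resampled = ArrayResample[depth, {height, width}];

Dimensions[resampled]
ArrayPlot[resampled, ColorFunction -> "Rainbow", Frame -> False]
```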

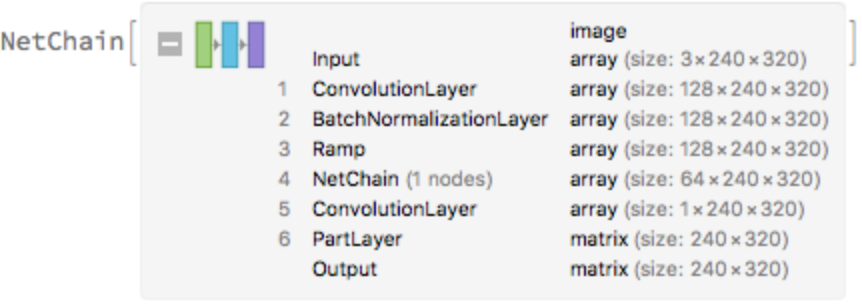

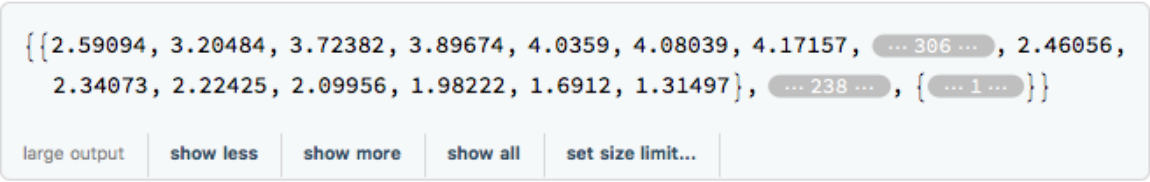

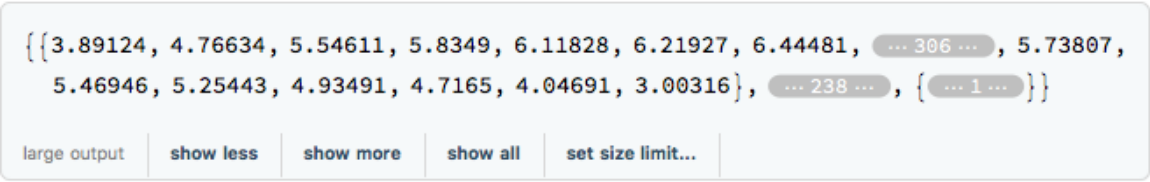

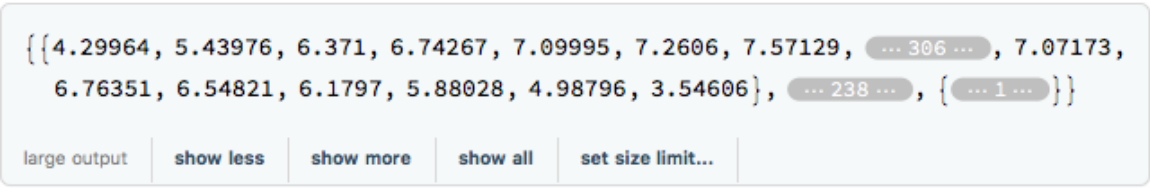

Net information

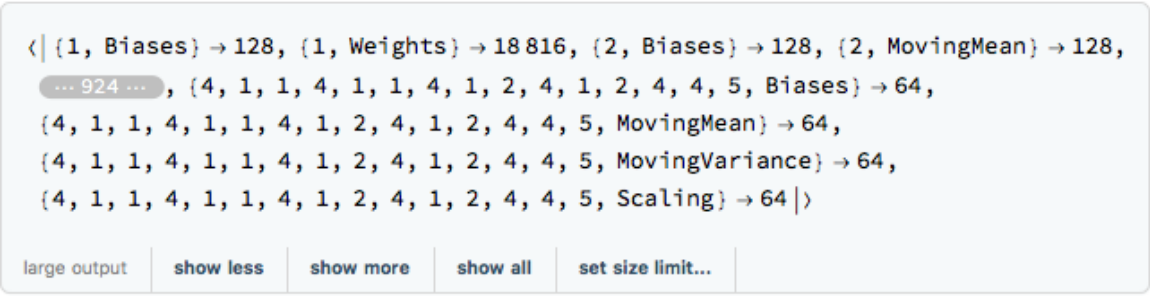

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

Display the summary graphic:
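The four queries above correspond to the `NetInformation` properties named in this page's input cells. The sketch below collects them in one place; only the variable name `net` is introduced for illustration.

```wolfram
net = NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"];

(* Number of parameters in each array of the net *)
NetInformation[net, "ArraysElementCounts"]

(* Total number of parameters *)
NetInformation[net, "ArraysTotalElementCount"]

(* How many layers of each type the net contains *)
NetInformation[net, "LayerTypeCounts"]

(* Summary graphic of the net structure *)
NetInformation[net, "SummaryGraphic"]
```

The total element count can be compared with the parameter count listed near the top of this page.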

Export to MXNet

Export the net into a format that can be opened in MXNet:

Export also creates a net.params file containing parameters:

Get the size of the parameter file:

The size is similar to the byte count of the resource object:
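The export steps might be sketched as below, assuming a Wolfram Language version in which the "MXNet" export format is available. The output location in `$TemporaryDirectory` and the variable names are hypothetical. The "ByteCount" property of the resource object is used on the assumption that it is available for this resource.

```wolfram
net = NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"];

(* Hypothetical output path; Export writes the network definition as JSON *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"];

(* The parameters are written next to it as net.params *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}];

(* Size of the parameter file in bytes *)
FileByteCount[paramPath]

(* Byte count reported by the resource object, for comparison *)
ResourceObject["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"]["ByteCount"]
```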

Requirements

Wolfram Language 11.2 (September 2017) or above


![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/b4c60dd3-7596-4c9b-8483-7aaca02f10d4"]](https://www.wolframcloud.com/obj/resourcesystem/images/459/45974932-854c-4af4-86aa-faa1bff7e7ec/62f41150360634f7.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/267a0a0b-59fb-4e44-9d6c-ee7f7b56ef01"]](https://www.wolframcloud.com/obj/resourcesystem/images/459/45974932-854c-4af4-86aa-faa1bff7e7ec/441b629544194985.png)

![depthMap = NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"][img]](https://www.wolframcloud.com/obj/resourcesystem/images/459/45974932-854c-4af4-86aa-faa1bff7e7ec/72b56be83d01c8a2.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/d1770d7c-e755-4076-89a4-4e44e3826533"]](https://www.wolframcloud.com/obj/resourcesystem/images/459/45974932-854c-4af4-86aa-faa1bff7e7ec/7f56cf645199e15e.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/2150464a-046d-4f7e-902e-3f7c34a28f13"]](https://www.wolframcloud.com/obj/resourcesystem/images/459/45974932-854c-4af4-86aa-faa1bff7e7ec/0c69e93fc4d03cfc.png)

![NetInformation[NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/459/45974932-854c-4af4-86aa-faa1bff7e7ec/3246c7dc19e4bf5c.png)

![NetInformation[NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/459/45974932-854c-4af4-86aa-faa1bff7e7ec/1dc90f8a27757d98.png)

![NetInformation[NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/459/45974932-854c-4af4-86aa-faa1bff7e7ec/6e4792b12a4f1d41.png)

![NetInformation[NetModel["Single-Image Depth Perception Net Trained on NYU Depth V2 and Depth in the Wild Data"], "SummaryGraphic"]](https://www.wolframcloud.com/obj/resourcesystem/images/459/45974932-854c-4af4-86aa-faa1bff7e7ec/324340a82f64dcac.png)