Resource retrieval

Get the pre-trained net:
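
A minimal sketch of this step, assuming the resource name "ShuffleNet-V1 Trained on ImageNet Competition Data"; the variable name `net` is chosen here only for illustration:

```wl
(* Download the model on first use; later calls read it from the local cache *)
net = NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"]
```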

Basic usage

Classify an image:
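
The original example image is not reproduced here, so the sketch below substitutes a built-in test image. That substitution and the variable names `img` and `pred` are assumptions made for illustration:

```wl
(* Assumption: a standard test image stands in for the page's example image *)
img = ExampleData[{"TestImage", "Peppers"}];

(* Applying the net to an image returns the most likely class *)
pred = NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"][img]
```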

The prediction is an Entity object, which can be queried:
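
One way to query the prediction is sketched below. It assumes the predicted Entity exposes a "Definition" property, and it uses a built-in test image in place of the page's own image:

```wl
(* Assumption: a standard test image stands in for the page's example image *)
pred = NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"][
   ExampleData[{"TestImage", "Peppers"}]];

(* Ask the predicted Entity for its textual definition *)
pred["Definition"]
```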

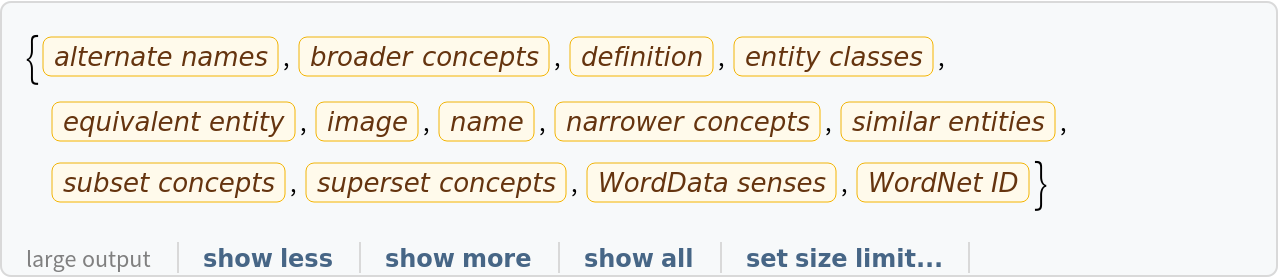

Get a list of available properties of the predicted Entity:
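
A short sketch of the property listing, again with a built-in test image as a stand-in input (an assumption, not the page's original image):

```wl
(* Assumption: a standard test image stands in for the page's example image *)
pred = NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"][
   ExampleData[{"TestImage", "Peppers"}]];

(* List every property that can be requested from this Entity *)
pred["Properties"]
```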

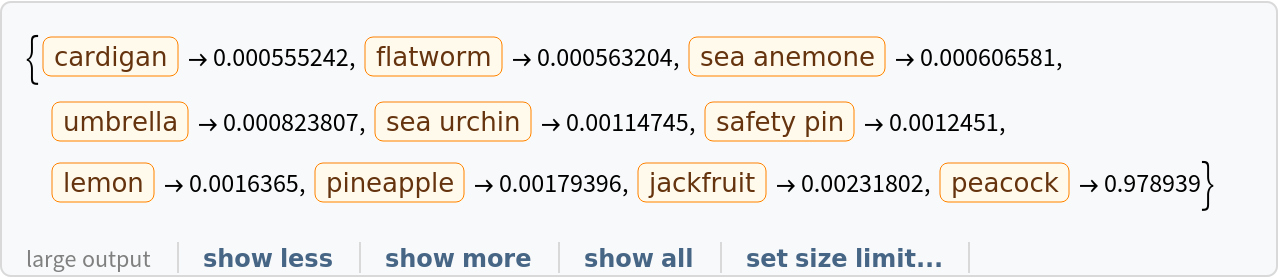

Obtain the probabilities of the 10 most likely entities predicted by the net:
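
The class decoder accepts a second argument that selects what to return. A sketch using the "TopProbabilities" option, with a stand-in test image as the assumed input:

```wl
(* Assumption: a standard test image stands in for the page's example image *)
img = ExampleData[{"TestImage", "Peppers"}];

(* Return the 10 most likely classes together with their probabilities *)
NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"][
 img, {"TopProbabilities", 10}]
```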

An object that does not belong to any of the ImageNet classes will be misidentified:
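
To illustrate, the sketch below feeds the net a rasterized plot, chosen here as an assumed example of content that is not among the ImageNet classes. The net still has to answer with one of its known classes:

```wl
(* Assumption: a rasterized line plot serves as an out-of-class input *)
img = Rasterize[Plot[Sin[x], {x, 0, 10}]];

(* The net can only answer with one of the classes it was trained on *)
NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"][img]
```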

Obtain the list of names of all available classes:
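
A sketch of one way to list the class names, assuming the net's output port carries a class decoder whose labels are Entity objects:

```wl
(* Extract the decoder attached to the output port, then its class labels *)
labels = NetExtract[
    NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"],
    "Output"][["Labels"]];

(* Convert the Entity labels to their plain-text names *)
EntityValue[labels, "Name"]
```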

Feature extraction

Remove the last four layers of the trained net so that the net produces a vector representation of an image:
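
A sketch of the truncation using `NetDrop` with a negative count, which removes layers from the end. It assumes the four layers sit at the top level of the net; the name `extractor` is illustrative:

```wl
(* Drop the last four layers so the net outputs a feature vector *)
extractor = NetDrop[
  NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"], -4]
```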

Get a set of images:
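
The page's original image set is not available here, so this sketch assumes a handful of built-in test images as a stand-in:

```wl
(* Assumption: up to 12 built-in test images replace the page's image set *)
imgs = ExampleData /@ Take[ExampleData["TestImage"], UpTo[12]]
```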

Visualize the features of a set of images:
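
A self-contained sketch of the visualization. The image set and the truncated net are rebuilt here under the same assumptions as before (built-in test images, last four layers dropped):

```wl
(* Assumption: built-in test images stand in for the page's image set *)
imgs = ExampleData /@ Take[ExampleData["TestImage"], UpTo[12]];

(* Truncated net that maps an image to a feature vector *)
extractor = NetDrop[
   NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"], -4];

(* Place images so that similar feature vectors end up close together *)
FeatureSpacePlot[imgs, FeatureExtractor -> extractor]
```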

Visualize convolutional weights

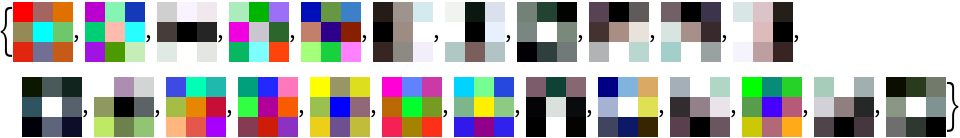

Extract the weights of the first convolutional layer in the trained net:
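
A hedged sketch of the extraction. The layer path `{1, "Weights"}` is an assumption: it only works if the first top-level layer is the convolution itself, and a nested or named layer would need a different path:

```wl
(* Assumption: layer 1 at the top level is the first ConvolutionLayer *)
weights = NetExtract[
  NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"],
  {1, "Weights"}]
```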

Show the dimensions of the weights:
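
A sketch of the dimension check, using the same assumed layer path `{1, "Weights"}` for the first convolution:

```wl
(* Assumption: layer 1 at the top level is the first ConvolutionLayer *)
weights = NetExtract[
   NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"],
   {1, "Weights"}];

(* Ordering is {output channels, input channels, kernel height, kernel width} *)
Dimensions[weights]
```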

Visualize the weights as a list of 24 images of size 3×3:
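
One possible rendering is sketched below. It assumes the layer path `{1, "Weights"}` and treats the three input channels of each filter as the color channels of a small image:

```wl
(* Assumption: layer 1 at the top level is the first ConvolutionLayer *)
weights = NetExtract[
   NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"],
   {1, "Weights"}];

(* Each filter is channels-first, hence Interleaving -> False;
   ImageAdjust rescales the values into a visible range *)
ImageAdjust[Image[#, Interleaving -> False]] & /@ Normal[weights]
```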

Transfer learning

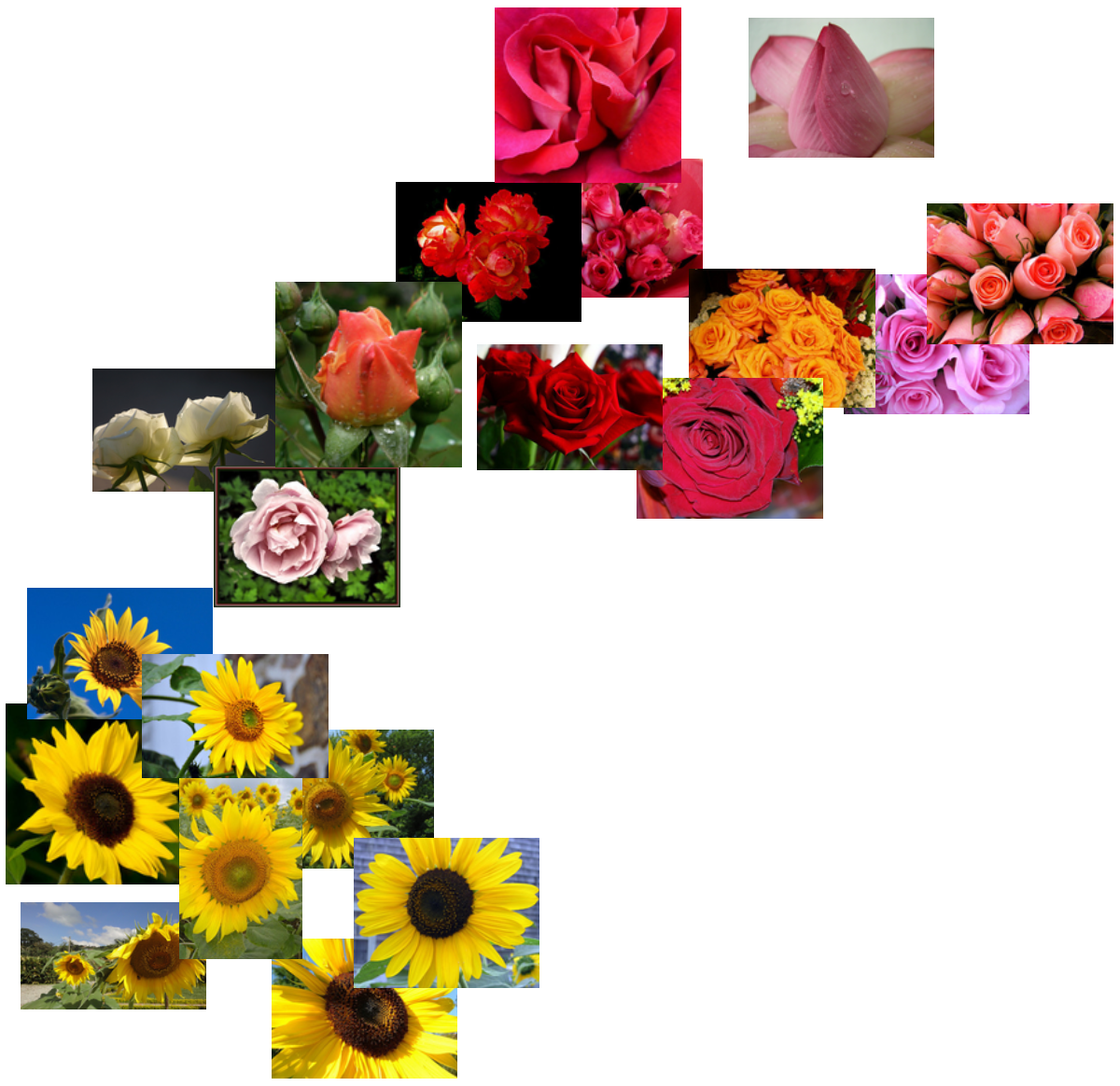

Use the pre-trained model to build a classifier that distinguishes images of sunflowers from images of roses. Create a test set and a training set:

Remove the last layers from the pre-trained net:

Create a new net composed of the pre-trained net followed by a linear layer and a softmax layer:

Train on the dataset, freezing all the weights except for those in the "linearNew" layer (use TargetDevice -> "GPU" for training on a GPU):

Accuracy obtained on the test set:
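
The transfer-learning steps above can be sketched end to end as follows. The folder names, the 80/20 split, and the number of dropped layers (four, matching the feature-extraction step) are assumptions made for illustration; only the layer name "linearNew" comes from the text:

```wl
(* Hypothetical folders of image files; replace with your own data *)
sunflowers = Import /@ FileNames["*.jpg", "sunflowers"];
roses = Import /@ FileNames["*.jpg", "roses"];

(* Label, shuffle, and split into training and test sets (assumed 80/20) *)
data = RandomSample[
   Join[Thread[sunflowers -> "sunflower"], Thread[roses -> "rose"]]];
{trainSet, testSet} = TakeDrop[data, Floor[0.8 Length[data]]];

(* Assumption: dropping the last four layers leaves a feature extractor *)
tempNet = NetDrop[
   NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"], -4];

(* Pre-trained body followed by a new linear layer and a softmax layer *)
newNet = NetChain[
   <|"pretrainedNet" -> tempNet,
     "linearNew" -> LinearLayer[],
     "softmax" -> SoftmaxLayer[]|>,
   "Output" -> NetDecoder[{"Class", {"sunflower", "rose"}}]];

(* Freeze everything except "linearNew"; add TargetDevice -> "GPU" if available *)
trainedNet = NetTrain[newNet, trainSet,
   LearningRateMultipliers -> {"linearNew" -> 1, _ -> 0}];

(* Fraction of test examples classified correctly *)
ClassifierMeasurements[trainedNet, testSet, "Accuracy"]
```

Setting the learning-rate multiplier to 0 for every layer except "linearNew" keeps the pre-trained weights fixed, so only the small new layer is fitted to the two-class data.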

Net information

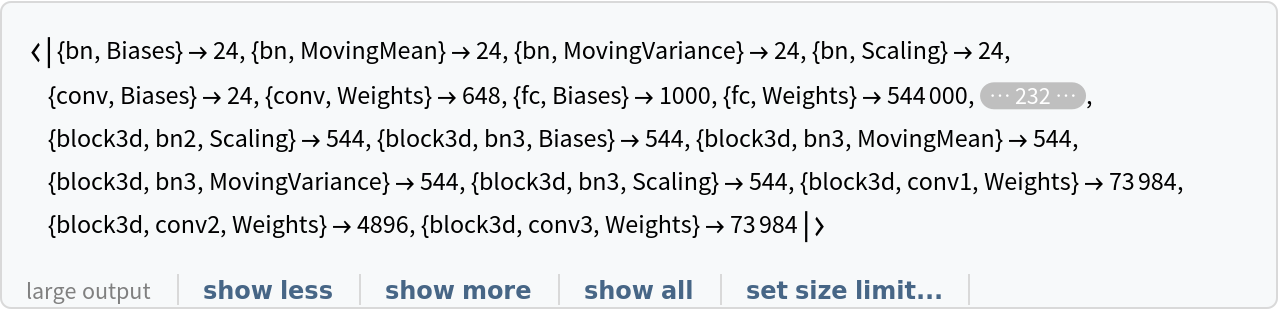

Inspect the number of parameters of all arrays in the net:
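
A sketch of this query using the "ArraysElementCounts" net property:

```wl
(* Number of parameters in each learnable array, keyed by its position in the net *)
Information[
 NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"],
 "ArraysElementCounts"]
```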

Obtain the total number of parameters:
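
A sketch of this query using the "ArraysTotalElementCount" net property:

```wl
(* Sum of the element counts over all arrays in the net *)
Information[
 NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"],
 "ArraysTotalElementCount"]
```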

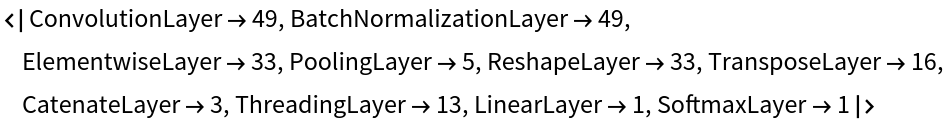

Obtain the layer type counts:
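
A sketch of this query using the "LayerTypeCounts" net property:

```wl
(* How many layers of each type the net contains *)
Information[
 NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"],
 "LayerTypeCounts"]
```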

Display the summary graphic:
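
A sketch of this query using the "SummaryGraphic" net property:

```wl
(* Diagram of the net's overall structure *)
Information[
 NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"],
 "SummaryGraphic"]
```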

Export to ONNX

Export the net to the ONNX format:
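
A sketch of the export. The output location and file name are assumptions chosen for illustration:

```wl
(* Assumption: write to the temporary directory under the name "net.onnx" *)
onnxFile = Export[
  FileNameJoin[{$TemporaryDirectory, "net.onnx"}],
  NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"]]
```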

Get the size of the ONNX file:

The size is similar to the byte count of the resource object:

Check some metadata of the ONNX model:

Import the model back into the Wolfram Language. Note that the NetEncoder and NetDecoder will be absent, because ONNX does not support them:
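
The ONNX steps after the export can be sketched together as follows. The file path is an assumption, and the "ByteCount" resource property and the "OperatorSetVersion" and "IRVersion" import elements are used on the assumption that they are available in your Wolfram Language version:

```wl
(* Assumption: write to the temporary directory under the name "net.onnx" *)
onnxFile = Export[
   FileNameJoin[{$TemporaryDirectory, "net.onnx"}],
   NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"]];

(* Size of the exported file in bytes *)
FileByteCount[onnxFile]

(* Byte count reported for the resource, for comparison *)
NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data", "ByteCount"]

(* Metadata stored in the ONNX model *)
{Import[onnxFile, "OperatorSetVersion"], Import[onnxFile, "IRVersion"]}

(* Re-import as a net; the encoder and decoder are not restored *)
Import[onnxFile]
```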

![pred = NetModel[

"ShuffleNet-V1 Trained on ImageNet Competition Data"][\!\(\*

GraphicsBox[

TagBox[RasterBox[CompressedData["


lURPxpO7IH+vzVe8R02heFf9rSY0UxF41Hmzu8CNqkedpPaHc7LqMEa5ffMD

T1qevU3pg4PUPDhM/cYeYvstONArmZa9Lt4g2qrFHxJXRatHMpOinVE0TJUZ

zvpse8v+bNsryjd1lstTn277R7KcN8gWu4HCc5a3KzrZu66RWRcuKA/j3JN5

ci5WcaTaDadWb0LFk8tXS5i4O0bTdIPMXyAlDal0nK8guC+Jw5V+wlfWuArn

BuadRVMfyPy9fvHOSGIrAogyuBOZ5ktBfTpp0ntjZ4Mou5ZBYqcP2S2+lA1G

UDoaQ9lIrGgdxNytNm5+tEpeRyrGoWSm7w6ha4vFt82e4pFEWq6byLuQT//d

fnremyB1TEfWdDpeTcEULZSz+OACtcvNOHS6cbLRgh3VVnR+MIHLQDj7ag9v

HS9x7fth6u7bEzh6lqIHR8h7fJrM9xw5PSN9LeM8Zt4NsrZHUSRdlK4r/muU

+c0VjdNlSxWd0z7enuVNLygSfbNebN/OINyRLKepjzCLX5fuI3+3tZaWJxn4

rKWyozuQvcK5dZcbWfliCc+WEI5mW4o3B5I+lLR1rNzgxgBdQ+WkVqsxPaqj

5pfZBE7Y45BzCucMF07l2nAicj/qEm9GpXOPrrWRYAyVjhdGzWg9edJhtX1a

vKWHlwxGUi5zldEZRl5LNNOSnc1TRtIaQ8lsi6b7Yj01U6X4G92J61ZTsiZd

82oe1bKm7r9+Qt5iIcbzBRjEf2MGw7n35XN+9Xf/TMFkM9Z1juyvOMPJfnfi

V+LwmPHmZO8JtBe1XPp4ndyb4vnjB+m4t4ORW7uIvGTFO/PV4rMz7FCJvpvv

fUi4JlrKHOY8ljUts5ki+mZ+uu0Pm3m2uRXKHOduXn9Drr8q2t5CoRfGi1xA

4d6Prama9tuzHEp+jyTpSgeGA1EWOJCznMvL3wpfdBl4N9UW+3xn1MKxm+95

ff+H67QM5KFujWPjqxRSBqoJGS4jqD0YnzoNtoYT7NGewS7Xnu7ZbK7cmEJX

GInWGCynIfimq3DNVJHVGiq6hpEsXnztwQKrN8YlPwPIkm48er2Z6Ru9xBcK

hxd6kDOVRJH4aPFF6TwPemi61E78QByNt2qJHjPg1KRi5cNr3HrvBSpjCE61

1pwyHcdCstVzKhibcRUW3WewmvVj5tNlLEwHCZmyY/Zjc+bf30H7p6c5ul6P

Iq4XMy/hiHjRNfXhNj9kydpPl4wzbGq86QWbXrup9X/7boZ4SIrcXi/6psk+

iV9BGTImPtPJvnh5Hl/P4Ji6QnNPEwU3UlB0eKLoskMzlMjVL86TPpfL0Rx7

DiSYS09wIrLFh4Fr5cIXOuafpOBZ8AyF3zXxMS0mWbtRlcHsiju9dayqneEk

mbn+rF/pYXiygOwSDdrMINyCrUXfcJnhGBqX8qgbzSbG5EtSZRBF7Xr6LjST

WKEhMtebtF6NMIeWmqtNVC+3M/1kiYQhHXpZU13rHajrE+nYmKZ+tkt8Xodf

qSfWxVYcLbTFqskN55lYrLv9t/jaotuHpY/XsGl1wmbwDIljJ5h57x1GX+7n

2GWdeG4XyqAJWecyh1mbmSbekCbrP1W2TNHSIJtOnq9eNsMTuV66R7roq9/Y

zsPoKRQRgygC29ip7sfMU3L/bhaPfzfMjxtzXLjZyKGxQI72qdhhssW8U03v

405Kx7JxNPqwN+YMFgXOuBltCRMtMhfVDG/kE17Yz8btHEwbuWQuFRIpjGue

Z49/rTcRm99nkiTeLFttl54O4T9jcRTlAwZuv5iifboA3eZxi0ORFAwnMrbp

E+cKiJX7xtYEY5iIJnkigcJpyboXj+k8N4amK5L8mQIaLzUS2ZjAjQ8fiR/N

E1jkQmh9EJ4dfli0ueI0GMRZYXvnJj+sG91xlP258sk1XDp8cGhTYtm8g4Lu

k9z5bgeqq2rx3XbMYi+IhtLbUoR50zdnVjROeb6ts0EuM7zc1j1H5nrzPZZp

ctvYFbmv8EekdJLN42uDmlComkTfYnbXJKB/0MTtj0ZY/nwI9/Pp2M9EsafJ

izdMXpxs9aVc2Gfz8xMDhNH2JoivJZzEUzqss7Bu9r0Q3ntdwKvN4yceFOKz

+dk9dT4E5DqSNyIdutYXH5MbATkOROY7CCdIZjaFcOHeJJ2T5aQ0qMiXfIut

8yVnIIHSSQOaShWhJhVpQ3HScSMoX65g+PISD199QnJPNhkDSZQtlFG+UEz6

cDIbwr2XXt4moMEb3UiM9OggzrS6c6ohHJ+RFGyrQnCQTuotLDf/YhbvETVp

kz54Dpih7Twmf38/rm0hoous60TxUr3omSqekCH6posPJIu2yeKzqTKzmaJr

hnBb5j3R/Yr4wZL4tcx8tGRijGgc2o/CRxjEowEzlYkjc3kopvQ4XTeie5zD

oRENwc8LCRzJ5Y08T96s9OJUpbv00zQqZvPImSlnX8xZTmXa4VauQT1Vgv5K

Bq0fFFH3uBRPYXuvhkAcNz8rQjpJk9wnpSmcgDxb7JKO4Wt0IEz6dut0DimN

XtKJbXCNsaNmwETdUjHJXWoi6pzQdUh36Qgmbiieurl6Xnz7Ke1LY2jFPwrG

MimfLCF9JFuYLJfz752jaLqQoB5/ssXPXMXjDknHzn5STM29CpJ7a7EpCcGx

PoqWtWZCRgLImLSXx/oGAR0HsM6yxSxaWDVB1rnu1nY/MIiehqfbvrA5uxmb

Oba5iS+ny20M4rdJMrexkyjCB1CGD4rvNovv1gqbSddW1clpLZH3esn+MZcz

636YzUi37/Mk/GIelc/a8B1MFlaTHlsdgIfwhJ/JlazNjF+swKLEh2Np1oR0

JpB8vozgFQNhC0kkXcojby6TYJM7dqmn0OZ5MTZXItyswybBhpOhp3DS2pLS

6im87IpP+uZ3Z1byiz9+S9VcOV6lDrgVmxNW44enyZM40XN4ZZyZjRUSGtJI

atKROZBB2wXJuJ5UaoQnFx/NkjpuIHE+moj+WI6Xu9LyKpjll/5MvXBh5KNU

4vuK8SyO3fpOsPBuO6IGdhE3sgeP/n3sKRLu111DmS7zmSRzmSyeqpO1nyT5

liyXpYqu2Y+2tU0TbTMlx9KFMXSL4gmDW55gFtaJ0s+E0qMEpXsxOzzLULrl

krTWyqefSX6vbh7jGc6hDke5vz/Bi/kYb9Vh0xLJ6bYYdgqrW1aFckBvQ4Ap

gM4r1dKPfdgVcwyryhDO1oaStr75ekgqcbWexDb6EDUYjXeOC97CE5psR/QV

oVv/owjVS4+eCBYukN4xnMe9jx4zfLGdFPlboa2ueFbYEFLlhapcRWJDOmu3

r1DSX0NCcwIp0kPyp4sxzdcQUKWh/VIHw+uDRHZqSV9IIXI5iZBZFzqeJeAy

sk5ayyDnH0jPuO1JcqNBunckiaNuJE6fpPvcKdy7rFFsfp6R8aZ0BfFVg6z9

JNEzQbRMFq1197f9WLhLkXV/m8XS1uTnpW1vCO9D4VspGS/87F8j3bocM488

drjmonDMpnK8hV89GiJ76gluJc+plK65Z/O9XKKh9VAsAbNpeHZrOSRzbCbZ

fEgY6miOG9ZGT2rOVaHp0fJ2hjlH9JbouoJoXk+leS4e+4wjRMjcz7+YoElm

RpPhgHvMafJlX83daSeyyJPQMjdm7oxx8+Ob0jOMRJSqCDSdkW5rhbPsu4hW

YeN2I9NXegnJDiemQUP6YCZFA4X4VYRLBkQyenka00oZ+tUUDBcSiT6fQN5N

O0yvZ0Qn6QD+H2C8NMf043fJGQ8iozmWJOkfEW1WjG9+t6JkqqJsaPt4gIzn

2z6r39RXtEySywyia/LlbQZLkd6QKtoapNslzKOIGkERIH7gVyHc0Cn+3SKz

a8TMWc9Ot3QU1kYSOo188bie6gHJTUULBQ291FxJ4MCm35e4sqfMF8+xCM4U

B3CsNog3ZB6PlgdzMt+NvTpz4geTqJHZP5lhh22xK6oyKyLL7CkfS0eV5YZX

oZc8tzbK+nUYO3R4ZlqiMXmgSnSjQNZ55UoBTWvl5HfFoRLmONTgj/VyGK69

YcS0aslsTCXZFEVUWaSwcCTGgXKqJloIq0hi7M4y1z64Sd65fLzHpaus5ZO0

kIN6w50HPzST3NrMMeM0Iy+XePGBZOWEK1HFEWT3+XNkKIaw8zpW5mI4a5LM

z76PMkPmVS+6ponO8Zsa39pmr80cSxY/SBY/SBQGSxLP1QorBEmn9qlgR1ir

zLF0am+ZYadUlA4JmDnFoziVhHWJH8tXMvnq7iy6oipqOlr49kYZYbNRHB/2

YU+9By5TksPD4ewWX9td7c+JRg37ZNZ2ZwdyQKPCp1RYbdqAdZEHJ0q9cCz2

wUf0D670xznXE0f9GfGMYDplzgzCuWqDLS2THfwonts4VyWemEN8gx/q3kPk

fRNI+c/lxK1LN+szkN2qxz9FrjOpiS6NorCvkKrVFiIq4pm4PsfQ3RE0E1F4

9UZSttKCaqqIE90efPpVDV+/6ODzTxr55FoJL7+uIHtRckG8K2g2haCxWPyX

DJSu26MdyBdveIzZJhNs5tvm/GrFJxLlvO76tr468QKdaBs9IJ4rsxoqPc+3

TDQtkRmu2vYIF5lZh0SUtjEoLMIwOxWNQu1B7JQTd+cK+OFeH6+fNvLtei1T

qxrqrgZTPmdO4lI0zR/VUP5hAcYneqaeplPzXIvjeTWOYwmcKXTHZrMLSJYH

DCfgXRtIeJ0/VmmW2G1+nn9XPGGb3yEi2qbLGo+VtXDhwSzrdxboXmpBNxqD

88RBqj93pu5hBt3PIqh9lULuZAbqTGesQh1Qye+Jr9BTIv3cxuhHSlcBY7dn

yBRecO5xk9ktoGKxActWNWaNjoy+KOb163G+eNLJRx92s/qqjNABPenrUVTf

DcR0yZW6226MXzpCyTlz8d+1bV7Qih+kiM6J4rlaybGkTV4Tf0iWzpuw2R/E

b4MaZRM9ffIk0wpEY/FaT9HWPk48IQqlpWh7JgTlmQjeUEmfrTtM6WII98bT

+WyliofymHsv+HPpdqpoX4VpMYWxB+E8eq+O766XUj2mo2C0mJGbwWSf85H5

8cKmyo09BgfZF9mSzVG4lktnylGxM8oKy1Q7kmV+3TJsOJNqSXRHDAMXO+mY

rmbwRhNRSxH4PzhL4v0Zdlb+TfruXfo+DiZhVGY90w0/2XfaihQySjPFUwpx

yvChc2mI2pUeoobTsBEfz1qrYOjWPPvSxLfabLCa8yXvagaDn1TS/EByfMaf

imsqJj8JZXo5gNmJZJZXE1iZD6JvcS8etTLDcfdQaq9u+0KSzG3cRdFZtgTx

hTjxBI3MbmibdDyZV2+jsEI6Sm/h202vdUpAaROBwlKzNbsKc8mrY368fXwP

O5N3cbZrJ7mSqQNzarrnZd8ueTB3zcDbLc0czG6Un9N4sVbNbx8Oo+oSf7e/

S0pROb3jsbjXlxA+5IHl5ucxpDgJO2vQjSVzNNWKk6mOnNS6YJVshZXWgshS

DUd1NtR0VzN6rY/soWTsh72JexFCxXNhoLPnJZN+oOW+CcOqDRFVajJ7MtHW

xVM8WEqm8Ia2WsfasyvS0QuIljUfOhxH2dVG+jcm2JvmyLGMo3jPhHJ21Y1D

7dZ4TvnQ/8SdrrUzTI0m0yusdm02k9uDRpa7Mhgdk33ecBazeGGtGHlucZJF

CfI44s/Jz5szK50hXDxBXY8yoByFV46wgeTipg84xKOUud3S1jxQHn8wyrPS

BU97ozxkzZ7Te3kjbBdmOQrM+nagXj6N6bw1C+NhtIy5Unouiv1xBurGyvho

Wcfz28VE14r3KNI5EVLDnQtayuuKCGsvIrPfg7czPThS5UGYaJw4FYe1nFfE

OXIwT411mh8Hg09zXGdHQXMmFdJNAqQHHurwIOGFntdfDFHeP4xLxUMGnueR

et2BgOYAQor8pHOEUzRRhm9ZLGn1+aR0CJN0iO+cSyF2Utb9TBHHG93YX3Ca

w9KJcgezyF0zELTuTc3NQDoe2NO9Gid8scHOge8JPH+OO9KLbg8k0y19KDLP

kh3aNGECmdOo8a3PpdzKLMkuZZBwl59wrZ/4gSqNnS7x7HSIRGkVgpmFP2bm

PihPe0meqVCcEF1PeMnmws4Dh9l78k3ecHhT9tFbKJr2c2BoN97Tx6hccCat

1pyrC7Es30rl8w0D76/ouTypYXUuh9TaBIztobw3l8xAXxiJg4msryZK/w/B

p9UD/bg3xRsaim5r0M6I3z1Kp+JBMtlLGjyM9lRKN46uCsc13YG3Kh0Jv6Xl

s6/H+OH5Iq/f6+biNxmEn/fAt8eXIOGY6JZ4eq70Y64LYOb2NfEfHWEdcXiO

R5M6n0fbpUFcepI4VufMoQp57PPxhAvnaiYdSF5youHecWaelXCsU7qYQnIp

fpbRJ728v5pKe00gPtm2vJEQJDp0i65d0sXaZF6lgwWWi6bZwl0GlK4yp44a

dtio2XHWB7PTnpgdFZY9bIPyqPSGY84ojrrJeReU71ryzr4DHDn6JodPvsW7

qn0cSTnEm7lvo2jdy4H5XTiMvkNTpz+3Z6L5/kouD6eiqBRPnJGZujWVyIPz

cTydi2Wwx52VtTA+WM4SfgoncvA4xolDNF14V9jemzu3Ypkbd6S8y5+SSTUt

i7HomvSEF6lxNriirHblQK8PG1/O8NEPa/zq1xcZ+1I67bQ7ttW22KS6EFEf

R1J/GkF1CUzeXeKg3E/TF8XZfh8yN4+bvjCETWUE5jVuWDW5kiz7NHzGCs3Y

CeLPnaTghjXXRN9W6SP7XNPxLu/m7qNW7i9kM93nhVO2FcroUJShkltq6bf+

m7mVicIjBaVLguRWOEprf1n37qKjk2yOmMn6VxywQLHPHIXoqTxkheKgPTve

tcLsbdHw4B7OWO7H1mY3nnEnUBdZoc53FtY9yc7qgyg638Guax/DHc6sDwlf

jpzEeuwNqk3SOUtDuN6tY6Etgu6uYK7PGXkyW0VpbwpZY8Jisxa0zBzhynwK

v7oaR+VAMG/qHvKuYYnKGmGJ8ig8dba4RVsKF53mrSk1HhNa+p/30vKsGZu1

KPb2u2BX44y93l56l3BKli/5o3WYVoVXYw4S0B6O21AYWcK/udNleNYFYlMi

PbPMSZjEgqxl2c9T9qSvHSV2xoXbNyr57cfdPHjWx6cPO/n2Wht3x9Jo73Lm

dM4ZYSzJpMBC8YFMmVnR1CVSfDYUhZWvZJas/ZOi6xHr7e2gaHrgtGh7EsXe

E9unB05htl98fPcRlDt2cubEPiysdhEadYrIcmvxODt09T7SSZ1wyz+GfeEh

TjXu42yZkuOlSpSFOzBreAe3wX2E1BxCW3lIOudJEjvO0t8RxJz0EJv6AHJ6

DWzMayjptefSeCQ/XBP/6M9A8WaqbBVyn2SijWrcpdPZhJzgVKIllqPRWC7o

sF+Q3GhzQDEsXbHHG4cKD9SFoZTOlrA7zpnB64u4NMVJz7HErzEI/54wQgdj

8GsPw67OiRPlFrj2u1Nyw4H6ixaETCVhf02LduUsHZeieL2exRcbxXxxpZZX

ktfTQ4EElh1jZ8QxFHa+KKUXmHlIVjnKrFp6CmM5ozxpJx4geh4UPQ+Ijvvl

tvuOonjnv7e9x7Y25b4jmO06wM433uatt3ZgeeZtvP2ObH3+ZlSVPF7TWaJq

ncnp9CCj0oVQ6bjumUc5GLaPXf77MPN+A7O0d2SuFShGFOzoOcGOxl0cHnub

2NH95IzsFuYK48FCLh+IB1T22FI5aMFT6dxzF6KxjwjCPjiAgaEgYlO8CdLa

4xhmLfwVzKkiL4rXSrCclRkac5aO7ox1iwcOBS4yu+XkTDXjWqOleqmd3cXu

7Eo8gk+1O9HSw87WubO33JbAgSCKhM+LP4in6q4LFfetCZ/35F3h8ohzzhjn

9mOadWd6MIgLneJZfQFE1e0WfpLssTmB8rjwj0WAnHcTFhAflXk1OybaHjiD

2YETmL1zZOt7k5V7D4ie+0Xbw3J65P/fdgkrvPkWu97cwSHxhpg4B2qbtNS2

RgmfemJs9iezwZfyvkAKG9QUb343jfBOdK7kRIYTpzPexrphL0f1B6RLitYt

hzHreBfF6lGUV63RTzjz0VwqLxaEfYaiCB06jlvjTqY61NwcT2S035eJ4UDW

xrz4fwFwto65

"], {{0, 102.}, {88., 0}}, {0, 255},

ColorFunction->RGBColor],

BoxForm`ImageTag["Byte", ColorSpace -> "RGB", Interleaving -> True],

Selectable->False],

DefaultBaseStyle->"ImageGraphics",

ImageSizeRaw->{88., 102.},

PlotRange->{{0, 88.}, {0, 102.}}]\)]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79cab740-eca5-4921-b1a7-e79f82fb39d8/35cba68d81b1a5a8.png)

![NetModel["ShuffleNet-V1 Trained on ImageNet Competition Data"][\!\(\*

GraphicsBox[

TagBox[RasterBox[CompressedData["


uKbfzlD221WG/7qCU7YJRcIllCPCv8M/bc/uLZnhm//XVl9WLIkPT/0nZlW/

Jmplmqz1SnneaYw86uPS+9PM3Oql7WIDDRcaMc0VU9AcQ/WwHk2JO0FZwgYF

bpSN6DB0hJMhWZfeGIyhKoBkWdfxKe4UVsVgrIhAZQojTB5v12eOwtxeJM3r

CR/yQz+aiKbIngjphLl1gWRI766T5940EEPpgJoJyTJLUwjvtLlTJfz01asp

YkqF6xX9HAx9ytvStXwuGbjxwQWaZHavfLxK9z1hiCs59FwX5rwh+2cxFh9h

6/7lUjzk9jsLHNlbE8g7HWHsEd/RvMgk9Fo0KU8yKP6sgPCLUYz/MMLS7xYY

+baD9MfNDP/lDp0/rZHzqkO6xxw1v79K57+vsDcjE7OYSyjGf4tiVDJuVWb4

3v+5rfOSdI2p/4Fy8j+2cu5Ixyqn5vPwak4gdVBH+61qkno0VAykkiYdIq0j

TmYxGSfDafyzrfHUWxKQ5UpEnrtk10Fc4ixwjD6Jt1zuFio+G2lDvMETTYI9

mVXJlNxOZeRaIAr/jzkWe4ncWTVpQ/Hii7EYilQUCkv3jZeyfLGLxj4tCxer

mJgr4li5H3t6ZT9+W8aTV10s31jiuOEltr0Pcf1QTfyccOW5AvLG9QxLvo7e

7mL0ZiPF8/m4j0WQM5JNivwNe+nzh+p8OdIcxKEGe940RvDOjDfmK5HkyrrQ

Xk6l4IWJhm9MdH1nov5X0Ux/k0PLqzHRtYXk92spEo4o/Pky4d8N0PSfl/Br

F4aIkI7c8Qvxmd+gmP6n7Wy7LBovCU/MyPlR4Yju/8He5m85PltKyGgafXfb

KZWsSJhMxL/UnaLuRAq79JT0pqERFouu9cJeGHfTo0OybNGW+hCU6SwZbY6H

wXLr2CeP8BN4+BwjLMKBlOQwgvNd6bnjwv7wSd5yk3Uo+aOv8xY/DsMt6jBB

CVZbx1y5RR4hTrp2TLpsGY7YpJ1lR74dhxciyPxVKUu/aaPre+mu38aRdDmc

1qV6Jh/2sHirm+sv5nj26hpzD7u3/lcaOBAi2RKLdbMP3n1eOEuXfrf2LMcq

PXkrUYVZowvWY674Sr7lfZxD199nk3Yri64vO6h/z428q4k8+o9zsnZKaPp6

kv6fLhL9sgK9MEX4rydwbG9EoZ1HWfuFsO6vUQz+aft/aZs9bjPb5mUbEhbu

l1mu/kdODM6RdaOZCx/OcemDRYafDWASzqyfymfxzgh5g0bpes00rdZsddry

mRKKhtKpl7nPES8tHBI2q1eLZp4kFvliyA4iINgafV4AQTFepNUdpnBMI3lX

wFy/J+opF8JbQ7DRWGCuOoq9v3Bc8GFhCOnGKU6yOeArXGJvcuDNfAfMpj0x

fxyJzdMA9py3I7QtnuGlVkzjWQytFUsXyaZCekvuuHTQvjB2LLhw+Ho4hxf9

ObVqx6liFceMx9iRtJ+3s315oyNSvMKCqBtZpHyaht9cNK0/NLL4tyqyblaz

9pcB6j9Np0707PldC10/tUs/nqTkuxWOftIj2mrY6TeIouixeMB30uN+Fq+Q

GV6Qmb24+ZqRzPCQ9LlhOW3+J/Y3fIFnr4G0/hSqp6vJ7E+joC+BuMoQedyl

JPXlCCsVYRozktaVjEYyMLYlBP8CW5Ib/ETjWDI7YuX2gZJzcWQWhBAW60x5

dSSFhRE4hpiT1GxNxbK19BjpBLPJGPpzCShwx8LvCA4+m+9dtcDGaz/H3d/i

jPcuAlp8Ca6xx1069lmZt7caTrFj2o5drfbkjCXTt1pM7oDk6lQkFUtJlMia

s67xQ1Hii83dSDLXFtkRPou59Keds37srLWXObPijUw39pT7c3TICbdlX6xW

Ekn4sILEp0mkPkuj7qs8Wr8qRP+BgSaZVePzWLJfN6L5sJ28bweI/XmS3elq

zJzLUBbelo4hHtEvPjz5r+K7/yYeLPouyNyOyjwP/g1F6z+xs/SX6GemaVir

YfX5OkO3h+lYrKWiJRcXgwc28pgC8/0IqQ4moiqEqAo1mopQXIUnXUbCZN9Y

ENxxhBDRXF0cRojwWnCULaFhrmi1KrzDLAiR3hLVGk3wcBSRwzoihLWjcz0J

jHPA2f84hdVR6LICCM2RHKz0QlVljlv9cQLqhDlq7IhrdsGnxYXAVg/Kp3Ti

vQZarhTTf7WB/o1GGm9KL64WnjNJP3yez7XbC9iFTxF9sZPdi36SlYEcvhnC

wakIdjc5c/ZSHLb9Xug/0eJ0MYTQS9nSgXswPdJS8UOteHMSplcNjPy+kZpv

GvF6UE/K6zqq/zqH/ZoJpZ0eRe4Gip5fyvz+negqnrAovjsumo5tHhMnGveL

R/TJZcU/S39bx3RriInnM7z/1SP6b03zwe/fZ/35OXI7DOg7Ewko9kVTqt56

/Vstejm3RNLxUzGVVwOIHShEt6ojYDQW73o7vKLs0cSKX+j90WhdiUp3E8bw

JUz82FVvx3FvYV7fY7h5HsHJ5QAhoeZye3v0RUEUNgaSLH0iYdiVsIazxLQ4

ou9Rk2GKZPB8LZeeDdFwvpCejWIWHnVgmkkXPgnldKtk2LB04ktZXPjFPPc+

O0/MJ6nik84kCOuaRoTJmn3ZsRTDofFA3G8nc3rDl6B5f+p+H0qInC96GEbO

R5mk3w4l41Ypg39fR9YDdwyfTGH8x1Ui7uTguFrIWy4pKPXnUVS+Fo1/3v5f

+6z4wfDftud2UeZ4/L9Ea9G57j8wK/2SnCcNGJ+UUrDSQFabltQOPWsPpinv

NJDYHk12SyK9M5WU1CWSVhJGTFkUfbciSBkfRmH1moSGDJKWggmRnu+RdIrA

KDvhNekLmX5b7632Fw1dfI5j7XIYN28LAtTSTbyO4uFxGHub3XiJT0QnOJOS

ZUdylwcZ/X6UTmgoHouWPl7ExUejjF2qYlZ4snrMQM1EKvmip3EwSRi4k6bL

1aS/6GZvbwIeMo+hd3WcEf8KLA3DuyGYutv5HE2Q3JwJI+CyL1VfBEnntybp

qRdhKx5o18IxrBUx+osKxr9LpehlPsW/HGfmzyYGf+rE/NMRsv9ygdiPR1C4

xqM0LKIo/1w89nfS10TfCeGzqU12EDab2cw5YeLZf0PZ9jfMav5GwMx52u5W

EPMgB5/JFAJLAkgQH4gVTzsVeQBL7WkCU13QZvsQGmNPkqxnfZmb+LU3ezTD

ZJliKJnxxH8wGOs8Ryx9DhIaZ01BubCwZJi7cIWH91HcVYeJ03ngqbZEnxuE

Lt0X/2Bb8es0IqNdSBU/qpqPpnMxi97lXPIGgijpjKSoL5z0Bi8ypGfn9sZT

PZpF62qe+EQjTQv5mNa0eF9QsaNLxRuSnYF5fpS0ZJLalI5LsvTmRiec+tRY

dFkQOyl/+6o1Je8nkntH+ur1OPKexVH7STBTv/Gk+7k/cTfFG29VMvpHHfo7

Frg+7SPpH4awvJfDG4FpKJJXxI9kftv+IHn2jyj6ROMBYeGRf9ve5qRvDItX

tPwLymrp0aH/hHfqNC2P8nCQzujUKLpkSTdoDie1OgyXlDOS+ftRJ9sSZxCf

yw0jPs0Pb+m20eKP1cNupM8H4jmuln7gwzH/E4SLLwwtlWGsiiAi0RzfsCM4

q/Zht/mZPC678Al5F71BerPGnuy8ULTRKvQ6X7J7nIkyWZBYJWu72Y6MZhXG

3nBK+uNpmCqmY6mJ0qFs6s/lkjKvQydrvmA+hrhGS2EPC9zTrXBNOIG2wo/0

wTTK56sILUmh6kYT3uXWBM3aE3/ZmbT7KgzLNtz4cyB1T8Op/Ua663U76n8o

pv1byeUPCyl9YiDhjoHyPy4S86n077sZ7E/PQhk/j6LgFYqqH0Vj6XG9ou+g

5NyoaDspvjAhfjEuc9z97+xo/ivKrL8SXrjE4koyPtcKOC3rPLkzB1NXIcXd

qWhyPQjPdsE3+iz+EdYkGnyJjHfCP9qRKKM9YR2ehMp6VrUG4lPihVusNVGZ

wbSPmsioDCa5VEVsjiteEacI18lzy1eRW+wh+triI5r7+h4hMsQBP9VJtOLh

SS1uJFepyBkKovtaEeO3+qmbLKZB+p1pWjxL2EEnvTd7OYWOez1svFpANxa2

dexIcrkKP+maqjxPnIr8ME4Uc/HyOHn3mjndeJLmu6cw3HGTnudMy72jtH7q

iWpW2PID6dU3ReNHufR/L93lu2TJpVgiP6rE+KqG2KfVBH53jjcSs1HGzKDI

F33zvkTRIBnX8ffCw/8gsyz+O/If2z7cL6et/4pZl3iH6Sc0jTepuCH+cMWI

i/hu3XIrD358yOCDEYbOS2+siSYo2Q6PiM3Pr3UnLtkddbQrMUY/soUBQvJ9

cUtxxj7sDM5hZ2XGNYwsNW69XyqjOYzwHJnLHLlfvjdDlxpZezbGxntzdEiH

KyqLIDVFRUiQOem9IVTL3E1f7KB9VdhQ/KpmIoPKCQP5Q1rC6lVkjKfQfqVG

8jWL4ccDdG5UEjHkQUyTD8HVPjgIQxcuV6Fui6N+tYK86RqO3lFjuKolPs9G

5nYPUVfN0Z/zpOtbfyI3gmh+nIJWrh/7ZR1LX4fS/F0xNZ/HE3cjmdwPc7a6

sufdHvYVVaCIHBV9P5IZ/kZ6hHhEvWjc/Oet14gUHTLLvcJoHX9F0fQvW8fX

K4x/Zq/pI2LX+zHv0xM+WEzqhF66fQMr96e5/+VtLr23SMng5jGJesKSVKQV

BKFJFs7S2RKps8HJ9xAnHPZy0uEwRyz3EihcW9kbTfV4EjktGgYvlLN0p4Om

0QyMTeGUdsZT1qGjf7GM6uZErtwaoLQ8johlKxIvq0hYs8JuPoi45QSMw4nE

jQQLp2vQt8fRe6WJlacDGIV/s2biiB4XBlwMxH5I9u/gMRw2jqC6m0TwUibR

l3JQjVThcSWa+x+MklUrrDVzlNFnHgx/7EbKjTOUfeRF5RM3jB9E0vVbA50v

nORxeKO/F03GzSCSH2cR/CiVgCe9HMgsQRnSizL/pWTct8Jgv0JRKlud+ETz

X6R3iKYDm/Mr+vb9H1v/i1BWyeXp3xM0ukTmbC76vnyyVrJovtMi+VGFrklD

0Xg2Cw/HefntPe68WqdhMAtjdTiquDO4xh7HM+oUbgEncfQ8xlnn/QRozIlL

MxcmPkuU+HaY7jTZ9f5UC4M1DkTy6PMhliVDantFsyyPrdfzNznN69Ypgu+G

4/cohuBPgznwUicdXkvJyubrGeWk9CTRIL4+cr2GguUk4nv8COt14cS6N4c2

jlL2ofD1rT4SHqpRf5KE5Wg6XfeukHOuiAdP6li/UEDzDQtmnwcz+aU3QRft

8Fx2IO+FO4UX9xHefYrGJ16k3ggj94UW26k4Am9XyPkcjq8k8JbRhCJiSrzh

/W2PKPpe9JWuXClzXCVzXCPM1iaZ1yZ+0S3Z1vHPKOvk8vw/8WbuvGRLJNXd

kgvnsqm72krvjSGyRvLQtggPSZa3y3odvlJP1Ugq09ebKBCei8v3wke82S9C

mDXZFV2emli9SnjAmXDNGel01kTqHbANPYBrkPhtwhESS50w1LhKn0li+XY5

nUPxlJcH0fR5L+9K5u40/p7QhSYSv0kiZimepDbhjaZQCqdy6b1cQ9NKJjkz

sUS1qQju9GDHOWHYj8Mpv3cHhdf7xEz0kPFdBqFjuUxc6hImEQbtT6XvYg09

Dy0Zuf02A+/70vTKhaxbXoRPCuMvu5N8x5vqDz3o+1xFx0tXMjciSLwTTf6F

eA60JvNWTA2KmPPb2lZ+vT3DdcJpVTK/jeINjaJv3R/l9M/b5+t/FoaQnlf4

E/uKPyfIlE6BzJdfkyORPRE0nCugcSZfulMeTevNGDrTUEkuWcQeJaJERYUw

Z4H0+prhJOnI6i1/iynyJlD6X01f+tZn6wfHmaPLdMFPd0r6hzBw5Cks/Pdy

2vdN0XwXYZmHxa/NKTUF8PD1KoeCJ1HseYT70D3SPgsgesSXhFo3ooRpc/sS

6VsvpXI+Dn1/kPSfGBzb3VDOq5j4spVnzxc5kTBF+Mwy9d/EkDiQypUr3dy9

vsrk2gaPP/ySNOnJvTdUlNw2Z/SzfZRc3UP9C2sCnvmQMveucKYDKZdDaP/M

nYQbwnDPszjR1czbNRcxC+xEkXBd9BX+LftC/OEz0U58uPzvtrf6TZ8QXatE

80rZquWy6t+hLP6RHUkfEdTdxeIH02ReKORovSeere5kDUcT3xomMxxJ5Vwx

nRdbSOvLwjHFipBUc1Tx5sSU+Agz+4nfGqgflIw3qgmItqS4Oh51rDPpOSHU

96STIt3YN+YIAYknCYk/iVfMQfykkyRmuBESY82XryfoW5jhkO4+hbcmpDfZ

om52Q1vtiHe2HRWL6VRIvpWMhWLsCqJurpqI6SKUA860f9HEd7+Y4+n7F7nx

TQOV9zVEl6m5dKODyev9DD96wNrGMwpNKcQvONH0SEnq+AEMTwPoeu8t8cdD

kn3Sw586kr4h8zVtTcSSD7YLHaLRBm/mr6EMHUcRfxdFtvhDmfBDqWhbKB5R

9MO2F5f/dlvb2j9sZ1/JL+Syn8SvpU/Hf8L+gi7KVkp5+ItH5N5owXUoGP82

yeW6QKJq1YRVSA70xG2xQXxrEulNWlzCT2In85y1+f6I7mQiZa5bpQM0diRv

fbZcUIw58fFutLRnUlQdS2y17Ldaf9yLnHGPOS5McoIQ7Vk8Q47w4Y/n+OqL

FR5+Pc3wrw14z9rhm28t9/EgRH5val8ocU2ewhJ66cz1jNztwH84A7MJNU4z

gVz/ZozXf1xi6RflJFYLx5miGLpcTN75fBruLzF17hIxw+KpA47kzx6RPnGI

lg+PMvLBHrJuWqG7dZbIVSt8F6zwm3PgxFiC8NVNzKpFU10zis21FX9b9H0u

HvyxaCsaF3237cMm0bZYdKwQP676jegqeheIhxT9EmWOXJ/wGfvyltB2iS+M

p5O/Igy/mo5uJglNZxDBNUE45PkTUhlAZmMwnkY76bCFrL+8IMwah0on+RZz

AI/kMxT060mri0YvnNE+nINWuDcywgZ9kjeFTWGk3c4l+qUzoe1HsNcexD/J

El3OUW79fIG7Pwlz/a6YM7dssayxQFPiSt1qBpE1vkTXqUjp1DB/9wK/+PnX

1C8U4djlKvPrJp0pAKt58a/1XMKlVxf1xNMxZ6RzOp/Mc9LnhgrJ6Uol63Y8

HqWDGAbT6fvRTnLzbSofv0X9+++SPfk2SQsWRFyxxHPBgX0V1SgrrmFWcB2z

iCEUYcMoUsXjc19sz3DuZ9sa5n0lnrHJEzLHJtG47IdtzbPFQ3Kk62XKqfY5

e7PuEDlbirpRha4vSBgyiISZHPqed5A7o5O58yK4OZToKh98smxQZThxfkM4

Y76IuWczaISTfVJP45lwGG2hqzBGCOmVoRR3JJGYG0BYjBvhWc7U3soS/vXB

qyoNda/0t4YwKppzeXclmGN3ozn0UMsbi7bYNNiS2xlNwUwEKd2arc+uaZor

4Nb713ny/h0Gb7Wi7VWzu9ga5/lMTO8vYDdXRLYwRu5wLn2rlUxcHhAuiMG1

UEXgWBD1N2MpnJmgUp7n2ndH6Hj6Fj0fHSJ39Q3se4NJfGhNzupeUs/t5UBf

u2j1GLO08yh9e1Bo5kSr+6Lly2190z4QbV9vd41CmeMS6XQlomu56Jv/1bbu

mzOeJ/sh4Q5m4eu0XLtH3fk6AsR/rSRXbGt9pGvmcf/Hp6SN5uBS7E60dApt