Resource retrieval

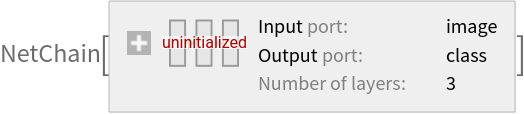

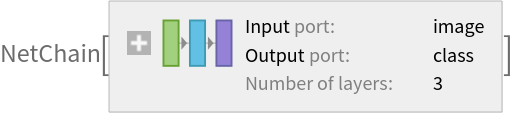

Get the pre-trained net:
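A minimal sketch of this step, using the model name that appears elsewhere on this page; the variable name `net` is chosen here for illustration:

```wolfram
(* Download (on first use) and load the pre-trained model from the repository *)
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"]
```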

Basic usage

Classify an image:
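The original example image is not reproduced in this text, so the sketch below substitutes a built-in test image as a stand-in (an assumption, not the page's own input):

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];

(* Stand-in input: any Image object can be used here *)
img = ExampleData[{"TestImage", "Mandrill"}];

(* Applying the net to an image returns the predicted class *)
pred = net[img]
```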

The prediction is an Entity object, which can be queried:
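For instance, assuming the prediction is stored in a variable named `pred` (a name chosen here) and that the entity carries a "Definition" property, a query might look like this:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];
pred = net[ExampleData[{"TestImage", "Mandrill"}]]; (* stand-in image *)

(* Ask the predicted Entity for one of its properties *)
pred["Definition"]
```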

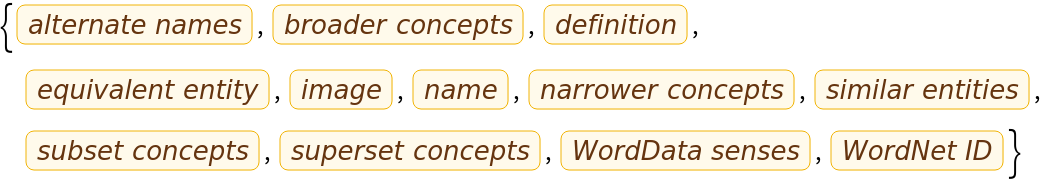

Get a list of available properties of the predicted Entity:
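A sketch under the same assumptions as above (stand-in image, hypothetical variable name `pred`):

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];
pred = net[ExampleData[{"TestImage", "Mandrill"}]]; (* stand-in image *)

(* List the properties that can be queried on this Entity *)
pred["Properties"]
```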

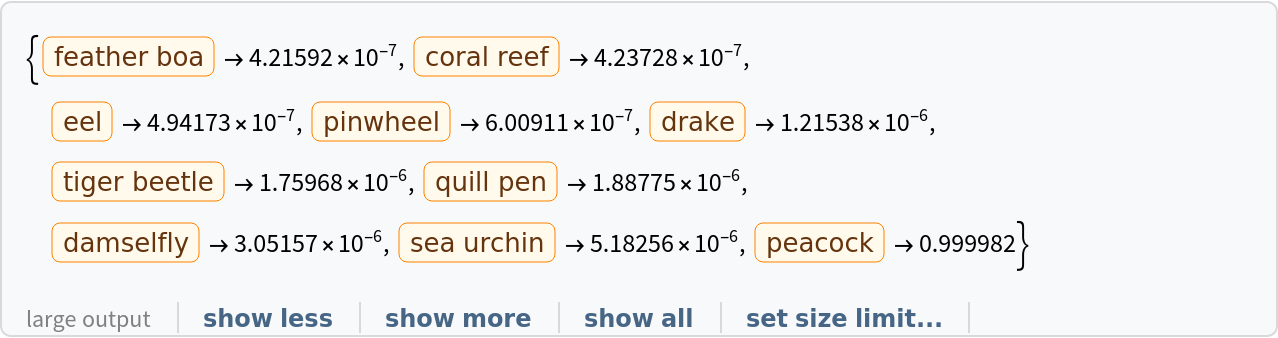

Obtain the probabilities of the ten most likely entities predicted by the net:
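One way to request this is through the "TopProbabilities" property of the net's class decoder; the input image below is again a stand-in:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];
img = ExampleData[{"TestImage", "Mandrill"}]; (* stand-in image *)

(* Returns a list of class -> probability rules for the ten most likely classes *)
net[img, {"TopProbabilities", 10}]
```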

An object outside the list of the ImageNet classes will be misidentified:

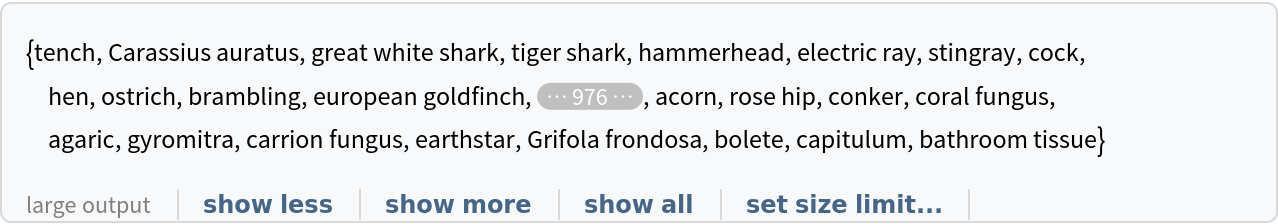

Obtain the list of names of all available classes:
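The input for this step survives in the page's image alt text; it is repeated here as runnable code, with the net stored in a variable for readability:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];

(* The output decoder holds the class labels as entities; map them to their names *)
EntityValue[NetExtract[net, "Output"][["Labels"]], "Name"]
```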

Feature extraction

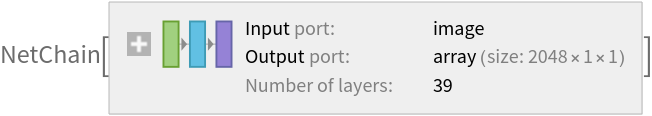

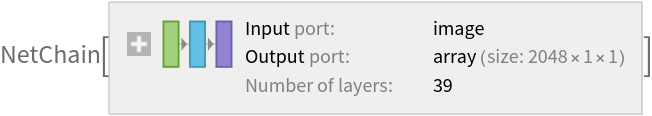

Remove the last three layers of the trained net so that the net produces a vector representation of an image:
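A minimal sketch, assuming the net is a chain whose layers can be addressed by position; the name `extractor` is chosen here:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];

(* Keep everything from the first layer up to the fourth-from-last,
   i.e. drop the final three layers *)
extractor = Take[net, {1, -4}]
```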

Get a set of images:
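The page's own image set is not included in this text. As a stand-in, a few built-in test images can be collected; this assumes `ExampleData["TestImage"]` returns specifications that `ExampleData` can load directly:

```wolfram
(* Stand-in image set: the first eight built-in test images *)
imgs = ExampleData /@ Take[ExampleData["TestImage"], 8]
```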

Visualize the features of a set of images:
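A sketch that passes the truncated net to `FeatureSpacePlot` as the feature extractor; the image set is a stand-in drawn from the built-in test images:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];
extractor = Take[net, {1, -4}];  (* net without its last three layers *)

(* Stand-in image set, not the one used on the original page *)
imgs = ExampleData /@ Take[ExampleData["TestImage"], 8];

(* Place the images in a 2D plot according to their extracted features *)
FeatureSpacePlot[imgs, FeatureExtractor -> extractor]
```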

Visualize convolutional weights

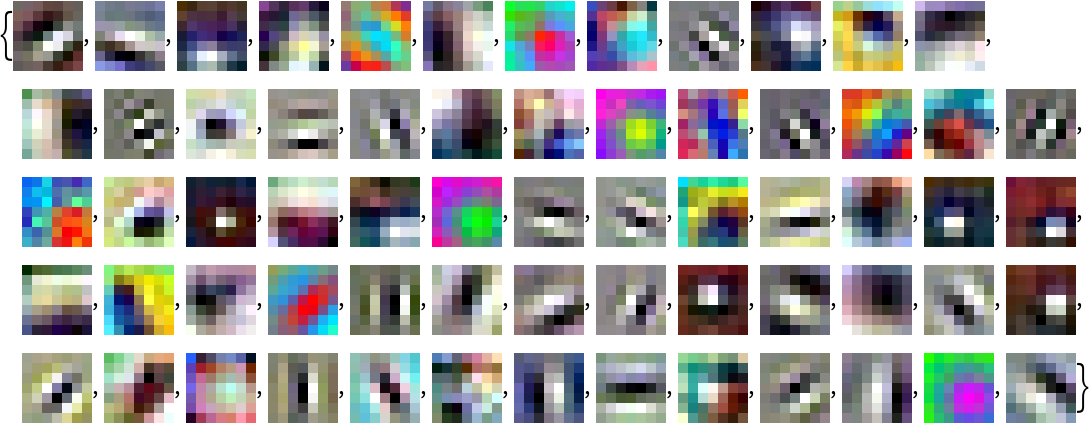

Extract the weights of the first convolutional layer in the trained net:
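In the sketch below the layer name "conv1" is an assumption about how the first convolutional layer is named in this net; inspect the net's layer list and adjust the name if it differs:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];

(* "conv1" is an assumed layer name; check the net's layers to confirm it *)
weights = NetExtract[net, {"conv1", "Weights"}];

(* Dimensions of the weight array: kernels, channels, height, width *)
Dimensions[weights]
```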

Visualize the weights as a list of 64 images of size 7x7:
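A possible rendering, again assuming the first convolutional layer is named "conv1". Each kernel is a channels-first array, so `Interleaving -> False` is used when converting it to an image:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];
weights = NetExtract[net, {"conv1", "Weights"}]; (* assumed layer name *)

(* Turn each kernel into a small color image and rescale it for visibility *)
ImageAdjust[Image[#, Interleaving -> False]] & /@ Normal[weights]
```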

Transfer learning

Use the pre-trained model to build a classifier for telling apart images of dogs and cats. Create a test set and a training set:

Remove the linear layer from the pre-trained net:
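A minimal sketch, assuming the last two layers of the net are the linear layer followed by the softmax layer (consistent with the next step, which appends a fresh linear and softmax layer). The name `tempNet` matches the one used in the page's next input:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];

(* Drop the final two layers (assumed: linear, softmax) *)
tempNet = Take[net, {1, -3}]
```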

Create a new net composed of the pre-trained net followed by a linear layer and a softmax layer:
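The input for this step survives in the page's image alt text and is repeated here as code. The definition of `tempNet` is a sketch that assumes the last two layers of the original net are the linear and softmax layers:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];
tempNet = Take[net, {1, -3}]; (* assumed truncation point *)

(* Pre-trained body followed by a new, untrained two-class head *)
newNet = NetChain[
  <|"pretrainedNet" -> tempNet,
    "linearNew" -> LinearLayer[],
    "softmax" -> SoftmaxLayer[]|>,
  "Output" -> NetDecoder[{"Class", {"cat", "dog"}}]]
```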

Train on the dataset, freezing all the weights except for those in the "linearNew" layer (use TargetDevice -> "GPU" for training on a GPU):
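A sketch of the training call. It assumes `newNet` is the net built in the previous step and that `trainSet` (a hypothetical name) is a list of rules of the form image -> "cat" or image -> "dog"; neither dataset is included in this text:

```wolfram
(* A learning rate multiplier of 0 freezes a layer; only "linearNew" is trained *)
trainedNet = NetTrain[newNet, trainSet,
  LearningRateMultipliers -> {"linearNew" -> 1, _ -> 0}]
```

Adding `TargetDevice -> "GPU"` to the options runs the same training on a GPU.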

Perfect accuracy is obtained on the test set:
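The measurement might be made as follows, assuming the trained net and the test set are stored in `trainedNet` and `testSet` (both names are assumptions, since the original inputs are not part of this text):

```wolfram
(* Fraction of test examples classified correctly *)
ClassifierMeasurements[trainedNet, testSet, "Accuracy"]
```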

Net information

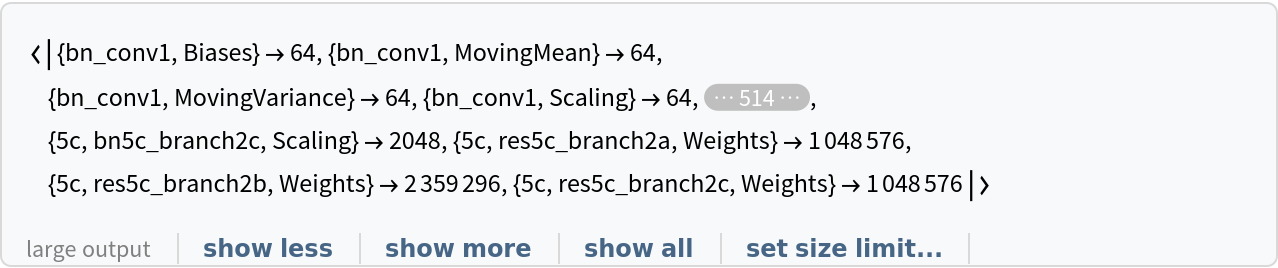

Inspect the number of parameters of all arrays in the net:
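A sketch using `NetInformation` (in recent Wolfram Language versions `Information` accepts the same property names):

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];

(* Association from each array in the net to its number of elements *)
NetInformation[net, "ArraysElementCounts"]
```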

Obtain the total number of parameters:
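The total can be queried with a single property:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];

(* Sum of the element counts over all arrays in the net *)
NetInformation[net, "ArraysTotalElementCount"]
```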

Obtain the layer type counts:
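For example:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];

(* Association from each layer type to how many times it occurs *)
NetInformation[net, "LayerTypeCounts"]
```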

Display the summary graphic:
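For example:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];

(* Graphical overview of the net's architecture *)
NetInformation[net, "SummaryGraphic"]
```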

Export to MXNet

Export the net into a format that can be opened in MXNet:
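A minimal sketch; the output location in the temporary directory and the variable name `jsonPath` are choices made here:

```wolfram
net = NetModel["ResNet-101 Trained on ImageNet Competition Data"];

(* Writes the network definition as JSON in MXNet format *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"]
```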

Export also creates a net.params file containing parameters:
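Assuming the net was exported to `net.json` in the temporary directory (a location chosen for illustration), the parameter file sits next to it:

```wolfram
jsonPath = FileNameJoin[{$TemporaryDirectory, "net.json"}];

(* The parameter file is written to the same directory as the JSON file *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}]
```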

Get the size of the parameter file:
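A sketch, assuming the export step has already written `net.params` to the temporary directory:

```wolfram
paramPath = FileNameJoin[{$TemporaryDirectory, "net.params"}];

(* Size of the exported parameter file in bytes *)
FileByteCount[paramPath]
```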

The size is similar to the byte count of the resource object:
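For comparison, the byte count of the resource can be queried as below; this assumes the resource object exposes a "ByteCount" property:

```wolfram
(* Byte count reported by the repository resource for this model *)
ResourceObject["ResNet-101 Trained on ImageNet Competition Data"]["ByteCount"]
```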

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/495cfdc6-ef4a-40d6-804d-7a57d52b9a92"]](https://www.wolframcloud.com/obj/resourcesystem/images/853/8536f93a-af9a-4f71-a516-87eee51cc193/217109dc137720f4.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/f20346ee-6ae1-4263-a506-ffcfbd66c701"]](https://www.wolframcloud.com/obj/resourcesystem/images/853/8536f93a-af9a-4f71-a516-87eee51cc193/14635bd0ac6b60eb.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/d5f7ecc6-b6de-4c1e-b590-e7993c3d083a"]](https://www.wolframcloud.com/obj/resourcesystem/images/853/8536f93a-af9a-4f71-a516-87eee51cc193/36cd495cf65ca213.png)

![EntityValue[NetExtract[NetModel["ResNet-101 Trained on ImageNet Competition Data"], "Output"][["Labels"]], "Name"]](https://www.wolframcloud.com/obj/resourcesystem/images/853/8536f93a-af9a-4f71-a516-87eee51cc193/7591cad16aeaaa44.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/6129318d-101e-4314-9f2c-801282df67fe"]](https://www.wolframcloud.com/obj/resourcesystem/images/853/8536f93a-af9a-4f71-a516-87eee51cc193/6a8c01f7cca68b74.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/19d423e3-e04a-4d53-b9ee-bcced4b5561d"]](https://www.wolframcloud.com/obj/resourcesystem/images/853/8536f93a-af9a-4f71-a516-87eee51cc193/0a48662240e9df8f.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/b16f50d2-03e4-4f43-a44a-345d857d6c06"]](https://www.wolframcloud.com/obj/resourcesystem/images/853/8536f93a-af9a-4f71-a516-87eee51cc193/44c52ff882580171.png)

![newNet = NetChain[<|"pretrainedNet" -> tempNet, "linearNew" -> LinearLayer[], "softmax" -> SoftmaxLayer[]|>, "Output" -> NetDecoder[{"Class", {"cat", "dog"}}]]](https://www.wolframcloud.com/obj/resourcesystem/images/853/8536f93a-af9a-4f71-a516-87eee51cc193/3c64501dd2d5eaff.png)