Pix2pix Photo-to-Street-Map Translation

Released in 2016, this model applies a general-purpose method for image-to-image translation based on conditional adversarial networks. Because adversarial training learns the loss function automatically, the same approach generalizes across a wide range of image translation tasks. The architecture aggregates features at multiple scales efficiently through skip connections that concatenate feature maps. This particular model was trained to generate a street map from a satellite photo.

Number of layers: 56

Parameter count: 54,419,459

Trained size: 218 MB

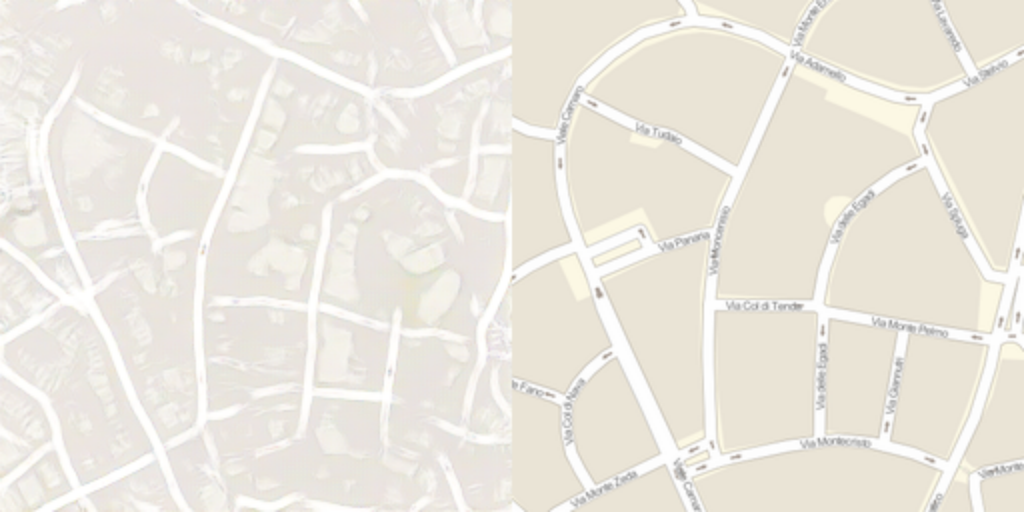

Examples

Resource retrieval

Get the pre-trained net:
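
A minimal sketch of this step, assuming the model is registered in the repository under the same name as this page's title. The variable name `net` is chosen here for illustration only:

```wolfram
(* Download (on first use) and load the pre-trained net from the repository. *)
net = NetModel["Pix2pix Photo-to-Street-Map Translation"]
```

The first evaluation fetches the trained weights, so it needs a network connection; later evaluations should use the locally cached copy.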

Basic usage

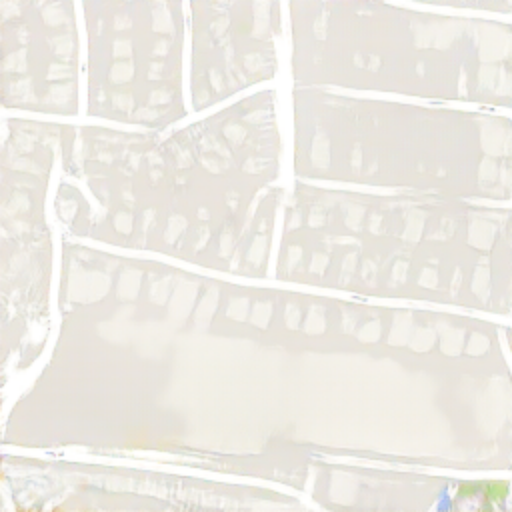

Obtain a satellite photo:

Use the net to draw the street map:
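
The two steps above can be sketched as follows. The location, `GeoRange` and `{256, 256}` size mirror the street-map input shown on this page; the `"Satellite"` style and the variable names are assumptions made for illustration:

```wolfram
net = NetModel["Pix2pix Photo-to-Street-Map Translation"];

(* Satellite photo of Las Vegas, resized to 256x256 pixels. *)
img = ImageResize[
   GeoImage[Entity["City", {"LasVegas", "Nevada", "UnitedStates"}],
    "Satellite", GeoRange -> 200], {256, 256}];

(* Apply the net to the photo to generate the street map. *)
streetMap = net[img]
```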

Evaluate accuracy

Overlay the prediction on the photo:

Obtain the actual street map:

Compare the generated street map with the actual street map:
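
One possible way to carry out the three steps of this section is sketched below. The `actualMap` definition is taken from the input shown on this page; the 0.5 blending factor, the use of `ImageCompose` and `GraphicsRow`, and the `"Satellite"` style are assumptions rather than the page's original inputs:

```wolfram
net = NetModel["Pix2pix Photo-to-Street-Map Translation"];
city = Entity["City", {"LasVegas", "Nevada", "UnitedStates"}];

(* Photo and prediction, as in the basic usage example. *)
img = ImageResize[GeoImage[city, "Satellite", GeoRange -> 200], {256, 256}];
streetMap = net[img];

(* Blend the prediction over the photo at 50% opacity. *)
overlay = ImageCompose[img, {streetMap, 0.5}];

(* Actual street map of the same region. *)
actualMap = ImageResize[GeoImage[city, "StreetMap", GeoRange -> 200], {256, 256}];

(* Show the generated and the actual street map side by side. *)
GraphicsRow[{streetMap, actualMap}]
```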

Issues

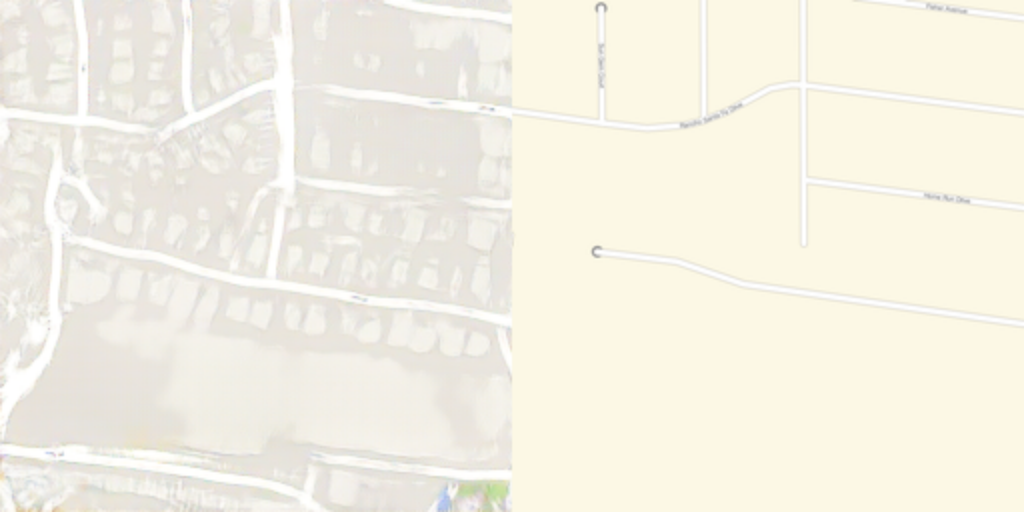

More complex patterns are harder to render. Obtain a new photo and street map pair:

Compare the prediction with the actual street map:
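
A sketch of this comparison, reusing the photo and street map pair input shown on this page. The side-by-side layout with `GraphicsRow` and the name `prediction` are assumptions made for illustration:

```wolfram
net = NetModel["Pix2pix Photo-to-Street-Map Translation"];

(* Satellite photo and actual street map of the same location. *)
{img, actualMap} =
  ImageResize[
     GeoImage[GeoPosition[{41.940360, 12.533762}], #, GeoRange -> 200],
     {256, 256}] & /@ {"Satellite", "StreetMap"};

(* Generate the street map and place it next to the actual one. *)
prediction = net[img];
GraphicsRow[{prediction, actualMap}]
```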

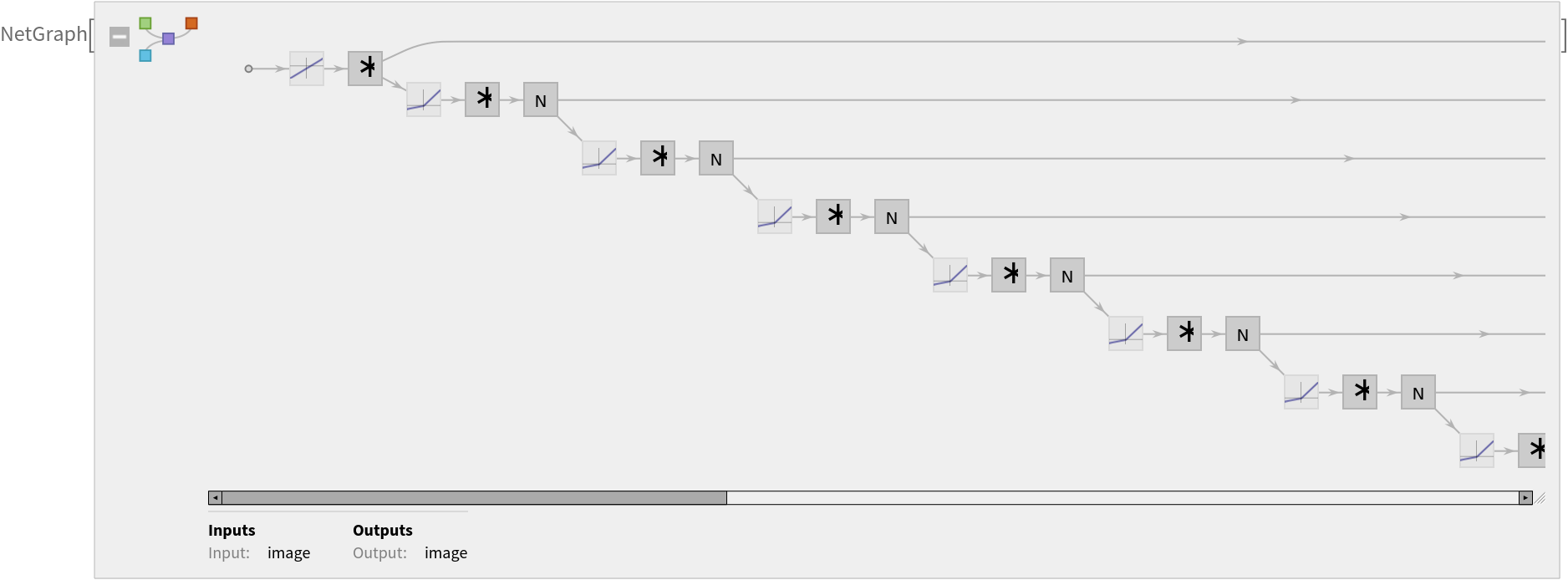

Net information

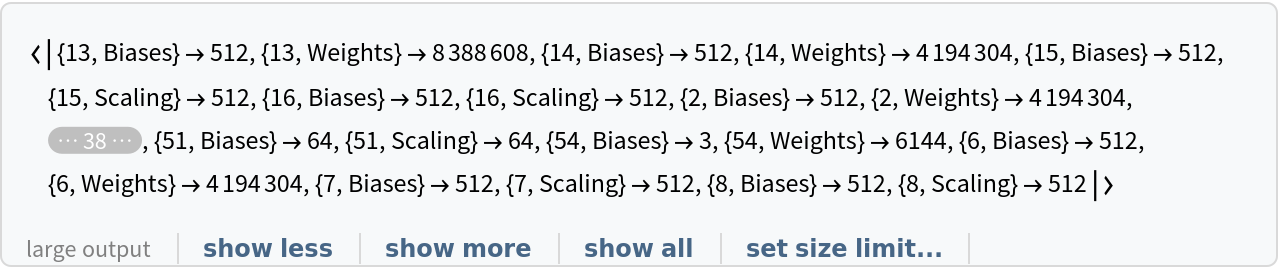

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

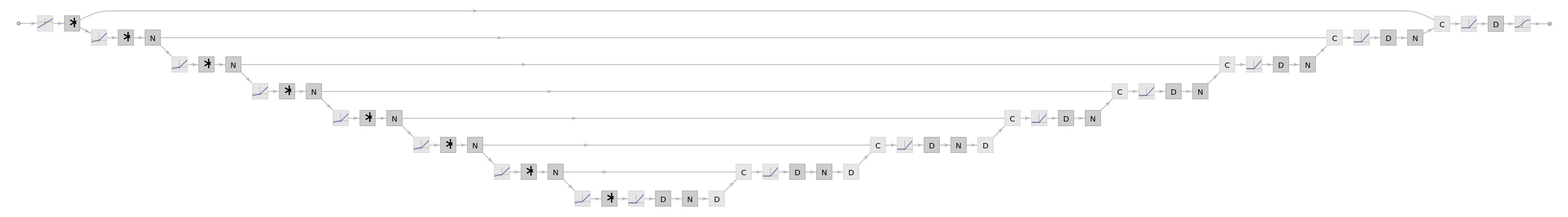

Display the summary graphic:
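
The four queries in this section can be sketched with `NetInformation`, which is the function available in the minimum version listed under Requirements; in recent versions `Information` accepts the same property names. The variable names are illustrative:

```wolfram
net = NetModel["Pix2pix Photo-to-Street-Map Translation"];

(* Number of parameters in each array of the net. *)
arrayCounts = NetInformation[net, "ArraysElementCounts"];

(* Total number of parameters; expected to agree with the
   parameter count listed at the top of this page. *)
totalCount = NetInformation[net, "ArraysTotalElementCount"];

(* How many layers of each type the net contains. *)
layerCounts = NetInformation[net, "LayerTypeCounts"];

(* Graphical summary of the architecture. *)
NetInformation[net, "SummaryGraphic"]
```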

Export to MXNet

Export the net into a format that can be opened in MXNet:

Export also creates a net.params file containing parameters:

Get the size of the parameter file:

The size is similar to the byte count of the resource object:

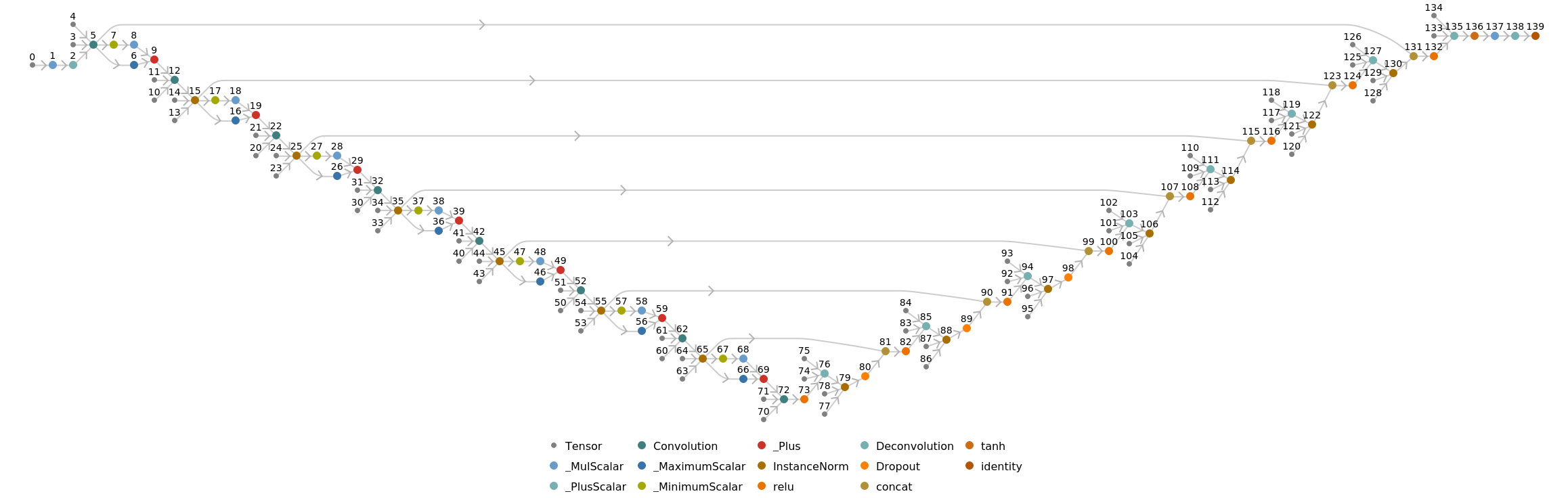

Represent the MXNet net as a graph:
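
The export steps of this section can be sketched as below, assuming a Wolfram Language version in which the `"MXNet"` format is available. The temporary-directory location and the variable names are choices made for illustration:

```wolfram
net = NetModel["Pix2pix Photo-to-Street-Map Translation"];

(* Export the architecture as JSON; the parameters are written
   to net.params in the same directory. *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"];
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}];

(* Size of the parameter file in bytes. *)
paramBytes = FileByteCount[paramPath];

(* Byte count recorded for the resource object, for comparison. *)
resourceBytes =
  ResourceObject["Pix2pix Photo-to-Street-Map Translation"]["ByteCount"];

(* Plot the exported MXNet net as a graph of nodes. *)
Import[jsonPath, {"MXNet", "NodeGraphPlot"}]
```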

Requirements

Wolfram Language 11.2 (September 2017) or above

Reference

![actualMap = ImageResize[GeoImage[Entity["City", {"LasVegas", "Nevada", "UnitedStates"}], "StreetMap", GeoRange -> 200], {256, 256}]](https://www.wolframcloud.com/obj/resourcesystem/images/1d9/1d9f0360-c295-488d-bc0b-c62556fffa33/0c7860f6d4307aaa.png)

![{img, actualMap} = ImageResize[GeoImage[GeoPosition[{41.940360, 12.533762}], #, GeoRange -> 200], {256, 256}] & /@ {"Satellite", "StreetMap"}](https://www.wolframcloud.com/obj/resourcesystem/images/1d9/1d9f0360-c295-488d-bc0b-c62556fffa33/2e11e489b770f9e6.png)