Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data

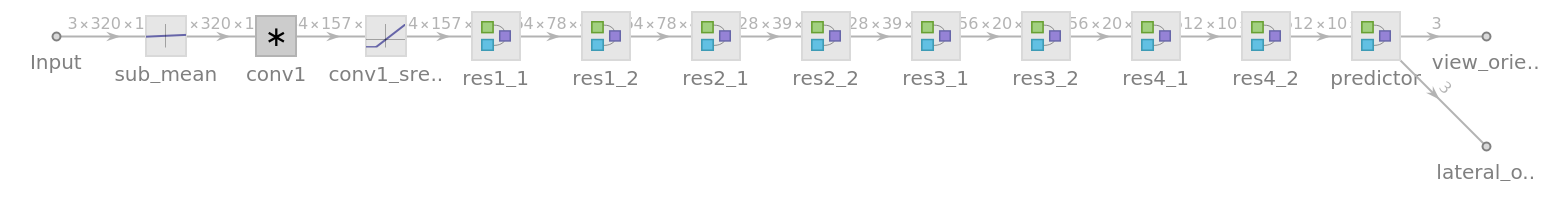

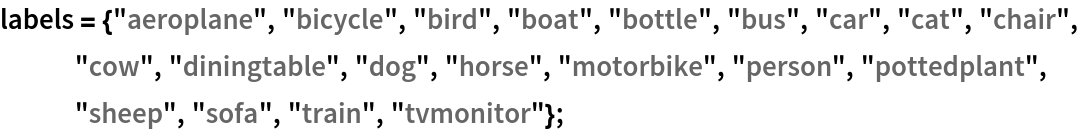

Released in 2017, these two models constitute a system for autonomous path navigation in unstructured outdoor environments such as forests. Specifically, the system is trained for steering in a forest environment. It consists of two main submodules: a navigation net (TrailNet DNN) and an obstacle detection net. The navigation net is a two-headed classifier that, given an input image, estimates the rotation direction and the lateral translation, with three categories each. These output probabilities are later combined to predict a final rotation angle. The navigation net is based on ResNet-18, with batch normalization layers removed and ReLUs replaced with shifted ReLUs.

The obstacle detection net is an object detection model based on YOLO V1 with a few modifications, such as the removal of batch normalization layers and the replacement of leaky ReLUs with ReLUs. If a detected object occupies a large proportion of the image frame, the vehicle is forced to stop.
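The stopping rule described above can be sketched as a simple area test. This is a minimal illustration under stated assumptions, not the released system's logic: the helper names `occupiedFraction` and `shouldStopQ`, the representation of detections as `Rectangle` objects in pixel coordinates, and the 1/2 area threshold are all hypothetical.

```wolfram
(* Hypothetical helper: fraction of a w x h image covered by one box *)
occupiedFraction[Rectangle[pmin_, pmax_], {w_, h_}] :=
 (Times @@ Abs[pmax - pmin])/(w*h)

(* Hypothetical rule: stop if any box covers more than maxFraction of the frame.
   The default of 1/2 is an assumption, not a value from the source. *)
shouldStopQ[boxes_List, dims_, maxFraction_ : 1/2] :=
 AnyTrue[boxes, occupiedFraction[#, dims] > maxFraction &]

(* Mock detections on a 640 x 480 frame *)
smallBox = Rectangle[{0, 0}, {400, 300}];
largeBox = Rectangle[{0, 0}, {600, 400}];

shouldStopQ[{smallBox}, {640, 480}]
shouldStopQ[{smallBox, largeBox}, {640, 480}]
```

With these mock boxes, the first call stays below the threshold and the second exceeds it because of `largeBox`.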

Number of models: 2

Examples

Resource retrieval

Get the pre-trained net:
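A minimal sketch of the retrieval step, assuming the resource is addressed by the full title of this page. The symbol `net` is only an illustrative name. The evaluation function defined later on this page calls the model without parameters and reads navigation outputs, which suggests that the default net is the navigation model.

```wolfram
(* Download (on first use) and load the default pre-trained net *)
net = NetModel["Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data"]
```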

NetModel parameters

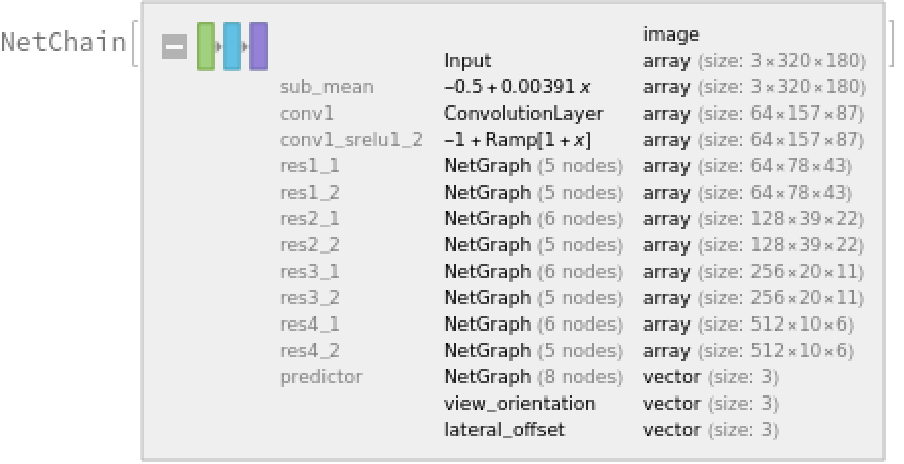

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:

Pick a non-default net by specifying the parameter:

Pick a non-default uninitialized net:

Evaluation functions

Evaluation function for the navigation model

Define an evaluation function to calculate the turning angle in radians:
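The way the two three-class outputs are combined can be tried without the net by using mock probabilities. The sketch below follows the formula in this page's `getTurnAngle` cell (weights of 10 for both heads, output names "view_orientation" and "lateral_offset"). The helper name `turnAngleFromProbabilities` and the mock values are hypothetical.

```wolfram
(* Hypothetical helper: combine the two 3-class probability vectors into one angle.
   For each head, the first class probability is subtracted from the third. *)
turnAngleFromProbabilities[out_Association, b1_ : 10, b2_ : 10] :=
 b1*(out["view_orientation"][[3]] - out["view_orientation"][[1]]) +
  b2*(out["lateral_offset"][[3]] - out["lateral_offset"][[1]])

(* Mock output, NOT produced by the real model *)
mockOut = <|
   "view_orientation" -> {1/10, 1/5, 7/10},
   "lateral_offset" -> {3/10, 2/5, 3/10}
   |>;

turnAngleFromProbabilities[mockOut]
(* 6 *)
```

In this mock case the lateral offset head is symmetric and contributes nothing, so the result comes entirely from the view orientation head.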

Evaluation function for object detection model

Write an evaluation function to scale the result to the input image size and suppress the least probable detections:
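The suppression step can be illustrated on mock data. This is a sketch under assumptions rather than the page's own evaluation function: `filterDetections`, its argument layout (one box, one confidence and one class probability vector per candidate) and the 0.2 threshold are hypothetical. Scaling to the input image size is left out because the net's coordinate convention is not stated in the text. The returned `{box, classIndex, score}` triples match the layout used by the `HighlightImage` cell on this page.

```wolfram
(* Hypothetical helper: keep only boxes whose best class score exceeds a threshold *)
filterDetections[boxes_List, confidences_List, classProbs_List, threshold_ : 0.2] :=
 Module[{scores, best, classes},
  scores = confidences*classProbs;               (* per-box, per-class scores *)
  best = Max /@ scores;                          (* best class score in each box *)
  classes = First[Ordering[#, -1]] & /@ scores;  (* index of that class *)
  Select[MapThread[List, {boxes, classes, best}], Last[#] > threshold &]
  ]

(* Mock data: 3 candidate boxes, 2 classes *)
mockBoxes = {Rectangle[{0, 0}, {1, 1}], Rectangle[{1, 1}, {2, 2}], Rectangle[{2, 2}, {3, 3}]};
mockConfidences = {0.9, 0.1, 0.5};
mockClassProbs = {{0.1, 0.9}, {0.5, 0.5}, {0.8, 0.2}};

filtered = filterDetections[mockBoxes, mockConfidences, mockClassProbs]
```

Here the second box is dropped because its best score (0.05) falls below the threshold, while the first and third boxes are kept with class indices 2 and 1.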

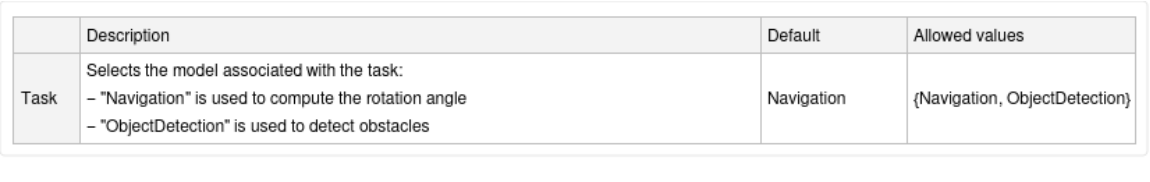

Label list for the object detection model

Define the label list for this model. Integers in the model's output correspond to elements in the label list:
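A sketch of such a list, assuming the detection net uses the 20 standard PASCAL VOC categories in their usual alphabetical order. The ordering is an assumption and should be checked against the published resource before the indices are trusted.

```wolfram
(* Assumed ordering: standard alphabetical PASCAL VOC categories *)
labels = {"aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car",
   "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike",
   "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor"};

(* An integer class index from the net selects one label *)
labels[[15]]
(* "person" *)
```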

Basic usage: navigation model

Calculate the turn angle in radians given a test image:

Basic usage: object detection model

Obtain the detected bounding boxes with their corresponding classes and confidences for a given image:

Inspect which classes are detected:

Visualize the detection:

Detection results

Define an image:

The network computes 98 bounding boxes and the probability that the objects in each box are of any given class:

Visualize all the boxes predicted by the net scaled by their "Confidence" measures:

Net information

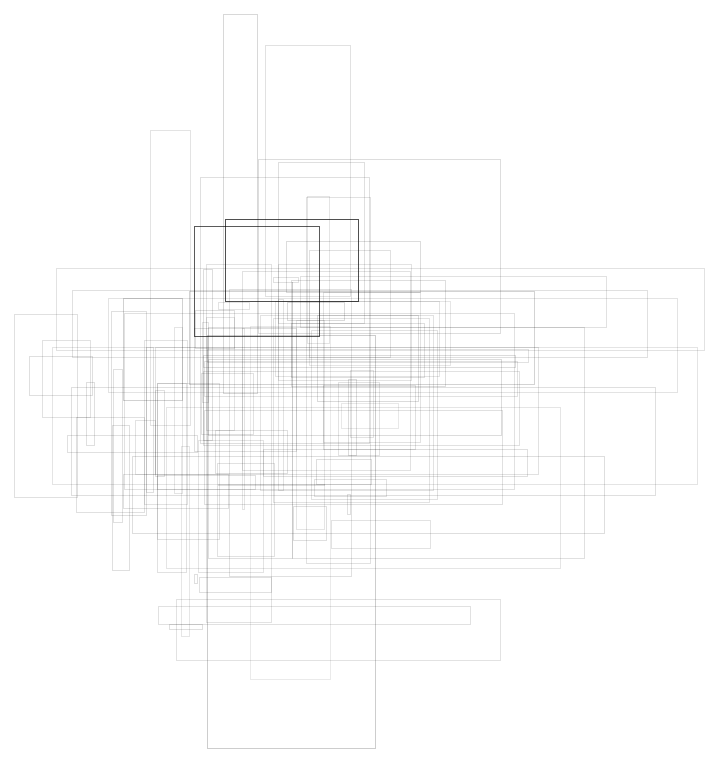

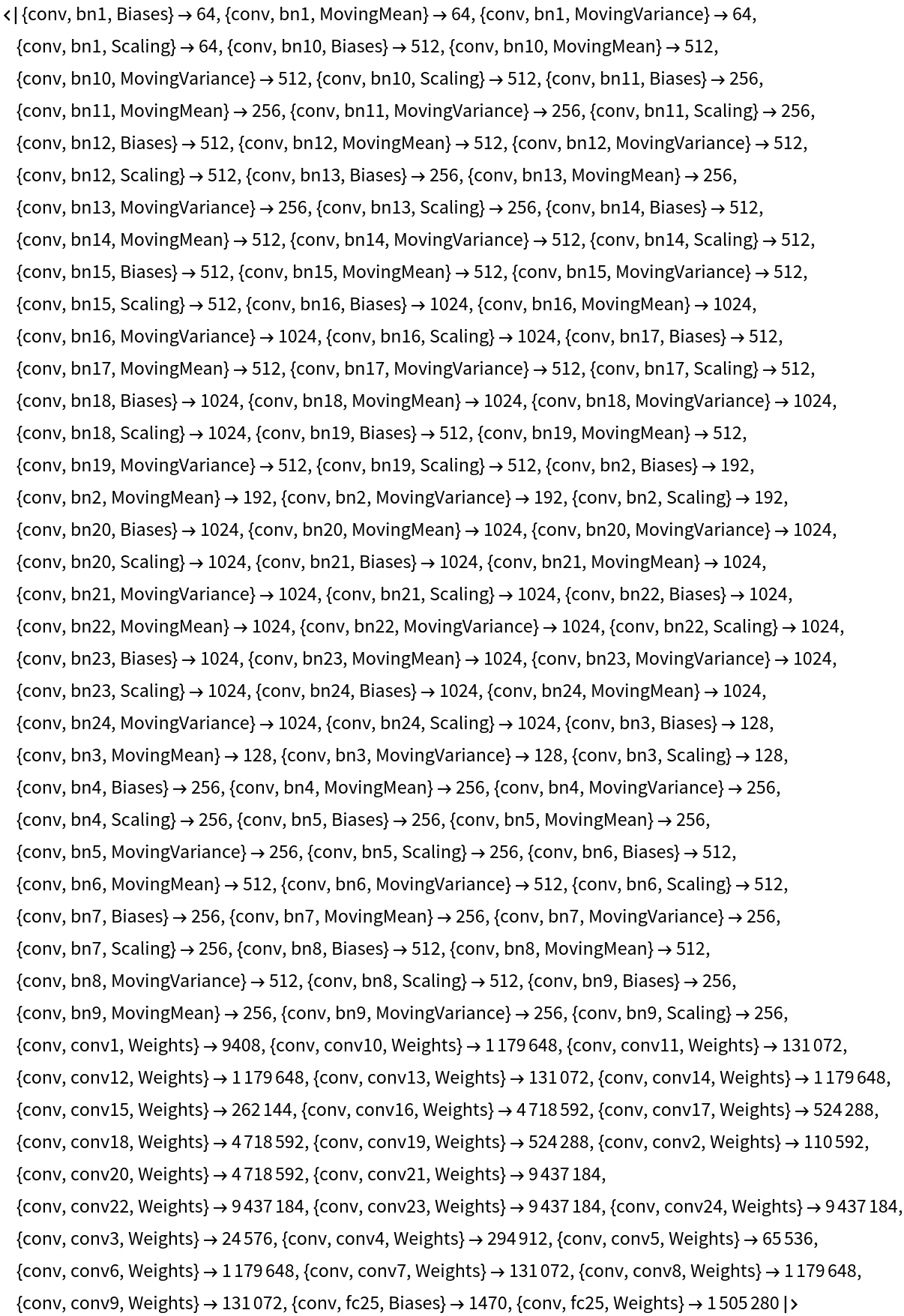

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

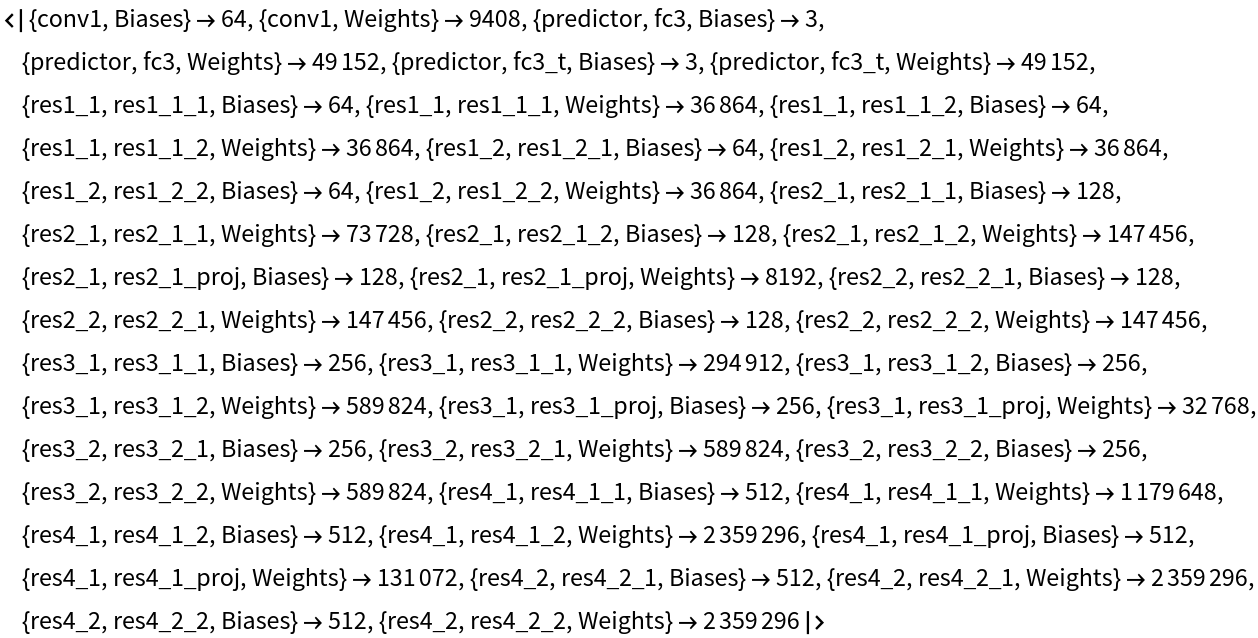

Display the summary graphic:

Export to MXNet

Export the nets into a format that can be opened in MXNet:

Export also creates a net.params file containing parameters:

Get the size of the parameter files:
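The three export steps above might look as follows for the default net. This is a sketch that assumes the "MXNet" export format is available in the installed version. The variable names and the temporary directory location are illustrative choices; the `net.params` file name follows the text above.

```wolfram
(* Load the default net; the same steps apply to the object detection net *)
net = NetModel["Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data"];

(* Write the architecture as JSON; the parameters are written alongside it *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"];

(* Locate the parameter file created next to the JSON file *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}];

(* Size of the parameter file in bytes *)
FileByteCount[paramPath]
```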

Requirements

Wolfram Language 12.1 (March 2020) or above

Resource History

Reference

![NetModel["Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "ParametersInformation"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/510715576fd31558.png)

![NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "ObjectDetection"}]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/225305f809aca5d8.png)

![NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "ObjectDetection"}, "UninitializedEvaluationNet"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/0463489dfd375493.png)

![getTurnAngle[img_, b1_, b2_] := With[{netOut = NetModel["Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data"][img]}, b1*(netOut["view_orientation"][[3]] - netOut["view_orientation"][[1]]) + b2*(netOut["lateral_offset"][[3]] - netOut["lateral_offset"][[1]])]; getTurnAngle[img_] := getTurnAngle[img, 10, 10]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/650a2c437a3700b6.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/d6421455-104c-49a6-b76e-ee492bed6245"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/2f3132c9e8b3f1c3.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/4056bc3f-7df8-4c6e-8418-a0a200903b88"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/3f104b6165775fc5.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/d566d376-4a03-4f1d-be00-285776ff2c9f"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/0908506f23d0fd33.png)

![HighlightImage[testImage, MapThread[{White, Inset[Style[labels[[#2]], Black, FontSize -> Scaled[1/25], Background -> GrayLevel[1, .6]], Last[#1], {Right, Top}], #1} &, Transpose@detection]]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/5339db6142dd2572.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/91115a35-2f65-417e-952e-ced73a0d5570"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/73f3849a36f1e382.png)

![res = NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "ObjectDetection"}][testImage];](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/60110d1104f19bd6.png)

![rectangles = res["Boxes"]; rectangles = ArrayReshape[ArrayReshape[rectangles, {98, 4}], {98, 2, 2}]; rectangles = Rectangle @@@ rectangles; objectness = ArrayFlatten[res["Confidence"], 1];](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/574bf449485ed23c.png)

![Graphics[MapThread[{EdgeForm[Opacity[#1 + 0.12]], #2} &, {objectness, rectangles}], BaseStyle -> {FaceForm[], EdgeForm[{Thin, Black}]}]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/6731e0242173ae1b.png)

![Information[NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "ObjectDetection"}], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/241a2445a1ac550c.png)

![Information[NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "Navigation"}], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/7a649c4b1313422c.png)

![Information[NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "ObjectDetection"}], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/51bbdaba64215a70.png)

![Information[NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "Navigation"}], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/41c31d8928930a93.png)

![Information[NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "ObjectDetection"}], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/3c46fbaedd49a63f.png)

![Information[NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "Navigation"}], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/1b7e2fc997818d9c.png)

![Information[NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "ObjectDetection"}], "SummaryGraphic"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/61011a8506a44d4e.png)

![Information[NetModel[{"Micro Aerial Vehicle Trail Navigation Nets Trained on IDSIA Swiss Alps and PASCAL VOC Data", "Task" -> "Navigation"}], "SummaryGraphic"]](https://www.wolframcloud.com/obj/resourcesystem/images/79c/79ce59cc-3222-4b0a-a8ed-28d68661d825/64665abde460a2e2.png)