Get the pre-trained net:

Basic usage

Get a noisy video:

Define a noise level for a video:

Denoise a video:
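The basic workflow can be collected into one sketch. The sample video name is borrowed from the architecture section of this page, and the noise level 0.1 and the `{1, 256, 256}` noise shape are assumptions chosen to match the 256×256 frames produced by the default encoder; adjust them if your setup differs.

```wolfram
(* Pre-trained net from the Wolfram Neural Net Repository *)
net = NetModel["FastDVDNet Trained on DAVIS Data"];

(* Assumption: the noisy sample video used in the architecture section *)
video = ResourceData["Sample Video: Surfing (Noisy)"];

(* Assumption: a constant noise map of level 0.1 matching 256x256 encoded frames *)
noise = ConstantArray[0.1, {1, 256, 256}];

(* Slide a 5-frame window over the video, advancing one frame at a time *)
denoised = VideoFrameMap[
   net[<|"Video" -> #, "Noise" -> noise|>] &, video, 5, 1];
```

Evaluating this downloads the net and the video on first use, so the first run can take noticeably longer than later ones.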

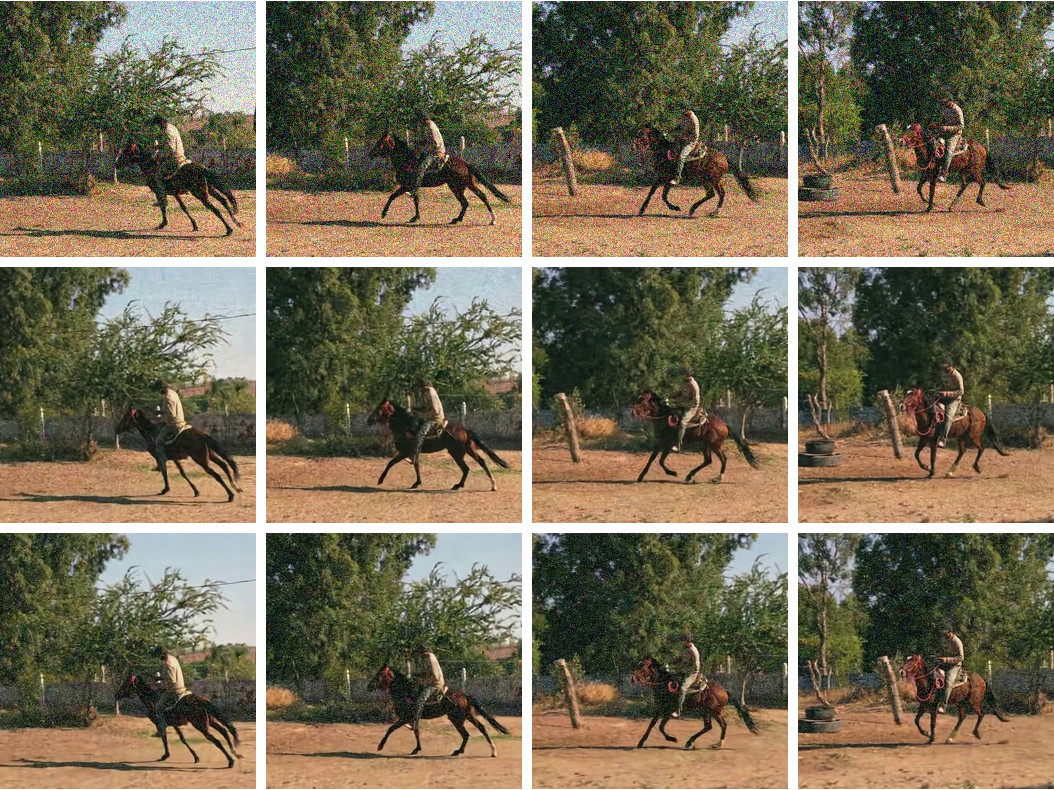

Visualize five frames of the noisy and denoised videos:

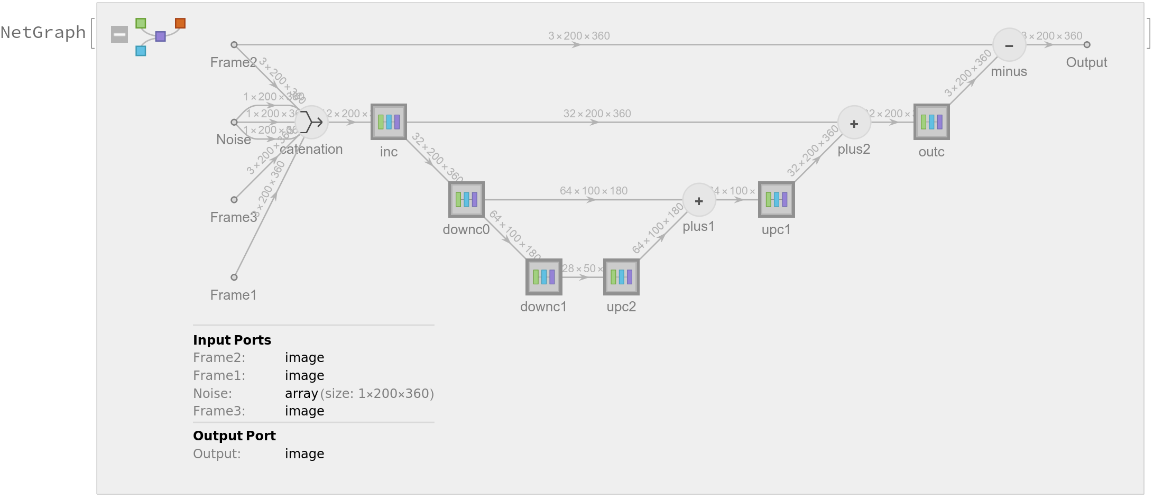

Adapt to any size

Automatic frame resizing can be avoided by replacing the NetEncoder. First get the net:

Get a noisy video:

Create a new NetEncoder with the desired dimensions (to get a resizable net, the spatial dimensions should be divisible by 4):
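As a small illustration of the divisibility constraint, the sketch below snaps a raster size to the nearest multiples of 4 with `Round`, which is the same adjustment the `videoDenoise` definition on this page applies. The size `{853, 481}` is made up for the example and is not taken from the sample video.

```wolfram
(* Hypothetical raster size {width, height}; not taken from the sample video *)
rasterSize = {853, 481};

(* Snap each dimension to the nearest multiple of 4 *)
resizableSize = Round[rasterSize, 4]
(* {852, 480} *)

(* Check that both dimensions are now divisible by 4 *)
AllTrue[resizableSize, Divisible[#, 4] &]
(* True *)
```

If the original size is already divisible by 4, `Round` leaves it unchanged and no resizing takes place.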

Attach the NetEncoder, define a noise level and run the net:

Visualize three frames of the noisy and denoised videos:

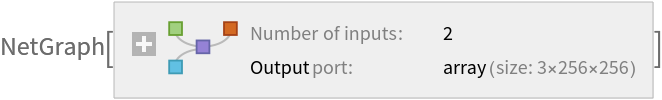

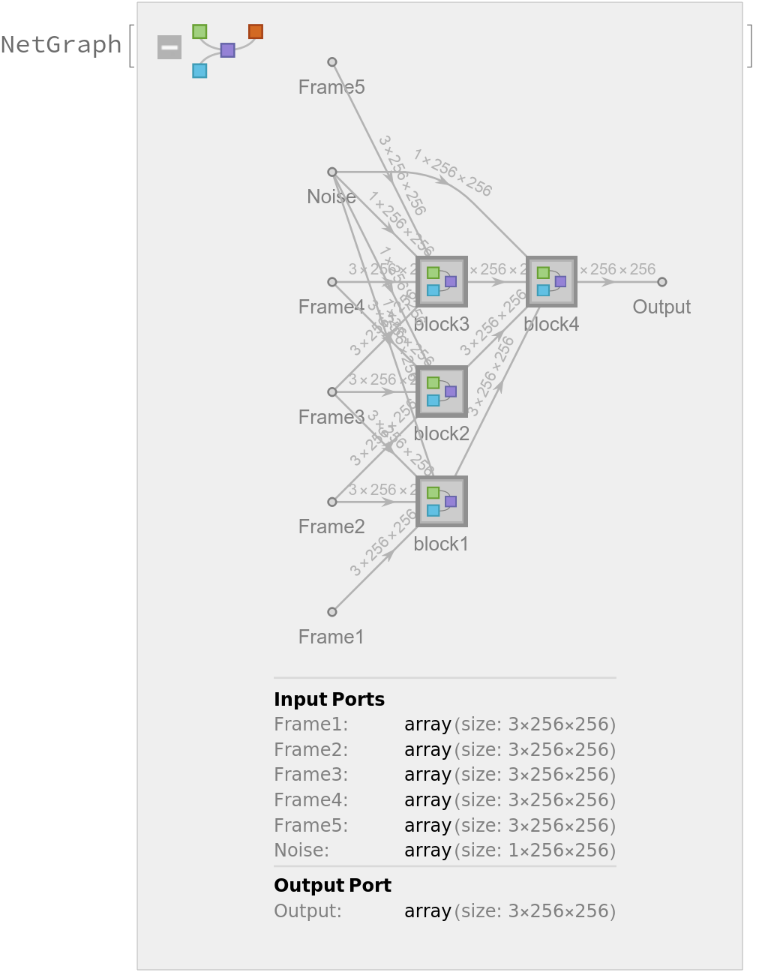

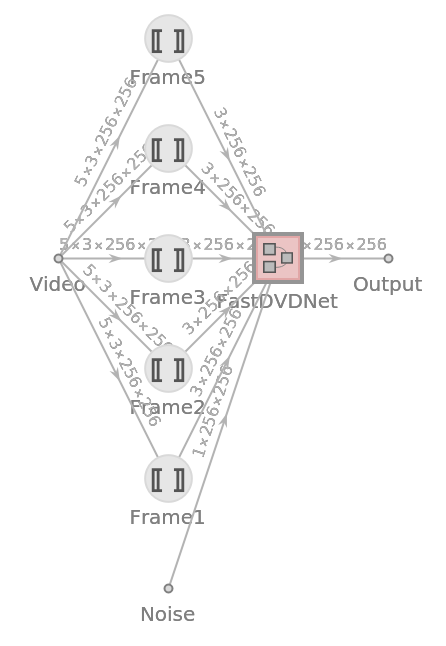

FastDVDNet architecture

The video encoder takes the first five frames of the input video and encodes them into an array of dimensions (5, 3, 256, 256):

Note that the first three blocks share their weights. (Frameᵢ, Frameᵢ₊₁, Frameᵢ₊₂, Noise) are given as inputs to blockᵢ to obtain an intermediate denoised output frame. Finally, the three intermediate frames and Noise are given as inputs to the last block to get the final denoised output frame:
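The data flow described above can be traced symbolically. In this sketch `sharedBlock`, `lastBlock`, `noiseMap` and the frame symbols `f1` to `f5` are undefined placeholders introduced only for illustration; they are not the actual net blocks.

```wolfram
(* Symbolic sketch: sharedBlock and lastBlock are placeholder heads, not real nets *)
frames = {f1, f2, f3, f4, f5};

(* Overlapping triplets of consecutive frames *)
triplets = Partition[frames, 3, 1]
(* {{f1, f2, f3}, {f2, f3, f4}, {f3, f4, f5}} *)

(* First stage: the same shared block is applied to each triplet plus the noise map *)
intermediate = sharedBlock[Sequence @@ #, noiseMap] & /@ triplets;

(* Second stage: the last block merges the three intermediate frames *)
result = lastBlock[Sequence @@ intermediate, noiseMap]
(* lastBlock[sharedBlock[f1, f2, f3, noiseMap], sharedBlock[f2, f3, f4, noiseMap],
     sharedBlock[f3, f4, f5, noiseMap], noiseMap] *)
```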

Extract the first and the last blocks of the net:

All the blocks share a U-Net type architecture. Explore the input block:

Get a noisy video and define a noise level for a video:

Simply mapping the net over a list of frames can be inefficient because some blocks process the same frames multiple times. To avoid this redundant computation, split the inference into two steps:
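To make the redundancy concrete, the following counting sketch compares how often the shared first-stage block runs under each strategy. The clip length of 10 frames is hypothetical, and the count assumes that only full windows are processed, which may differ from how boundary frames are actually handled.

```wolfram
(* Counting sketch for a hypothetical clip of n frames, full windows only *)
n = 10;

(* Naive mapping: every 5-frame window runs the shared first-stage block 3 times *)
naiveCount = 3*Length[Partition[Range[n], 5, 1]]
(* 18 *)

(* Two-step inference: each 3-frame window runs the first-stage block once *)
twoStepCount = Length[Partition[Range[n], 3, 1]]
(* 8 *)
```

For long videos the ratio approaches 3, since each intermediate frame is computed once instead of three times.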

Get the final denoised video:

Visualize three frames of the noisy video together with the intermediate and final results:

Memory-efficient evaluation

Define a custom videoDenoise function for memory-efficient processing of video with Gaussian or Poisson noise:
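The noise parametrization used inside `videoDenoise` can be examined in isolation. The helper `sigmaFactor` below is a hypothetical standalone function that mirrors the `Switch` expression in the definition on this page; the value 0.2 and the sample intensities are illustrative only.

```wolfram
(* Scalar noise factor, mirroring the Switch expression in videoDenoise *)
sigmaFactor[noiseType_String, a_] := Switch[noiseType,
   "GaussianNoise", a,
   "PoissonNoise", Sqrt[a/(100*(1 - a))]
   ];

sigmaFactor["GaussianNoise", 0.2]
(* 0.2 *)

sigmaFactor["PoissonNoise", 0.2]
(* approximately 0.05 *)

(* For Poisson noise the per-pixel level also scales with the square root of the intensity *)
poissonLevels = Sqrt[{0.04, 0.25, 1.}]*sigmaFactor["PoissonNoise", 0.2]
(* approximately {0.01, 0.025, 0.05} *)
```

With the definition on this page, a call has the form `videoDenoise[video, {"GaussianNoise", a}]` or `videoDenoise[video, {"PoissonNoise", a}]` with 0 < a <= 1, optionally followed by a `TargetDevice` option.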

Get a noisy video:

Get a final denoised video assuming a Gaussian distribution on the input noise:

Get a final denoised video assuming a Poisson distribution on the input noise:

Visualize four frames of the noisy video and of the denoised results obtained assuming Gaussian and Poisson distributions for the input noise:

Net information

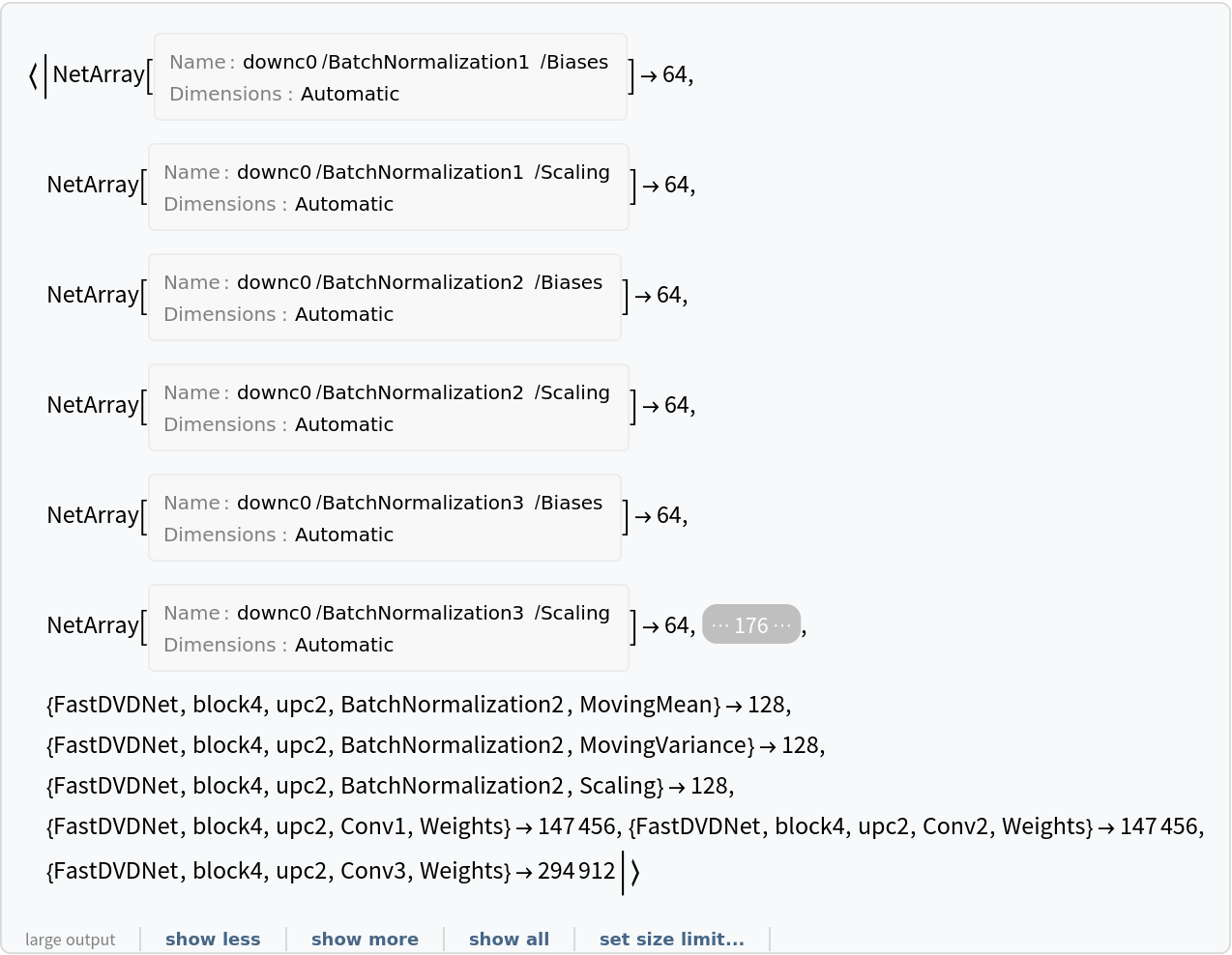

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

Display the summary graphic:
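The four inspection steps above can be sketched with `Information`. The property names used here are the standard net properties in the Wolfram Language; that the original cells used exactly these calls is an assumption.

```wolfram
net = NetModel["FastDVDNet Trained on DAVIS Data"];

(* Number of parameters of every array in the net *)
arrayCounts = Information[net, "ArraysElementCounts"]

(* Total number of parameters *)
totalCount = Information[net, "ArraysTotalElementCount"]

(* Count of each layer type *)
layerCounts = Information[net, "LayerTypeCounts"]

(* Summary graphic *)
Information[net, "SummaryGraphic"]
```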

Export to ONNX

Export the net to the ONNX format:

Get the size of the ONNX file:

The byte count of the resource object is smaller because shared arrays are currently being duplicated when exporting to ONNX:

Check some metadata of the ONNX model:

Import the model back into the Wolfram Language. However, the NetEncoder and NetDecoder will be absent because they are not supported by ONNX:
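The ONNX steps above can be sketched as one round trip. The export call matches the one shown on this page; the remaining calls are assumptions about what the original cells contained, using the `"OpsetVersion"` and `"IRVersion"` import elements of the ONNX format and the `"ByteCount"` property of the resource object.

```wolfram
net = NetModel["FastDVDNet Trained on DAVIS Data"];

(* Export to a temporary ONNX file, as in the export step on this page *)
onnxFile = Export[FileNameJoin[{$TemporaryDirectory, "net.onnx"}], net];

(* Size of the ONNX file in bytes *)
onnxBytes = FileByteCount[onnxFile]

(* Byte count of the resource object, for comparison *)
ResourceObject["FastDVDNet Trained on DAVIS Data"]["ByteCount"]

(* Some metadata of the ONNX model *)
{Import[onnxFile, "OpsetVersion"], Import[onnxFile, "IRVersion"]}

(* Import the model back; the NetEncoder and NetDecoder are not restored *)
importedNet = Import[onnxFile]
```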

![denoised = VideoFrameMap[

NetModel["FastDVDNet Trained on DAVIS Data"][<|"Video" -> #, "Noise" -> noise|>] &, video, 5, 1]; // AbsoluteTiming](https://www.wolframcloud.com/obj/resourcesystem/images/828/82811a9b-be56-4050-8aaf-d6dcac80407c/4b17f21b082064cc.png)

![netEnc = NetEncoder[{"VideoFrames", First[Information[video, "OriginalRasterSize"]], "ColorSpace" -> "RGB", "TargetLength" -> 5, FrameRate -> Inherited}]](https://www.wolframcloud.com/obj/resourcesystem/images/828/82811a9b-be56-4050-8aaf-d6dcac80407c/10be5ed309c90544.png)

![resizedNet = NetReplacePart[

net, {"Video" -> netEnc, "Noise" -> {1, Automatic, Automatic}}];

noise = ConstantArray[0.1, Prepend[Reverse[First[Information[video, "OriginalRasterSize"]]], 1]];

denoised = VideoFrameMap[resizedNet[<|"Video" -> #, "Noise" -> noise|>] &, video, 5, 1];](https://www.wolframcloud.com/obj/resourcesystem/images/828/82811a9b-be56-4050-8aaf-d6dcac80407c/3e9c985ee27a3299.png)


![{w, h} = First[Information[video, "OriginalRasterSize"]];

{blockIn, blockOut} = Map[NetReplacePart[

NetExtract[

NetModel["FastDVDNet Trained on DAVIS Data"], {"FastDVDNet", #}],

{"Frame1" -> NetEncoder[{"Image", {w, h}}], "Frame2" -> NetEncoder[{"Image", {w, h}}], "Frame3" -> NetEncoder[{"Image", {w, h}}], "Noise" -> {1, h, w},

"Output" -> NetDecoder["Image"]}

] &, {"block1", "block4"}];](https://www.wolframcloud.com/obj/resourcesystem/images/828/82811a9b-be56-4050-8aaf-d6dcac80407c/1a4616b4832f9ded.png)

![video = ResourceData["Sample Video: Surfing (Noisy)"];

noise = ConstantArray[0.17, {1, h, w}];](https://www.wolframcloud.com/obj/resourcesystem/images/828/82811a9b-be56-4050-8aaf-d6dcac80407c/010d0b53cd224d42.png)

![interm = VideoFrameMap[

blockIn[<| "Noise" -> noise, "Frame1" -> #[[1]], "Frame2" -> #[[2]], "Frame3" -> #[[3]] |>] &, video, 3, 1];](https://www.wolframcloud.com/obj/resourcesystem/images/828/82811a9b-be56-4050-8aaf-d6dcac80407c/5e6750d5d7397275.png)

![final = VideoFrameMap[

blockOut[<| "Noise" -> noise, "Frame1" -> #[[1]], "Frame2" -> #[[2]], "Frame3" -> #[[3]] |>] &, interm, 3, 1];](https://www.wolframcloud.com/obj/resourcesystem/images/828/82811a9b-be56-4050-8aaf-d6dcac80407c/100443ee7ed6aa21.png)

![Clear[videoDenoise];

Options[videoDenoise] = {TargetDevice -> "CPU"};

videoDenoise[video_Video,

{noiseType : "GaussianNoise" | "PoissonNoise", a_?Internal`RealValuedNumberQ} /; 0 < a <= 1, opts : OptionsPattern[]] :=

Module[

{VideoDenoiseFrame, w, h, blockIn, blockOut, net, sigmaFactor, interFrame2 = Null, interFrame3 = Null, interFrame4 = Null},

{w, h} = Normal[Information[video]["VideoTracks"][1]["OriginalRasterSize"]];

{w, h} = Round[{w, h}, 4];

(* Load first level U-Net *)

net = NetModel["FastDVDNet Trained on DAVIS Data"];

blockIn = NetReplacePart[

NetExtract[net, {"FastDVDNet", "block1"}],

{"Frame1" -> NetEncoder[{"Image", {w, h}}],

"Frame2" -> NetEncoder[{"Image", {w, h}}],

"Frame3" -> NetEncoder[{"Image", {w, h}}],

"Noise" -> {1, h, w},

"Output" -> {3, h, w} }

];

(* Load second level U-Net *)

blockOut = NetReplacePart[

NetExtract[net, {"FastDVDNet", "block4"}],

{"Frame1" -> {3, h, w},

"Frame2" -> {3, h, w},

"Frame3" -> {3, h, w},

"Noise" -> {1, h, w},

"Output" -> NetDecoder["Image"] }

];

sigmaFactor = Switch[noiseType, "GaussianNoise", a, "PoissonNoise", Sqrt[a/(100*(1 - a))]];

(* Neural function to combine five frames into one denoised frame *)

VideoDenoiseFrame[frames : {__Image}] :=

Block[

{sigma, interFrame},

sigma = Switch[noiseType,

"GaussianNoise",

ConstantArray[sigmaFactor, {1, h, w}],

"PoissonNoise",

ArrayReshape[

ImageData[

Sqrt[ColorConvert[frames[[3]], "Grayscale"]]*

sigmaFactor], {1, h, w}]

];

interFrame = blockIn[AssociationThread[{"Frame1", "Frame2", "Frame3", "Noise"} -> Append[frames, sigma] ], TargetDevice -> OptionValue[TargetDevice]];

interFrame2 = If[NumericArrayQ[interFrame3], interFrame3, interFrame];

interFrame3 = If[NumericArrayQ[interFrame4], interFrame4, interFrame];

interFrame4 = interFrame;

blockOut[<|"Frame1" -> interFrame2, "Frame2" -> interFrame3, "Frame3" -> interFrame4, "Noise" -> sigma |>, TargetDevice -> OptionValue[TargetDevice]]

];

(* Map denoise function onto the entire video *)

VideoFrameMap[VideoDenoiseFrame, video, 3, 1]

];](https://www.wolframcloud.com/obj/resourcesystem/images/828/82811a9b-be56-4050-8aaf-d6dcac80407c/4d35671f526701aa.png)

![onnxFile = Export[FileNameJoin[{$TemporaryDirectory, "net.onnx"}], NetModel["FastDVDNet Trained on DAVIS Data"]]](https://www.wolframcloud.com/obj/resourcesystem/images/828/82811a9b-be56-4050-8aaf-d6dcac80407c/331882b7152db569.png)