DoubleU-Net Trained on Medical Image Segmentation Datasets

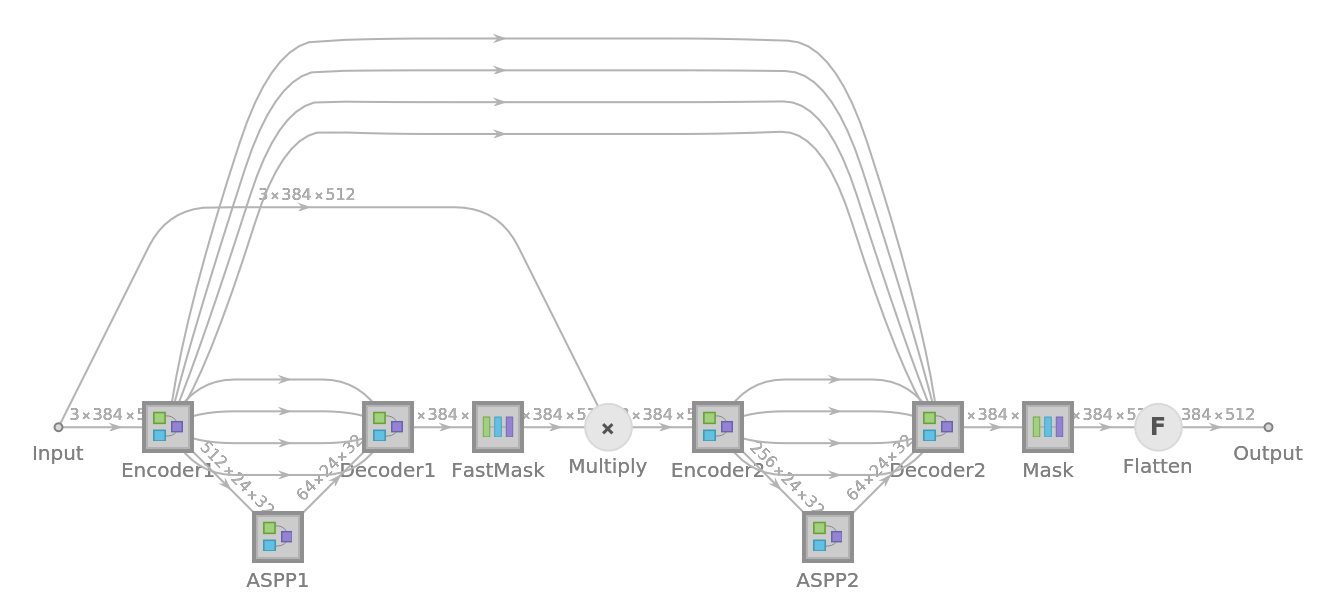

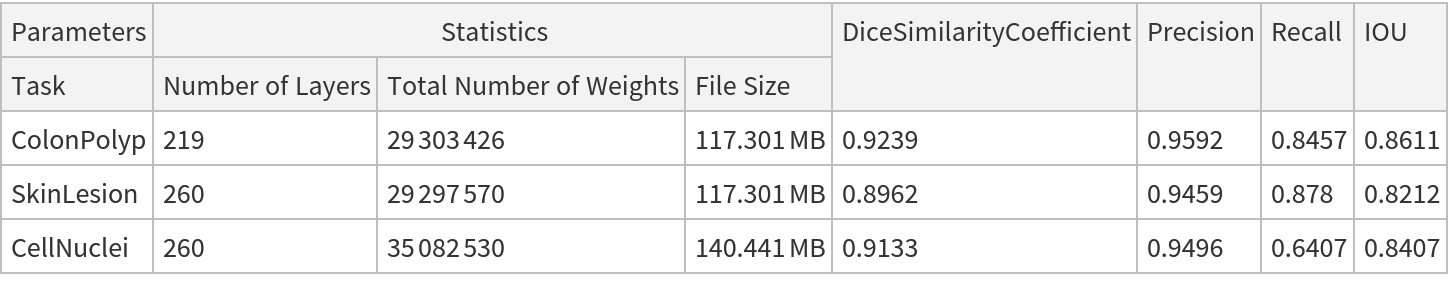

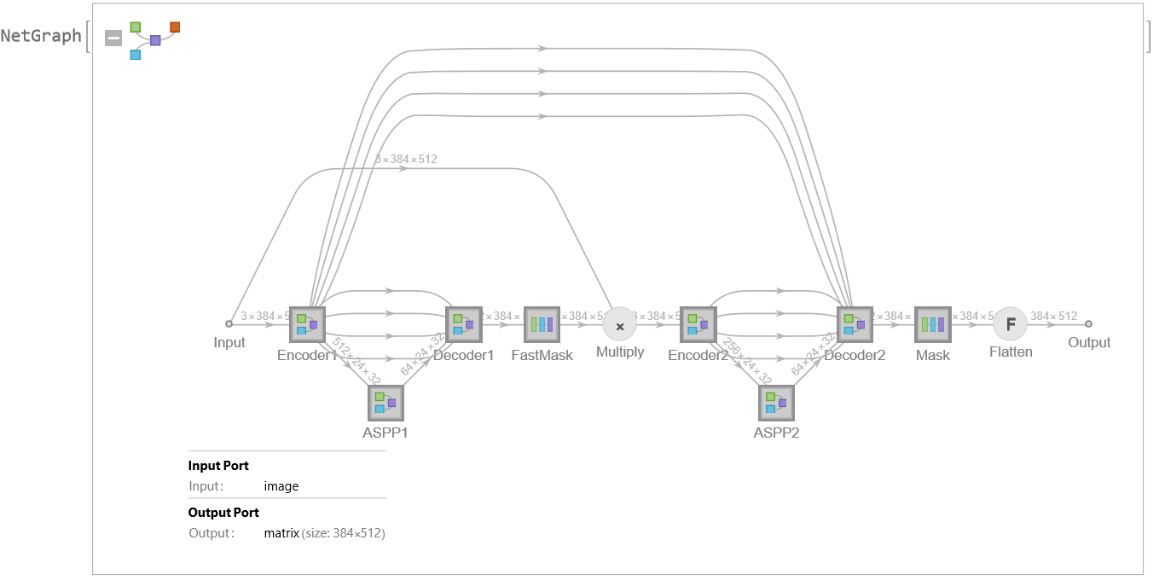

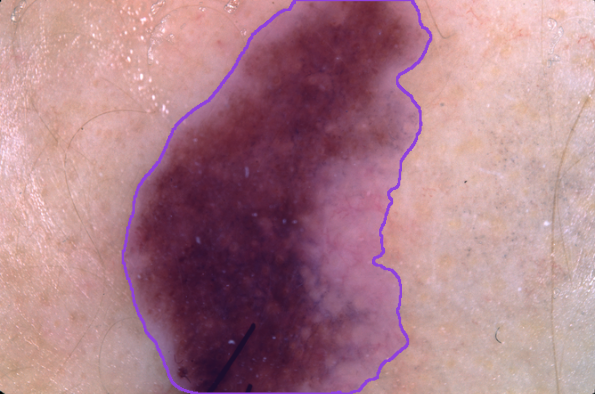

The authors propose DoubleU-Net, an architecture that combines two U-Net blocks stacked on top of each other. The first U-Net uses a VGG-19 pre-trained on the ImageNet dataset as its encoder, while the second U-Net is added to capture more semantic information. An atrous spatial pyramid pooling (ASPP) block is added to capture contextual information within the network. The architecture was trained on several medical image segmentation datasets covering colonoscopy, dermoscopy and microscopy. The resulting model outperforms U-Net on all of these tasks.

Examples

Resource retrieval

Get the pre-trained net:
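
A minimal sketch of this step, assuming the resource name that appears in the timing code on this page. The variable name `net` is only illustrative, and the first call downloads the model, so it needs network access.

```wolfram
(* Fetch the default pre-trained net from the Wolfram Neural Net Repository *)
net = NetModel["DoubleU-Net Trained on Medical Image Segmentation Datasets"]
```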

NetModel parameters

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:
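
Repository models that form a family usually expose their parameters through the "ParametersInformation" property. The sketch below assumes this model does too; the parameter names and allowed values are whatever the call returns, and none are assumed here.

```wolfram
(* List the parameters that select a member of the net family *)
NetModel["DoubleU-Net Trained on Medical Image Segmentation Datasets", "ParametersInformation"]
```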

Pick a non-default net by specifying the parameters:

Pick a non-default uninitialized net:

Evaluation function

Define an evaluation function to select the evaluation mode and postprocess the net output:

Basic usage

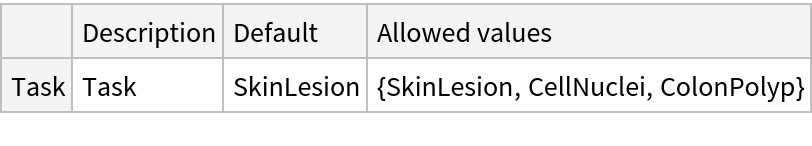

Obtain the segmentation mask of a skin lesion:
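
This step can be sketched as follows, assuming `netevaluate` has been defined as on this page and that `img` already holds the example input image (the variable name `img` is taken from the timing code; `net` and `mask` are illustrative).

```wolfram
(* Assumes netevaluate is defined and img holds the example skin-lesion image *)
net = NetModel["DoubleU-Net Trained on Medical Image Segmentation Datasets"];

(* Default (slow, full-quality) evaluation on the CPU *)
mask = netevaluate[net, img];
```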

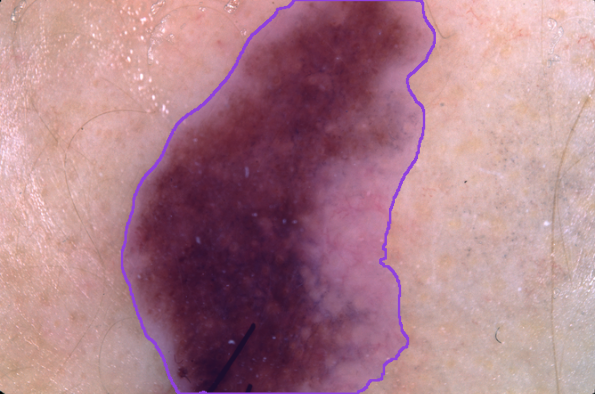

Visualize the mask:
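
One simple way to do this, assuming `mask` is the matrix returned by `netevaluate`, is to turn it into an image, where entries equal to 1 show as white and entries equal to 0 as black. This is a sketch, not necessarily the rendering used on the original page.

```wolfram
(* Render the 0/1 matrix as a black-and-white image *)
Image[mask]
```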

The mask is a matrix of 0s and 1s whose size matches the dimensions of the input image:
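
A short check of this claim, assuming `mask` and `img` are defined as above. Note that `Dimensions` reports a matrix as {rows, columns} while `ImageDimensions` reports {width, height}, which is why the evaluation function resamples to the reversed image dimensions.

```wolfram
Dimensions[mask]        (* matrix size as {rows, columns} *)
ImageDimensions[img]    (* image size as {width, height} *)

(* The two should agree once the image dimensions are reversed *)
Dimensions[mask] === Reverse[ImageDimensions[img]]

(* Distinct values present in the mask *)
Union[Flatten[mask]]
```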

Overlay the mask on the input image:
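
A possible overlay, assuming `img` and `mask` are defined as above. `HighlightImage` accepts a marker image as the region of interest and highlights its nonzero pixels; the default highlight style is used here, which may differ from the original page.

```wolfram
(* Highlight the pixels of img where the mask is nonzero *)
HighlightImage[img, Image[mask]]
```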

Performance tradeoff

The nets compute a lower-quality segmentation mask as an intermediate step. Stopping the computation at this intermediate step yields a segmentation mask faster, at some cost in quality. To enable this, set the third argument of the evaluation function to True:
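
In terms of the `netevaluate` definition on this page, the fast path corresponds to a call like the one below. It assumes `img` holds the example input; `fastMask` matches the name used in the timing code.

```wolfram
(* Third argument True reads the intermediate "FastMask" output instead of the final one *)
fastMask = netevaluate[
   NetModel["DoubleU-Net Trained on Medical Image Segmentation Datasets"],
   img, True];
```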

Visualize the mask:

Overlay the mask on the input image:

Compare the evaluation timings of the fast and slow masks:

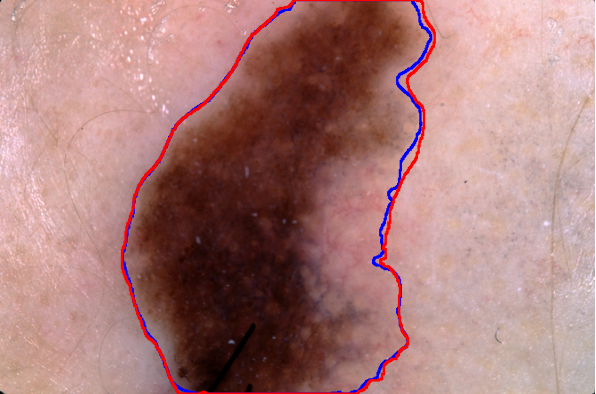

Compare the quality of the two segmentation masks. The fast mask is in blue and the slow mask is in red:
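
One way to sketch such a comparison, assuming `slowMask` and `fastMask` come from the timing code on this page. This is not necessarily how the original page draws it: here the slow mask goes into the red channel and the fast mask into the blue channel, so pixels where both agree appear magenta. The names `zero` and `agreement` are illustrative.

```wolfram
(* An all-zero green channel with the same size as the masks *)
zero = Image[0 slowMask];

(* Red = slow mask, blue = fast mask, magenta = both *)
ColorCombine[{Image[slowMask], zero, Image[fastMask]}, "RGB"]

(* Fraction of pixels on which the two masks agree *)
agreement = N[Mean[Flatten[1 - Abs[slowMask - fastMask]]]]
```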

Net information

Obtain the total number of parameters:
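
A sketch using the standard `Information` property for nets, applied to the default net:

```wolfram
(* Total number of learnable array elements in the net *)
Information[
 NetModel["DoubleU-Net Trained on Medical Image Segmentation Datasets"],
 "ArraysTotalElementCount"]
```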

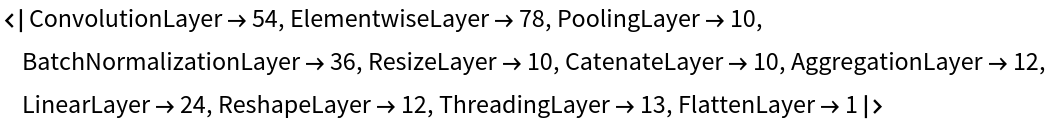

Obtain the layer type counts:
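
The same `Information` call with the "LayerTypeCounts" property gives an association from layer type to count; a sketch for the default net:

```wolfram
(* Count how many layers of each type the net contains *)
Information[
 NetModel["DoubleU-Net Trained on Medical Image Segmentation Datasets"],
 "LayerTypeCounts"]
```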

Display the summary graphic:
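
For the default net, this can be sketched with the "SummaryGraphic" property:

```wolfram
(* Compact graphical overview of the net structure *)
Information[
 NetModel["DoubleU-Net Trained on Medical Image Segmentation Datasets"],
 "SummaryGraphic"]
```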

Reference

![netevaluate[net_, img_, fast_ : False, device_ : "CPU"] := Module[{probs},
  probs = If[fast,
    Flatten[net[img, NetPort[{"FastMask"}], TargetDevice -> device], 1],
    net[img, TargetDevice -> device]
  ];
  probs = ArrayResample[probs, Reverse@ImageDimensions@img];
  Round[probs]
];](https://www.wolframcloud.com/obj/resourcesystem/images/7d9/7d9e5051-d1fc-4cd5-8d19-11ac2a3df91b/5dd64da646283afa.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/dee58408-0861-4a1a-93c5-d504c1eadcbe"]](https://www.wolframcloud.com/obj/resourcesystem/images/7d9/7d9e5051-d1fc-4cd5-8d19-11ac2a3df91b/49938fe1e04c8f1f.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/768d29f7-1603-42fd-8f6b-07e764075381"]](https://www.wolframcloud.com/obj/resourcesystem/images/7d9/7d9e5051-d1fc-4cd5-8d19-11ac2a3df91b/032e81e4f5d4bce9.png)

![{slowTime, slowMask} = RepeatedTiming@
  netevaluate[
   NetModel["DoubleU-Net Trained on Medical Image Segmentation Datasets"], img];
{fastTime, fastMask} = RepeatedTiming@
  netevaluate[
   NetModel["DoubleU-Net Trained on Medical Image Segmentation Datasets"], img, True];](https://www.wolframcloud.com/obj/resourcesystem/images/7d9/7d9e5051-d1fc-4cd5-8d19-11ac2a3df91b/6d57c45b9d2c7f1d.png)