Dilated ResNet-105 Trained on Cityscapes Data

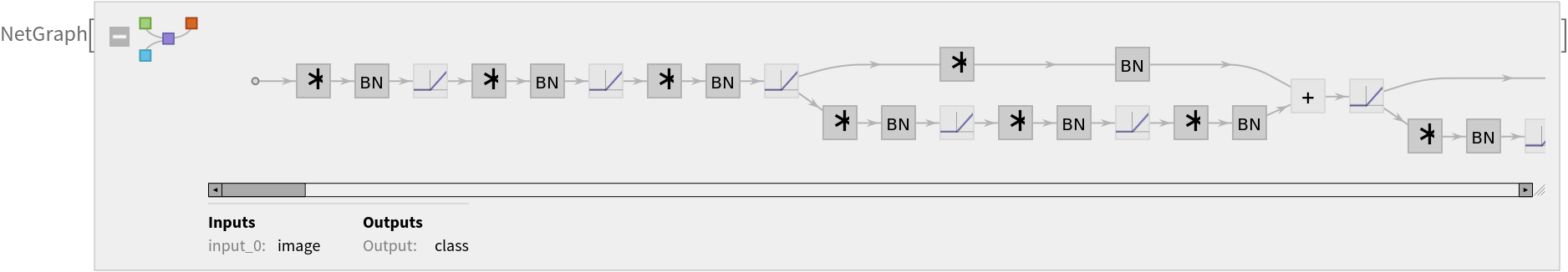

Released in 2017, this architecture combines dilated convolutions with residual networks. The resulting models outperform their non-dilated counterparts in both image classification and semantic segmentation.
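The following hypothetical sketch shows how the two ideas combine in a single residual block whose 3×3 convolutions are dilated. It is not the block used inside this model. The function name `dilatedResidualBlock`, the 16 channels and the 32×32 input size are illustrative assumptions. Setting `"PaddingSize"` equal to the dilation keeps the spatial size unchanged, so the skip connection can be summed with the block output.

```wolfram
(* Hypothetical example: a residual block built from two dilated 3x3 convolutions.
   With "PaddingSize" -> dilation, each convolution preserves the spatial size,
   so the block input (NetPort["Input"]) can be added to the block output. *)
dilatedResidualBlock[channels_Integer, dilation_Integer, size_Integer] := NetGraph[
  <|
   "conv1" -> ConvolutionLayer[channels, {3, 3}, "Dilation" -> dilation, "PaddingSize" -> dilation],
   "bn1" -> BatchNormalizationLayer[],
   "relu1" -> ElementwiseLayer[Ramp],
   "conv2" -> ConvolutionLayer[channels, {3, 3}, "Dilation" -> dilation, "PaddingSize" -> dilation],
   "bn2" -> BatchNormalizationLayer[],
   "add" -> TotalLayer[],
   "relu2" -> ElementwiseLayer[Ramp]
   |>,
  {
   NetPort["Input"] -> "conv1" -> "bn1" -> "relu1" -> "conv2" -> "bn2",
   (* skip connection: sum the residual branch with the unchanged input *)
   {"bn2", NetPort["Input"]} -> "add" -> "relu2"
   },
  "Input" -> {channels, size, size}
  ]

(* Initialize random weights and run the block on a random 16-channel 32x32 input *)
block = NetInitialize@dilatedResidualBlock[16, 2, 32];
Dimensions@block[RandomReal[1, {16, 32, 32}]]
```

Because the output has the same shape as the input, blocks like this can be stacked without the progressive downsampling that plain residual networks use, which is the point of dilation for dense prediction.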

Number of layers: 360

Parameter count: 54,497,859

Trained size: 220 MB

Examples

Resource retrieval

Get the pre-trained net:
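A minimal sketch using `NetModel` with the model name that appears in this page's evaluation function. The first call downloads the trained net (about 220 MB); later calls typically load it from the local cache.

```wolfram
(* Download (or load from the local cache) the trained network by name *)
net = NetModel["Dilated ResNet-105 Trained on Cityscapes Data"]
```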

Evaluation function

Write an evaluation function to handle net reshaping and resampling of input and output:

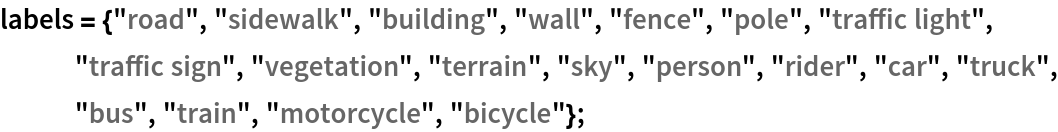

Label list

Obtain the label list for this model. Integers in the model’s output correspond to elements in the label list:
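The page's own label list is not reproduced here. As a hypothetical stand-in, the sketch below assumes the 19 standard Cityscapes evaluation classes, in the order that the color palette defined at the end of this page appears to follow (road first, bicycle last). Check it against the model's output decoder before relying on it.

```wolfram
(* Hypothetical label list: the 19 Cityscapes training classes in their usual order.
   Under this assumption, integer i in the model's output refers to labels[[i]]. *)
labels = {"road", "sidewalk", "building", "wall", "fence", "pole",
   "traffic light", "traffic sign", "vegetation", "terrain", "sky",
   "person", "rider", "car", "truck", "bus", "train", "motorcycle", "bicycle"};
Length[labels]
```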

Basic usage

Obtain a segmentation mask for a given image:

Inspect which classes are detected:

Visualize the mask:
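A sketch of these three steps, assuming the `netevaluate` function from the evaluation function on this page is defined and that `labels` holds the label list. The image URL is taken from the page's example-input cell; the variable names `img` and `mask` are illustrative.

```wolfram
(* Example input image (URL from this page's example-input cell) *)
img = CloudGet["https://www.wolframcloud.com/obj/cb6ef03f-89b0-448b-b575-2366bbf0703d"];

(* Segmentation mask: a matrix of integer class indices *)
mask = netevaluate[img];

(* Names of the classes that occur anywhere in the mask *)
labels[[DeleteDuplicates@Flatten@mask]]

(* Color each class index with an automatically chosen color *)
Colorize[mask]
```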

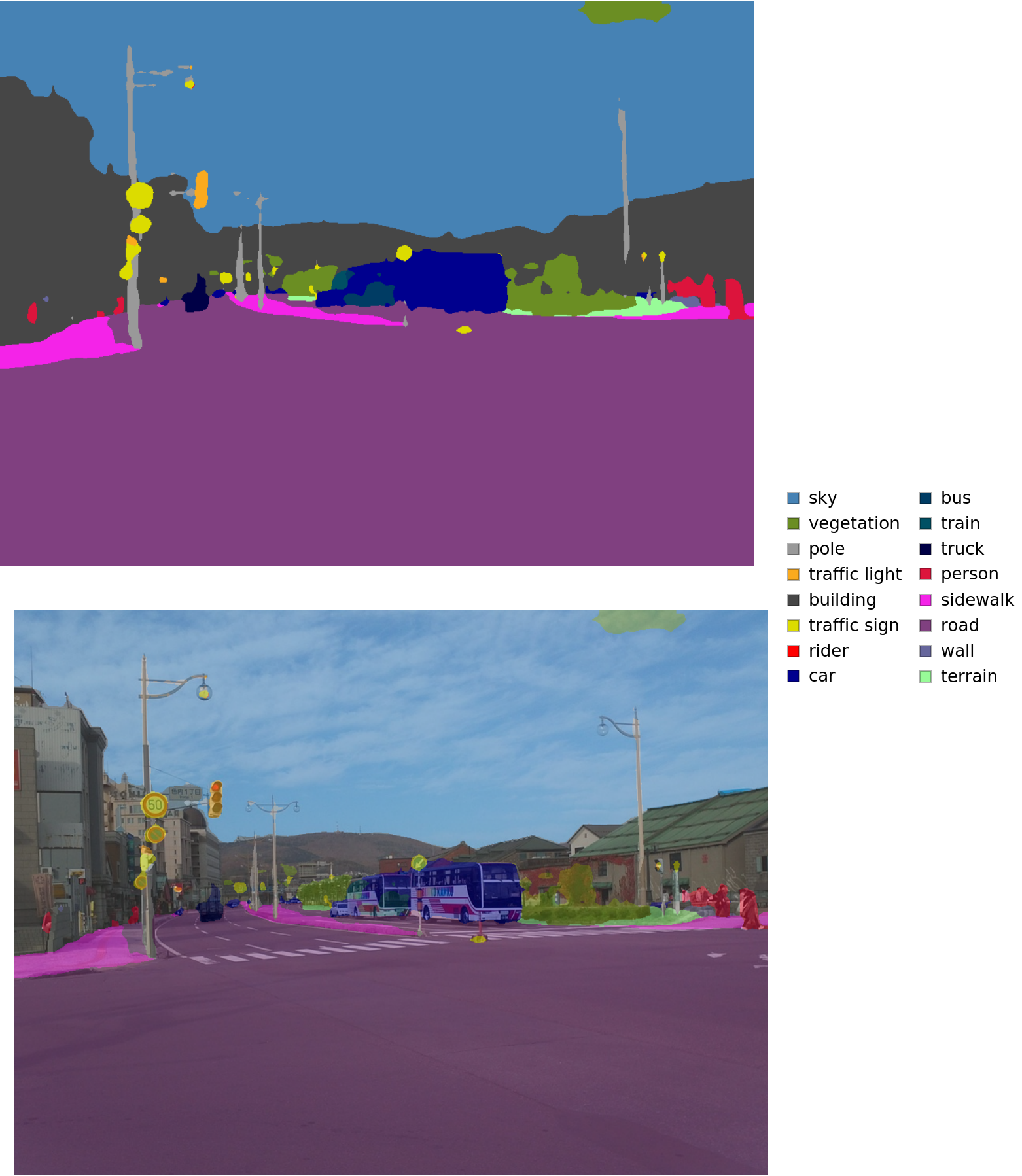

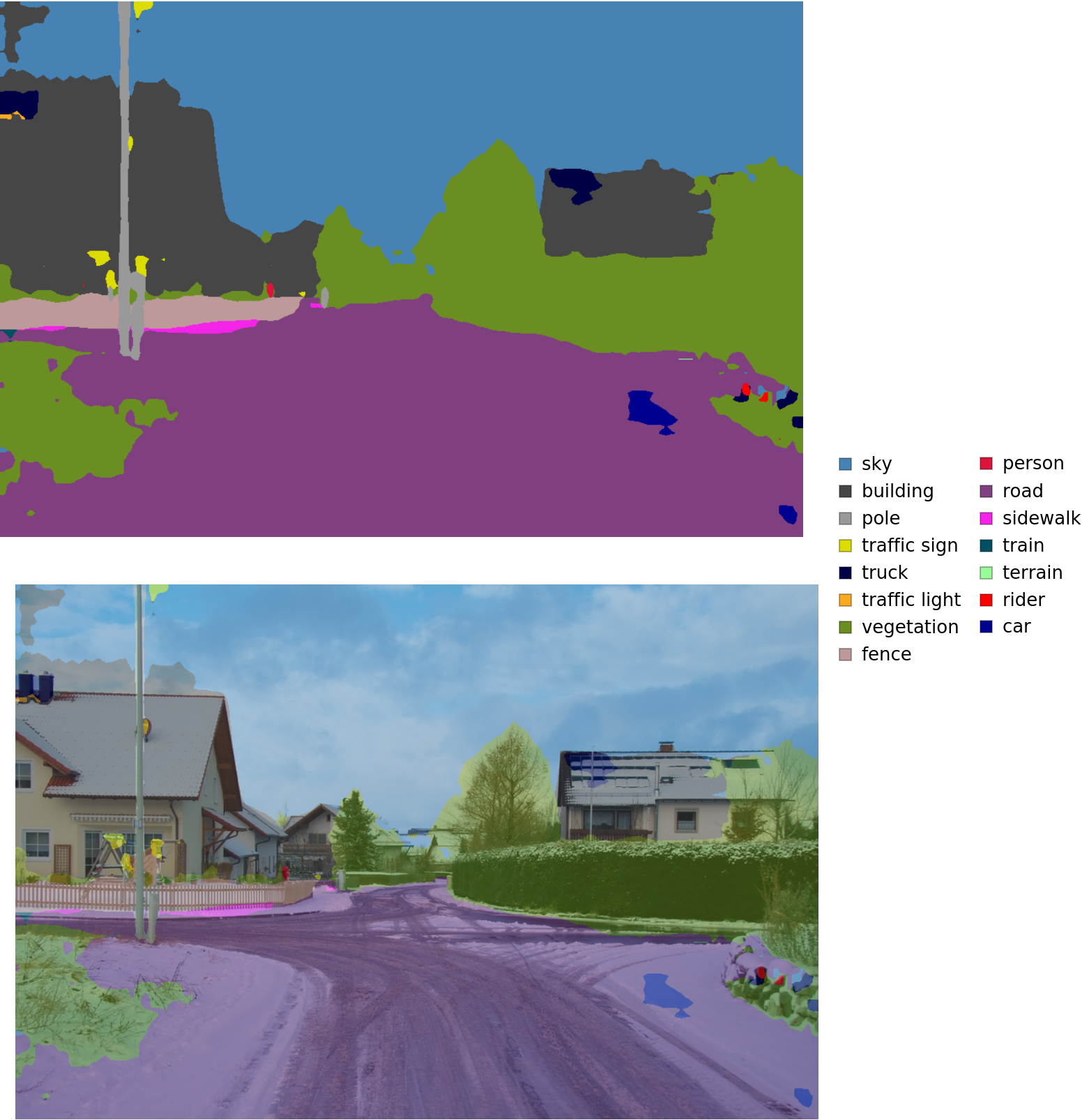

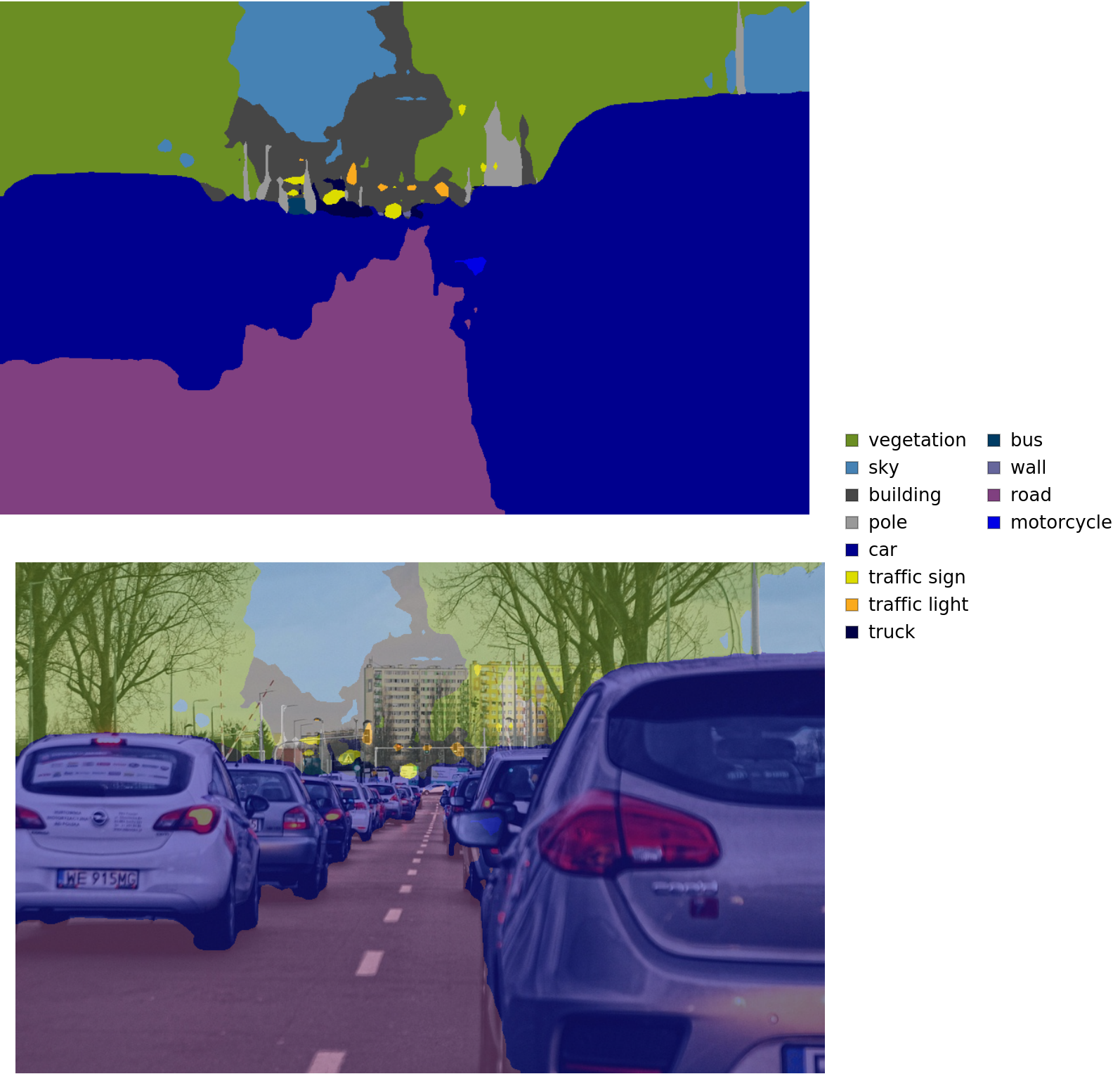

Advanced visualization

Associate classes to colors:
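The visualization function on this page refers to a list of rules named `indexToColor`, built from the `colors` list defined at the end of the page. One plausible definition, mapping class index i to the i-th color, is sketched below; it is an assumption, not necessarily the page's own code, and it requires `colors` to be defined first.

```wolfram
(* Map each class index 1..19 to the color at the same position in colors.
   indexToColor[[classes, 2]] then returns the colors of the given classes,
   which is how the legend in result picks its swatches. *)
indexToColor = Thread[Range[Length[colors]] -> colors];
Length[indexToColor]
```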

Write a function to overlap the image and the mask with a legend:

Inspect the results:
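Assuming `netevaluate`, `labels`, `indexToColor` and the `result` function from this page are defined, an example image can be passed straight to `result`. The URL below is one of the example-input cells listed at the end of the page.

```wolfram
(* Overlay the mask on an example street scene and add a legend of detected classes *)
result[CloudGet["https://www.wolframcloud.com/obj/862660b2-9b37-4dba-b3de-8ef0c190edb6"]]
```

The optional second argument of `result` is passed on to the net as `TargetDevice`, so `"GPU"` can be used instead of the default `"CPU"` where a supported GPU is available.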

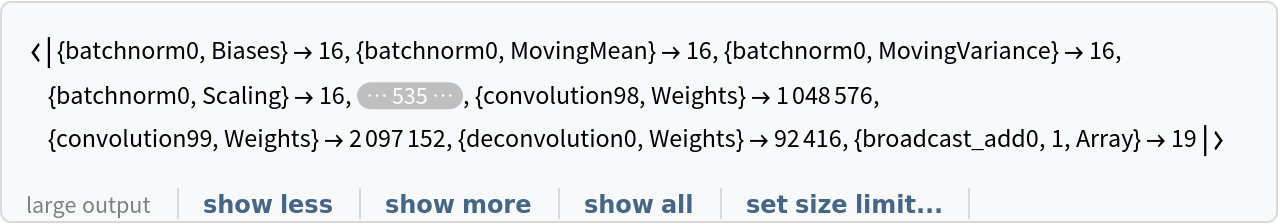

Net information

Inspect the number of parameters of all arrays in the net:
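In Wolfram Language 11.3, `NetInformation` reports this per array; a minimal sketch:

```wolfram
(* Association from each array's position in the net to its number of elements *)
NetInformation[NetModel["Dilated ResNet-105 Trained on Cityscapes Data"], "ArraysElementCounts"]
```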

Obtain the total number of parameters:
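A minimal sketch, again with `NetInformation`; the result should correspond to the parameter count listed at the top of this page.

```wolfram
(* Total number of elements across all arrays in the net *)
NetInformation[NetModel["Dilated ResNet-105 Trained on Cityscapes Data"], "ArraysTotalElementCount"]
```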

Obtain the layer type counts:
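A minimal sketch with the `"LayerTypeCounts"` property of `NetInformation`:

```wolfram
(* Association from layer type (e.g. ConvolutionLayer) to the number of such layers *)
NetInformation[NetModel["Dilated ResNet-105 Trained on Cityscapes Data"], "LayerTypeCounts"]
```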

Export to MXNet

Export the net into a format that can be opened in MXNet:

Export also creates a net.params file containing parameters:

Get the size of the parameter file:

The size is similar to the byte count of the resource object:
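A sketch of the export steps above. The temporary-directory location and the variable names `jsonPath` and `paramPath` are illustrative choices. The last line uses `ByteCount` of the loaded net as a stand-in for the resource object's byte count mentioned above, so the two numbers should be close rather than identical.

```wolfram
(* Export to MXNet format; Export returns the path of the .json file *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}],
   NetModel["Dilated ResNet-105 Trained on Cityscapes Data"], "MXNet"];

(* The parameters are written to net.params next to the .json file *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}];

(* Size of the parameter file vs. in-memory size of the net (stand-in comparison) *)
{FileByteCount[paramPath],
 ByteCount[NetModel["Dilated ResNet-105 Trained on Cityscapes Data"]]}
```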

Requirements

Wolfram Language 11.3 (March 2018) or above



```wolfram
(* Evaluate the net on an image of any size: rebuild the input encoder with the
   image's dimensions (reusing the original mean and variance images) and
   reattach the original output decoder *)
netevaluate[img_, device_ : "CPU"] := Block[
  {net, encData, dec, mean, var},
  net = NetModel["Dilated ResNet-105 Trained on Cityscapes Data"];
  encData = Normal@NetExtract[net, "input_0"];
  dec = NetExtract[net, "Output"];
  {mean, var} = Lookup[encData, {"MeanImage", "VarianceImage"}];
  NetReplacePart[net,
    {"input_0" -> NetEncoder[{"Image", ImageDimensions@img, "MeanImage" -> mean, "VarianceImage" -> var}],
     "Output" -> dec}
    ][img, TargetDevice -> device]
  ]
```

```wolfram
(* Evaluate this cell to get the example input *)
CloudGet["https://www.wolframcloud.com/obj/cb6ef03f-89b0-448b-b575-2366bbf0703d"]
```

```wolfram
(* One color per class index (19 classes): RGB values rescaled to [0, 1] *)
colors = Apply[
   RGBColor,
   {{128, 64, 128}, {244, 35, 232}, {70, 70, 70}, {102, 102, 156}, {190, 153, 153},
     {153, 153, 153}, {250, 170, 30}, {220, 220, 0}, {107, 142, 35}, {152, 251, 152},
     {70, 130, 180}, {220, 20, 60}, {255, 0, 0}, {0, 0, 142}, {0, 0, 70},
     {0, 60, 100}, {0, 80, 100}, {0, 0, 230}, {119, 11, 32}}/255.,
   {1}];
```

```wolfram
(* Segment img, then show the colorized mask next to a 50% overlay on the original
   image, with a legend listing only the detected classes.
   Requires netevaluate, indexToColor and labels to be defined. *)
result[img_, device_ : "CPU"] := Block[
  {mask, classes, maskPlot, composition},
  mask = netevaluate[img, device];
  classes = DeleteDuplicates[Flatten@mask];
  maskPlot = Colorize[mask, ColorRules -> indexToColor];
  composition = ImageCompose[img, {maskPlot, 0.5}];
  Legended[
   Row[Image[#, ImageSize -> Large] & /@ {maskPlot, composition}],
   SwatchLegend[indexToColor[[classes, 2]], labels[[classes]]]]
  ]
```

```wolfram
(* Evaluate these cells to get the example inputs *)
CloudGet["https://www.wolframcloud.com/obj/862660b2-9b37-4dba-b3de-8ef0c190edb6"]
CloudGet["https://www.wolframcloud.com/obj/eb1106b1-c28b-4700-9c9a-2f86c312b7af"]
CloudGet["https://www.wolframcloud.com/obj/d3572bce-64e8-445f-8052-543aa0ff1c5e"]
```