Resource retrieval

Get the pre-trained net:

Evaluation function

Write an evaluation function to post-process the net output in order to obtain keypoint position, strength and features:

Basic usage

Obtain the keypoints of a given image:

Visualize the keypoints:

Specify a maximum of 15 keypoints and visualize the new detection:

Network result

For the default input size of 224⨯224, the net divides the input image in 55⨯55 patches and computes a feature vector of size 512 for each patch:

Every patch is associated to a scalar strength value indicating the likelihood that the patch contains a keypoint. The strength of each patch is the maximal element of its feature vector after an L2 normalization. Obtain the strength of each patch:

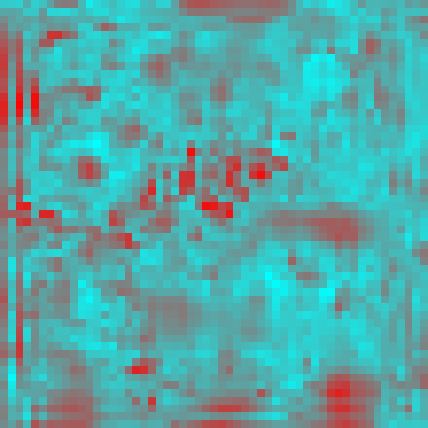

Visualize the strength of each patch as a heat map:

Overlay the heat map on the image:

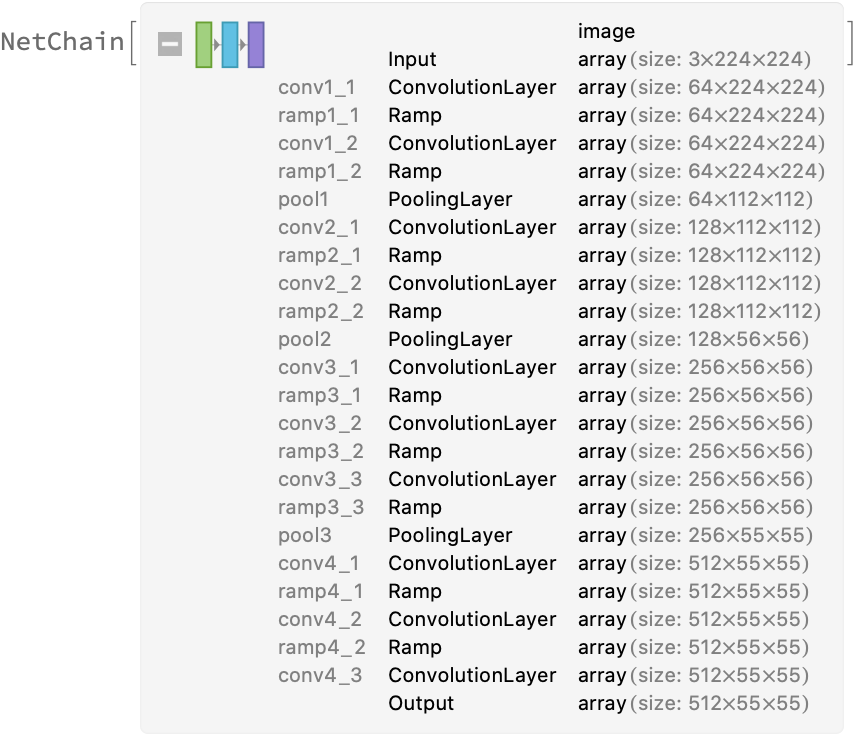

Keypoints are selected starting from the patch with highest strength, up to keypoints. Highlight the top 10 keypoints:

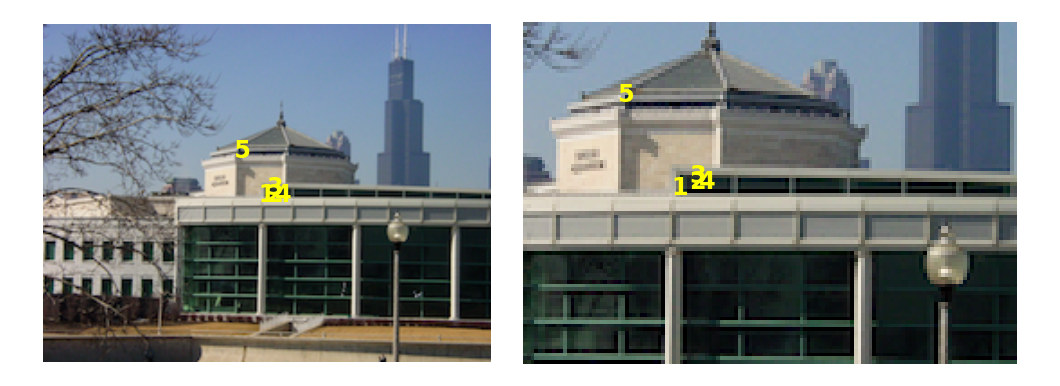

Find correspondences between images

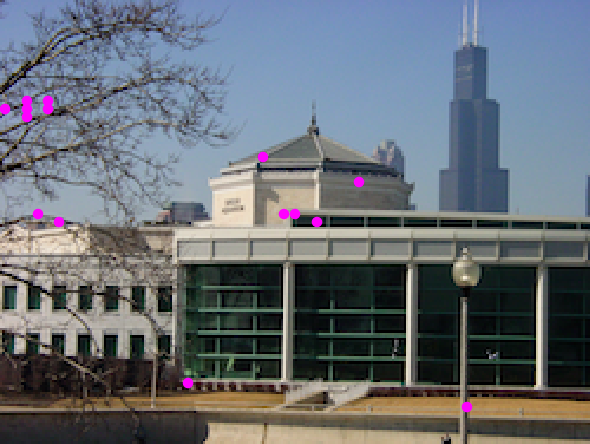

The main application of computing feature vectors for the image keypoints is to find correspondences in different images of the same scene. Get two hundred keypoint features from two images:

Define a function to find the n nearest pairs of keypoints (in feature space) and use it to find the five nearest pairs:

Get the keypoint positions associated with each pair and visualize them on the respective images:

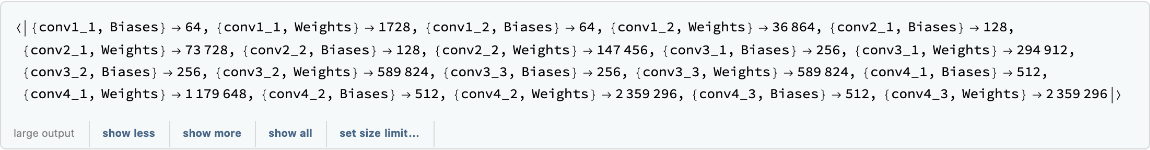

Net information

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

Display the summary graphic:

Export to ONNX

Export the net to the ONNX format:

Get the size of the ONNX file:

The byte count of the resource object is similar to the ONNX file:

Check some metadata of the ONNX model:

Import the model back into the Wolfram Language. However, the NetEncoder and NetDecoder will be absent because they are not supported by ONNX:

![Options[netevaluate] = {MaxFeatures -> 50};

netevaluate[img_Image, opts : OptionsPattern[]] := Module[

{dims, featureMap, c, h, w, transposed, normalized, strengthArray, pos, scalex, scaley, keypointStr, keypointPos, keypointFeats},

dims = ImageDimensions[img];

featureMap = NetModel["D2-Net Trained on MegaDepth Data"][img];

{c, h, w} = Dimensions[featureMap];

transposed = Transpose[featureMap, {3, 1, 2}];

normalized = transposed/Map[Norm, transposed, {2}]; (* Matrix containing the strengths of each keypoint *) strengthArray = Map[Max, normalized, {2}];

(* Find positions of (up to) MaxFeatures strongest keypoints *) pos = Ordering[

Flatten@strengthArray, -Min[OptionValue[MaxFeatures], w*h]] - 1;

pos = QuotientRemainder[#, w] + {1, 1} & /@ pos; (* matrix position *)

(* From array positions to image keypoint positions *)

{scalex, scaley} = N[dims/{w, h}];

keypointPos = {scalex*(#[[1]] - 0.5), scaley*(h - #[[2]] + 0.5)} & /@

Reverse /@ pos;

(* Extract the features and strengths *) keypointFeats = Extract[normalized, pos];

keypointStr = Extract[strengthArray, pos];

{keypointPos, keypointStr, keypointFeats}

]](https://www.wolframcloud.com/obj/resourcesystem/images/ecc/ecc7ca3f-d9a8-460a-9818-8a3cb69e1951/36efb7374489d86c.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/ae785a2c-eb63-4af1-ad3c-70c6946b852b"]](https://www.wolframcloud.com/obj/resourcesystem/images/ecc/ecc7ca3f-d9a8-460a-9818-8a3cb69e1951/6230bfd041cffff3.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/085bf1db-e081-4a91-b16e-dc13c2f24cb7"]](https://www.wolframcloud.com/obj/resourcesystem/images/ecc/ecc7ca3f-d9a8-460a-9818-8a3cb69e1951/71f505fa12334b5a.png)

![strengthArray = With[{transposed = Transpose[netResult, {3, 1, 2}]},

Map[Max, transposed/Map[Norm, transposed, {2}], {2}]

];](https://www.wolframcloud.com/obj/resourcesystem/images/ecc/ecc7ca3f-d9a8-460a-9818-8a3cb69e1951/00cb70e91a83ae9e.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/e0808678-8175-4e4d-89c9-6e3205aabbd9"]](https://www.wolframcloud.com/obj/resourcesystem/images/ecc/ecc7ca3f-d9a8-460a-9818-8a3cb69e1951/3f071932657ad917.png)

![findKeypointPairs[feats1_, feats2_, n_] := Module[

{distances, nearestPairs, nearestDistances},

distances = DistanceMatrix[feats1, feats2];

nearestPairs = MapIndexed[Flatten@{#2, Ordering[#1, 1]} &, distances];

nearestDistances = Extract[distances, nearestPairs];

nearestPairs[[Ordering[nearestDistances, n]]]

];](https://www.wolframcloud.com/obj/resourcesystem/images/ecc/ecc7ca3f-d9a8-460a-9818-8a3cb69e1951/368287450b15e40e.png)

![GraphicsRow@MapThread[

Function[{img, keypoints},

Show[img, Graphics@

MapIndexed[Inset[Style[First@#2, 12, Yellow, Bold], #1] &, keypoints]]

],

{{img1, img2}, {pos1, pos2}}

]](https://www.wolframcloud.com/obj/resourcesystem/images/ecc/ecc7ca3f-d9a8-460a-9818-8a3cb69e1951/4c8b8f208a7a90d3.png)