CycleGAN Summer-to-Winter Translation

Released in 2017, this model uses an image translation technique in which two translators, one mapping domain A to domain B and the other mapping B back to A, are trained jointly with adversarial training. In addition to the adversarial loss, the loss function enforces cycle consistency: when the output of the first translator is fed into the second, the result is encouraged to match the original input of the first translator. This makes training possible for image translation tasks where only unpaired training data can be collected. This model was trained to translate summertime photos into wintertime photos.
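The combined objective can be sketched as follows. The notation is an assumption borrowed from the original CycleGAN formulation and is not used elsewhere on this page: G translates A to B, F translates B to A, D_A and D_B are the discriminators for each domain, and λ weights the cycle term. The L1 distance is the one used in the original formulation; this page does not state which distance the trained net used.

$$
\mathcal{L}(G, F, D_A, D_B) = \mathcal{L}_{\text{GAN}}(G, D_B) + \mathcal{L}_{\text{GAN}}(F, D_A) + \lambda \, \mathcal{L}_{\text{cyc}}(G, F)
$$

$$
\mathcal{L}_{\text{cyc}}(G, F) = \mathbb{E}_{a \sim A}\big[\lVert F(G(a)) - a \rVert_1\big] + \mathbb{E}_{b \sim B}\big[\lVert G(F(b)) - b \rVert_1\big]
$$

The cycle term is what removes the need for paired data: each image is compared only with its own round-trip reconstruction, never with a ground-truth translation.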

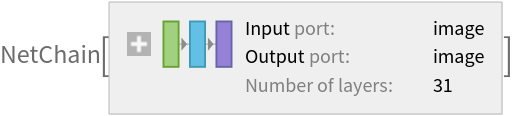

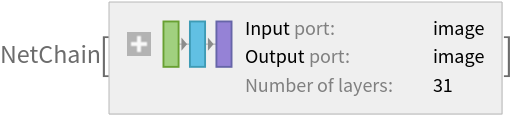

Number of layers: 94

Parameter count: 2,855,811

Trained size: 12 MB

Examples

Resource retrieval

Get the pre-trained net:
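A minimal sketch of the retrieval call, assuming the resource is registered under the title of this page. The repository may list the model under a longer name, in which case that name should be substituted.

```wolfram
(* Assumption: the resource name matches this page's title *)
net = NetModel["CycleGAN Summer-to-Winter Translation"]
```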

Basic usage

Run the net on a photo:
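A sketch of a typical call, assuming the resource name from this page's title. The input is a built-in test image used as a stand-in, not the photo shown on this page.

```wolfram
(* Stand-in input: a built-in test image, not the original example photo *)
img = ExampleData[{"TestImage", "House"}];

(* Assumption: the resource name matches this page's title *)
net = NetModel["CycleGAN Summer-to-Winter Translation"];

(* The attached image encoder resizes the photo automatically before translation *)
net[img]
```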

Adapt to any size

Automatic image resizing can be avoided by replacing the net encoders. First get the net:

Get a photo:

Create a new encoder with the desired dimensions:

Attach the new net encoder and run the network:
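The steps above might look like the following sketch. The resource name, the stand-in test image, and the port name "Input" are assumptions. Depending on the net, the output decoder may also need to be replaced for the new dimensions to propagate.

```wolfram
(* Assumption: the resource name matches this page's title *)
net = NetModel["CycleGAN Summer-to-Winter Translation"];

(* Stand-in input: a built-in test image *)
img = ExampleData[{"TestImage", "House"}];

(* Encoder whose dimensions match the photo, so no resizing takes place *)
netEnc = NetEncoder[{"Image", ImageDimensions[img]}];

(* Assumption: the net's input port is named "Input" *)
resizedNet = NetReplacePart[net, "Input" -> netEnc];

resizedNet[img]
```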

Net information

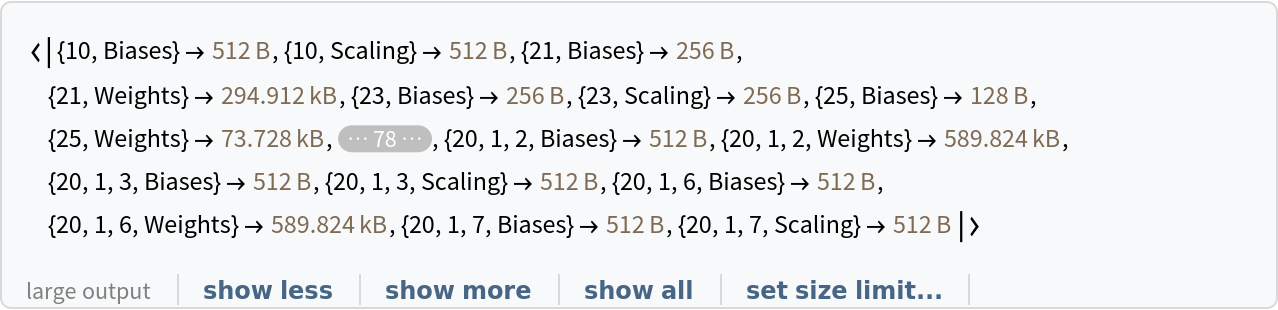

Inspect the sizes of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

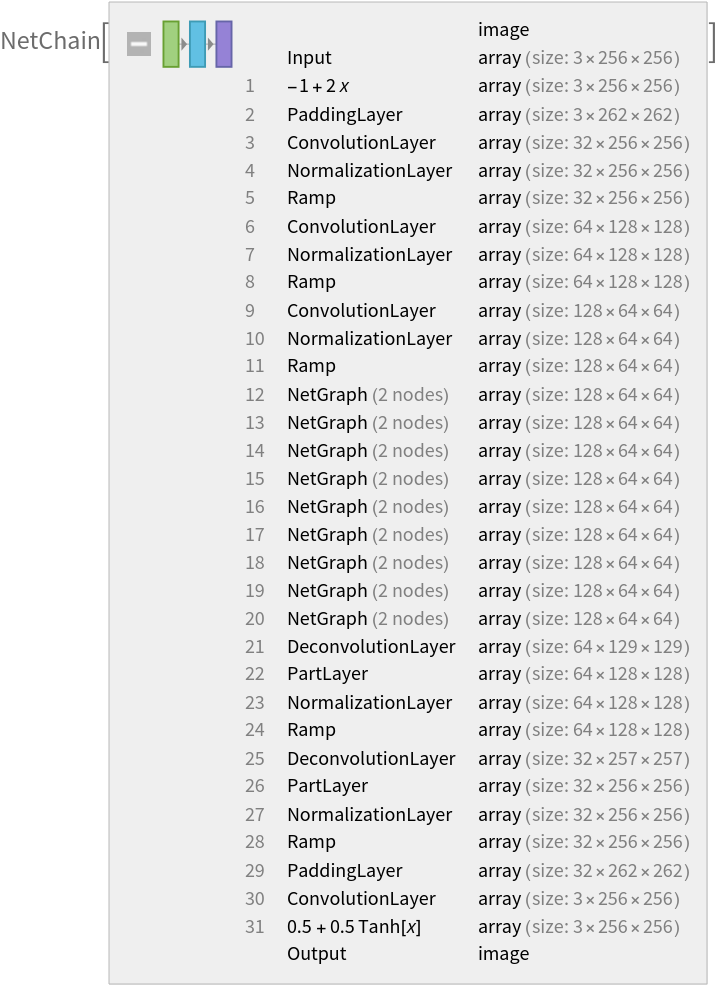

Display the summary graphic:
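The four queries in this section can be sketched with NetInformation, assuming the resource name from this page's title. Newer Wolfram Language versions may prefer Information with the same property names.

```wolfram
(* Assumption: the resource name matches this page's title *)
net = NetModel["CycleGAN Summer-to-Winter Translation"];

(* Element count of every array in the net *)
NetInformation[net, "ArraysElementCounts"]

(* Total number of parameters *)
NetInformation[net, "ArraysTotalElementCount"]

(* Number of layers of each type *)
NetInformation[net, "LayerTypeCounts"]

(* Summary graphic of the net *)
NetInformation[net, "SummaryGraphic"]
```

The total element count should agree with the parameter count listed at the top of this page.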

Export to MXNet

Export the net into a format that can be opened in MXNet:

Export also creates a net.params file containing parameters:

Get the size of the parameter file:

The size is similar to the byte count of the resource object:

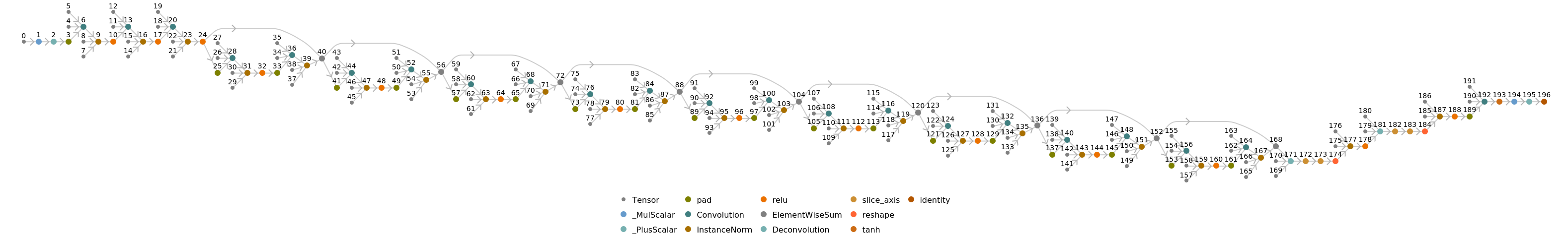

Represent the MXNet net as a graph:
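The export steps in this section can be sketched as follows, assuming the resource name from this page's title. The output location in the temporary directory is an illustrative choice.

```wolfram
(* Assumption: the resource name matches this page's title *)
name = "CycleGAN Summer-to-Winter Translation";
net = NetModel[name];

(* Write the net's symbol file; a net.params file is created alongside it *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"]

(* Locate the parameter file and get its size in bytes *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}]
FileByteCount[paramPath]

(* Byte count reported by the resource object, for comparison *)
ResourceObject[name]["ByteCount"]

(* Plot the exported MXNet net as a graph *)
Import[jsonPath, {"MXNet", "NodeGraphPlot"}]
```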

Requirements

Wolfram Language 11.3 (March 2018) or above

