Resource retrieval

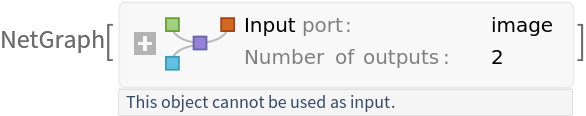

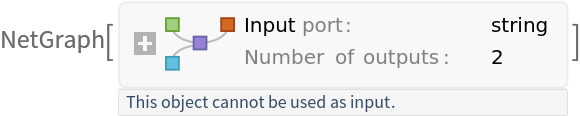

Get the pre-trained net:

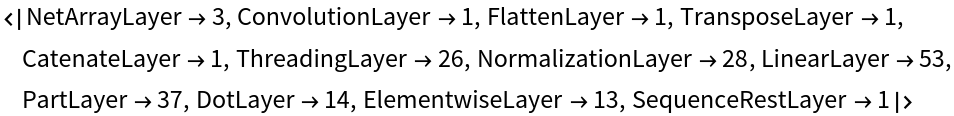

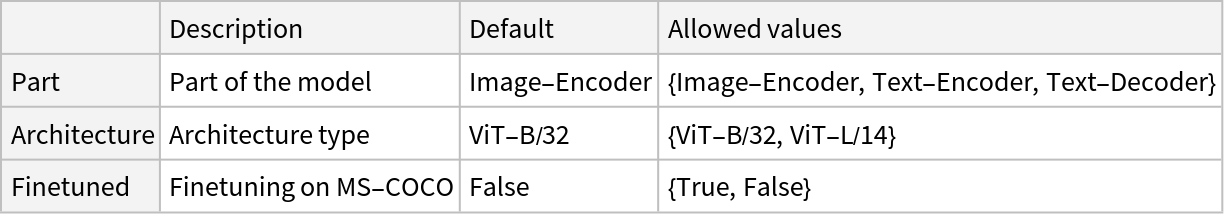

NetModel parameters

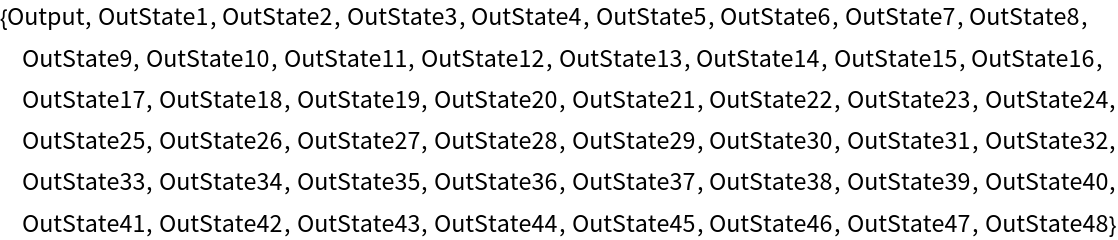

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:

Pick a non-default net by specifying the parameters:

Pick a non-default uninitialized net:

Evaluation function

Define an evaluation function that uses all model parts to obtain the image features and automate the caption generation:
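At its core, the evaluation function is a greedy autoregressive loop. NestWhile keeps appending the next predicted token until EndOfString appears or MaxIterations steps have been taken. The toy sketch below reproduces only that loop structure. The lookup table toyNextToken is a hypothetical stand-in for the text decoder, and the strings "<s>" and "</s>" stand in for StartOfString and EndOfString:

```wolfram
(* Hypothetical stand-in for the text decoder: maps the last token to the next one *)
toyNextToken = <|"<s>" -> "a", "a" -> "cat", "cat" -> "on", "on" -> "grass", "grass" -> "</s>"|>;

(* One decoding step: append the predicted token to the sequence *)
toyDecodeStep[tokens_List] := Append[tokens, toyNextToken[Last[tokens]]];

(* Iterate until the end token is produced, with at most 25 steps *)
toyTokens = NestWhile[toyDecodeStep, {"<s>"}, Last[#] =!= "</s>" &, 1, 25];

(* Drop the special tokens and join the words *)
StringRiffle[DeleteCases[toyTokens, "<s>" | "</s>"], " "]
```

The real function joins the tokens with StringJoin rather than StringRiffle. Presumably this is because its BPE tokens already carry their own spacing.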

Basic usage

Define a test image:

Generate an image caption:

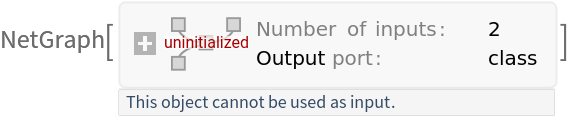

Define a test video and visualize the first five frames:

For zero-shot video-text retrieval, the mean image embedding is computed across uniformly sampled video frames:
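Mean applied to a matrix averages over its rows. Stacking the per-frame embeddings and calling Mean therefore yields a single vector with the same length as one frame embedding. Below is a sketch with random stand-in vectors. The frame count of 16 matches the default "NumberOfFrames" option; the embedding length of 4 is arbitrary and only for illustration:

```wolfram
(* Hypothetical embeddings of 16 sampled frames, 4 components each *)
SeedRandom[1];
toyFrameEmbeddings = RandomReal[{-1, 1}, {16, 4}];

(* Mean over the first dimension averages each component across frames *)
toyVideoEmbedding = Mean[toyFrameEmbeddings];

Dimensions[toyVideoEmbedding]
```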

Efficient generation

The evaluation function defined in the previous section is inefficient: each time a token of the caption is generated, the text encoder reads the entire caption again from the start. NetUnfold makes it possible to avoid this. It produces a net that takes and returns its attention states, so that only the newest token has to be processed at each step. Reimplement the evaluation function using nets prepared by NetUnfold:

Prepare the unfolded net beforehand for the efficient image caption generation:

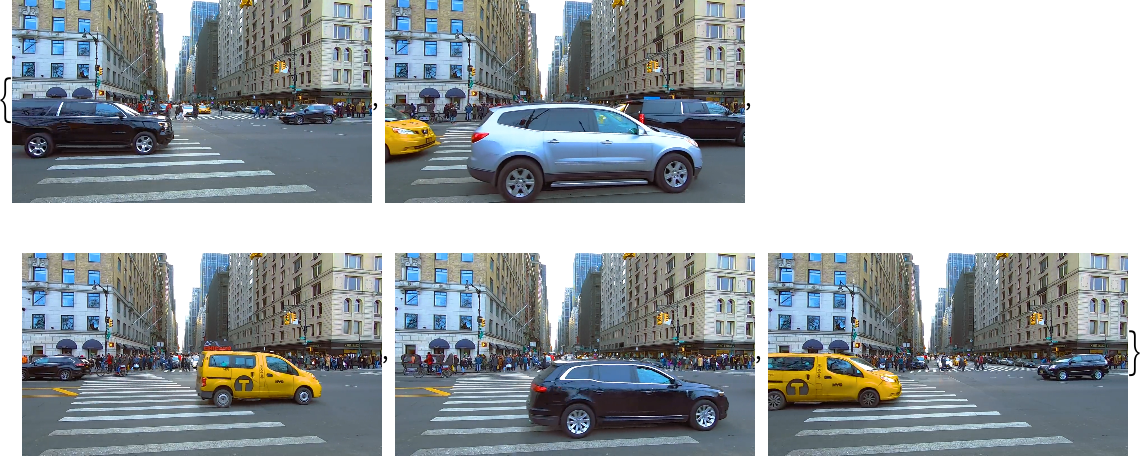

The new textDecoder combines the original text encoder and text decoder into a single unfolded net, which exposes additional ports for the hidden attention states:

Compare evaluation timing of the methods:

The speedup of the efficient method grows with the length of the generated sequence. Image captioning models generally don't generate long pieces of text, so the gain is modest here.
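A rough count shows why the gain stays modest. Consider only the token positions that the text stack has to process. For an n-token caption, the original loop re-reads 1 + 2 + ... + n positions, while the cached version processes one position per step. This simplified model gives a ratio of (n + 1)/2. It is an assumption that ignores attention over the cache, the image encoder and fixed overheads:

```wolfram
(* Token positions processed when the whole caption is re-encoded at every step *)
naiveCost[n_Integer] := Total[Range[n]];

(* Token positions processed when past attention states are reused *)
cachedCost[n_Integer] := n;

(* Ratio for caption lengths 5, 10 and 25 (25 is the default MaxIterations) *)
Table[naiveCost[n]/cachedCost[n], {n, {5, 10, 25}}]
```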

Feature space visualization

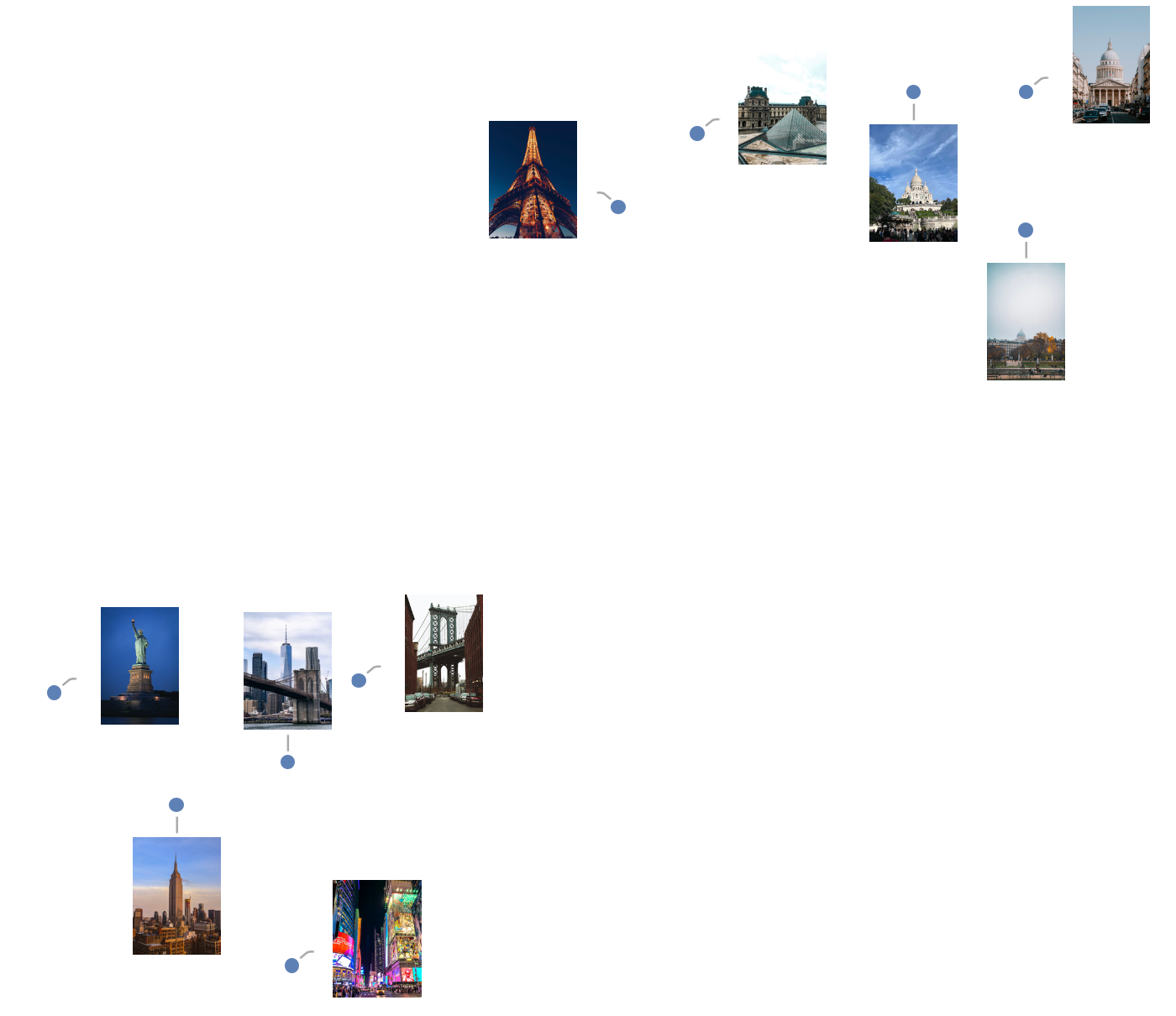

Get a set of images:

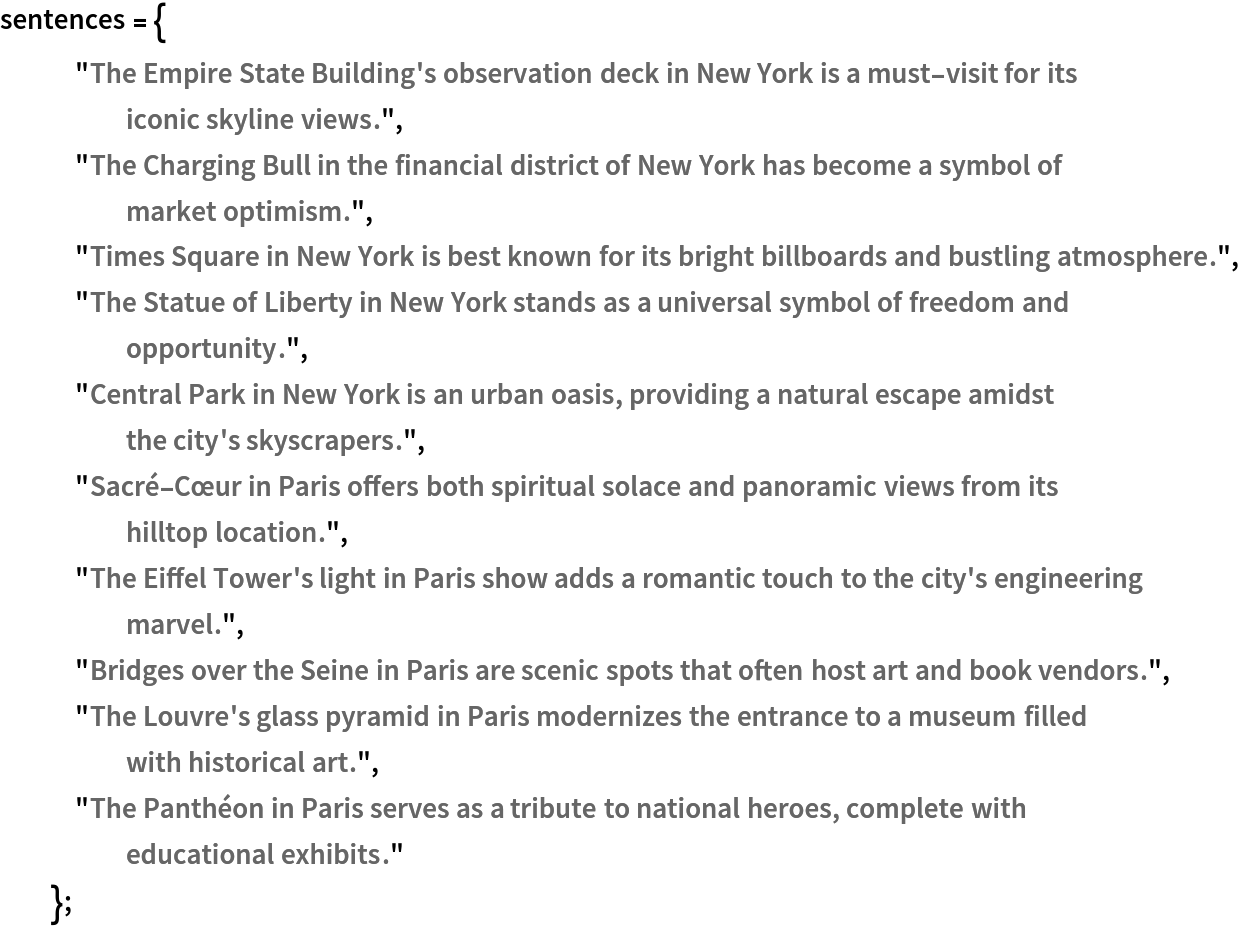

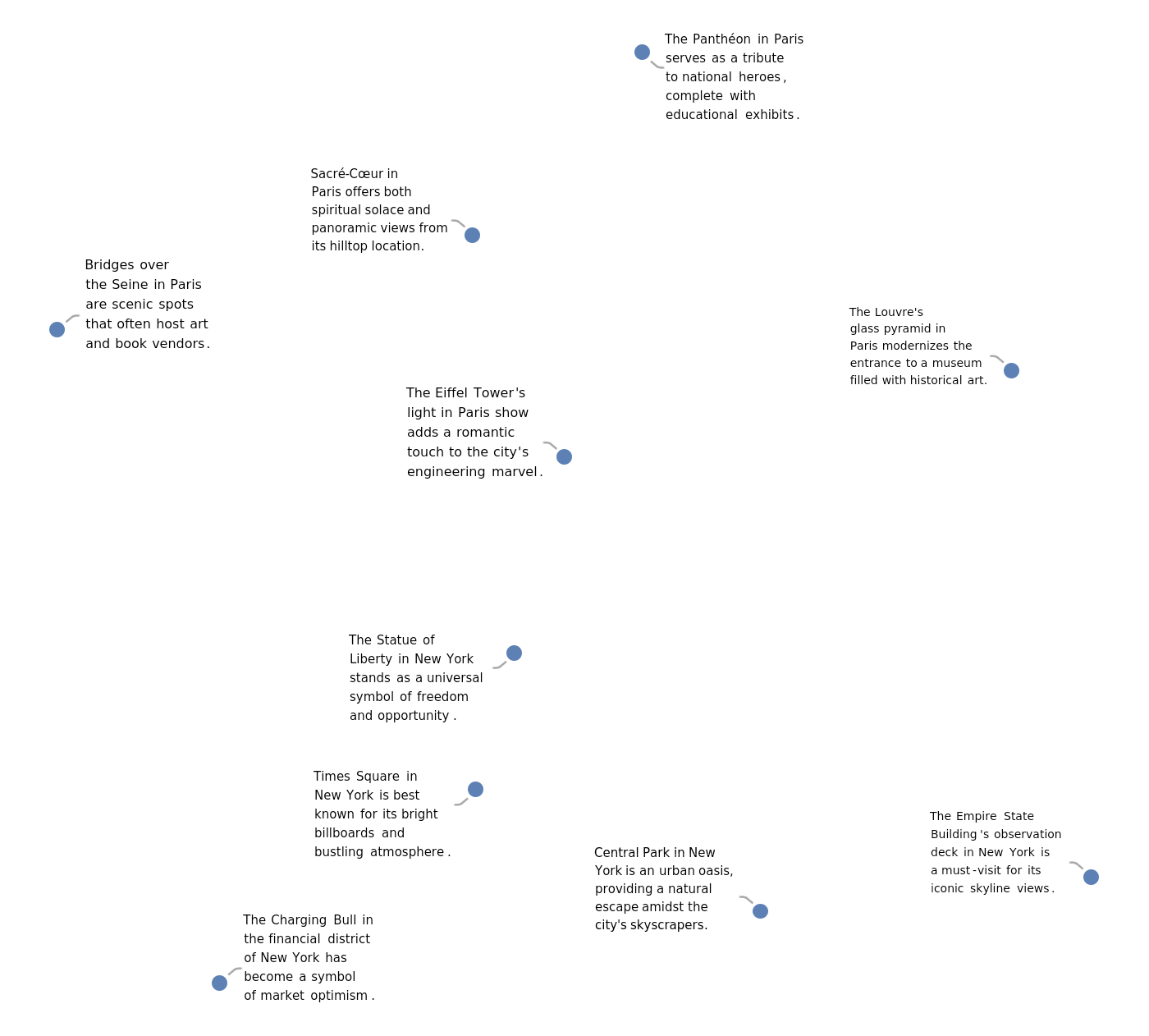

Define a list of sentences in two categories:

Connecting text and images

Define a test image:

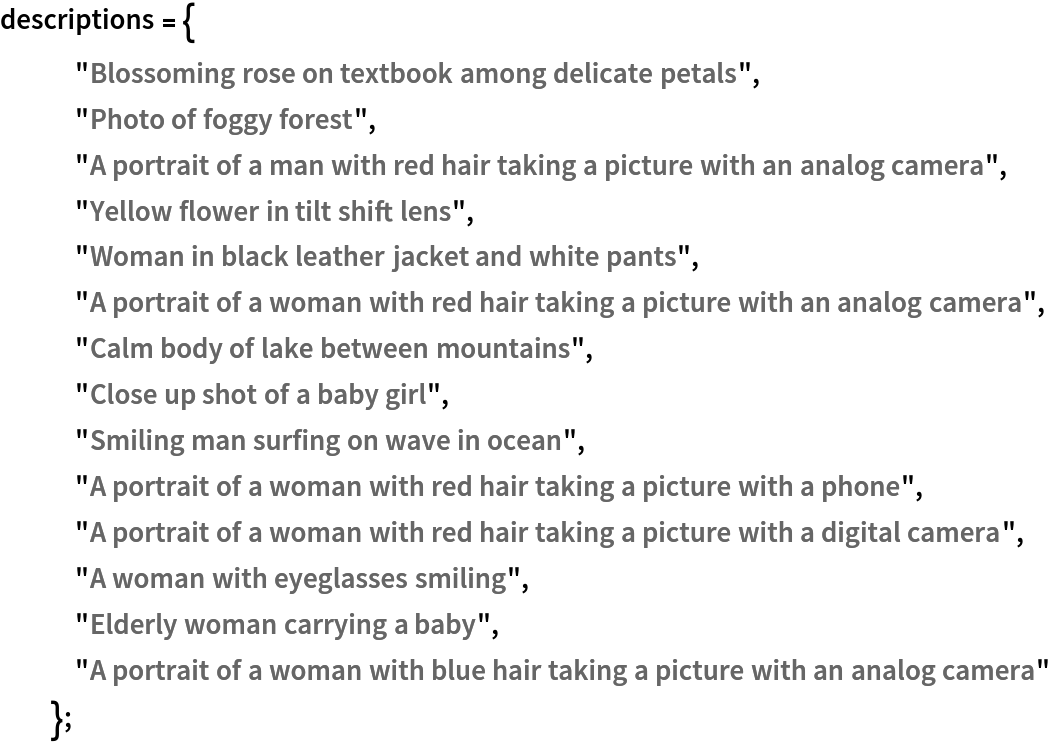

Define a list of text descriptions:

Embed the test image and text descriptions into the same feature space:

Rank the text descriptions by how well they correspond to the input image, using CosineDistance. Smaller distances mean a better correspondence between the text and the image:
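CosineDistance is one minus the cosine similarity. It is 0 for vectors pointing in the same direction and 2 for opposite ones, so it compares directions and ignores lengths. Below is a toy sketch of the ranking step. The 3-dimensional vectors and descriptions are hypothetical stand-ins for the real embeddings:

```wolfram
(* Hypothetical image embedding and text embeddings *)
toyImgVec = {1., 0.2, 0.};
toyTextVecs = {{0.9, 0.3, 0.1}, {0., 1., 0.}, {-1., 0., 0.2}};
toyDescriptions = {"a dog on a beach", "a city at night", "a bowl of soup"};

(* One row of distances: the image against every description *)
toyDistances = First@DistanceMatrix[{toyImgVec}, toyTextVecs, DistanceFunction -> CosineDistance];

(* Closest descriptions first *)
SortBy[Thread[{toyDescriptions, toyDistances}], Last]
```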

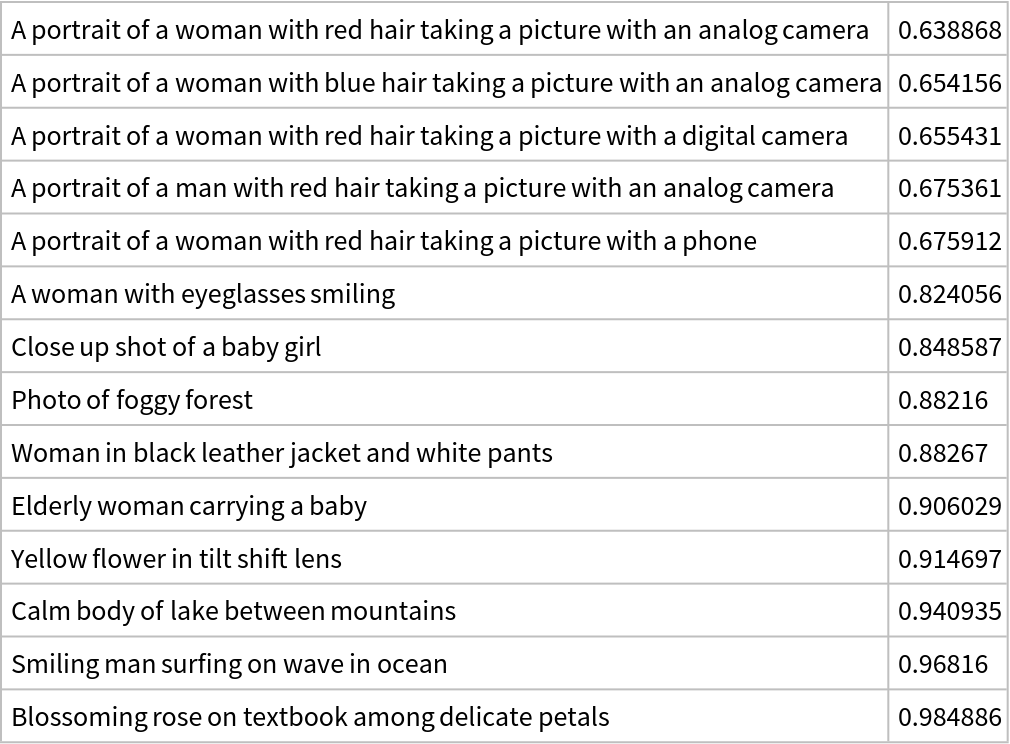

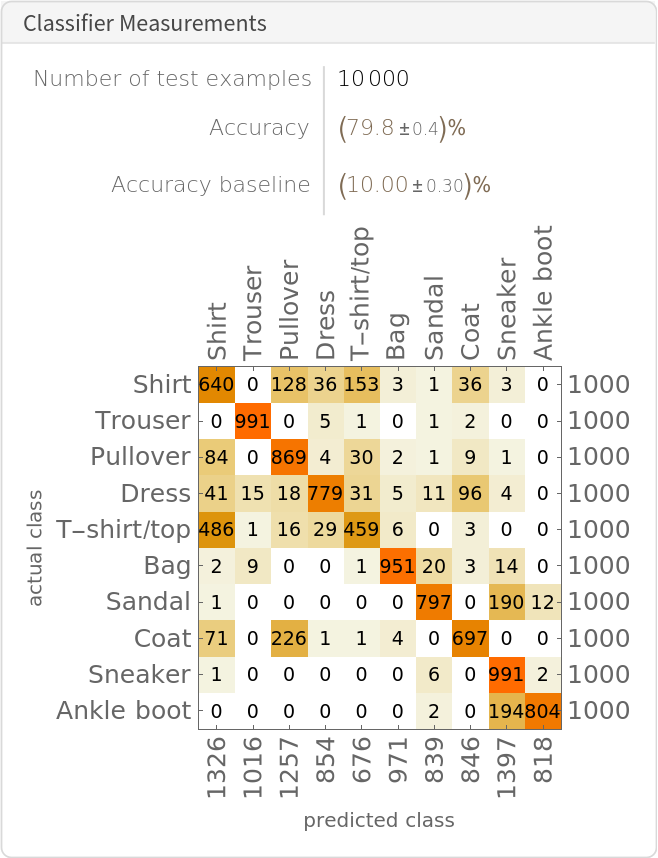

Zero-shot image classification

By using the text and image feature extractors together, it's possible to classify images into any set of classes without explicitly training a model for those particular classes (zero-shot classification). Obtain the FashionMNIST test data, which contains 10,000 images in 10 classes:

Display a few random examples from the set:

Get a mapping between class IDs and labels:

Generate the text templates for the FashionMNIST labels and embed them. The text templates will effectively act as classification labels:
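Each template turns a class name into a short sentence that the text encoder can embed like any other description. The sketch below shows the idea with StringTemplate. The template wording and the label subset are illustrative assumptions, not the exact templates used on this page:

```wolfram
(* A subset of FashionMNIST class names *)
toyLabels = {"Dress", "Coat", "Sneaker"};

(* Hypothetical prompt template; the `` slot receives the class name *)
toyTemplate = StringTemplate["A photo of a ``."];

toyTemplates = toyTemplate[ToLowerCase[#]] & /@ toyLabels
```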

Classify an image from the test set. Obtain its embedding:

The result of the classification is the description of the embedding that is closest to the image embedding:
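Classification therefore reduces to a nearest-neighbor lookup under CosineDistance. The toy sketch below uses hypothetical one-hot label embeddings and the same Nearest form as the top-10 query in this section:

```wolfram
(* Hypothetical label embeddings, one row per class, and an image embedding *)
toyLabelVecs = {{1., 0., 0.}, {0., 1., 0.}, {0., 0., 1.}};
toyLabelNames = {"Trouser", "Sneaker", "Bag"};
toyQuery = {0.1, 0.8, 0.3};

(* Index of the label embedding with the smallest cosine distance *)
toyBest = First@Nearest[toyLabelVecs -> "Index", toyQuery, DistanceFunction -> CosineDistance];

toyLabelNames[[toyBest]]
```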

Find the top 10 descriptions nearest to the image embedding:

Obtain the accuracy of this procedure on the entire test set. Extract the features for all the images (if a GPU is available, setting TargetDevice -> "GPU" is recommended as the computation will take several minutes on CPU):

Calculate the distance matrix between the computed text and image embeddings:

Obtain the top-1 prediction:

Obtain the final classification results:

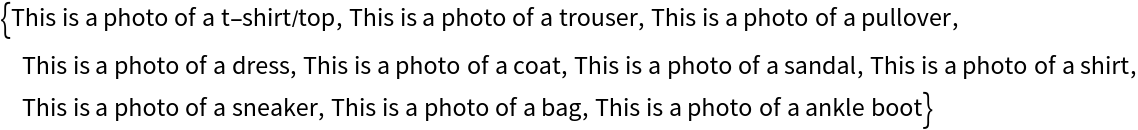
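The distance-matrix steps can be summarized as follows. Rows of the matrix correspond to images and columns to class descriptions. The top-1 prediction for each image is the column with the smallest distance, and accuracy is the fraction of predictions that match the true class. Below is a toy sketch with hypothetical embeddings and labels:

```wolfram
(* Hypothetical embeddings: 4 images and 3 class descriptions *)
toyImageVecs = {{0.9, 0.1, 0.}, {0.1, 0.9, 0.}, {0., 0.2, 0.8}, {0.7, 0.6, 0.}};
toyTextVecs = {{1., 0., 0.}, {0., 1., 0.}, {0., 0., 1.}};
toyTrueClasses = {1, 2, 3, 2};

(* Rows: images; columns: class descriptions *)
toyDM = DistanceMatrix[toyImageVecs, toyTextVecs, DistanceFunction -> CosineDistance];

(* Top-1 prediction: position of the smallest distance in each row *)
toyPredictions = First@Ordering[#, 1] & /@ toyDM;

(* Fraction of images whose prediction matches the true class *)
toyAccuracy = N@Mean@Boole@MapThread[Equal, {toyPredictions, toyTrueClasses}]
```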

Attention visualization for images

Just like the original Vision Transformer (see the model "Vision Transformer Trained on ImageNet Competition Data"), the image feature extractor divides the input image into a 7x7 grid of patches. It then performs self-attention on a set of 50 vectors: 49 vectors, or "tokens," representing the patches, plus an additional "feature extraction token" that is eventually used to produce the final feature representation of the image. Thus, the attention procedure for this model can be visualized by inspecting the attention weights between the feature extraction token and the patch tokens. Define a test image:
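The token count follows from simple arithmetic. The sketch below assumes the standard ViT-B/32 setup of a 224x224 input cut into 32x32-pixel patches; neither value is stated on this page:

```wolfram
(* Assumed input resolution and patch size for the ViT-B/32 image encoder *)
inputSize = 224; patchSize = 32;

gridSide = inputSize/patchSize;  (* patches per side *)
numPatches = gridSide^2;         (* patch tokens *)
numTokens = numPatches + 1       (* plus the feature extraction token *)
```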

Extract the attention weights used for the last block of self-attention:

Extract the attention weights between the feature extraction token and the input patches. These weights can be interpreted as which patches in the original image the net is "looking at" in order to perform the feature extraction:

Reshape the weights as a 3D array of 12 7x7 matrices. Each matrix corresponds to an attention head, while each element of the matrices corresponds to a patch in the original image:
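The sketch below shows the reshaping on random stand-in weights. It assumes a layout of {heads, tokens}, with the feature extraction token at position 1 and the patches in row-major order. These are assumptions about the layout, not facts read from the net. Averaging over the first dimension then gives the single mean map used for the combined visualization:

```wolfram
(* Hypothetical weights from the feature extraction token: 12 heads x 50 tokens *)
SeedRandom[2];
toyWeights = RandomReal[1, {12, 50}];

(* Drop the weight on the feature extraction token (assumed at position 1)
   and fold the remaining 49 patch weights of each head into a 7x7 grid *)
toyHeadMatrices = ArrayReshape[toyWeights[[All, 2 ;;]], {12, 7, 7}];

(* Mean across heads: one 7x7 map *)
toyMeanMatrix = Mean[toyHeadMatrices];

{Dimensions[toyHeadMatrices], Dimensions[toyMeanMatrix]}
```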

Visualize the attention weight matrices. Patches with higher values (red) are what is mostly being "looked at" for each attention head:

Define a function to visualize the attention matrix on an image:

Visualize the mean attention across all the attention heads:

Visualize each attention head separately:

Attention visualization for text

The text feature extractor tokenizes the input string, prepending and appending the special tokens StartOfString and EndOfString. It then adds a special "class" token and performs causal self-attention on the token embedding vectors. After the self-attention stack, the last vector (corresponding to the "class" token) is used to obtain the final feature representation of the text. Thus, the attention procedure for this model can be visualized by inspecting the attention weights between the last vector and the previous ones. Define a test string:
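With causal attention, each token can only attend to itself and to the tokens before it. This is why the vector at the last position, the "class" token, is the only one that can summarize the whole string. The sketch below builds such a mask for an arbitrary length of 6. It illustrates the masking pattern only and does not reproduce the net's internal mask, which may be implemented differently:

```wolfram
(* Entry {i, j} is 1 when token i may attend to token j *)
toyLength = 6;
causalMask = LowerTriangularize[ConstantArray[1, {toyLength, toyLength}]];

(* Number of visible positions per token: only the last row sees all of them *)
Total /@ causalMask
```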

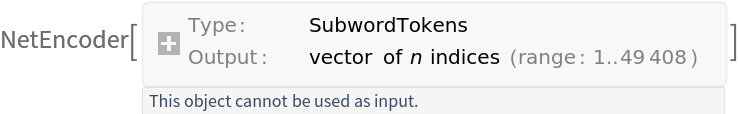

Extract the NetEncoder of the net to encode the string:

Extract the list of available tokens and inspect how the input string was tokenized. Even though the BPE tokenization generally segments the input into subwords, it's common to observe that all tokens correspond to full words. Also observe that the StartOfString and EndOfString tokens are added automatically:

Feed the string to the net and extract the attention weights used for the last block of self-attention. Note that the original implementation handles the attention mask slightly differently:

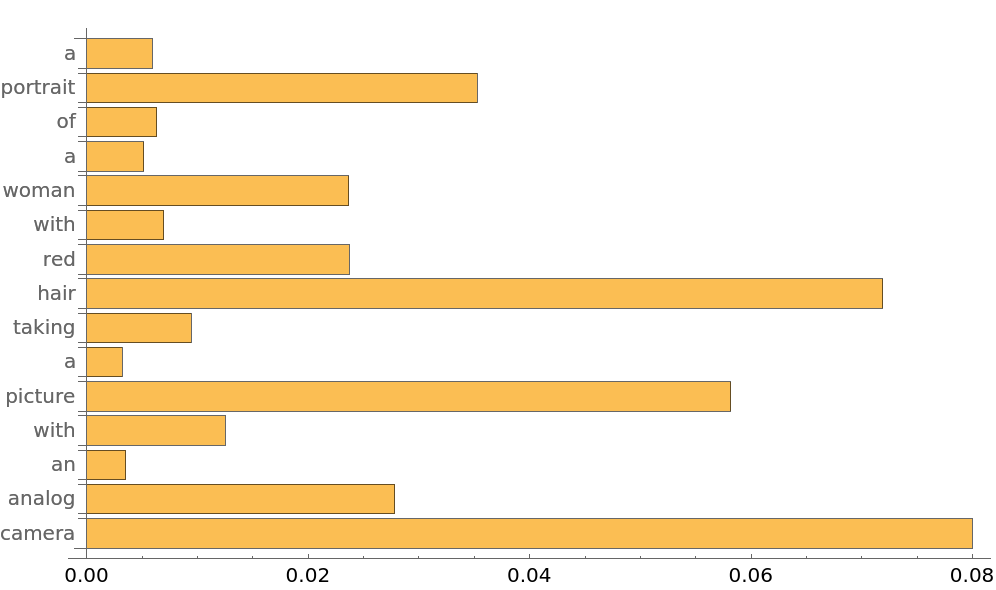

Extract the attention weights between the last vector and the previous ones, leaving out the vectors corresponding to StartOfString, EndOfString and the "class" tokens. These weights can be interpreted as which tokens in the original sentence the net is "looking at" in order to perform the feature extraction:

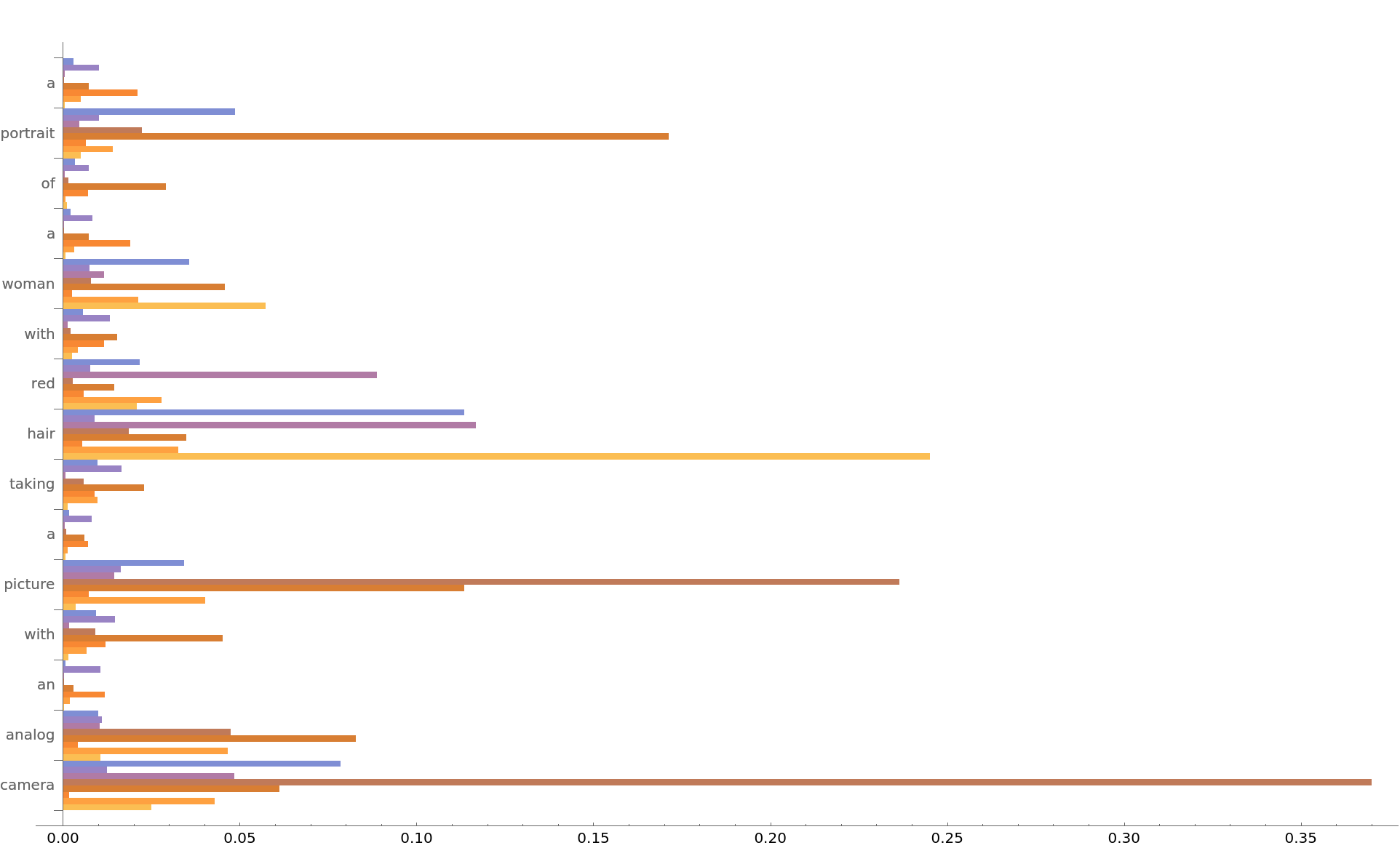

Inspect the average attention weights for each token across the attention heads. Observe that the tokens the net is mostly focused on are "hair," "camera" and "picture":
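The slicing and averaging can be sketched on random stand-in weights. The layout {heads, queries, keys} is an assumption, as is a toy sequence of StartOfString, 4 words, EndOfString and the "class" token. Taking the last query row and keeping key positions 2 through -3 leaves only the word tokens:

```wolfram
(* Hypothetical attention weights: 12 heads, 7 query positions, 7 key positions *)
SeedRandom[3];
toyAttention = RandomReal[1, {12, 7, 7}];

(* Row of the last query (the "class" token), keeping only the 4 word columns *)
classToWords = toyAttention[[All, -1, 2 ;; -3]];

(* Average over heads: one weight per word *)
meanPerWord = Mean[classToWords];

{Dimensions[classToWords], Dimensions[meanPerWord]}
```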

Visualize each head separately:

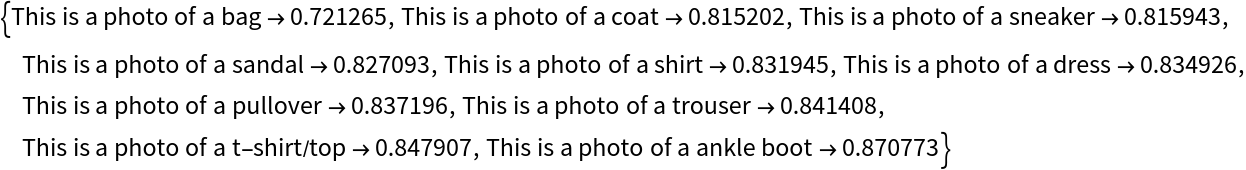

Extract the attention weights for all 12 attention layers:

Compute the average across all heads, leaving the StartOfString and EndOfString tokens out:

Define a function to visualize the attention weights:

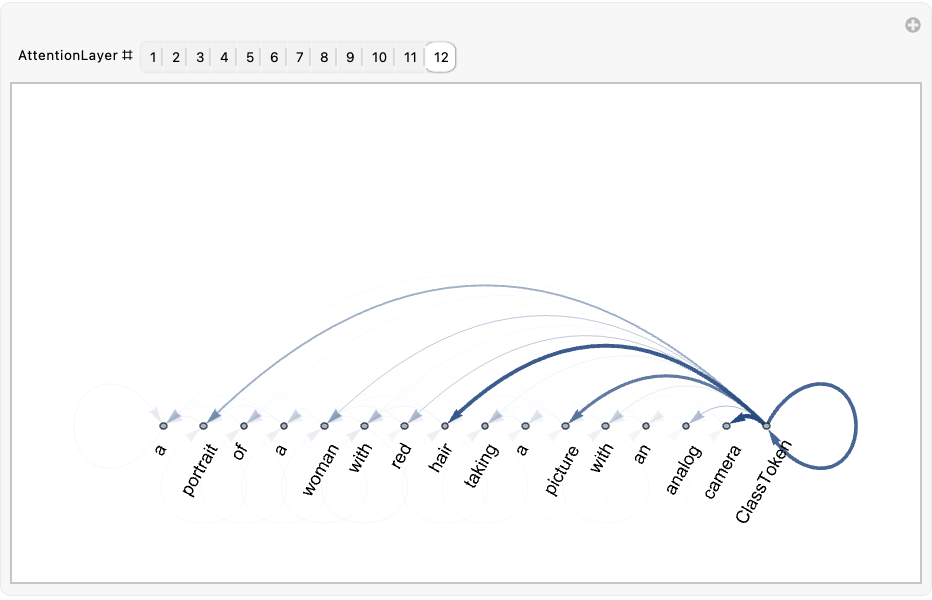

Explore the attention weights for every layer. A thicker arrow pointing from token A to token B indicates that the layer pays more attention to token B when generating the vector corresponding to token A:

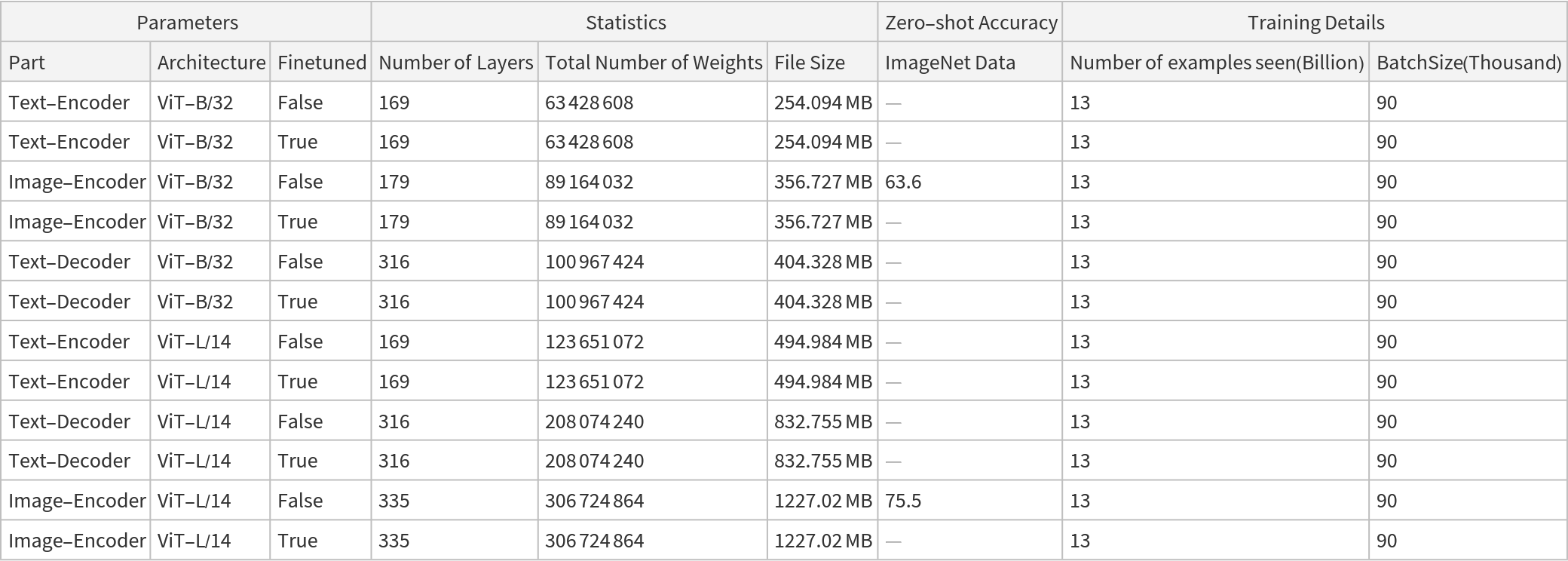

Net information

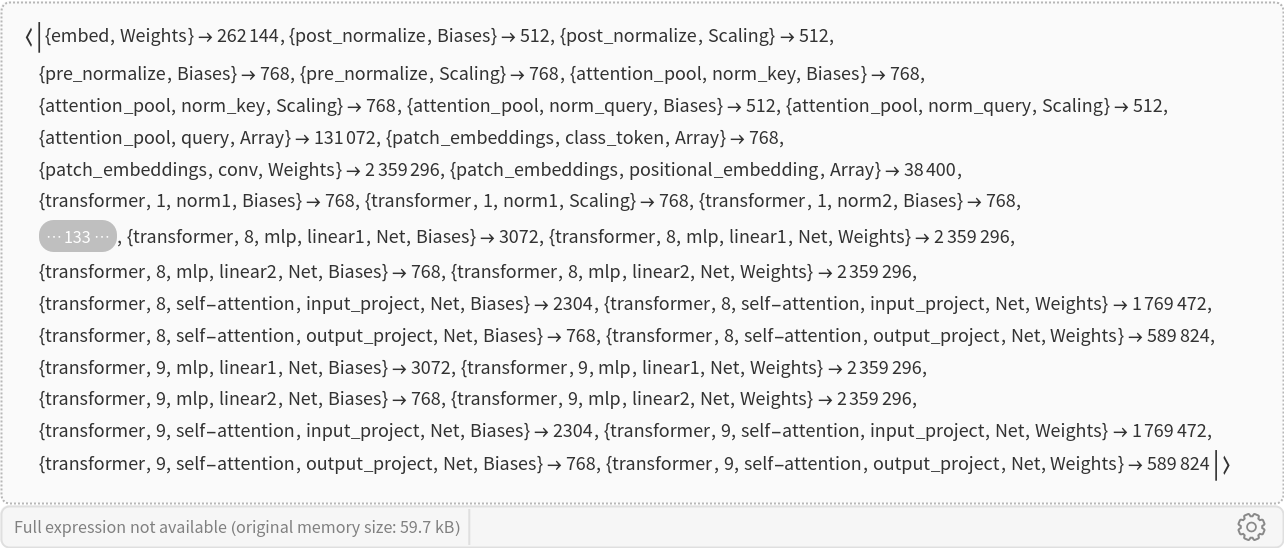

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

![NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Text-Decoder"}, "UninitializedEvaluationNet"]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/02a779a0b69f9180.png)

![decodeStep[textDecoder_, textEncoderBare_, imageEmbeddings_, temperature_, tokenDict_, targetDevice_, topK_][textTokens_] := Append[textTokens,
  textDecoder[
    <|
      "ImageEmbeddings" -> imageEmbeddings,
      "TextEmbeddings" -> textEncoderBare[Lookup[tokenDict, textTokens], NetPort["TextEmbeddings"]]
    |>,
    "RandomSample" -> {"Temperature" -> temperature, "TopProbabilities" -> topK},
    TargetDevice -> targetDevice
  ]
]
Options[netevaluate] = {
  "Architecture" -> "ViT-B/32",
  "Finetuned" -> False,
  "Temperature" -> 0,
  "TopProbabilities" -> 100,
  "NumberOfFrames" -> 16,
  MaxIterations -> 25,
  TargetDevice -> "CPU"
};
netevaluate[input : (_?ImageQ | _?VideoQ), opts : OptionsPattern[]] := Block[
  {imageEmbeddings, textEmbeddings, textTokens, tokenDict, textEncoderBare, decode, imageEncoder, textEncoder, textDecoder, images},
  {imageEncoder, textEncoder, textDecoder} = NetModel[{
      "CoCa Image Captioning Nets Trained on LAION-2B Data",
      "Architecture" -> OptionValue["Architecture"], "Finetuned" -> OptionValue["Finetuned"], "Part" -> #
    }] & /@ {"Image-Encoder", "Text-Encoder", "Text-Decoder"};
  images = Switch[input,
    _?VideoQ,
    VideoFrameList[input, OptionValue["NumberOfFrames"]],
    _?ImageQ,
    input
  ];
  imageEmbeddings = imageEncoder[images, NetPort["ImageEmbeddings"], TargetDevice -> OptionValue[TargetDevice]];
  If[MatchQ[input, _?VideoQ],
    imageEmbeddings = Mean[imageEmbeddings]
  ];
  tokenDict = AssociationThread[# -> Range @ Length @ #] &@ NetExtract[textEncoder, {"Input", "Tokens"}];
  textEncoderBare = NetReplacePart[textEncoder, "Input" -> None];
  decode = decodeStep[textDecoder, textEncoderBare, imageEmbeddings,
    OptionValue["Temperature"], tokenDict, OptionValue[TargetDevice],
    OptionValue["TopProbabilities"]
  ];
  textTokens = NestWhile[
    decode, {StartOfString},
    If[Length[#] > 0, Last[#] =!= EndOfString, True] &,
    1, OptionValue[MaxIterations]
  ];
  StringJoin @ ReplaceAll[textTokens, StartOfString | EndOfString -> ""]
]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/0439ee89653770d3.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/63844ace-6670-4e72-be37-5eaae531d4ed"]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/03f061aadd7a4d89.png)

![Options[prepareNets] = {
  "Architecture" -> "ViT-B/32",
  "Finetuned" -> False
};
prepareNets[opts : OptionsPattern[]] := Block[
  {imageEncoder, textEncoder, textDecoder},
  {imageEncoder, textEncoder, textDecoder} = NetModel[{
      "CoCa Image Captioning Nets Trained on LAION-2B Data",
      "Architecture" -> OptionValue["Architecture"], "Finetuned" -> OptionValue["Finetuned"], "Part" -> #
    }] & /@ {"Image-Encoder", "Text-Encoder", "Text-Decoder"};
  textDecoder = NetUnfold@NetFlatten@NetGraph[{
      "text_decoder" -> textDecoder,
      (*drop the class token and sequence most layer*)
      "text_encoder" -> NetChain[{
        NetExtract[textEncoder, {"input_embeddings", "token_embeddings"}],
        NetTake[NetExtract[textEncoder, "input_embeddings"], {NetPort[{"append", "Output"}], "add"}],
        NetExtract[textEncoder, "transformer"]
      }]
    },
    {NetPort["Input"] -> "text_encoder", "text_encoder" -> NetPort[{"text_decoder", "TextEmbeddings"}]}
  ];
  {imageEncoder, textDecoder}
];](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/5069ab9e6b8d3176.png)

![decodeStepEfficient[textDecoder_, imageEmbeddings_, tokenDict_, temperature_, targetDevice_, topK_][{generatedTokens_, prevState_, index_}] := Block[
  {decoded, inputs, outputPorts},
  inputs = Join[
    <|
      "ImageEmbeddings" -> imageEmbeddings,
      "Index" -> index, "Input" -> Lookup[tokenDict, Last[generatedTokens]]
    |>,
    prevState
  ];
  outputPorts = Prepend[
    NetPort /@ Rest[Information[textDecoder, "OutputPortNames"]],
    NetPort["Output"] -> ("RandomSample" -> {"Temperature" -> temperature, "TopProbabilities" -> topK})
  ];
  decoded = textDecoder[inputs, outputPorts, TargetDevice -> targetDevice];
  {
    Append[generatedTokens, decoded["Output"]],
    KeyMap[
      StringReplace["OutState" -> "State"],
      KeySelect[decoded, StringStartsQ["OutState"]]
    ],
    index + 1
  }
];
Options[netevaluateEfficient] = {
  "Temperature" -> 0,
  "TopProbabilities" -> 100,
  "NumberOfFrames" -> 16,
  MaxIterations -> 25,
  TargetDevice -> "CPU"
};
netevaluateEfficient[input : (_?ImageQ | _?VideoQ), {imageEncoder_, textDecoder_}, opts : OptionsPattern[]] := Block[
  {imageEmbeddings, textEmbeddings, textTokens, decode, images, initStates, tokenDict},
  images = Switch[input,
    _?VideoQ,
    VideoFrameList[input, OptionValue["NumberOfFrames"]],
    _?ImageQ,
    input
  ];
  imageEmbeddings = imageEncoder[images, NetPort["ImageEmbeddings"], TargetDevice -> OptionValue[TargetDevice]];
  If[MatchQ[input, _?VideoQ],
    imageEmbeddings = Mean[imageEmbeddings]
  ];
  initStates = AssociationMap[
    Function[x, {}],
    Select[Information[textDecoder, "InputPortNames"], StringStartsQ["State"]]
  ];
  tokenDict = AssociationThread[# -> Range @ Length @ #] &@ NetExtract[textDecoder, {"Output", "Labels"}];
  decode = decodeStepEfficient[textDecoder, imageEmbeddings, tokenDict, OptionValue["Temperature"], OptionValue[TargetDevice], OptionValue["TopProbabilities"]
  ];
  textTokens = First@ NestWhile[
    decode, {{StartOfString}, initStates, 1},
    Last[First@#] =!= EndOfString &,
    1, OptionValue[MaxIterations]
  ];
  StringJoin @ ReplaceAll[textTokens, StartOfString | EndOfString -> ""]
]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/007227bedd0d671a.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/7c149292-bc7d-4529-90b8-88784e68ebca"]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/60a8d631f670fa83.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/038a536e-1280-4011-b69a-53f4b9ceeeff"]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/5b43c7ad61fcaa5b.png)

![FeatureSpacePlot[
  Thread[NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Image-Encoder"}][imgs, NetPort[{"embed", "Output"}]] -> imgs],
  LabelingSize -> 70,
  LabelingFunction -> Callout,
  ImageSize -> 700,
  Method -> "TSNE",
  AspectRatio -> 0.9
]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/3aa46ca0856515b1.png)

![FeatureSpacePlot[
  Thread[NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Text-Encoder"}][sentences, NetPort[{"normalize", "Output"}]] -> (Tooltip[Style[Text@#, Medium]] & /@ sentences)],
  LabelingSize -> {90, 60}, RandomSeeding -> 23,
  LabelingFunction -> Callout,
  ImageSize -> 700,
  AspectRatio -> 0.9
]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/23595357a509244c.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/09a89369-b737-4925-9311-106245696450"]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/1d7bc30afad56962.png)

![textFeatures = NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Text-Encoder"}][descriptions, NetPort["ClassEmbedding"]];
imgFeatures = NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Image-Encoder"}][img, NetPort["ClassEmbedding"]];](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/43ea06b82f024f74.png)

![Dataset@SortBy[
  Thread[{descriptions, First@DistanceMatrix[{imgFeatures}, textFeatures, DistanceFunction -> CosineDistance]}], Last]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/55099cf210027279.png)

![textEmbeddings = NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Text-Encoder"}][labelTemplates, NetPort["ClassEmbedding"]];](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/1e4ca64a57da59a8.png)

![imgFeatures = NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Image-Encoder"}][img, NetPort["ClassEmbedding"]];](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/4d687005e2e0fa42.png)

![SortBy[Rule @@@ MapAt[labelTemplates[[#]] &, Nearest[textEmbeddings -> {"Index", "Distance"}, imgFeatures, 10, DistanceFunction -> CosineDistance], {All, 1}], Last]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/0e130995b9b2e216.png)

![imageEmbeddings = NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Image-Encoder"}][testData[[All, 1]], NetPort["ClassEmbedding"], TargetDevice -> "CPU"];](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/508bc71458c47c06.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/c261a393-840a-4320-aa70-1948a2fadf14"]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/505821fb4c911ee5.png)

![attentionMatrix = Transpose@
  NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Image-Encoder"}][testImage, NetPort[{"transformer", -1, "self-attention", "attention", "AttentionWeights"}]];](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/4a231dfbd0949824.png)

![visualizeAttention[img_Image, attentionMatrix_] := Block[{heatmap, wh},
  wh = ImageDimensions[img];
  heatmap = ImageApply[{#, 1 - #, 1 - #} &, ImageAdjust@Image[attentionMatrix]];
  heatmap = ImageResize[heatmap, wh*256/Min[wh]];
  ImageCrop[ImageCompose[img, {ColorConvert[heatmap, "RGB"], 0.4}], ImageDimensions[heatmap]]
]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/24cfdbb8fe2ff674.png)

![netEnc = NetExtract[
  NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Text-Encoder"}], "Input"]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/7abb5e9405967429.png)

![attentionMatrix = Transpose@
  NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Text-Encoder"}][text, NetPort[{"transformer", -1, "self-attention", "attention", "AttentionWeights"}]];
Dimensions[attentionMatrix]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/6dcd57caf4d1d10b.png)

![allAttentionWeights = Transpose[
  Values@NetModel[{"CoCa Image Captioning Nets Trained on LAION-2B Data", "Part" -> "Text-Encoder"}][text, spec], 2 <-> 3];
Dimensions[allAttentionWeights]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/3727cbb6f88b43b3.png)

![pos = Append[Range[2, 16], 18];
avgAttentionWeights = ArrayReduce[Mean, allAttentionWeights, 2][[All, pos, pos]];
Dimensions[avgAttentionWeights]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/4d1f36fb382a5f57.png)

![visualizeTokenAttention[attnMatrix_] := Block[{g, style},
  g = WeightedAdjacencyGraph[attnMatrix];
  style = Thread@Directive[
    Arrowheads[.02],
    Thickness /@ (Rescale[AnnotationValue[g, EdgeWeight]]/200),
    Opacity /@ Rescale@AnnotationValue[g, EdgeWeight]
  ];
  Graph[g, GraphLayout -> "LinearEmbedding", EdgeStyle -> Thread[EdgeList[g] -> style], VertexLabels -> Thread[Range[16] -> Map[Rotate[Style[Text[#], 12, Bold], 60 Degree] &, Append[tokens[[2 ;; -2]], "ClassToken"]]], VertexCoordinates -> Table[{i, 0}, {i, Length[attnMatrix]}], ImageSize -> Large]
]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/6c0db3064a6fca52.png)

![Manipulate[
  visualizeTokenAttention@avgAttentionWeights[[i]],
  {{i, 12, "AttentionLayer #"}, Range[12]}, ControlType -> SetterBar]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/11c0cad3d0703fc9.png)

![Information[NetModel["CoCa Image Captioning Nets Trained on LAION-2B Data"], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/578c9f08bd9a9018.png)

![Information[NetModel["CoCa Image Captioning Nets Trained on LAION-2B Data"], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/4d8e31d8cc58704d.png)

![Information[NetModel["CoCa Image Captioning Nets Trained on LAION-2B Data"], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/d05/d0558f8f-7edc-42db-bff9-62e67ff3a1fe/2749fcdc3cf6c9bf.png)