OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data

Released in 2015, this facial feature extractor is based on the Inception architecture and was trained to map facial images directly to 128-dimensional feature vectors. Training used triplets of examples, each containing two images of the same person and one image of a different person. The embedding is optimized so that images sharing an identity lie close together in the feature space, while images of different identities lie farther apart.
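The triplet objective described above can be illustrated with a small sketch. The page does not state the exact loss, so this assumes the commonly used margin-based triplet loss on squared Euclidean distances; the margin of 0.2, the unit normalization, and the random stand-in vectors are all assumptions made for illustration, not values taken from this resource.

```wolfram
(* Hypothetical 128-dimensional embeddings; random unit vectors stand in for net outputs *)
SeedRandom[1];
unit[v_] := v/Norm[v];
anchor = unit[RandomReal[{-1, 1}, 128]];
positive = unit[anchor + 0.05 RandomReal[{-1, 1}, 128]]; (* same identity: small perturbation of the anchor *)
negative = unit[RandomReal[{-1, 1}, 128]];               (* different identity: unrelated vector *)

(* Margin-based triplet loss; the default margin 0.2 is an assumed value *)
tripletLoss[a_, p_, n_, margin_ : 0.2] :=
  Max[SquaredEuclideanDistance[a, p] - SquaredEuclideanDistance[a, n] + margin, 0];

(* Loss for a well-separated triplet, and for the same triplet with roles swapped *)
{tripletLoss[anchor, positive, negative], tripletLoss[anchor, negative, positive]}
```

The loss is zero once the positive is closer to the anchor than the negative by at least the margin, which is what pushes same-identity images together during training.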

Number of layers: 172

Parameter count: 3,743,280

Trained size: 15 MB

Examples

Resource retrieval

Get the pre-trained net:
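A minimal sketch of this step, using the model name that appears in the page's own input cells. The variable name `net` is introduced here for convenience, and network access is assumed because the first evaluation downloads the net.

```wolfram
(* Retrieve the pre-trained net from the Wolfram Neural Net Repository *)
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"]
```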

Basic usage

Compute a feature vector for a given image:
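The page's input cell applies the net directly to an embedded face image. The sketch below follows that form but loads the image from a hypothetical local file, `face.jpg`, which is an assumption; any cropped face image should work in its place.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];

(* "face.jpg" is a hypothetical path used only for illustration *)
img = Import["face.jpg"];

(* Apply the net to the image to obtain its feature vector *)
features = net[img];
```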

Get the length of the feature vector:
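A short sketch of the length check, again assuming a hypothetical image file `face.jpg`. According to the description at the top of the page, the result should be 128.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];
features = net[Import["face.jpg"]]; (* hypothetical image path *)

(* Dimensionality of the embedding; the page describes 128-dimensional vectors *)
Length[features]
```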

Use a batch of face images:
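The page supplies its batch through a placeholder cell that, when evaluated in a notebook, is meant to fetch the original example input. The sketch below reproduces that cell and, as an assumed alternative, builds a list named `faces` from a hypothetical local folder of JPEG files.

```wolfram
(* Input cell as shown on the page; evaluating it is expected to fetch the example input *)
CloudGet["https://www.wolframcloud.com/obj/ff24125f-4c9e-403d-9d1c-fe29fb9e370d"]

(* Assumed alternative: load your own face images from a hypothetical folder "faces" *)
faces = Import /@ FileNames["*.jpg", "faces"];
```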

Compute the feature vectors for the batch of images, and obtain the dimensions of the features:
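A sketch of the batch evaluation, following the input cell recorded for this page. The folder `faces` is a hypothetical stand-in for the example batch; for n images the dimensions should come out as {n, 128}.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];
faces = Import /@ FileNames["*.jpg", "faces"]; (* hypothetical folder of face images *)

(* Applying the net to a list of images evaluates them as a batch *)
features = net[faces];

(* One row per image, one column per embedding dimension *)
Dimensions[features]
```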

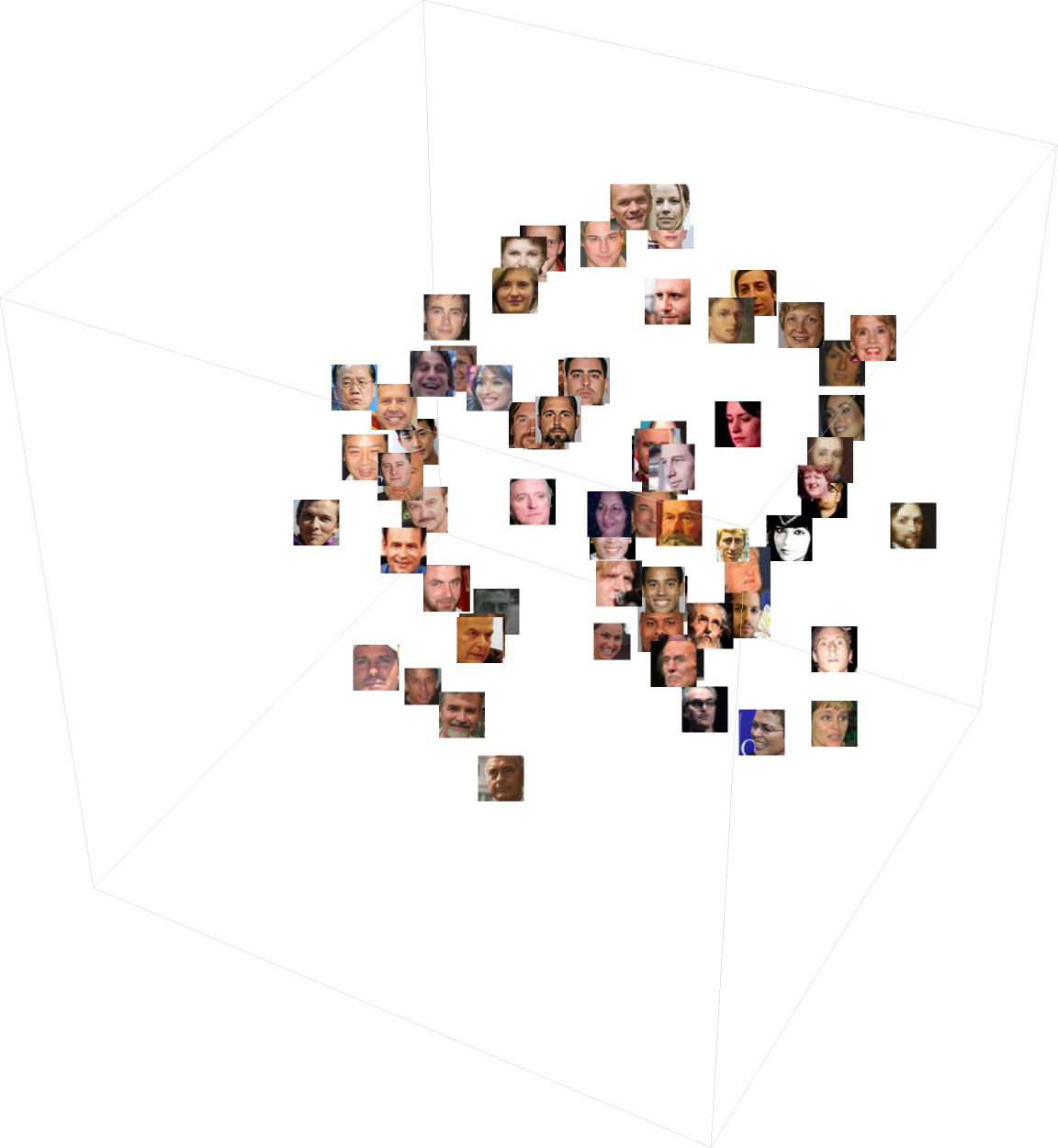

Visualize the features in two and three dimensions:
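The two plotting calls below follow the input cells recorded for this page, with the net passed as the feature extractor. The list `faces` is built here from a hypothetical local folder, which is an assumption.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];
faces = Import /@ FileNames["*.jpg", "faces"]; (* hypothetical folder of face images *)

(* Two-dimensional layout of the images, positioned by their embeddings *)
FeatureSpacePlot[faces, FeatureExtractor -> net, ImageSize -> Large]

(* The same layout in three dimensions *)
FeatureSpacePlot3D[faces, FeatureExtractor -> net, ImageSize -> Large]
```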

Obtain the five closest faces to a given one:
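This sketch mirrors the page's `FeatureNearest` call, which takes the batch, a query image, the number of neighbors, and the net as feature extractor. The query file `face.jpg` and the folder `faces` are hypothetical; the folder is assumed to hold at least five images.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];
faces = Import /@ FileNames["*.jpg", "faces"]; (* hypothetical folder of face images *)
query = Import["face.jpg"];                    (* hypothetical query image *)

(* Five images from the batch whose embeddings are nearest to the query's *)
closest = FeatureNearest[faces, query, 5, FeatureExtractor -> net]
```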

Net information

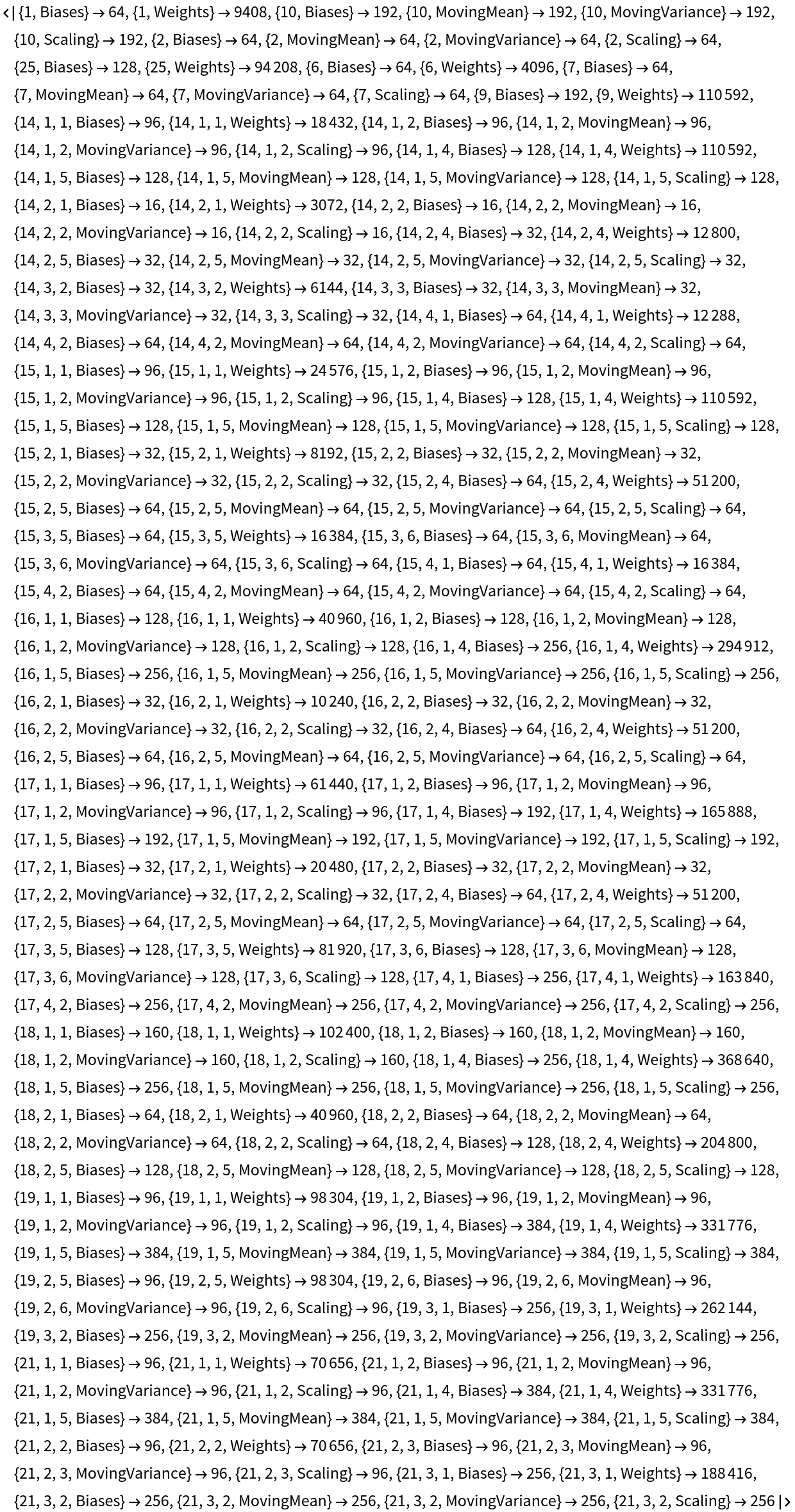

Inspect the number of parameters of all arrays in the net:
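A sketch of the per-array inspection, using the `NetInformation` property named in the page's input cell. The variable names are introduced here for convenience.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];

(* Element count of every array (weights, biases, and so on) in the net *)
counts = NetInformation[net, "ArraysElementCounts"]
```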

Obtain the total number of parameters:
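The total can be requested with the property shown in the page's input cell. Based on the parameter count listed at the top of the page, the result should be 3,743,280.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];

(* Sum of the element counts over all arrays in the net *)
total = NetInformation[net, "ArraysTotalElementCount"]
```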

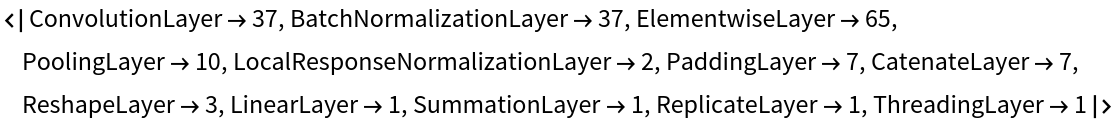

Obtain the layer type counts:
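A sketch of the layer tally, using the property from the page's input cell.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];

(* Number of layers of each type *)
layerCounts = NetInformation[net, "LayerTypeCounts"]
```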

Display the summary graphic:
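The summary graphic is requested the same way, with the property shown in the page's input cell.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];

(* Diagram summarizing the structure of the net *)
NetInformation[net, "SummaryGraphic"]
```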

Export to MXNet

Export the net into a format that can be opened in MXNet:
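The input cell for this step did not survive on the page, so the following is a sketch under assumptions: it uses the "MXNet" export format with a `net.json` file in the temporary directory, and whether that format is available depends on the Wolfram Language version in use. The name `jsonPath` is introduced here.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];

(* Assumed target file; Export returns the path it wrote *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"]
```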

Export also creates a net.params file containing parameters:
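Assuming the export wrote `net.json` to the temporary directory, the parameter file named in the text should sit next to it. The path construction below is a sketch, not the page's original cell.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"]; (* assumed target file *)

(* net.params is expected in the same directory as the exported JSON file *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}]
```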

Get the size of the parameter file:
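A sketch of the size check, repeating the assumed export paths. Given the trained size listed at the top of the page, the result should be on the order of 15 MB.

```wolfram
net = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"];
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"]; (* assumed target file *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}];

(* Size of the parameter file in bytes *)
FileByteCount[paramPath]
```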

The size is similar to the byte count of the resource object:
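For the comparison, the byte count can be read from the resource object. The property name "ByteCount" is an assumption based on how repository resources usually expose their size; the original input cell is not present on the page.

```wolfram
(* Byte count recorded for the repository resource (assumed property name) *)
bytes = ResourceObject["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"]["ByteCount"]
```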

Requirements

Wolfram Language 11.3 (March 2018) or above


![features = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"][(face image)];](https://www.wolframcloud.com/obj/resourcesystem/images/868/8687829f-8191-4724-91fe-4d8618d93b36/20d337546a8f9a9d.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/ff24125f-4c9e-403d-9d1c-fe29fb9e370d"]](https://www.wolframcloud.com/obj/resourcesystem/images/868/8687829f-8191-4724-91fe-4d8618d93b36/0b8588c448572f4c.png)

![features = NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"][faces];](https://www.wolframcloud.com/obj/resourcesystem/images/868/8687829f-8191-4724-91fe-4d8618d93b36/0e025e9cf3e9a8c7.png)

![FeatureSpacePlot[faces, FeatureExtractor -> NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"], ImageSize -> Large]](https://www.wolframcloud.com/obj/resourcesystem/images/868/8687829f-8191-4724-91fe-4d8618d93b36/0cf0e212db748ef1.png)

![FeatureSpacePlot3D[faces, FeatureExtractor -> NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"], ImageSize -> Large]](https://www.wolframcloud.com/obj/resourcesystem/images/868/8687829f-8191-4724-91fe-4d8618d93b36/699164416eb015d7.png)

![FeatureNearest[faces, (face image), 5, FeatureExtractor -> NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"]]](https://www.wolframcloud.com/obj/resourcesystem/images/868/8687829f-8191-4724-91fe-4d8618d93b36/7470bfd1370b495e.png)

![NetInformation[NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/868/8687829f-8191-4724-91fe-4d8618d93b36/7437784b59baf5c4.png)

![NetInformation[NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/868/8687829f-8191-4724-91fe-4d8618d93b36/7113b882800d62b6.png)

![NetInformation[NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/868/8687829f-8191-4724-91fe-4d8618d93b36/5b89b4e80a2b5d8a.png)

![NetInformation[NetModel["OpenFace Face Recognition Net Trained on CASIA-WebFace and FaceScrub Data"], "SummaryGraphic"]](https://www.wolframcloud.com/obj/resourcesystem/images/868/8687829f-8191-4724-91fe-4d8618d93b36/5fd8003a07920677.png)