Resource retrieval

Get the pre-trained net:

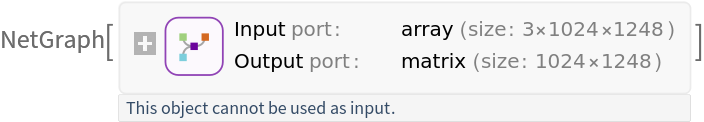

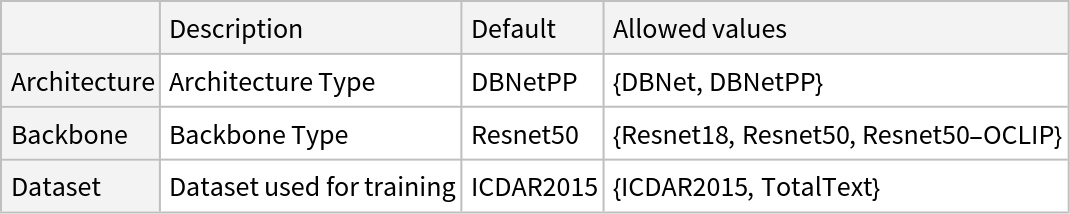

NetModel parameters

This model consists of a family of individual nets, each identified by a specific architecture. Inspect the available parameters:

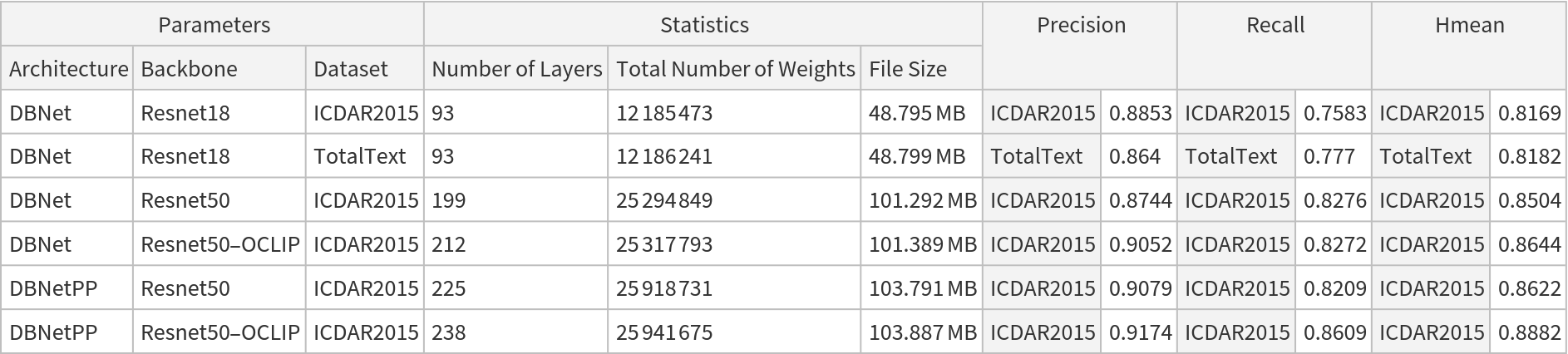

Pick a non-default net by specifying the architecture:

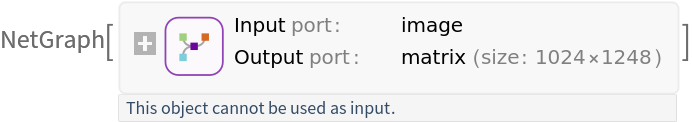

Pick a non-default uninitialized net:

Evaluation function

Write an evaluation function to scale the result to the input image size and suppress the least probable detections:
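The suppression step can be illustrated on toy data. This is only a minimal sketch, not the evaluation function itself: the boxes, scores and the names `toyBoxes`, `toyScores` and `keepQ` are made up for illustration, and the threshold mirrors the default "AcceptanceThreshold" of 0.1.

```wolfram
(* Toy detections: made-up boxes and scores, for illustration only *)
toyBoxes = {
   Parallelogram[{0, 0}, {{10, 0}, {0, 4}}],
   Parallelogram[{20, 5}, {{30, 0}, {0, 8}}],
   Parallelogram[{5, 30}, {{2, 0}, {0, 1}}]
   };
toyScores = {0.82, 0.05, 0.40};

(* Keep a detection only if its score reaches the acceptance threshold *)
acceptanceThreshold = 0.1;
keepQ = Map[# >= acceptanceThreshold &, toyScores];

(* Pick applies the same True/False selection to boxes and scores *)
selectedBoxes = Pick[toyBoxes, keepQ]
selectedScores = Pick[toyScores, keepQ]
```

Here the second box is dropped because its score of 0.05 falls below the threshold.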

Basic usage

Obtain the detected bounding boxes with their corresponding scores for a given image:

The model's output is an Association containing the detected "BoundingBoxes" and "Scores":

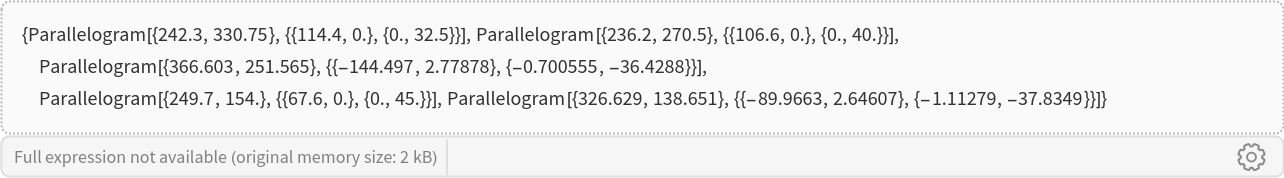

The value under the "BoundingBoxes" key is a list of Parallelogram expressions corresponding to the bounding regions of the detected objects:
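For readers unfamiliar with the primitive, a Parallelogram is stored as one corner plus two edge vectors. The sketch below takes a made-up box apart (`toyBox` and its coordinates are assumptions for illustration, not model output):

```wolfram
(* A made-up box: corner p plus two edge vectors v1 and v2 *)
toyBox = Parallelogram[{2, 1}, {{10, 0}, {0, 4}}];
{p, {v1, v2}} = List @@ toyBox;

(* The four corners, listed around the boundary *)
corners = {p, p + v1, p + v1 + v2, p + v2}

(* Center and area follow from the same three pieces *)
center = p + (v1 + v2)/2
area = Abs[Det[{v1, v2}]]
```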

Visualize the bounding regions:

The "Scores" key contains the score values of the detected objects:
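In the evaluation function, each score is the mean of the masked probability values over the crop around a detected region. A minimal sketch of that computation on a made-up 2×2 crop (the pixel values and the names `toyProb`, `toyMask` and `toyScore` are assumptions for illustration):

```wolfram
(* Made-up 2x2 probability crop *)
toyProb = Image[{{0.2, 0.8}, {0.6, 0.4}}];

(* Pixels above the threshold form the mask *)
toyMask = Binarize[toyProb, 0.5];

(* Zero out the probabilities outside the mask, then average over the crop *)
toyScore = ImageMeasurements[ImageMultiply[toyProb, toyMask], "Mean"]
```

Only the 0.8 and 0.6 pixels survive the mask, so the score is about (0.8 + 0.6)/4 = 0.35.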

Advanced usage

Get an image:

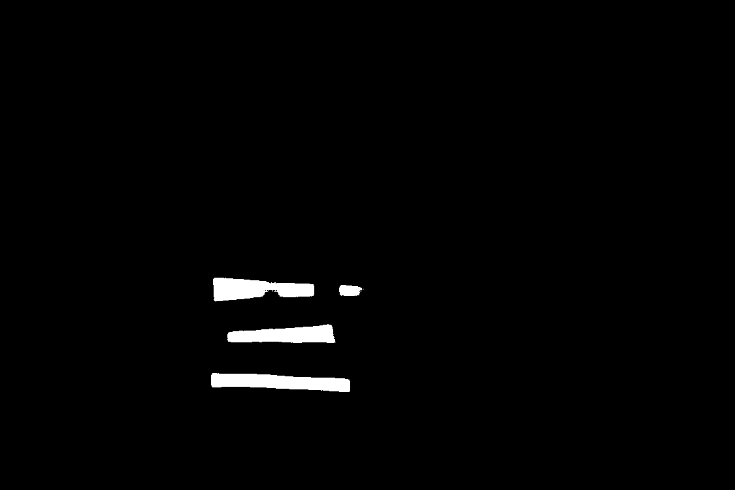

Get the text region mask via the option "Output" -> "Masks" and visualize it:

Obtain the boxes using the default evaluation and visualize them:

Increase the "MinPerimeter" to remove small boxes:
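The effect of this option can be sketched on toy boxes, assuming the perimeter is taken as twice the sum of the two edge lengths. The boxes and the helper name `toyPerimeter` are hypothetical; 13 matches the default "MinPerimeter", while 30 is an arbitrary larger value.

```wolfram
(* Perimeter of a parallelogram: twice the sum of its edge lengths *)
toyPerimeter[Parallelogram[_, {e1_, e2_}]] := 2 (Norm[e1] + Norm[e2]);

toyBoxes = {
   Parallelogram[{0, 0}, {{10, 0}, {0, 4}}],   (* perimeter 28 *)
   Parallelogram[{20, 5}, {{30, 0}, {0, 8}}],  (* perimeter 76 *)
   Parallelogram[{5, 30}, {{2, 0}, {0, 1}}]    (* perimeter 6 *)
   };

(* Keep only boxes whose perimeter reaches the minimum *)
keptAtDefault = Select[toyBoxes, toyPerimeter[#] >= 13 &]
keptAtLarger = Select[toyBoxes, toyPerimeter[#] >= 30 &]
```

Raising the minimum from 13 to 30 removes the 10×4 box in addition to the tiny one.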

Visualize the selected and filtered out boxes:

Increase the "AcceptanceThreshold" to remove low probability boxes:

Visualize the selected and filtered out boxes:

Change the box padding with the "ScaledPadding" option:
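Padding amounts to scaling each box about its center. The sketch below is a simplified stand-in for that idea, not the scaling helper used by the evaluation function (which first orders the sides by length); `toyScale` and `toyBox` are hypothetical names, and {1.3, 2.5} mirrors the default "ScaledPadding".

```wolfram
(* Scale both edge vectors and shift the corner so the center stays put *)
toyScale[Parallelogram[origin_, {e1_, e2_}], {s1_, s2_}] :=
  With[{c = origin + (e1 + e2)/2},
   Parallelogram[c - (s1 e1 + s2 e2)/2, {s1 e1, s2 e2}]];

toyBox = Parallelogram[{0, 0}, {{10, 0}, {0, 4}}];
paddedBox = toyScale[toyBox, {1.3, 2.5}]
```

The box grows from 10×4 to 13×10 while its center remains at {5, 2}.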

Visualize the original and padded boxes:

The "MaskThreshold" option helps filter noisy detections. Increasing it keeps only the boxes backed by the strongest regions of the probability map:
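To see why a higher threshold removes weak regions, consider a made-up probability map with one faint and one strong blob. This is an illustrative sketch only; `toyProbMap` and `regionCount` are hypothetical names and the pixel values are invented.

```wolfram
(* Made-up probability map: a faint blob (0.4) and a strong blob (0.9) *)
toyProbMap = Image[{
    {0.0, 0.4, 0.4, 0.0, 0.9, 0.9},
    {0.0, 0.4, 0.4, 0.0, 0.9, 0.9},
    {0.0, 0.0, 0.0, 0.0, 0.0, 0.0}
    }];

(* Number of connected regions that survive a given threshold *)
regionCount[t_] := Max[MorphologicalComponents[Binarize[toyProbMap, t]]];

regionCount[0.3]  (* both blobs survive *)
regionCount[0.6]  (* only the strong blob survives *)
```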

Visualize the selected boxes:

Network result

Get an image:

Get the probability map for the detected text:

Adjust the result dimensions to the original image shape:
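The adjustment crops the probability map to the aspect ratio of the original image and then resizes it. The crop-size arithmetic can be sketched on its own; `toyCropSize` is a hypothetical helper, and the 640×640 map size is an assumed value for illustration, not the documented output size of the net. Aspect ratios are height divided by width, as returned by ImageAspectRatio.

```wolfram
(* Crop size for a map of size {w, h}, given the aspect ratios
   (height/width) of the map and of the original image *)
toyCropSize[{w_, h_}, mapRatio_, imageRatio_] :=
  If[mapRatio/imageRatio > 1,
   {w, w imageRatio},   (* map is relatively taller: keep the width *)
   {h/imageRatio, h}    (* map is relatively wider: keep the height *)
   ];

toyCropSize[{640, 640}, 1, 1/2]  (* wide image *)
toyCropSize[{640, 640}, 1, 2]    (* tall image *)
```

For a wide image the crop is {640, 320}, and for a tall image it is {320, 640}. ImageCrop takes the crop from the center by default, and ImageResize then brings it to the original image dimensions.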

Visualize the probability map:

Binarize the probability map to obtain the mask:

Visualize the bounding boxes around the masked regions:

Scale the boxes to cover the whole text:

Net information

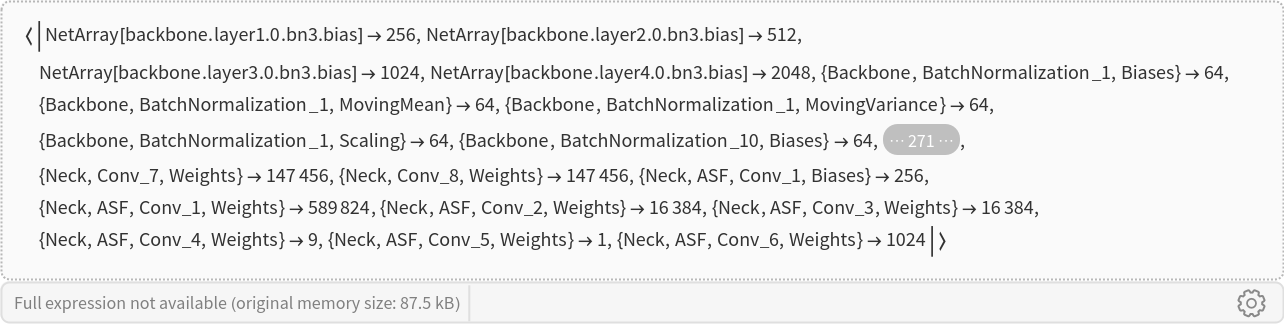

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

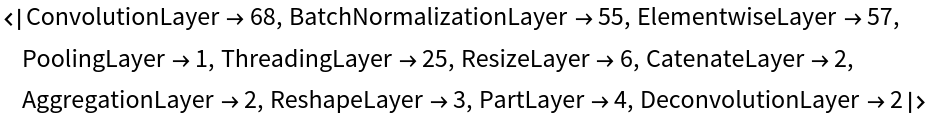

Obtain the layer type counts:

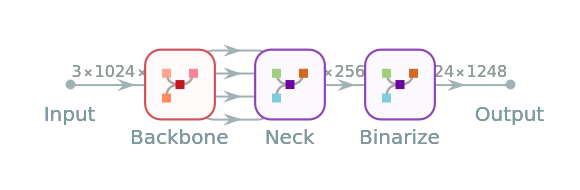

Display the summary graphic:

Export to ONNX

Export the net to the ONNX format:

Get the size of the ONNX file:

The size is similar to the byte count of the resource object:

Check some metadata of the ONNX model:

Import the model back into Wolfram Language. The NetEncoder and NetDecoder will be absent because ONNX does not support them:

![NetModel["DBNet Text Detector Trained on ICDAR-2015 and Total-Text Data", "ParametersInformation"]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/28d1a4eca7425c17.png)

![NetModel[{"DBNet Text Detector Trained on ICDAR-2015 and Total-Text Data", "Architecture" -> "DBNet", "Backbone" -> "Resnet18", "Dataset" -> "TotalText"}]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/2da73842ab6371fa.png)

![NetModel[{"DBNet Text Detector Trained on ICDAR-2015 and Total-Text Data", "Architecture" -> "DBNetPP", "Backbone" -> "Resnet50-OCLIP"}, "UninitializedEvaluationNet"]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/7ffd610b690d1f18.png)

![xywha2Parallelogram[input_] := Block[{cp, w, h, a, r, pts},

cp = input[[;; 2]];

{w, h} = input[[3 ;; 4]] / 2;

a = N[input[[5]]];

r = {cp - {w, h}, cp + {w, -h}, cp + {-w, h}};

pts = RotationTransform[a, cp][r];

Parallelogram[pts[[1]], {pts[[2]] - pts[[1]], pts[[3]] - pts[[1]]}]

];

parallelogram2xywha[Parallelogram[r1_, {v1_, v2_}]] := Block[{n1, n2, w, h, a},

{n1, n2} = {Norm[v1], Norm[v2]};

If[n1 === 0. || n2 === 0., Return[{Sequence @@ ( r1 + (v1 + v2) / 2), 0., 0., 0.}];];

If[ n1 > n2,

w = n1; h = n2; a = ArcTan[Sequence @@ v1],

w = n2; h = n1; a = ArcTan[Sequence @@ v2]

];

(*If[a > Pi/2, a = Pi - a];*)

{Sequence @@ ( r1 + (v1 + v2) / 2), w, h, a}

];](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/7f4049f2dad723ba.png)

![scaleParallelogram[pgram_, scale_] := Module[{cpx, cpy, w, h, a}, {cpx, cpy, w, h, a} = parallelogram2xywha[pgram];

xywha2Parallelogram[{cpx, cpy, scale[[1]]*w, scale[[2]]*h, a}]

];

parallelogramPerimeter[Parallelogram[r1_, {v11_, v12_}]] := 2*(Norm[v12] + Norm[v11]);

filterLowScoreDetections[{boundingParallelograms_, scores_}, acceptanceThreshold_] := Transpose@Select[

Transpose[{boundingParallelograms, scores}], #[[2]] >= acceptanceThreshold &];

filterSmallPerimeterDetections[{boundingParallelograms_, scores_}, minPerimeter_] := Transpose@Select[

Transpose[{boundingParallelograms, scores}], parallelogramPerimeter[#[[1]]] >= minPerimeter &];](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/06baf180363992e0.png)

![Options[netevaluate] = { "MaskThreshold" -> 0.3, "AcceptanceThreshold" -> 0.1, "MinPerimeter" -> 13, "ScaledPadding" -> {1.3, 2.5}, "Output" -> "Regions" | "Masks"};

netevaluate[img_, OptionsPattern[]] := Module[

{probabilitymap, inputImageDims, w, h, ratio, tRatio, probmap, probmapdims, mask, contours, boundingRects,

probcrops, maskcrops, scores, boundingParallelograms, res}, probabilitymap = Image[NetModel[

"DBNet Text Detector Trained on ICDAR-2015 and Total-Text Data"][

img]];

(* Scale probability map to original image dimensions *)

inputImageDims = ImageDimensions[img];

{w, h} = ImageDimensions[probabilitymap];

ratio = ImageAspectRatio[img];

tRatio = ImageAspectRatio[probabilitymap];

probmap = If[tRatio/ratio > 1,

ImageResize[ImageCrop[probabilitymap, {w, w*ratio}], inputImageDims],

ImageResize[ImageCrop[probabilitymap, {h /ratio, h}], inputImageDims]

];

probmapdims = ImageDimensions[probmap];

(* Binarize the probability map to get the mask *)

mask = Binarize[probmap, OptionValue["MaskThreshold"]];

(*Output mask*)

If[SameQ[OptionValue["Output"], "Masks"], Return[mask]];

(* extract contours*)

contours = ImageMeasurements[mask, "PerimeterPositions", CornerNeighbors -> True];

(* get bounding box for each contour *)

boundingRects = BoundingRegion[#, "MinRectangle"] & /@ contours;

If[boundingRects === {}, Return @{{}, {}}];

(* score computation defined as the mean intensity of the pixels inside the mask region for each contour *)

probcrops = ImageTrim[probmap, boundingRects];

maskcrops = ImageTrim[mask, boundingRects];

scores = MapThread[

ImageMeasurements[ImageMultiply[#1, #2], "Mean"] &, {probcrops, maskcrops}];

(*Get parallelograms*)

boundingParallelograms = BoundingRegion[#, "MinOrientedRectangle"] & /@ contours;

If[boundingParallelograms === {}, Return @{{}, {}}];

(* Filter parallelograms by score *)

res = filterLowScoreDetections[{boundingParallelograms, scores}, OptionValue["AcceptanceThreshold"]];

If[res === {}, Return @{{}, {}}];

(* scale resulting parallelograms *)

If[UnsameQ[OptionValue["ScaledPadding"], Automatic],

boundingParallelograms = scaleParallelogram[#, OptionValue["ScaledPadding"]] & /@ res[[1]],

boundingParallelograms = res[[1]]

];

(* Filter small perimeters *)

res = filterSmallPerimeterDetections[{boundingParallelograms, res[[2]]}, OptionValue["MinPerimeter"]];

If[res === {}, Return @{{}, {}}];

(* show result *)

Association[{"BoundingBoxes" -> res[[1]], "Scores" -> res[[2]]}]

];](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/10301fd2f7f5aa1c.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/8c24702b-fa56-4788-b52b-bdd4281b120d"]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/632d2144766646bf.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/82246f4b-9314-420b-a89f-853355af775b"]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/3c93461f306aa10f.png)

![mask = netevaluate[testImage, "Output" -> "Masks"];

mask = Dilation[mask, 10];

HighlightImage[testImage, mask, ImageSize -> Medium]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/17a71ad815f30281.png)

![HighlightImage[testImage, {Legended[Style[boxes2, Green], "Selected"],

Legended[Style[Complement[boxes1, boxes2], Blue], "Filtered out"]},

ImageSize -> Medium]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/0dc0c58e8387477a.png)

![HighlightImage[testImage, {Legended[Style[boxes3, Green], "Selected"],

Legended[Style[Complement[boxes1, boxes3], Blue], "Filtered out"]},

ImageSize -> Medium]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/4f33a2cc9c9835c6.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/9250a93e-b846-4b3e-b09d-066964fb936c"]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/5bc60884987c1c80.png)

![probabilitymap = Image[probabilitymap];

inputImageDims = ImageDimensions[testImage];

{w, h} = ImageDimensions[probabilitymap];

ratio = ImageAspectRatio[testImage];

tRatio = ImageAspectRatio[probabilitymap];

probmap = If[tRatio/ratio > 1,

ImageResize[ImageCrop[probabilitymap, {w, w*ratio}], inputImageDims],

ImageResize[ImageCrop[probabilitymap, {h /ratio, h}], inputImageDims]

];](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/110cb5bf6ca3147c.png)

![ImageCompose[

Colorize[Threshold[probmap, 0.005], ColorFunction -> ColorData["TemperatureMap"], ColorRules -> {0 -> White}], {testImage, 0.5}]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/269245847547dfc1.png)

![contours = ImageMeasurements[mask, "PerimeterPositions", CornerNeighbors -> True];

boundingRects = BoundingRegion[#, "MinRectangle"] & /@ contours;

HighlightImage[testImage, boundingRects]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/455c77005f1f1cf2.png)

![boundingParallelograms = BoundingRegion[#, "MinOrientedRectangle"] & /@ contours;

boundingParallelograms = scaleParallelogram[#, {1.2, 2.1}] & /@ boundingParallelograms;

HighlightImage[testImage, boundingParallelograms]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/40d78f4eaf602271.png)

![Information[

NetModel[

"DBNet Text Detector Trained on ICDAR-2015 and Total-Text Data"], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/35aa37c7a1b7cc4f.png)

![Information[

NetModel[

"DBNet Text Detector Trained on ICDAR-2015 and Total-Text Data"], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/03b9ecd0a30df69b.png)

![Information[

NetModel[

"DBNet Text Detector Trained on ICDAR-2015 and Total-Text Data"], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/04bb273d1b1565cf.png)

![Information[

NetModel[

"DBNet Text Detector Trained on ICDAR-2015 and Total-Text Data"], "SummaryGraphic"]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/078d2a67c8f11e61.png)

![onnxFile = Export[FileNameJoin[{$TemporaryDirectory, "net.onnx"}], NetModel[

"DBNet Text Detector Trained on ICDAR-2015 and Total-Text Data"]]](https://www.wolframcloud.com/obj/resourcesystem/images/7cf/7cf4f829-b969-4961-894f-b9f4e6b3104f/4732a8614d0fbcb0.png)