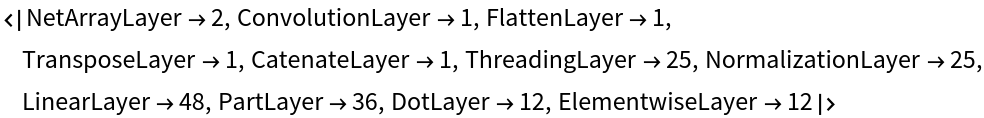

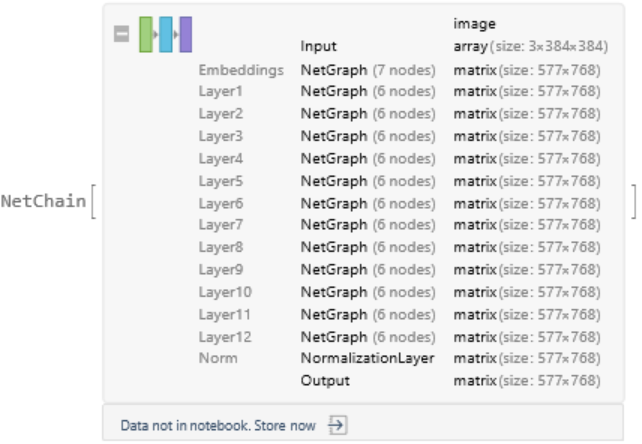

NetModel parameters

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:

Pick a non-default net by specifying the parameters:
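
The code on this page uses two parameters: "Part" ("ImageEncoder", "TextEncoder" or "TextDecoder") and "CapFilt" (True or False). The full set shown by the inspection above may differ. Under that assumption, this sketch lists every model specification that combines those values:

```wolfram
(* Parameter values taken from the code on this page; the complete set may differ *)
name = "BLIP Visual Question Answering Nets Trained on VQA Data";
parts = {"ImageEncoder", "TextEncoder", "TextDecoder"};
capFiltValues = {True, False};

(* One specification per parameter combination, in the list form accepted by NetModel *)
specs = Flatten[
   Table[{name, "Part" -> p, "CapFilt" -> c}, {p, parts}, {c, capFiltValues}],
   1];
Length[specs]
```

For example, specs[[4]] is the text encoder with "CapFilt" -> False, the same combination used in the uninitialized-net example on this page.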

Pick a non-default uninitialized net:

Basic usage

Define a test image:

Answer a question about the image:
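
In the netevaluate function defined on this page, the text decoder produces the answer one token at a time. Each output code is fed back as the next input until the end-of-sequence code (103) appears or MaxIterations (25 by default) is reached. The toy sketch below shows that loop. A hypothetical lookup table stands in for the decoder, and the token codes 2003 and 4521 are made up:

```wolfram
(* Hypothetical toy "decoder": a lookup table from the previous token code to the next one.
   102 and 103 are the start and end codes used by netevaluate; 2003 and 4521 are made up *)
bosCode = 102; eosCode = 103;
nextToken = <|102 -> 2003, 2003 -> 4521, 4521 -> 103|>;

(* Feed each output back in as the next input until the end code appears or 25 steps run *)
generated = {};
NestWhile[(AppendTo[generated, nextToken[#]]; Last[generated]) &, bosCode, # =!= eosCode &, 1, 25];

(* Drop the end code, as netevaluate does before mapping codes back to token strings *)
If[Last[generated] === eosCode, generated = Most[generated]];
generated
```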

Try different questions:

Obtain a test video:

Generate an answer to a question about the video. The answer is generated from a number of uniformly spaced frames whose features are averaged. The number of frames is controlled by the "NumberOfFrames" option of netevaluate (16 by default):

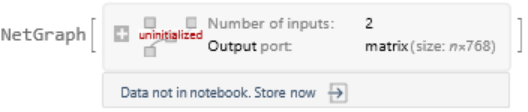
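
The sketch below shows the averaging step, which mirrors imgFeatures = Mean[imgFeatures] in netevaluate. The 577x768 feature shape and the 16-frame default come from this page. The random arrays are hypothetical stand-ins for real encoder output:

```wolfram
(* Hypothetical stand-in: features for 16 frames, each a 577 x 768 array *)
frameFeatures = RandomReal[1, {16, 577, 768}];

(* Mean over the first (frame) dimension gives a single 577 x 768 array,
   which the text encoder then receives in place of single-image features *)
videoFeatures = Mean[frameFeatures];
Dimensions[videoFeatures]
```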

Feature space visualization

Get a set of images of coffee and ice cream:

Visualize the feature space embedding performed by the image encoder. Notice that images from the same class are clustered together:

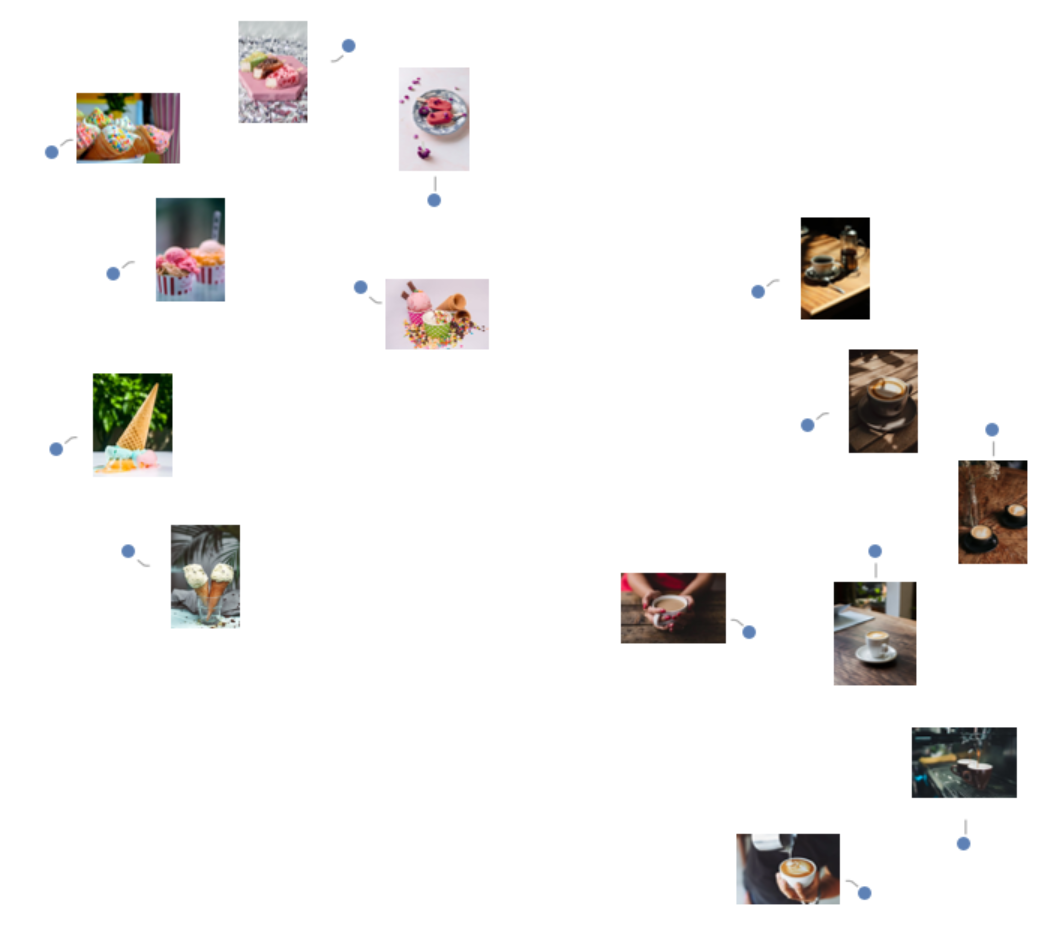

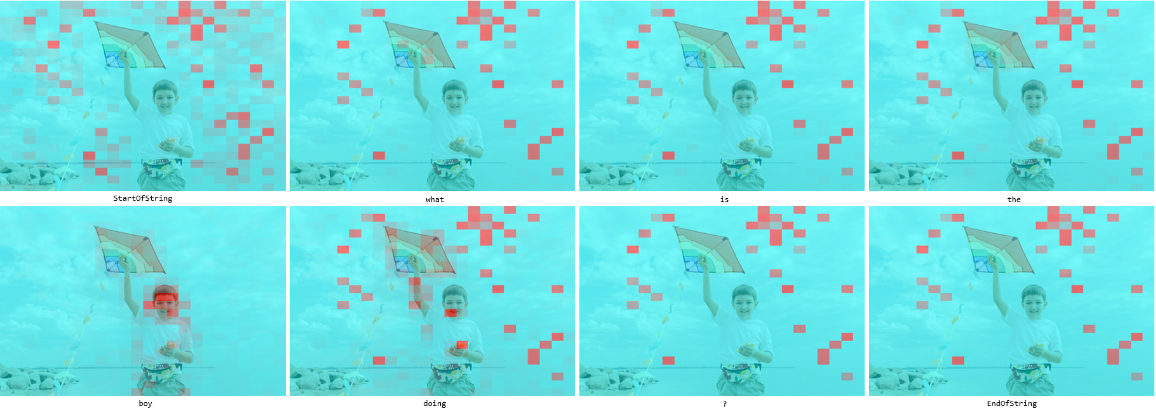

Cross-attention visualization for images

When encoding a question, the text encoder attends to the image features produced by the image encoder. These features are a set of 577 vectors of length 768, where every vector except the first corresponds to one of the 24x24 = 576 patches taken from the input image. (The extra first vector exists because the image encoder inherits the architecture of the image classification model Vision Transformer Trained on ImageNet Competition Data; here it has no special importance.) The text encoder's attention weights over these features can therefore be interpreted as the image patches the encoder is "looking at" while processing each token of the question, and this information can be visualized.

Get a test image and compute the features:
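
The feature count follows from the patch grid: 24x24 = 576 patch vectors plus the extra vector gives 577. The sketch below maps a feature index back to its patch position. It assumes the patches are flattened row by row, which matches the row-major ArrayReshape used later on this page. The helper patchPosition is hypothetical and not part of the model:

```wolfram
gridSize = 24;
numFeatures = gridSize^2 + 1  (* 577: 576 patches plus the extra first vector *)

(* Hypothetical helper: map feature index k (2..577) to a {row, column} patch position,
   assuming row-major flattening of the 24 x 24 grid; index 1 is the extra vector *)
patchPosition[k_Integer /; 2 <= k <= gridSize^2 + 1] :=
  QuotientRemainder[k - 2, gridSize] + 1

patchPosition /@ {2, 25, 26, 577}
```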

Define a question and pass it through the text encoder. The encoder has 12 attention blocks, each of which produces its own set of attention weights. Inspect the attention weights of a single block:
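
The port used on this page targets the first block, "TextLayer1". The remaining blocks might follow the same naming pattern, "TextLayer1" through "TextLayer12". That is an assumption based only on the first block's name. If it holds, the ports for all 12 blocks could be built like this:

```wolfram
(* Assumed naming "TextLayer1" ... "TextLayer12", mirroring the port path used for the first block *)
attentionPorts = Table[
   NetPort[{"TextEncoder", "TextLayer" <> ToString[i], "CrossAttention", "Attention", "AttentionWeights"}],
   {i, 12}];
Length[attentionPorts]
```

Each port could then be passed as the second argument to the text encoder, as in the single-block example.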

Each token of the question corresponds to a 12x577 array of attention weights, where 12 is the number of attention heads and 577 is the number of image feature vectors (the 24x24 patches plus the extra one):
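
The per-token results are rules of the form token -> weights, as in the tokenAttentionWeights definition. Their values can be stacked into one numeric array of dimensions tokens x heads x features. In this sketch, random weights and illustrative token labels stand in for the real ones:

```wolfram
(* Hypothetical stand-in for tokenAttentionWeights: 8 tokens, each with a 12 x 577 array *)
tokenNames = {"[start]", " what", " is", " the", " boy", " doing", " ?", "[end]"};
fakeWeights = Thread[tokenNames -> RandomReal[1, {8, 12, 577}]];

(* Stack the right-hand sides into a single 8 x 12 x 577 array *)
stacked = Values[fakeWeights];
{numTokens, numHeads, numFeatures} = Dimensions[stacked]
```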

Extract the attention weights related to the image patches:
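
One way to do this is to drop the first entry along the last dimension, which corresponds to the extra (non-patch) vector. The sketch below shows this on random data. It is not necessarily the exact code used on this page:

```wolfram
(* Hypothetical stand-in: 8 tokens x 12 heads x 577 feature vectors *)
weights = RandomReal[1, {8, 12, 577}];

(* Keep entries 2..577 along the last dimension: the 576 patch weights *)
patchWeights = weights[[All, All, 2 ;;]];
Dimensions[patchWeights]
```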

Reshape the flat image patch dimension to 24x24 and average over the attention heads, obtaining a 24x24 attention matrix for each of the eight question tokens:
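
A toy example makes the reshape-then-average step easy to check by hand. It uses one token, 2 heads and 4 patches forming a 2x2 grid, so averaging over the heads gives one 2x2 matrix per token. The sizes are illustrative only:

```wolfram
(* Toy example: 1 token, 2 heads, 4 patches forming a 2 x 2 grid *)
toy = {{{1., 2., 3., 4.}, {3., 4., 5., 6.}}};

(* Split the flat patch dimension into a 2 x 2 grid, then average over the heads *)
toyGrid = ArrayReshape[toy, {1, 2, 2, 2}];
toyMean = Map[Mean, toyGrid, {1}]
(* {{{2., 3.}, {4., 5.}}} *)
```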

To reveal the patch interactions specific to each token, suppress the attention weights that are consistently high across all tokens by subtracting, for each patch, its minimum value over the token dimension:
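
The toy example below reads "minimum aggregated across the token dimension" as a per-patch minimum over all tokens. That reading is an interpretation of the text. It uses 3 tokens with 2x2 integer matrices so the result is exact:

```wolfram
(* Toy example: 3 tokens, each with a 2 x 2 attention matrix *)
toyAttention = {
   {{5, 1}, {2, 9}},
   {{6, 3}, {2, 8}},
   {{5, 1}, {7, 8}}};

(* For each patch position, the smallest weight over all tokens *)
baseline = MapThread[Min, toyAttention, 2];

(* Subtract that baseline from every token's matrix *)
residual = # - baseline & /@ toyAttention
(* {{{0, 0}, {0, 1}}, {{1, 2}, {0, 0}}, {{0, 0}, {5, 0}}} *)
```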

Visualize the attention weight matrices. Patches with higher values (red) are the ones attended to most while the corresponding token is processed:

Define a function to visualize the attention matrix on the image:
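
The sketch below shows an alternative version of the same overlay idea. It colors the matrix with the built-in "TemperatureMap" gradient, upsamples it to the image size and blends it in at 50% opacity. A flat gray image and a random 24x24 matrix stand in for the test image and a real attention matrix:

```wolfram
(* Synthetic stand-ins: a gray 384 x 384 image and a random 24 x 24 attention matrix *)
img = ConstantImage[0.5, {384, 384}];
attn = RandomReal[1, {24, 24}];

(* Rescale to [0, 1] and turn each value into an {r, g, b} triple *)
toRGB = List @@ ColorData["TemperatureMap"][#] &;
heatmap = Image[Map[toRGB, Rescale[attn], {2}], ColorSpace -> "RGB"];

(* Upsample the 24 x 24 heatmap to the image size and blend it at 50% opacity *)
heatmap = ImageResize[heatmap, ImageDimensions[img]];
overlay = ImageCompose[ColorConvert[img, "RGB"], {heatmap, 0.5}];
ImageDimensions[overlay]
```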

Visualize the attention for each token. A recurring noisy pattern of large positive activations is visible, but notice the emphasis on the boy's head for the token "boy" and on his hands for "doing":

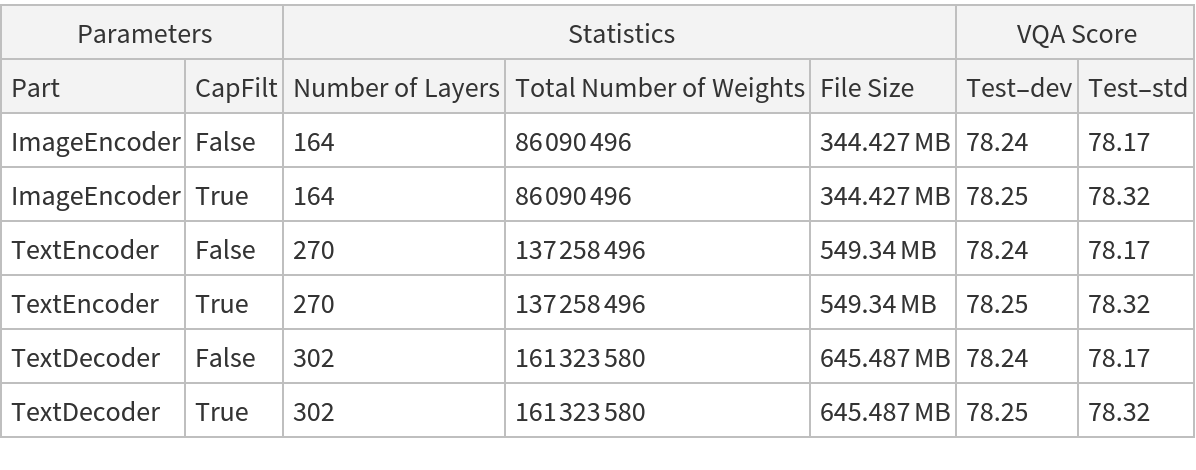

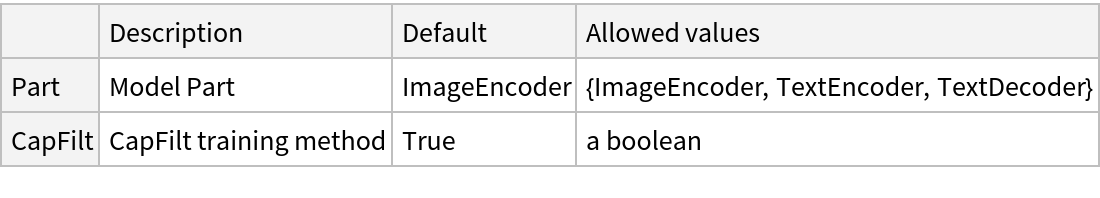

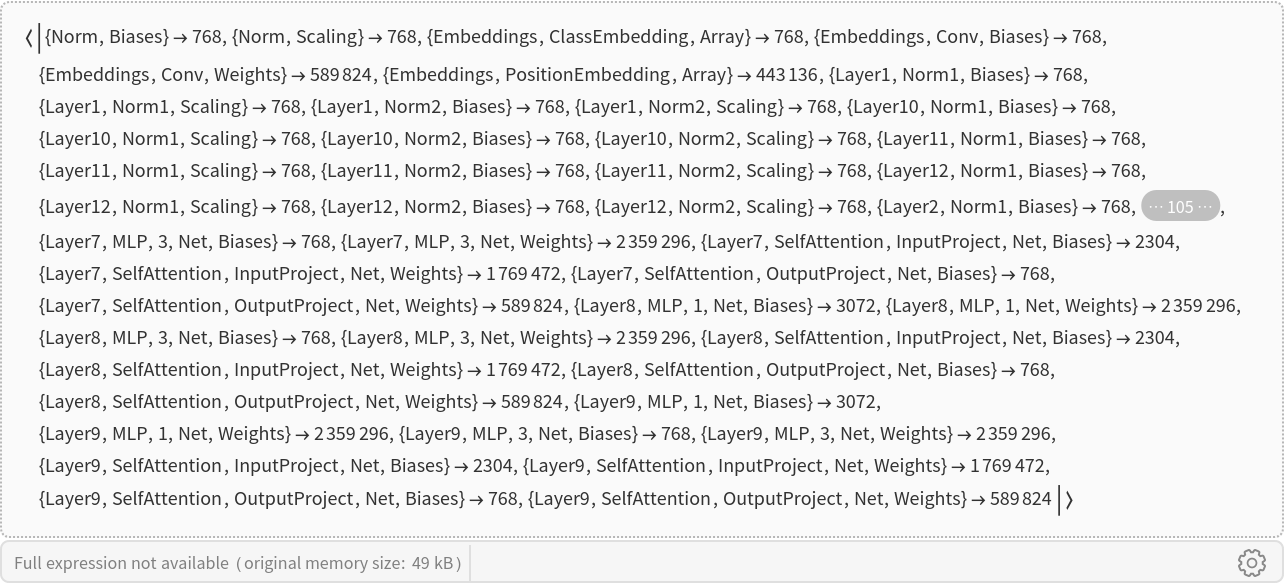

Net information

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:
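
The total is the sum of the per-array counts. The sketch below shows that relationship on made-up counts for a single 768x768 linear layer. The keys are hypothetical, and it assumes the "ArraysElementCounts" result is an association of the same shape:

```wolfram
(* Hypothetical per-array counts for a single 768 x 768 linear layer *)
counts = <|{"layer", "Weights"} -> 768*768, {"layer", "Biases"} -> 768|>;

(* Summing the association's values gives the total parameter count *)
Total[counts]
(* 590592 *)
```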

Obtain the layer type counts:

```wolfram
NetModel[{"BLIP Visual Question Answering Nets Trained on VQA Data", "Part" -> "TextEncoder", "CapFilt" -> False}, "UninitializedEvaluationNet"]
```

```wolfram
Options[netevaluate] = {"CapFilt" -> True, MaxIterations -> 25, "NumberOfFrames" -> 16, "Temperature" -> 0, "TopProbabilities" -> 10, TargetDevice -> "CPU"};

netevaluate[input : (_?ImageQ | _?VideoQ), question_?StringQ, opts : OptionsPattern[]] := Module[
  {imgInput, imageEncoder, textEncoder, textDecoder, questionFeatures, tokens, imgFeatures,
   outSpec, init, netOut, index = 1, generated = {}, eosCode = 103, bosCode = 102},
  (* For a video, take uniformly spaced frames; an image is used as-is *)
  imgInput = Switch[input,
    _?VideoQ, VideoFrameList[input, OptionValue["NumberOfFrames"]],
    _?ImageQ, input
  ];
  {imageEncoder, textEncoder, textDecoder} = NetModel[{"BLIP Visual Question Answering Nets Trained on VQA Data", "CapFilt" -> OptionValue["CapFilt"], "Part" -> #}] & /@ {"ImageEncoder", "TextEncoder", "TextDecoder"};
  tokens = NetExtract[textEncoder, {"Input", "Tokens"}];
  imgFeatures = imageEncoder[imgInput, TargetDevice -> OptionValue[TargetDevice]];
  (* Average the per-frame features of a video into a single set of image features *)
  If[MatchQ[input, _?VideoQ],
    imgFeatures = Mean[imgFeatures]
  ];
  (* Encode the question, attending to the image features *)
  questionFeatures = textEncoder[<|"Input" -> question, "ImageFeatures" -> imgFeatures|>];
  (* Request all decoder output ports; the "Output" port is sampled with the given temperature and top-probability settings *)
  outSpec = Replace[NetPort /@ Information[textDecoder, "OutputPortNames"],
    NetPort["Output"] -> (NetPort["Output"] -> {"RandomSample", "Temperature" -> OptionValue["Temperature"], "TopProbabilities" -> OptionValue["TopProbabilities"]}), {1}];
  (* Start from the beginning-of-sequence code with 24 empty decoder states *)
  init = Join[
    <|"Index" -> index, "Input" -> bosCode, "QuestionFeatures" -> questionFeatures|>,
    Association@Table["State" <> ToString[i] -> {}, {i, 24}]
  ];
  (* Decode one token at a time, feeding each output and the updated states back in,
     until the end-of-sequence code appears or MaxIterations is reached *)
  NestWhile[
    Function[
      netOut = textDecoder[#, outSpec, TargetDevice -> OptionValue[TargetDevice]];
      AppendTo[generated, netOut["Output"]];
      Join[
        KeyMap[StringReplace["OutState" -> "State"], netOut],
        <|"Index" -> ++index, "Input" -> netOut["Output"], "QuestionFeatures" -> questionFeatures|>
      ]
    ],
    init,
    #Input =!= eosCode &,
    1,
    OptionValue[MaxIterations]
  ];
  (* Drop the end-of-sequence code, then map the codes back to token strings *)
  If[Last[generated] === eosCode,
    generated = Most[generated]
  ];
  StringTrim@StringJoin@tokens[[generated]]
];
```

```wolfram
(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/d514a8d9-c524-421a-abd1-a37b3c399aa8"]
```

```wolfram
netevaluate[img, #] & /@ {"Where is she?", "What is on the blanket?", "How many people are with her?", "How is the weather?", "Who took the picture?"}
```

```wolfram
(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/f014372c-6ba0-4368-9462-a2c6e91e12a6"]
```

```wolfram
FeatureSpacePlot[
 Thread[NetModel[{"BLIP Visual Question Answering Nets Trained on VQA Data", "Part" -> "ImageEncoder"}][imgs] -> imgs],
 LabelingSize -> 70,
 LabelingFunction -> Callout,
 ImageSize -> 700,
 AspectRatio -> 0.9
 ]
```

```wolfram
(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/94d26d67-ee4b-4c03-a45b-d341783a72e6"]
```

```wolfram
imgFeatures = NetModel[{"BLIP Visual Question Answering Nets Trained on VQA Data",
     "Part" -> "ImageEncoder"}][testImage];
```

```wolfram
question = "what is the boy doing?";
tokenizer = NetExtract[
   NetModel[{"BLIP Visual Question Answering Nets Trained on VQA Data", "Part" -> "TextEncoder"}], "Input"];
tokens = NetExtract[tokenizer, "Tokens"];
```

```wolfram
(* Pair each question token with its cross-attention weights from the first text block *)
tokenAttentionWeights = Thread[
   tokens[[tokenizer[question]]] ->
    NetModel[{"BLIP Visual Question Answering Nets Trained on VQA Data", "Part" -> "TextEncoder"}][
     <|"Input" -> question, "ImageFeatures" -> imgFeatures|>,
     NetPort[{"TextEncoder", "TextLayer1", "CrossAttention", "Attention", "AttentionWeights"}]
     ]
   ];
```

```wolfram
Length[{StartOfString -> {12, 577}, " what" -> {12, 577}, " is" -> {12, 577}, " the" -> {12, 577}, " boy" -> {12, 577}, " doing" -> {12, 577}, " ?" -> {12, 577}, EndOfString -> {12, 577}}]
```

```wolfram
(* Split the flat patch dimension into a 24 x 24 grid *)
attentionWeights = ArrayReshape[
   attentionWeights, {numTokens, numHeads, Sqrt[numPatches], Sqrt[numPatches]}];
(* Average over the attention heads for each token *)
attentionWeights = Map[Mean, attentionWeights, {1}];
attentionWeights // Dimensions
```

```wolfram
GraphicsGrid[
 Partition[
  MapThread[Labeled[#1, #2] &, {MatrixPlot /@ attentionWeights, Keys[tokenAttentionWeights]}],
  4, 4, {1, 1}, ""],
 ImageSize -> Large]
```

```wolfram
visualizeAttention[img_Image, attentionMatrix_, label_] := Module[{heatmap, wh},
  wh = ImageDimensions[img];
  (* Map normalized attention to color: high values red, low values cyan *)
  heatmap = ImageApply[{#, 1 - #, 1 - #} &, ImageAdjust@Image[attentionMatrix]];
  heatmap = ImageResize[heatmap, wh];
  (* Blend the heatmap over the image at 50% opacity, then scale the shorter side to 500 pixels *)
  Labeled[
   ImageResize[ImageCompose[img, {ColorConvert[heatmap, "RGB"], 0.5}], wh*500/Min[wh]],
   label]
  ]
```

```wolfram
Information[
 NetModel["BLIP Visual Question Answering Nets Trained on VQA Data"], "ArraysElementCounts"]
```

```wolfram
Information[
 NetModel["BLIP Visual Question Answering Nets Trained on VQA Data"], "ArraysTotalElementCount"]
```

```wolfram
Information[
 NetModel["BLIP Visual Question Answering Nets Trained on VQA Data"], "LayerTypeCounts"]
```