Resource retrieval

Get the pre-trained net:

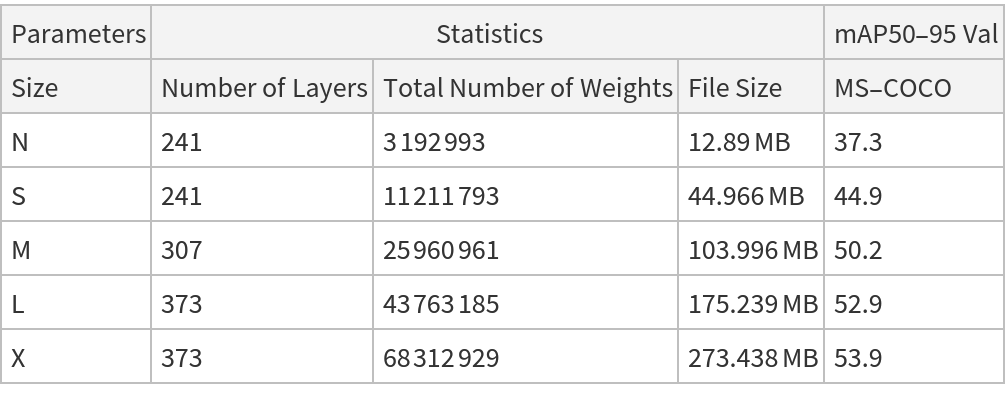

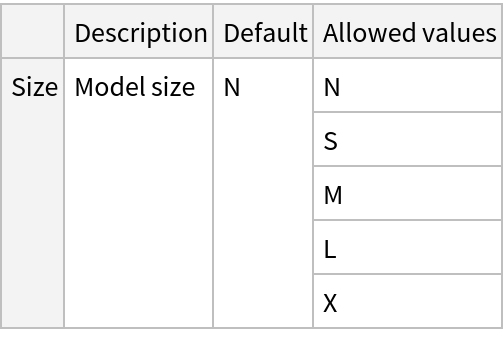
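In text form, the retrieval is a single `NetModel` call. The resource name is the one used in the export example on this page; the variable name `net` is only a convention assumed by this sketch.

```wolfram
(* Download on first use, then load the default pre-trained net *)
net = NetModel["YOLO V8 Detect Trained on MS-COCO Data"]
```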

NetModel parameters

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:
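A minimal sketch of that inspection, using the standard `"ParametersInformation"` property of `NetModel`:

```wolfram
(* List each parameter of the family with its default and allowed values *)
NetModel["YOLO V8 Detect Trained on MS-COCO Data", "ParametersInformation"]
```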

Pick a non-default net by specifying the parameters:
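For instance, the following sketch requests the `"L"` member of the family. The parameter name `"Size"` and the value `"L"` are taken from the uninitialized-net example on this page; other valid values should be checked against the parameters information rather than assumed.

```wolfram
(* Parameters are given as rules after the model name, inside a list *)
NetModel[{"YOLO V8 Detect Trained on MS-COCO Data", "Size" -> "L"}]
```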

Pick a non-default uninitialized net:

Evaluation function

Write an evaluation function to scale the result to the input image size and suppress the least probable detections:
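The rescaling step can be illustrated in isolation. The sketch below applies the same scale and padding formulas as the evaluation function on this page to a single box. The helper name `boxToRectangle` is hypothetical, and it reads those formulas as assuming a box given as center and size in a 640 × 640 padded input frame with the vertical axis flipped relative to image coordinates.

```wolfram
(* Hypothetical helper: map one net box {cx, cy, bw, bh} from the padded
   imgSize x imgSize input frame to a Rectangle in the coordinates of an
   image with dimensions {w, h} *)
boxToRectangle[{cx_, cy_, bw_, bh_}, {w_, h_}, imgSize_ : 640] :=
 Module[{max, scale, padding},
  max = Max[{w, h}];
  scale = max/imgSize;                   (* net pixels -> image pixels *)
  padding = imgSize*(1 - {w, h}/max)/2;  (* padding added on each axis *)
  Rectangle[
   scale*({cx - bw/2, imgSize - cy - bh/2} - padding),
   scale*({cx + bw/2, imgSize - cy + bh/2} - padding)]]

(* A box centered in the net input lands at the center of a 1280 x 640 image *)
boxToRectangle[{320, 320, 100, 50}, {1280, 640}]
(* Rectangle[{540, 270}, {740, 370}] *)
```

Here the scale is 1280/640 = 2 and the padding is {0, 160}, so the 100 × 50 box becomes a 200 × 100 rectangle around the image center {640, 320}.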

Basic usage

Obtain the detected bounding boxes with their corresponding classes for a given image:
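A possible call, as a sketch under two assumptions: that the `CloudGet` expression shown on this page as the example input returns the test image, and that `netevaluate` together with the `labels` list it refers to has already been defined in the session.

```wolfram
(* Assumption: this CloudGet call returns the example image *)
testImage = CloudGet["https://www.wolframcloud.com/obj/8acba9d3-bd1e-433e-8309-df969dd2897d"];

(* Assumption: netevaluate and labels are defined beforehand *)
detection = netevaluate[NetModel["YOLO V8 Detect Trained on MS-COCO Data"], testImage]
```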

The model's output is an Association containing the detected "Boxes" and "Classes":

The "Boxes" key is a list of Rectangle expressions corresponding to the bounding boxes of the detected objects:

The "Classes" key contains the classes of the detected objects:

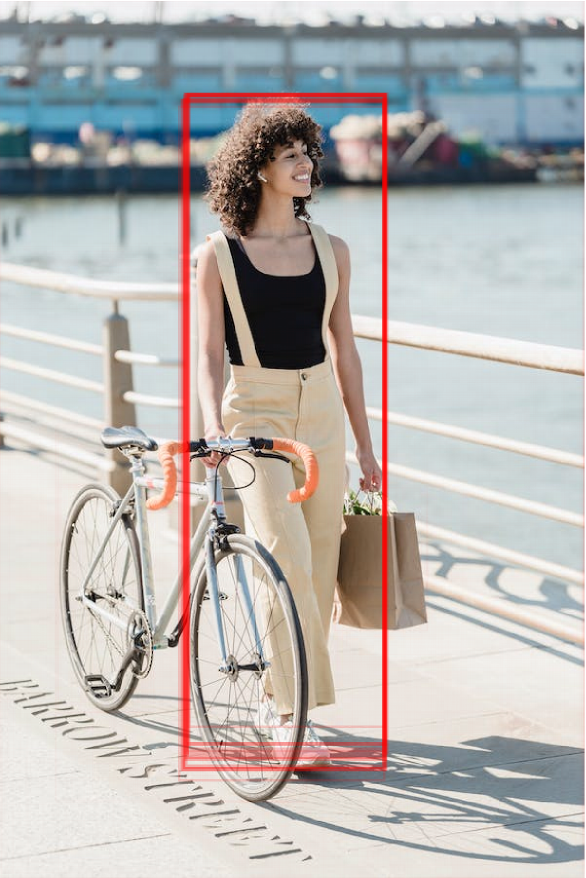
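Both keys can be read with ordinary Association lookups. This sketch assumes `detection` holds the Association returned by the evaluation function for some image.

```wolfram
detection["Boxes"]            (* list of Rectangle expressions *)
detection["Classes"]          (* one class label per box, in the same order *)
Counts[detection["Classes"]]  (* number of detections per class *)
```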

Visualize the detection:

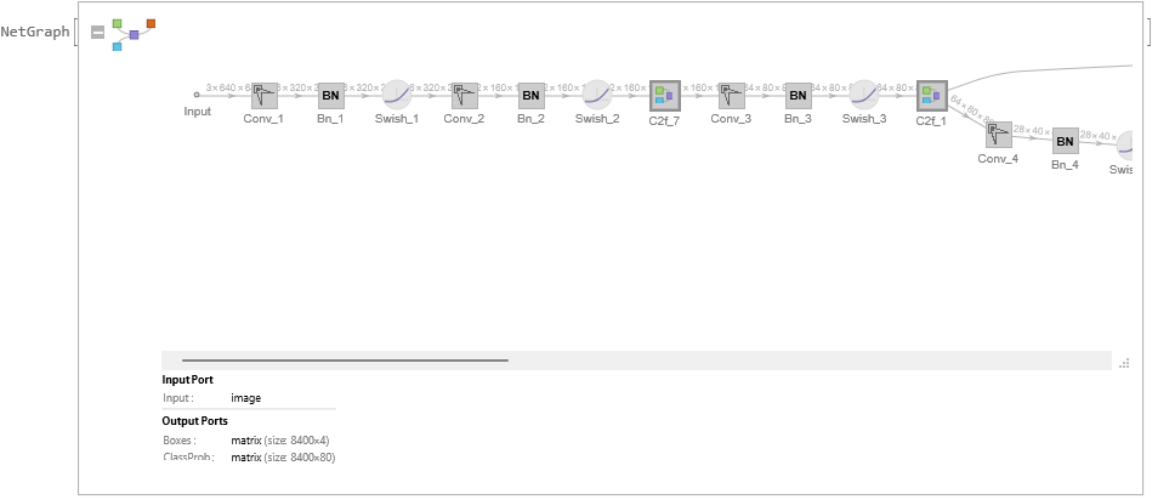
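A plain version of such a visualization, without class labels, can be sketched with `HighlightImage`. It assumes `testImage` is the input image and `detection` is the Association returned for it; the styling in the figure above may differ.

```wolfram
(* Overlay the detected rectangles on the original image *)
HighlightImage[testImage, detection["Boxes"]]
```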

Network result

The network computes 8400 bounding boxes and, for each box, the probability that the object belongs to each class:

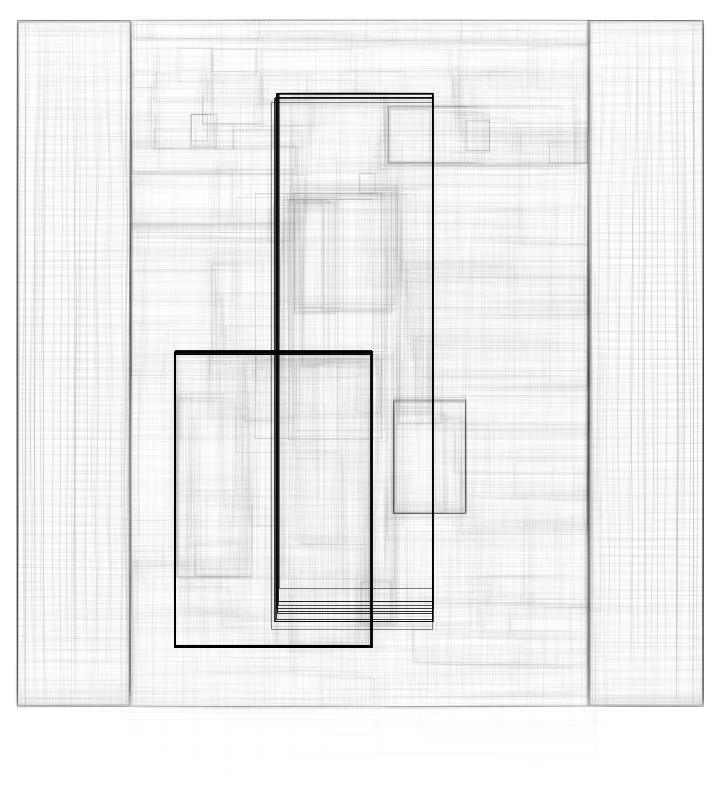

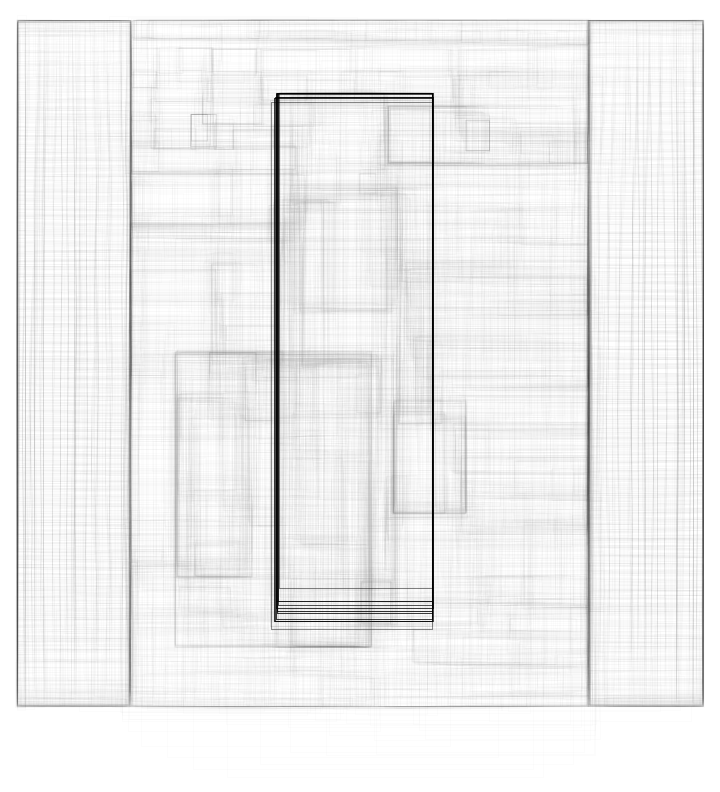
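The raw output can be examined by applying the net directly, as the evaluation function on this page does. In this sketch `testImage` is assumed to be any `Image` already defined in the session; the keys `"Boxes"` and `"ClassProb"` are the ones that function reads.

```wolfram
net = NetModel["YOLO V8 Detect Trained on MS-COCO Data"];
res = net[testImage];   (* testImage: assumed to be defined *)

Keys[res]               (* names of the output ports *)
Dimensions /@ res       (* array shape of each output *)
```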

Visualize the bounding boxes scaled by their class probabilities:

Visualize all the boxes scaled by the probability that they contain a person:
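The plotting code for this step refers to an index `idx` for the person class. One way to obtain it and pull out the matching probability column is sketched below on toy data: `labels` here is a hypothetical, truncated class list and `classProb` is a small stand-in for `res["ClassProb"]`, not real model output.

```wolfram
(* Hypothetical, truncated label list; the real list has one entry per class *)
labels = {"person", "bicycle", "car"};

(* Toy stand-in for res["ClassProb"]: one row per box, one column per class *)
classProb = {{0.9, 0.05, 0.01}, {0.1, 0.7, 0.02}};

idx = Position[labels, "person"][[1, 1]];  (* column of the person class *)
personProb = classProb[[All, idx]]         (* person probability per box *)
(* {0.9, 0.1} *)
```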

Superimpose the person predictions on top of the input received by the net:

Net information

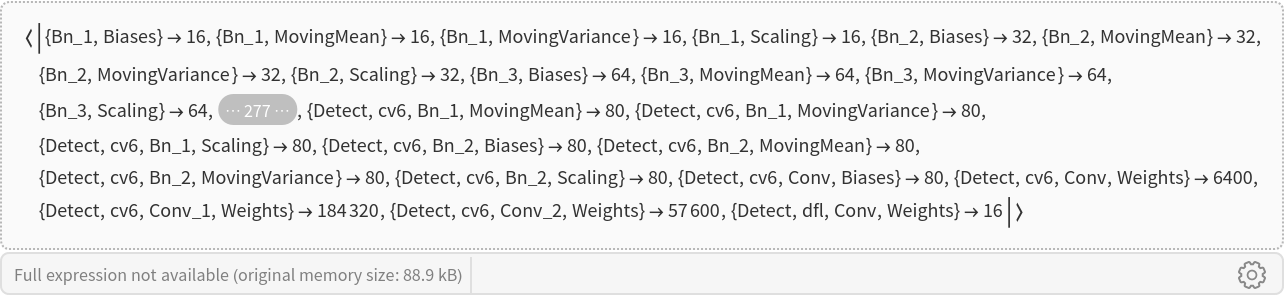

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

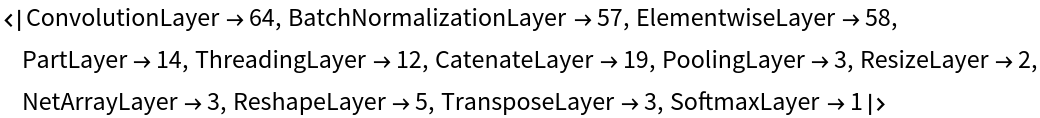
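Both parameter counts are available as standard `Information` properties of a net. A minimal sketch:

```wolfram
net = NetModel["YOLO V8 Detect Trained on MS-COCO Data"];

Information[net, "ArraysElementCounts"]      (* element count of every array *)
Information[net, "ArraysTotalElementCount"]  (* sum over all arrays *)
```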

Obtain the layer type counts:

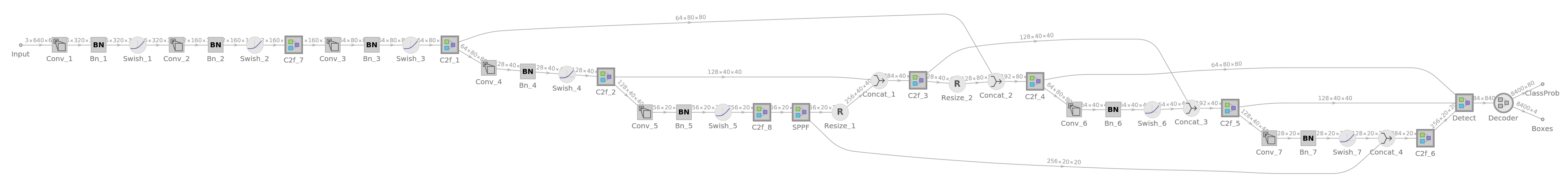
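The counts shown above correspond to the `"LayerTypeCounts"` property, which can be queried as follows:

```wolfram
(* Association from layer type to number of occurrences in the net *)
Information[NetModel["YOLO V8 Detect Trained on MS-COCO Data"], "LayerTypeCounts"]
```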

Display the summary graphic:
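A sketch using the `"SummaryGraphic"` property:

```wolfram
(* Graph-style overview of the net's layers and connections *)
Information[NetModel["YOLO V8 Detect Trained on MS-COCO Data"], "SummaryGraphic"]
```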

Export to ONNX

Export the net to the ONNX format:

Get the size of the ONNX file:
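A sketch of the size check, assuming the export has already been run and wrote to the path used by the export example on this page:

```wolfram
(* Same path as in the export example; the file must already exist *)
onnxFile = FileNameJoin[{$TemporaryDirectory, "net.onnx"}];

FileByteCount[onnxFile]   (* size of the exported file in bytes *)
```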

The size is similar to the byte count of the resource object:
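The comparison value can be looked up on the resource object. This sketch assumes the resource exposes a `"ByteCount"` property, as is usual for net resources:

```wolfram
(* Byte count recorded for the resource, to compare with the ONNX file size *)
ResourceObject["YOLO V8 Detect Trained on MS-COCO Data"]["ByteCount"]
```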

Check some metadata of the ONNX model:
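Two commonly inspected pieces of metadata are the operator set version and the IR version, both available as import elements of the ONNX format. The sketch assumes the file was exported to the path used on this page.

```wolfram
onnxFile = FileNameJoin[{$TemporaryDirectory, "net.onnx"}];  (* assumed to exist *)

{Import[onnxFile, "OpsetVersion"], Import[onnxFile, "IRVersion"]}
```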

Import the model back into Wolfram Language. However, the NetEncoder and NetDecoder will be absent because they are not supported by ONNX:
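A minimal sketch of the round trip, again assuming the exported file sits at the path used on this page:

```wolfram
onnxFile = FileNameJoin[{$TemporaryDirectory, "net.onnx"}];  (* assumed to exist *)

(* The imported net takes and returns plain arrays, since the encoder and
   decoder are not part of the ONNX file *)
Import[onnxFile, Automatic]
```

Because the encoder is missing, an image would need to be converted to a suitably sized and normalized array before being passed to the imported net.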

![NetModel[{"YOLO V8 Detect Trained on MS-COCO Data", "Size" -> "L"}, "UninitializedEvaluationNet"]](https://www.wolframcloud.com/obj/resourcesystem/images/6c5/6c5797fd-4248-4cbc-921f-045f79f182f6/24c4d89a3ba70814.png)

![netevaluate[net_, img_, detectionThreshold_ : .25, overlapThreshold_ : .5] := Module[{res, imgSize, isDetection, probableClasses, probableBoxes, h, w, max, scale, padding, nms, result},

(*define image dimensions*)

imgSize = 640;

{w, h} = ImageDimensions[img];

(*get inference*)

res = net[img];

(*filter by probability*)

(*very small probabilities are thresholded*)

isDetection = UnitStep[Max /@ res["ClassProb"] - detectionThreshold];

{probableClasses, probableBoxes} = Map[Pick[#, isDetection, 1] &, {res["ClassProb"], res["Boxes"]}];

If[Length[probableBoxes] == 0, Return[{}]];

(*transform coordinates into rectangular boxes*)

max = Max[{w, h}];

scale = max/imgSize;

padding = imgSize*(1 - {w, h}/max)/2;

probableBoxes = Apply[Rectangle[

scale*({#1 - #3/2, imgSize - #2 - #4/2} - padding),

scale*({#1 + #3/2, imgSize - #2 + #4/2} - padding)] &, probableBoxes, 2];

(*gather the boxes of the same class and perform non-max suppression*)

nms = ResourceFunction["NonMaximumSuppression"][

probableBoxes -> Max /@ probableClasses, "Index", MaxOverlapFraction -> overlapThreshold];

<|

"Boxes" -> probableBoxes[[nms]],

"Classes" -> labels[[Last@*Ordering /@ Part[probableClasses, nms]]]

|>

];](https://www.wolframcloud.com/obj/resourcesystem/images/6c5/6c5797fd-4248-4cbc-921f-045f79f182f6/4644528d9627d47e.png)

![(* Evaluate this cell to get the example input *) CloudGet["https://www.wolframcloud.com/obj/8acba9d3-bd1e-433e-8309-df969dd2897d"]](https://www.wolframcloud.com/obj/resourcesystem/images/6c5/6c5797fd-4248-4cbc-921f-045f79f182f6/18032e54e4e32ca5.png)

![imgSize = 640;

{w, h} = ImageDimensions[testImage];

max = Max[{w, h}];

scale = max/imgSize;

padding = imgSize*(1 - {w, h}/max)/2;

rectangles = Apply[Rectangle[

scale*({#1 - #3/2, imgSize - #2 - #4/2} - padding),

scale*({#1 + #3/2, imgSize - #2 + #4/2} - padding)

] &, res["Boxes"], 2];

Graphics[

MapThread[

{EdgeForm[Opacity[Total[#1] + .01]], #2} &,

{Max /@ res["ClassProb"], rectangles}

],

BaseStyle -> {FaceForm[], EdgeForm[{Thin, Black}]}

]](https://www.wolframcloud.com/obj/resourcesystem/images/6c5/6c5797fd-4248-4cbc-921f-045f79f182f6/5891756a66fa5305.png)

![Graphics[

MapThread[{EdgeForm[Opacity[#1 + .01]], #2} &, {Extract[

res["ClassProb"], {All, idx}], rectangles}],

BaseStyle -> {FaceForm[], EdgeForm[{Thin, Black}]}

]](https://www.wolframcloud.com/obj/resourcesystem/images/6c5/6c5797fd-4248-4cbc-921f-045f79f182f6/2fd13f5eccf4872b.png)

![HighlightImage[testImage, Graphics[

MapThread[{EdgeForm[{Thickness[#1/100], Opacity[(#1 + .01)/3]}], #2} &, {Extract[

res["ClassProb"], {All, idx}], rectangles}]], BaseStyle -> {FaceForm[], EdgeForm[{Thin, Red}]}]](https://www.wolframcloud.com/obj/resourcesystem/images/6c5/6c5797fd-4248-4cbc-921f-045f79f182f6/5706f7b5e1fd0c94.png)

![onnxFile = Export[FileNameJoin[{$TemporaryDirectory, "net.onnx"}], NetModel["YOLO V8 Detect Trained on MS-COCO Data"]]](https://www.wolframcloud.com/obj/resourcesystem/images/6c5/6c5797fd-4248-4cbc-921f-045f79f182f6/65451efb2a5143b8.png)