GloVe 50-Dimensional Word Vectors

Trained on

Wikipedia and Gigaword 5 Data

Released in 2014 by the computer science department at Stanford University, this representation is trained using an original method called Global Vectors (GloVe). It encodes 400,000 tokens as unique vectors, with all tokens outside the vocabulary encoded as the zero-vector. Token case is ignored.

Number of layers: 1 |

Parameter count: 20,000,050 |

Trained size: 83 MB |

Examples

Resource retrieval

Get the pre-trained net:

Basic usage

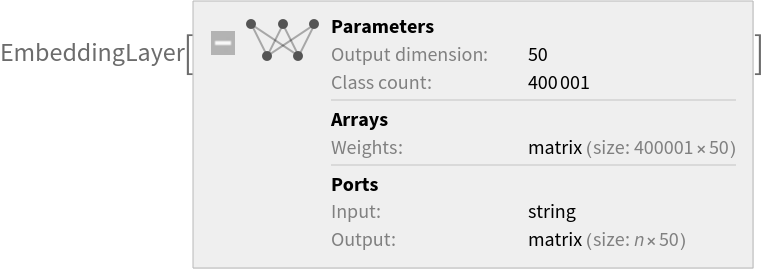

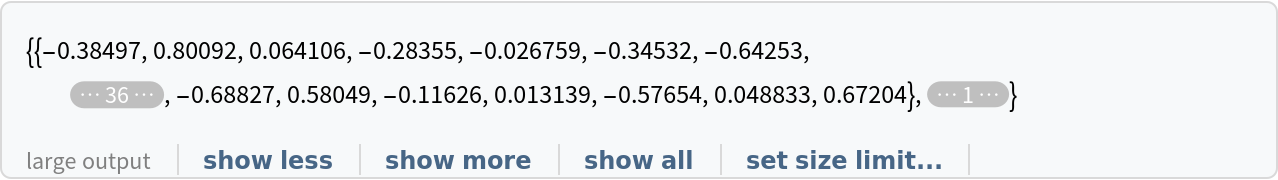

Use the net to obtain a list of word vectors:

Obtain the dimensions of the vectors:

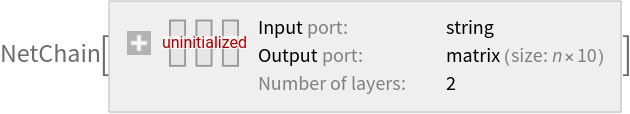

Use the embedding layer inside a NetChain:

Feature visualization

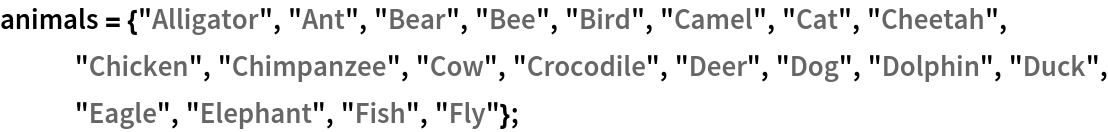

Create two lists of related words:

Visualize relationships between the words using the net as a feature extractor:

Word analogies

Get the pre-trained net:

Get a list of words:

Obtain the vectors:

Create an association whose keys are words and whose values are vectors:

Find the eight nearest words to "king":

Man is to king as woman is to:

France is to Paris as Germany is to:

Net information

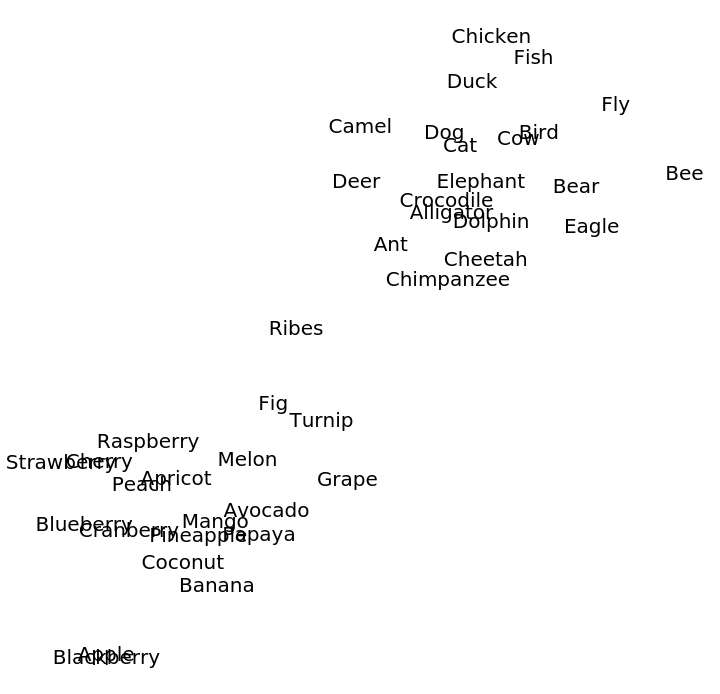

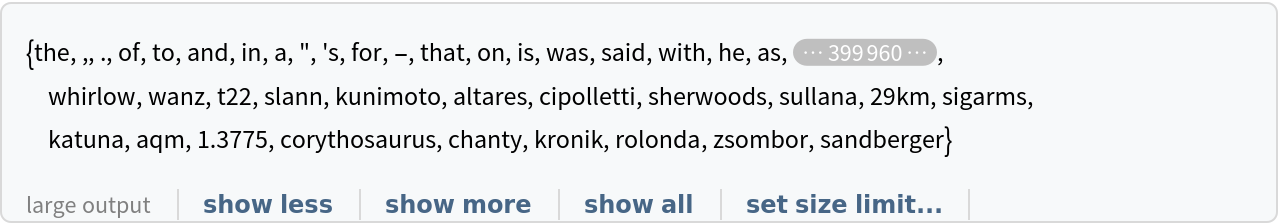

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

Export to MXNet

Export the net into a format that can be opened in MXNet:

Export also creates a net.params file containing parameters:

Get the size of the parameter file:

The size is similar to the byte count of the resource object:

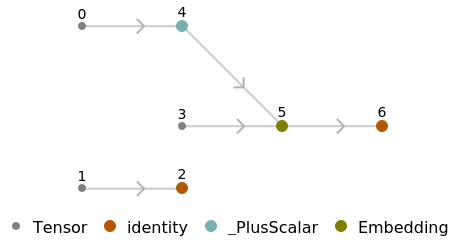

Represent the MXNet net as a graph:

Requirements

Wolfram Language

11.1

(March 2017)

or above

Resource History

Reference

![vectors = NetModel["GloVe 50-Dimensional Word Vectors Trained on Wikipedia and \

Gigaword 5 Data"]["hello world"]](https://www.wolframcloud.com/obj/resourcesystem/images/187/187d1cff-0b06-45e0-9d6b-84a63d572693/4fb898f3dbf53429.png)

![chain = NetChain[{NetModel[

"GloVe 50-Dimensional Word Vectors Trained on Wikipedia and \

Gigaword 5 Data"], LongShortTermMemoryLayer[10]}]](https://www.wolframcloud.com/obj/resourcesystem/images/187/187d1cff-0b06-45e0-9d6b-84a63d572693/79ac4e330a34ec98.png)

![FeatureSpacePlot[Join[animals, fruits], FeatureExtractor -> NetModel["GloVe 50-Dimensional Word Vectors Trained on Wikipedia \

and Gigaword 5 Data"]]](https://www.wolframcloud.com/obj/resourcesystem/images/187/187d1cff-0b06-45e0-9d6b-84a63d572693/0f7b3ad81d260dbc.png)

![NetInformation[

NetModel["GloVe 50-Dimensional Word Vectors Trained on Wikipedia and \

Gigaword 5 Data"], "ArraysElementCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/187/187d1cff-0b06-45e0-9d6b-84a63d572693/1d719ab96da97795.png)

![NetInformation[

NetModel["GloVe 50-Dimensional Word Vectors Trained on Wikipedia and \

Gigaword 5 Data"], "ArraysTotalElementCount"]](https://www.wolframcloud.com/obj/resourcesystem/images/187/187d1cff-0b06-45e0-9d6b-84a63d572693/4e4e11587b5d80b8.png)

![NetInformation[

NetModel["GloVe 50-Dimensional Word Vectors Trained on Wikipedia and \

Gigaword 5 Data"], "LayerTypeCounts"]](https://www.wolframcloud.com/obj/resourcesystem/images/187/187d1cff-0b06-45e0-9d6b-84a63d572693/5da9b052afb497b4.png)

![jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], NetModel["GloVe 50-Dimensional Word Vectors Trained on Wikipedia \

and Gigaword 5 Data"], "MXNet"]](https://www.wolframcloud.com/obj/resourcesystem/images/187/187d1cff-0b06-45e0-9d6b-84a63d572693/5a17cb52f7825f0d.png)