Resource retrieval

Retrieve the pre-trained net:

Basic usage

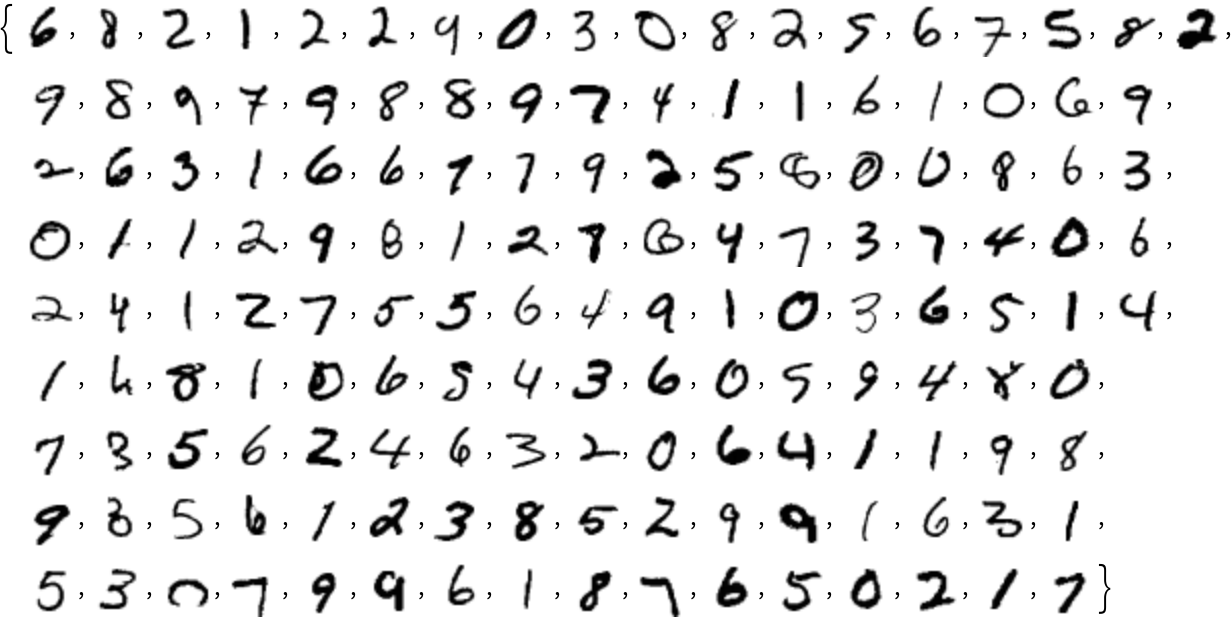

Apply the trained net to a set of inputs:

Get the class probabilities for a single input:
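A classifier's final layer typically produces one raw score per class. A softmax turns those scores into probabilities by exponentiating them and normalizing so they sum to 1. The Python sketch below shows that step for a single input and for a batch. The score vector is invented, and the assumption that the net ends in a softmax over ten digit classes is ours, not taken from this page.

```python
import math

def softmax(logits):
    """Convert raw output scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def class_probabilities(logits, classes):
    """Pair each class label with its softmax probability."""
    return dict(zip(classes, softmax(logits)))

def classify_batch(batch_logits, classes):
    """Return the highest-scoring class for each input in a batch."""
    return [classes[max(range(len(z)), key=z.__getitem__)] for z in batch_logits]

digits = list(range(10))
scores = [0.1, 0.2, 3.0, 0.0, -1.0, 0.5, 0.3, 0.0, 1.0, 0.2]  # hypothetical net output
probs = class_probabilities(scores, digits)
print(max(probs, key=probs.get))
```

The softmax preserves the ordering of the scores. The most probable class is therefore also the class with the largest raw score, which is why `classify_batch` can take the argmax of the scores directly.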

Feature extraction

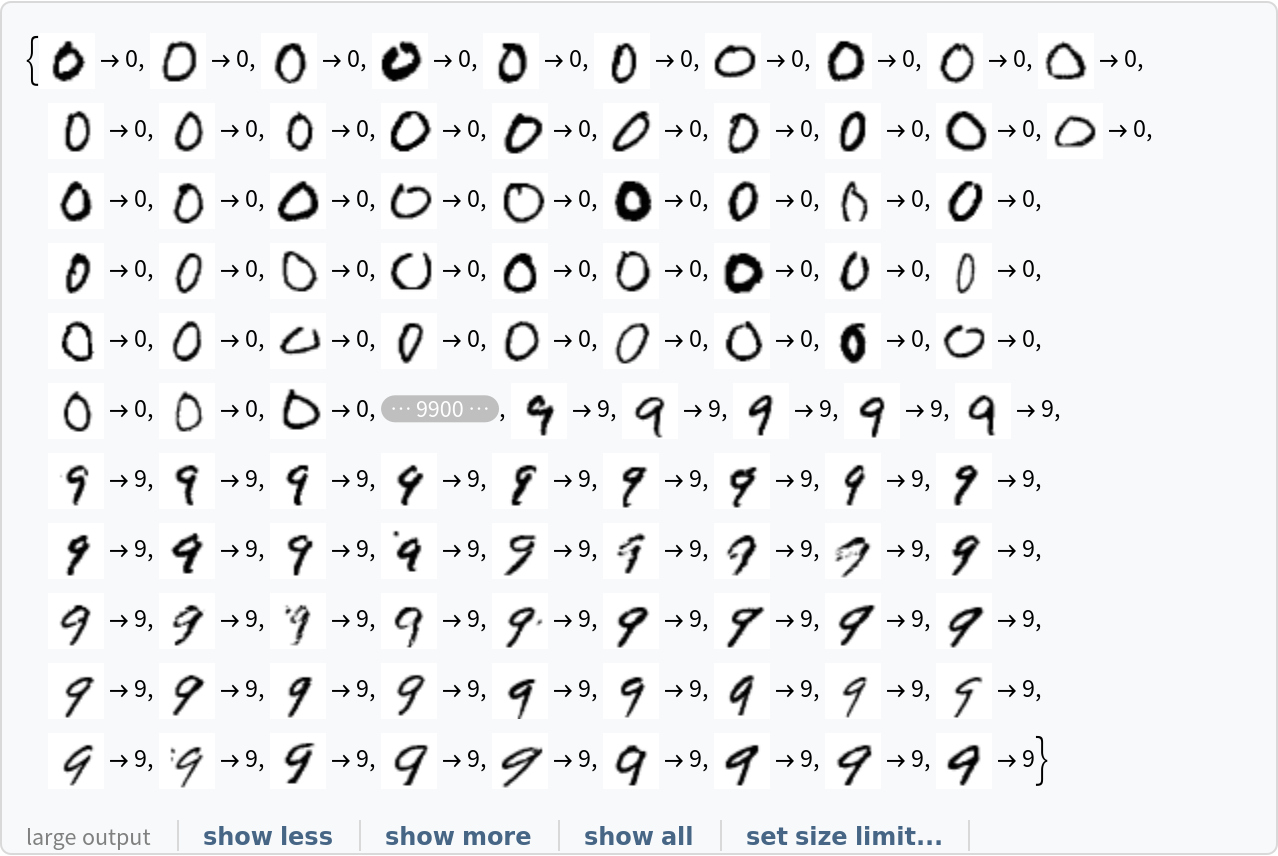

Create a subset of the MNIST dataset:
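A subset like this is usually drawn at random. The minimal sketch below uses hypothetical `(image_id, label)` pairs in place of real MNIST images. A fixed seed makes the same subset come back on every run.

```python
import random

def random_subset(examples, size, seed=0):
    """Draw `size` distinct examples without replacement, reproducibly for a given seed."""
    rng = random.Random(seed)
    return rng.sample(examples, size)

# Hypothetical stand-in for MNIST: (image_id, digit_label) pairs.
data = [(i, i % 10) for i in range(1000)]
subset = random_subset(data, 50, seed=42)
print(len(subset))
```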

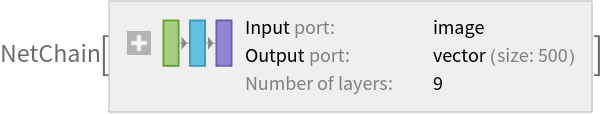

Remove the last linear layer of the net; the truncated net will be used as a feature extractor:
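Dropping the final layer turns a classifier into a feature extractor. Its output is then the activation vector that the removed layer would have mapped to class scores. The sketch below models a net as a Python list of layer functions, so removing the last layer is just a list slice. The layer sizes and weights are hypothetical.

```python
def relu(v):
    """Elementwise max(0, x)."""
    return [max(0.0, x) for x in v]

def linear(weights, bias):
    """Return a fully connected layer as a function computing W x + b."""
    def apply(v):
        return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]
    return apply

def run(layers, x):
    """Feed x through the layers in order."""
    for layer in layers:
        x = layer(x)
    return x

# Hypothetical 3 -> 2 -> 2 classifier: hidden linear layer, ReLU, final linear layer.
net = [
    linear([[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]], [0.0, 0.0]),
    relu,
    linear([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
]
feature_extractor = net[:-1]  # drop the last linear layer
features = run(feature_extractor, [2.0, 1.0, 0.0])
print(features)
```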

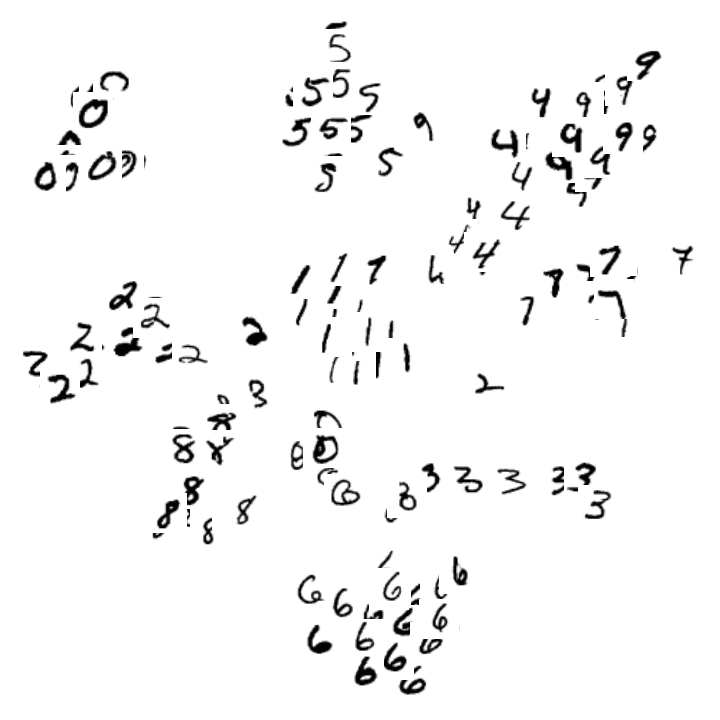

Visualize the features of a subset of the MNIST dataset:

Visualization of net operation

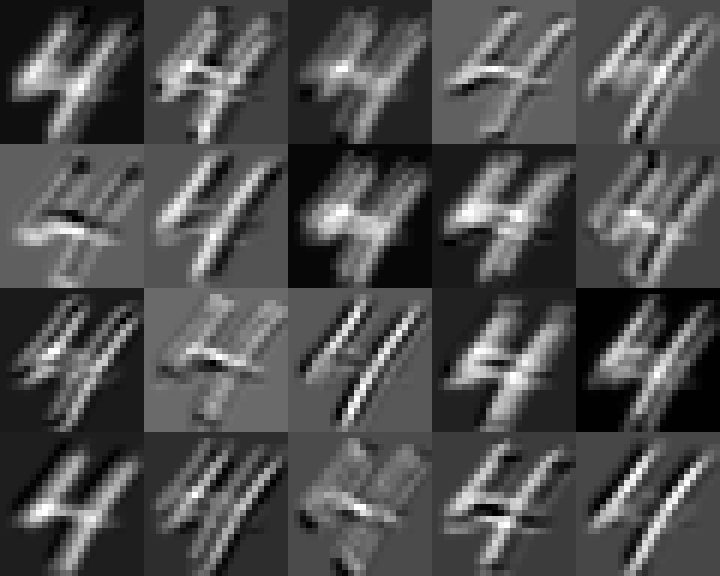

Extract the feature maps produced by the first convolutional layer:
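A convolutional layer slides each of its kernels across the image, and each kernel produces one feature map. A first layer with several kernels therefore yields a stack of maps. The minimal pure-Python sketch below handles a single kernel (one input channel, stride 1, no padding). The 4x4 image and the vertical-edge kernel are hand-picked for illustration, not learned weights.

```python
def conv2d_valid(image, kernel, bias=0.0):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in most deep-learning frameworks, this is a cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw)) + bias
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hypothetical 4x4 image containing a vertical edge, and a vertical-edge kernel.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [[-1, 1], [-1, 1]]
print(conv2d_valid(image, kernel))
```

The map peaks in the column where the edge sits and is zero elsewhere. A visualization of first-layer features makes this kind of pattern visible.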

Visualize the features:
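Feature-map values are unbounded, so they are commonly rescaled to [0, 1] before being shown as grayscale images. The sketch below rescales each map with min-max normalization. To stay dependency-free, it renders the result as ASCII art instead of an image. The character ramp is an arbitrary choice.

```python
def rescale(feature_map):
    """Min-max rescale a 2D feature map to [0, 1]."""
    flat = [v for row in feature_map for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant map
    return [[(v - lo) / span for v in row] for row in feature_map]

def to_ascii(feature_map, ramp=" .:-=+*#%@"):
    """Render a map with denser characters for larger activations."""
    scaled = rescale(feature_map)
    last = len(ramp) - 1
    return "\n".join("".join(ramp[round(v * last)] for v in row) for row in scaled)

fmap = [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 1.0, 0.0]]  # hypothetical feature map
print(to_ascii(fmap))
```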

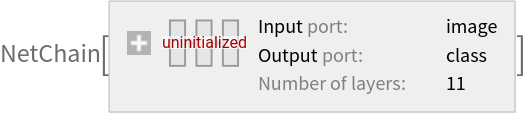

Training the uninitialized architecture

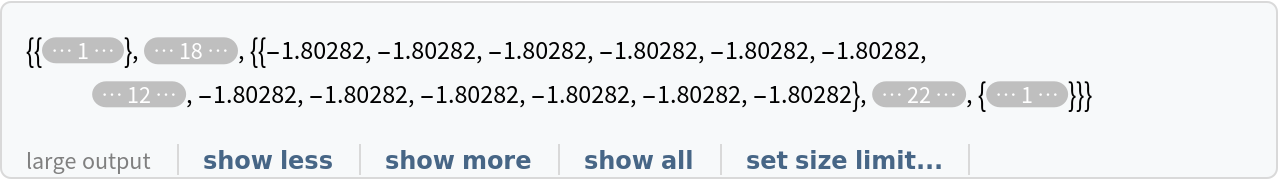

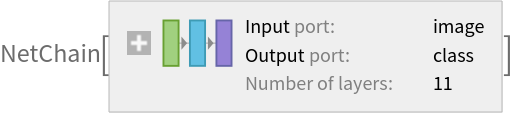

Retrieve the uninitialized architecture:

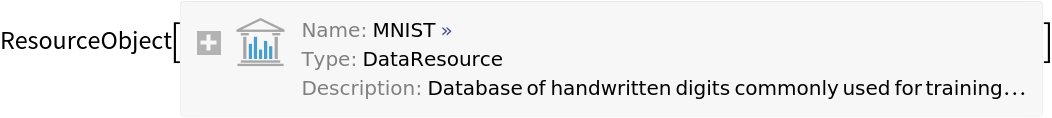

Retrieve the MNIST dataset:

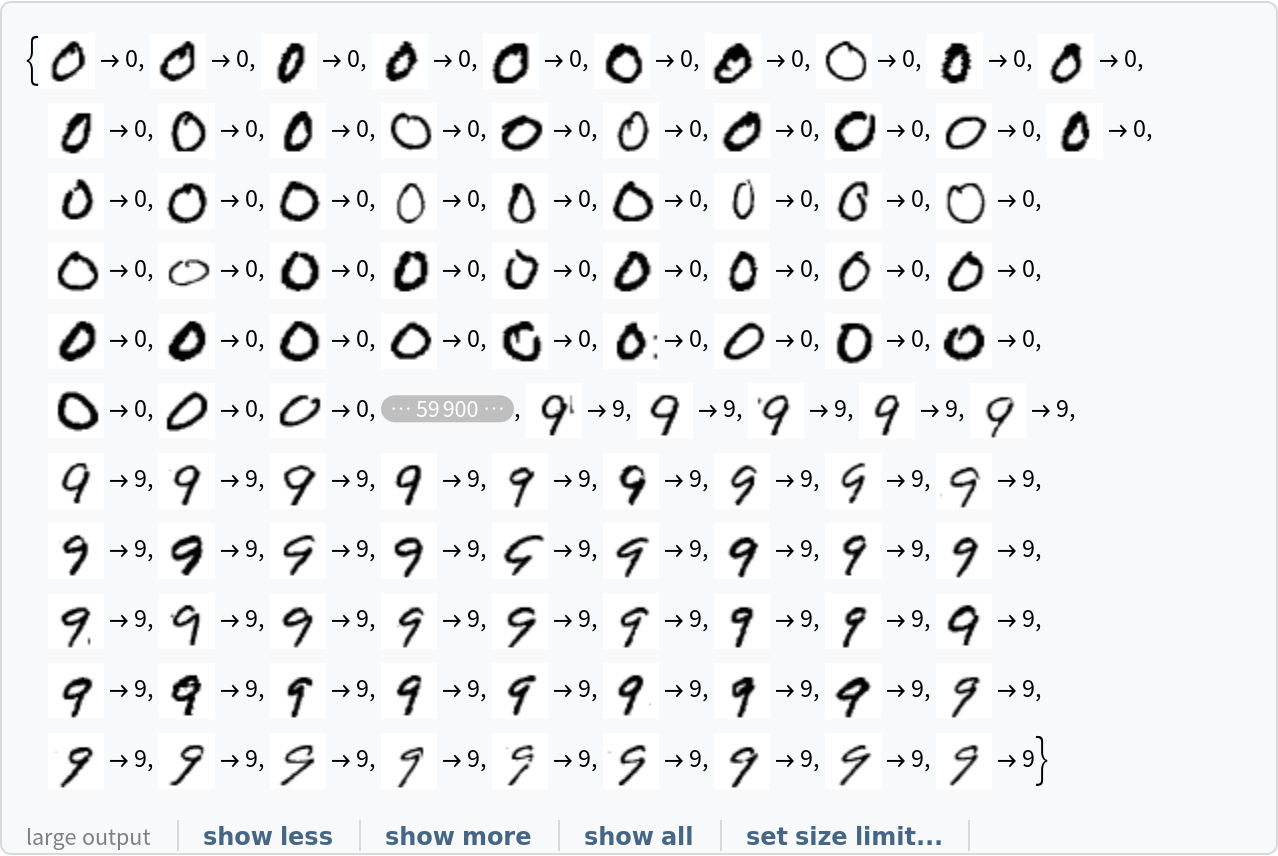

Use the training dataset provided:

Use the test dataset provided:

Train the net:
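Training adjusts the net's arrays to reduce a loss on the training data, where one round is one pass over that data. This text does not give the page's training settings, so the sketch below only illustrates the idea. A linear softmax classifier stands in for the convolutional net, and it is fitted with plain stochastic gradient descent on four hypothetical 2D points. The learning rate and number of rounds are arbitrary.

```python
import math
import random

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def predict(W, b, x):
    """Class scores of a linear classifier: W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]

def train(data, n_features, n_classes, rounds=50, lr=0.5, seed=0):
    """Minimize cross-entropy loss with plain stochastic gradient descent."""
    rng = random.Random(seed)
    W = [[0.0] * n_features for _ in range(n_classes)]
    b = [0.0] * n_classes
    data = list(data)  # copy, so shuffling does not reorder the caller's list
    for _ in range(rounds):  # one round = one pass over the training set
        rng.shuffle(data)
        for x, y in data:
            p = softmax(predict(W, b, x))
            for k in range(n_classes):
                # d(loss)/d(score_k) for cross-entropy after softmax is p_k - [k == y]
                g = p[k] - (1.0 if k == y else 0.0)
                b[k] -= lr * g
                for j in range(n_features):
                    W[k][j] -= lr * g * x[j]
    return W, b

# Hypothetical, linearly separable toy data: label = index of the larger coordinate.
train_set = [([1.0, 0.0], 0), ([0.9, 0.2], 0), ([0.0, 1.0], 1), ([0.1, 0.8], 1)]
W, b = train(train_set, n_features=2, n_classes=2)
preds = [max(range(2), key=lambda k: predict(W, b, x)[k]) for x, _ in train_set]
print(preds)
```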

Generate a ClassifierMeasurementsObject of the net with the test set:

Evaluate the accuracy on the test set:
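Accuracy is the fraction of test examples whose predicted class equals the true class. A minimal sketch with invented predictions and labels:

```python
def accuracy(predicted, actual):
    """Fraction of examples whose predicted class matches the true class."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)

predicted = [7, 2, 1, 0, 4, 1, 4, 9, 6, 9]  # hypothetical net outputs
actual = [7, 2, 1, 0, 4, 1, 4, 9, 5, 9]     # hypothetical true labels
print(accuracy(predicted, actual))
```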

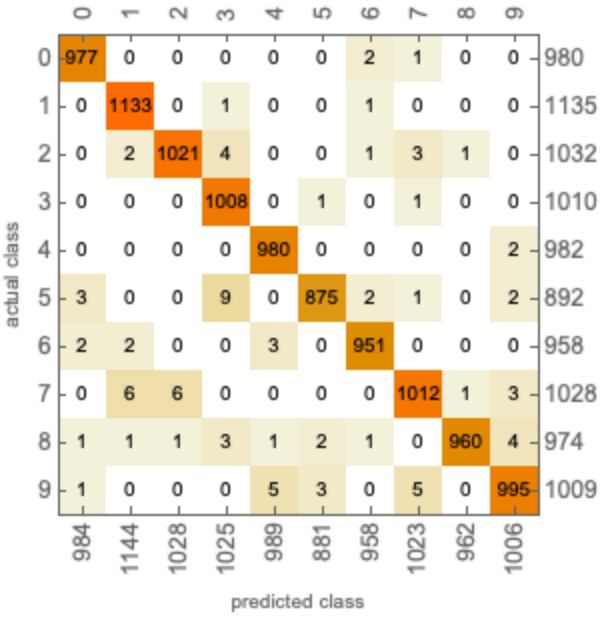

Visualize the confusion matrix:
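A confusion matrix counts, for every pair of classes, how many examples of the true class were predicted as the other class. Correct predictions lie on the diagonal. The sketch assumes rows index the true class and columns the predicted class; conventions vary between tools. The labels are invented.

```python
def confusion_matrix(predicted, actual, classes):
    """counts[i][j] = number of examples of true class classes[i] predicted as classes[j]."""
    index = {c: i for i, c in enumerate(classes)}
    counts = [[0] * len(classes) for _ in classes]
    for p, a in zip(predicted, actual):
        counts[index[a]][index[p]] += 1
    return counts

cm = confusion_matrix([0, 1, 1, 2, 2, 2], [0, 1, 2, 2, 2, 1], classes=[0, 1, 2])
for row in cm:
    print(row)
```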

Net information

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:
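The parameter counts of the usual learnable arrays follow directly from the layer shapes:

- A convolution with `c_in` input channels and `c_out` kernels of size `kh x kw` has `c_out*c_in*kh*kw` weights and `c_out` biases.
- A fully connected layer from `n_in` to `n_out` units has `n_out*n_in` weights and `n_out` biases.

The layer sizes below are hypothetical and chosen only to show the arithmetic. The total is the sum over all arrays.

```python
def conv_arrays(name, c_in, c_out, kh, kw):
    """Weight tensor of shape c_out x c_in x kh x kw, plus one bias per output channel."""
    return {(name, "Weights"): c_out * c_in * kh * kw, (name, "Biases"): c_out}

def linear_arrays(name, n_in, n_out):
    """Weight matrix of shape n_out x n_in, plus one bias per output unit."""
    return {(name, "Weights"): n_out * n_in, (name, "Biases"): n_out}

# Hypothetical layer sizes (not this page's net), used only to illustrate the arithmetic.
arrays = {}
arrays.update(conv_arrays("conv1", 1, 6, 5, 5))
arrays.update(conv_arrays("conv2", 6, 16, 5, 5))
arrays.update(linear_arrays("fc1", 256, 120))
arrays.update(linear_arrays("fc2", 120, 10))

for key, count in arrays.items():
    print(key, count)
total = sum(arrays.values())
print("total:", total)
```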

Obtain the layer type counts:
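Counting layer types amounts to tallying one type label per layer. A sketch with `collections.Counter` over a hypothetical layer list:

```python
from collections import Counter

# Hypothetical layer list, given as (layer name, layer type) pairs.
layers = [
    ("conv1", "Convolution"), ("relu1", "ReLU"), ("pool1", "Pooling"),
    ("conv2", "Convolution"), ("relu2", "ReLU"), ("pool2", "Pooling"),
    ("flatten", "Flatten"), ("fc1", "Linear"), ("relu3", "ReLU"),
    ("fc2", "Linear"), ("softmax", "Softmax"),
]
type_counts = Counter(kind for _, kind in layers)
print(type_counts.most_common())
```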

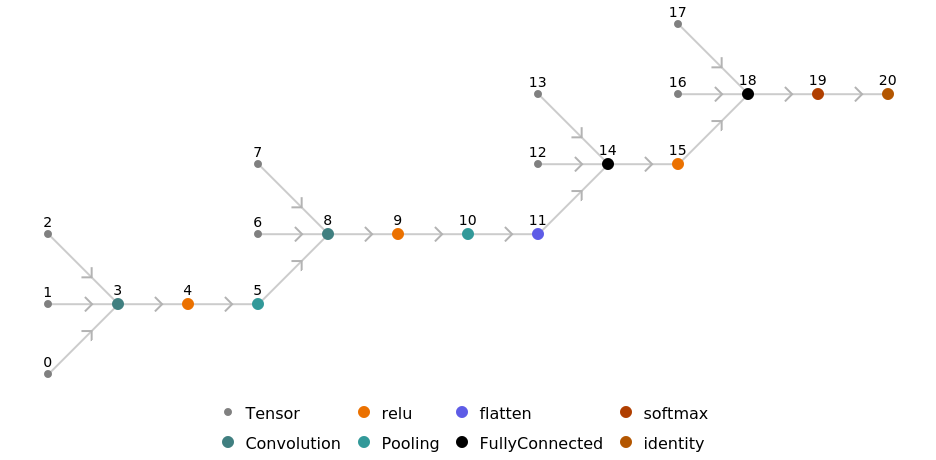

Display the summary graphic:

Export to MXNet

Export the net into a format that can be opened in MXNet:

Export also creates a net.params file containing the parameters:

Get the size of the parameter file:

The size is similar to the byte count of the resource object:
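The two numbers are close because both are dominated by the parameter arrays. Assuming each parameter is stored as a 32-bit float (4 bytes), a file holding n parameters takes roughly 4n bytes, plus overhead for names, shapes and headers. The sketch below writes raw floats with no header at all, so it shows only the 4-bytes-per-parameter arithmetic, not the actual .params format. The parameter count is hypothetical.

```python
import array
import os
import tempfile

def write_float32_params(values, path):
    """Write values as raw 32-bit floats (no header) and return the file size in bytes."""
    arr = array.array("f", values)  # "f" is a C float: 4 bytes on common platforms
    with open(path, "wb") as fh:
        arr.tofile(fh)
    return os.path.getsize(path)

n_params = 34622  # hypothetical parameter count
with tempfile.TemporaryDirectory() as tmp:
    size = write_float32_params([0.0] * n_params, os.path.join(tmp, "toy.params"))
print(size, 4 * n_params)
```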

Represent the MXNet net as a graph:
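MXNet stores the network structure as JSON, with the parameters kept in the separate .params file. Assume the usual symbol layout: a `nodes` list in which each node's `inputs` entries start with the index of the source node. Under that assumption, the graph edges can be recovered as below. The JSON string is a hypothetical, heavily trimmed example rather than the file exported on this page.

```python
import json

# Hypothetical, trimmed symbol file: each node has an "op", a "name" and "inputs",
# where each input is [source_node_index, output_index, ...].
SYMBOL_JSON = """
{"nodes": [
  {"op": "null", "name": "data", "inputs": []},
  {"op": "null", "name": "conv1_weight", "inputs": []},
  {"op": "null", "name": "conv1_bias", "inputs": []},
  {"op": "Convolution", "name": "conv1", "inputs": [[0, 0, 0], [1, 0, 0], [2, 0, 0]]},
  {"op": "Activation", "name": "relu1", "inputs": [[3, 0, 0]]}
 ],
 "arg_nodes": [0, 1, 2],
 "heads": [[4, 0, 0]]}
"""

def symbol_edges(symbol):
    """Return (source name, target name) pairs for every data dependency."""
    nodes = symbol["nodes"]
    return [(nodes[src[0]]["name"], node["name"]) for node in nodes for src in node["inputs"]]

edges = symbol_edges(json.loads(SYMBOL_JSON))
for src, dst in edges:
    print(f"{src} -> {dst}")
```

The resulting edge list can be passed to any graph library or written out as Graphviz DOT for drawing.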